Generative AI applications powered by large language models (LLMs) hold significant potential across industries. However, building, deploying, and monitoring applications on these models is a complex undertaking. This is where NVIDIA and New Relic join forces to provide a streamlined path for developing, deploying, and monitoring AI-powered enterprise applications in production.

NVIDIA NIM, part of NVIDIA AI Enterprise, is a set of cloud-native microservices that package models as optimized containers. These containers can be deployed in the cloud, in data centers, or on workstations, making it easier to build generative AI applications such as copilots and chatbots.

New Relic AI Monitoring seamlessly integrates with NVIDIA NIM, providing full-stack observability for applications built on a wide range of AI models supported by NIM, including Meta's Llama 3, Mistral Large, and Mixtral 8x22B, among others. This integration helps organizations confidently deploy and monitor AI applications built with NVIDIA NIM, accelerate time-to-market, and improve ROI.

What is NVIDIA NIM?

NVIDIA NIM is a set of inference microservices that provides pre-built, optimized LLMs and simplifies their deployment across NVIDIA accelerated infrastructure in the data center and cloud. This spares companies from spending valuable time and resources optimizing models for different infrastructure, building APIs for developers, and maintaining security and support for these models in production. Here's how NIM tackles the challenges of generative AI:

- Pre-built and optimized models: NIM offers a library of pre-trained LLMs, saving you the effort and expertise required for model development. These models are optimized specifically for NVIDIA GPUs to deliver efficient performance.

- Simplified deployment: NIM packages models as containerized microservices. Each container includes everything needed to run the model, so you can deploy quickly on platforms ranging from cloud environments to on-premises data centers.

- Enhanced security: For applications requiring robust security, the NIM self-hosted model deployment option provides complete control over your data.
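To make the deployment model concrete: once an LLM NIM container is running, applications typically call it over HTTP. The sketch below assumes the NIM exposes an OpenAI-compatible chat completions endpoint under `http://localhost:8000/v1`; that base URL and the model name `meta/llama3-8b-instruct` are assumptions for illustration, so check the documentation for the NIM you deploy. It uses only the Python standard library and builds the request separately from sending it, so the request shape can be inspected without a running NIM.

```python
import json
import urllib.request

# Assumed values for a locally running LLM NIM; replace with your deployment's.
NIM_BASE_URL = "http://localhost:8000/v1"
NIM_MODEL = "meta/llama3-8b-instruct"


def build_chat_request(prompt: str,
                       base_url: str = NIM_BASE_URL,
                       model: str = NIM_MODEL,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build, but do not send, an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(request: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request to a running NIM and return the first choice's text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

In a real application you would more likely point the `openai` Python client at the same base URL, since LLM client libraries like that one are what New Relic's agent instruments for AI monitoring, as we understand it; the standard-library version simply keeps this sketch dependency-free.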

Getting started with New Relic AI Monitoring for NVIDIA NIM

New Relic AI Monitoring brings observability to the entire AI stack for applications built with NVIDIA NIM. It lets you monitor, debug, and optimize your AI applications for performance, quality, and cost while maintaining data privacy and security. Here are step-by-step instructions for monitoring AI applications built with NVIDIA NIM:

Step 1: Instrument your AI application built with NVIDIA NIM

First, you'll need to set up instrumentation for your application. Here's how:

- Sign up for a free New Relic account or log in if you have one already.

- Click on Add Data.

- In the search bar, type NVIDIA and select NVIDIA NIM.

- Choose your application’s programming language (Python or Node.js).

- Follow the guided onboarding process, which walks you through instrumenting your AI application built with NVIDIA NIM. Our sample app is named “local-nim”.
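For a Python app, instrumentation generally comes down to installing the New Relic Python agent and enabling AI monitoring in its configuration. The sketch below renders a minimal `newrelic.ini` with the standard library's `configparser`. It assumes the agent's `ai_monitoring.enabled` setting, which to our understanding is off by default; the license key is a placeholder, and the guided onboarding remains the authoritative source for the exact settings your app needs.

```python
import configparser
import io


def render_newrelic_config(license_key: str, app_name: str = "local-nim") -> str:
    """Return the text of a minimal newrelic.ini with AI monitoring enabled."""
    config = configparser.ConfigParser()
    config["newrelic"] = {
        "license_key": license_key,
        "app_name": app_name,
        # Assumed to be opt-in in the Python agent, so turn it on explicitly.
        "ai_monitoring.enabled": "true",
    }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()
```

You could write the result with `Path("newrelic.ini").write_text(render_newrelic_config("<YOUR_LICENSE_KEY>"))` and then start the app under the agent, for example with `NEW_RELIC_CONFIG_FILE=newrelic.ini newrelic-admin run-program python app.py`, where `app.py` is a hypothetical entry point for the “local-nim” sample.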

Step 2: Access AI Monitoring

Once your application is instrumented, you can start using AI Monitoring:

- Navigate to All Capabilities in your New Relic dashboard.

- Click on AI Monitoring.

- In the AI Monitoring section, under All Entities, you'll see the “local-nim” sample app we built using NVIDIA NIM.

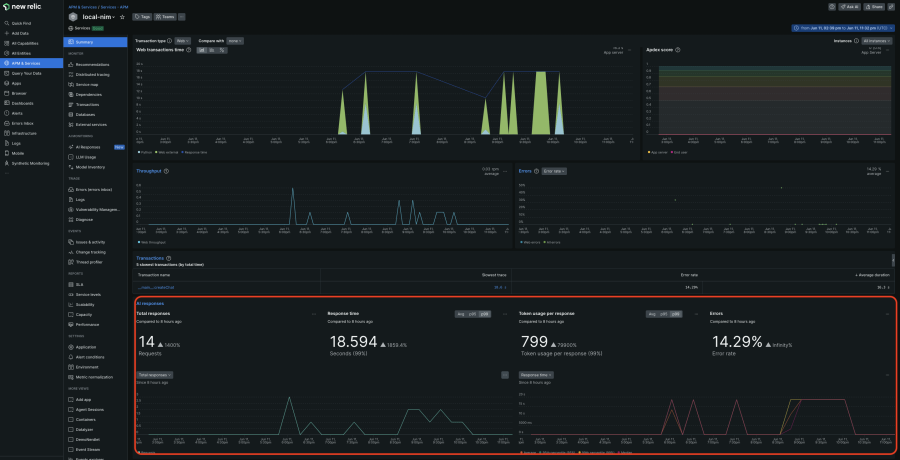

- Click on the local-nim app to access the APM 360 summary with the integrated AI monitoring view. This unified view gives you instant insights into the AI layer’s key metrics, such as the total number of requests, average response time, token usage, and response error rates. These results appear in context, alongside your APM golden signals, infrastructure insights, and logs. By correlating all this information in one place, you can quickly identify the source of issues and drill down deeper for further analysis.
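New Relic computes these metrics for you; the sketch below only illustrates how the headline numbers relate to individual LLM requests. The `LlmCall` record and its fields are hypothetical and do not mirror New Relic's event schema.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class LlmCall:
    """Hypothetical record of one LLM request (illustration only)."""
    duration_ms: float
    prompt_tokens: int
    completion_tokens: int
    error: bool


def summarize(calls: Iterable[LlmCall]) -> dict:
    """Aggregate request count, average latency, token usage, and error rate."""
    calls = list(calls)
    total = len(calls)
    if total == 0:
        return {"requests": 0, "avg_response_ms": 0.0,
                "total_tokens": 0, "error_rate": 0.0}
    return {
        "requests": total,
        "avg_response_ms": sum(c.duration_ms for c in calls) / total,
        "total_tokens": sum(c.prompt_tokens + c.completion_tokens for c in calls),
        "error_rate": sum(1 for c in calls if c.error) / total,
    }
```

For four calls lasting 1200, 800, 1000, and 1000 ms, one of which failed, `summarize` reports an average response time of 1000 ms and an error rate of 0.25.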

Step 3: Deep dive with AI response tracing

For a more detailed analysis, use the AI response tracing view:

- In the APM 360 summary, click on AI responses in the left navigation and select the response you want to drill into.

- Here, you can see the entire path from the initial user input to the final response, including metadata such as token count, model information, and the actual user interactions. This helps you quickly find the root cause of an issue.
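Conceptually, a response trace is a tree of spans linked by parent IDs, and following those links from the LLM call back to the incoming request reconstructs the path from user input to final response. The sketch below shows that idea with a hypothetical `Span` type; it is not New Relic's trace data model.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Span:
    """Hypothetical span in an AI response trace (illustration only)."""
    span_id: str
    parent_id: Optional[str]
    name: str
    token_count: int = 0
    model: Optional[str] = None


def path_from_root(spans: List[Span], leaf_id: str) -> List[str]:
    """Follow parent links from a span back to the root; return names root-first."""
    by_id = {span.span_id: span for span in spans}
    names = []
    seen = set()
    current = by_id.get(leaf_id)
    # The seen set guards against malformed traces that contain a cycle.
    while current is not None and current.span_id not in seen:
        seen.add(current.span_id)
        names.append(current.name)
        current = by_id.get(current.parent_id) if current.parent_id else None
    names.reverse()
    return names


def total_tokens(spans: List[Span]) -> int:
    """Sum token counts across every span in the trace."""
    return sum(span.token_count for span in spans)
```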

Step 4: Explore model inventory

Model Inventory provides a comprehensive view of model usage across all your services and accounts. This helps you isolate any model-related performance, errors, and cost issues.

- Go back to the AI Monitoring section.

- Click on Model Inventory to view performance, error, and cost metrics by model.
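Model Inventory does this aggregation for you; the sketch below illustrates the idea of grouping requests by model to compare latency, errors, token usage, and cost. `ModelCall` and the per-1,000-token prices are hypothetical. For self-hosted NIM deployments in particular, cost is driven by your infrastructure rather than a published per-token price, so any price you plug in is your own estimate.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class ModelCall:
    """Hypothetical per-request record tagged with the model that served it."""
    model: str
    duration_ms: float
    tokens: int
    error: bool


def model_inventory(calls: Iterable[ModelCall],
                    price_per_1k_tokens: Dict[str, float]) -> Dict[str, dict]:
    """Group calls by model and report latency, errors, tokens, and estimated cost."""
    grouped = defaultdict(list)
    for call in calls:
        grouped[call.model].append(call)

    report = {}
    for model, items in grouped.items():
        count = len(items)
        tokens = sum(c.tokens for c in items)
        report[model] = {
            "requests": count,
            "avg_response_ms": sum(c.duration_ms for c in items) / count,
            "errors": sum(1 for c in items if c.error),
            "tokens": tokens,
            # Models missing from the price table are costed at 0.0.
            "est_cost": tokens / 1000 * price_per_1k_tokens.get(model, 0.0),
        }
    return report
```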

Step 5: Compare models for optimal choice

To choose the model that best fits your cost and performance needs:

- Click on Compare Models.

- Select the models, service, and time ranges you want to compare from the drop-down lists.
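Once you have per-model figures for the service and time range you care about, the choice often comes down to a rule like “the cheapest model that meets our latency and error targets.” The sketch below encodes that rule; `ModelStats`, the thresholds, and any model names used with it are hypothetical, and your own criteria may weigh response quality or other factors that this does not capture.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class ModelStats:
    """Hypothetical summary of one model over a chosen service and time range."""
    name: str
    avg_latency_ms: float
    error_rate: float
    cost_per_1k_tokens: float


def choose_model(stats: Iterable[ModelStats],
                 max_latency_ms: float,
                 max_error_rate: float) -> Optional[str]:
    """Return the cheapest model that meets both limits, or None if none do."""
    candidates = [
        s for s in stats
        if s.avg_latency_ms <= max_latency_ms and s.error_rate <= max_error_rate
    ]
    if not candidates:
        return None
    # Cheapest first; break cost ties by lower latency.
    best = min(candidates, key=lambda s: (s.cost_per_1k_tokens, s.avg_latency_ms))
    return best.name
```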

Step 6: Enhance privacy and security

Complementing the security advantage of self-hosted NVIDIA NIM models, New Relic lets you exclude sensitive data, such as personally identifiable information (PII), in your AI requests and responses from monitoring:

- Click on Drop Filters and create filters that target specific data types within the six available AI monitoring events.
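Drop filters remove data on the New Relic side. A different, complementary technique is to mask obvious PII in your own code before it is sent anywhere. The sketch below uses two illustrative regular expressions for email addresses and US-style phone numbers; they are assumptions that will miss many kinds of PII. Note that masking a prompt before the model call also hides that data from the model, which may or may not be acceptable for your use case.

```python
import re

# Illustrative patterns only: they catch common email and US-style phone formats
# and will miss many other kinds of PII.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_PATTERN = re.compile(r"\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b")


def redact_pii(text: str) -> str:
    """Replace matched emails and phone numbers with placeholder tokens."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    return PHONE_PATTERN.sub("[PHONE]", text)
```

For example, `redact_pii("Contact jane.doe@example.com or 555-123-4567.")` returns `"Contact [EMAIL] or [PHONE]."`.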

Accelerating AI in Production with New Relic and NVIDIA

New Relic AI Monitoring and NVIDIA NIM offer a compelling solution for organizations looking to move AI into production faster. By combining pre-built models, streamlined deployment, and comprehensive monitoring, businesses can deliver high-performing generative AI applications sooner, which can lower costs and shorten the path to ROI. This collaboration is a significant step toward democratizing access to AI and its transformative potential.

Sign up for a free New Relic account and get started with monitoring your NVIDIA NIM applications today.

The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. Please join us exclusively at the Explorers Hub (discuss.newrelic.com) for questions and support related to this blog post. This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve or endorse the information, views or products available on such sites.