In the dynamic web ecosystem, systems are scaling and becoming increasingly distributed, elevating the importance of observability, automation, and robust testing frameworks. At the helm of building resilient systems is chaos engineering, a practice where failures are intentionally injected into the system to identify weaknesses before they cause real issues. New Relic runs weekly chaos experiments in our pre-production environment to unearth and address potential system failures, particularly in complex environments like relational databases.

Amazon Aurora, like many other databases, poses unique challenges given its distributed nature, its architecture, and the failover method it uses to provide high availability. This post covers how to use observability and chaos engineering to ensure your services can handle database degradations.

Chaos testing for databases

Chaos testing provides invaluable insights into system resilience and prepares teams for real-world scenarios. Here are key benefits of chaos testing at the database layer:

- Validate failover and application robustness: Failover experiments expose weaknesses in application error handling and driver configuration, offering an opportunity to reinforce system defenses.

- Mitigate potential outages and protect data: Regular chaos experiments reduce surprises, minimize outages, and help safeguard against data loss by improving incident response times.

- Ensure effective observability and alerting: Chaos scenarios test our monitoring infrastructure and alerting mechanisms, allowing teams to refine their incident response strategies.

- Deepen system understanding and improve documentation: Teams enhance their knowledge and create thorough documentation, which aids future troubleshooting and incident management.

- Optimize capacity and performance: Chaos testing exposes stress points and capacity limits, helping teams plan and scale their infrastructure more accurately.

Getting started

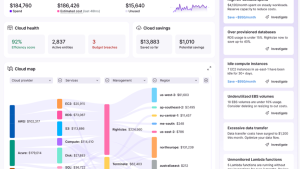

To successfully implement chaos testing, it's essential to set up observability and monitoring. Below, we detail the key components:

- New Relic infrastructure agent: A vital component that monitors your system's performance. With dedicated integrations for MySQL and PostgreSQL, it provides insights specific to those databases.

- New Relic Amazon CloudWatch Metric Streams integration using Kinesis Data Firehose: Use AWS CloudWatch metric streams to send a continuous flow of CloudWatch metrics into New Relic, including near real-time monitoring data for Aurora databases.

- New Relic log streaming using Kinesis Data Firehose: Configure it to stream Aurora MySQL and PostgreSQL logs directly into New Relic for comprehensive log analysis and observability.

- New Relic Go agent for APM: The New Relic Go agent monitors your Go applications and microservices to help you identify and solve performance issues.

By integrating these components into your cloud architecture, you can lay the foundation for detailed observability that is critical for conducting and learning from chaos experiments.

Database observability preparation

It's important not just to see system changes but to observe them: to understand their context and implications over time through automated monitoring that turns raw data into actionable insights.

Client side

It's vital to monitor the application's behavior as it interacts with the database. Use New Relic APM for real-time application performance monitoring to observe:

- Error rate: Monitor the frequency of errors in the application during the chaos experiment.

- Throughput: Keep track of the number of transactions your application handles.

- Response time: Measure how long it takes for your application to respond to requests during different test phases.

- Alerting: Configure alerts for anomalies in error rates and throughput, enabling rapid response to issues the chaos test might reveal.

Server side

On the flip side, it's equally necessary to monitor what's happening inside the database service itself. Here's what to keep an eye on:

- CloudWatch Aurora metrics: Use Amazon CloudWatch to track key performance indicators for Aurora, including CPU utilization, input/output operations per second (IOPS), and more.

- Database logs: Collect Aurora database logs and examine them for unusual patterns or server-side issues surfaced by the test.

- Throughput analysis: Compare throughput before, during, and after the chaos test to understand its impact on performance.

By pairing application-side data from New Relic APM with CloudWatch metrics, we achieve a multi-faceted view, crucial for conducting and deriving maximum value from chaos experiments.

Remember: Observability isn't a mere checklist—it's strategically deciding what to measure, setting up proper alerting, and understanding how data across the stack gives us the insights needed to hypothesize, validate, and iterate.

Executing an Aurora failover

Failover is a critical procedure for maintaining data availability during unexpected disruptions. The Amazon Aurora failover process is designed to minimize downtime by automatically promoting one of the cluster's reader instances to primary and redirecting database operations to it. Understanding this operation can guide you in preparing your systems for resilience.

Here's a succinct breakdown of what occurs during an Aurora failover:

- Instance selection: Aurora chooses a suitable reader instance to promote to primary. The selection factors in the instance's specifications, its availability zone, and predefined failover priorities. AWS describes this process in detail in its documentation on high availability for Amazon Aurora.

- Instance promotion: The selected reader instance is restarted in read/write mode to serve as the new primary instance.

- Primary instance demotion: Concurrently, the old primary instance is restarted in read-only mode, preparing it to join the pool of reader instances.

- DNS endpoint updates: To reflect the new roles, Aurora updates its DNS records. The writer endpoint points to the new primary instance, while the reader endpoint adds the old primary to its list of readers. These DNS records have a time-to-live (TTL) of 5 seconds, but client-side caching can delay when clients see the change.

During the failover transition, brief connection interruptions are expected. Clients should be prepared for a few seconds of disruption as Aurora shifts traffic to the new writer and updates the reader endpoint. According to AWS's failover documentation, these interruptions typically last around 5 seconds.

Facilitating a smooth failover demands that our applications and drivers are attuned to handle these transitions gracefully. In the subsequent sections, we discuss driver configurations and optimal settings for your client applications to manage these transitions with minimum impact.

Identifying and troubleshooting driver issues

Monitoring for driver and system errors is an integral part of understanding how your applications weather a database failover. Watch client-side errors in APM, and server-side errors in the database logs, for signs that the application is trying to write to an instance in the reader (replica) role.

APM and log events

Here are some examples of the errors you might encounter when a MySQL or PostgreSQL database switches to a read-only state during failover, indicating the application attempted a write operation that isn't allowed:

PostgreSQL:

    nested exception is org.postgresql.util.PSQLException:
    ERROR: cannot execute nextval() in a read-only transaction

MySQL:

    Error 1290 (HY000): The MySQL server is running with the --read-only option so it cannot execute this statement

MySQL applications written in Go might log errors that emphasize the read-only nature of the database following a failover:

    Error 1290 (HY000): The MySQL server is running with the --read-only option so it cannot execute this statement
    main.(*Env).execQueryHandler (/src/handlers.go:204)
    http.HandlerFunc.ServeHTTP (/usr/local/go/src/net/http/server.go:2136)
    …in.configureServer.WrapHandleFunc.WrapHandle.func19 (/src/vendor/github.com/newrelic/go-agent/v3/newrelic/instrumentation.go:98)
    http.HandlerFunc.ServeHTTP (/usr/local/go/src/net/http/server.go:2136)
    main.configureServer.WrapHandleFunc.func10 (/src/vendor/github.com/newrelic/go-agent/v3/newrelic/instrumentation.go:184)
    http.HandlerFunc.ServeHTTP (/usr/local/go/src/net/http/server.go:2136)
    mux.(*Router).ServeHTTP (/src/vendor/github.com/gorilla/mux/mux.go:212)
    http.(*ServeMux).ServeHTTP (/usr/local/go/src/net/http/server.go:2514)
    http.serverHandler.ServeHTTP (/usr/local/go/src/net/http/server.go:2938)
    http.(*conn).serve (/usr/local/go/src/net/http/server.go:2009)
    runtime.goexit (/usr/local/go/src/runtime/asm_amd64.s:1650)

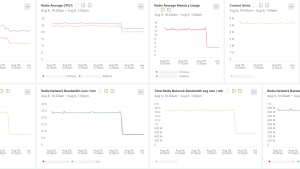

With a bad driver configuration, post failover the error rate might continue at a high rate indefinitely, which can be seen here with New Relic APM:

Identifying these error messages quickly is crucial for a swift incident response. Whether you're employing New Relic APM to analyze application performance or you're diving into more granular log data, staying vigilant for these error patterns during and after a failover can greatly assist in your troubleshooting process.

Driver configuration and best practices

Database connection drivers need to be properly tuned to handle failovers for planned maintenance operations and also for unplanned scenarios.

Driver configuration

The goal is for your application's connections to follow the writer endpoint to the new primary soon after a failover, rather than staying pinned to the demoted instance.

Here's what you should keep in mind to configure your database and cache drivers:

- DNS TTL settings: Ensure your operating system, any DNS caching layer, and the database driver honor the 5-second TTL that Aurora sets on its DNS records.

- Use smart database drivers: Opt for drivers that manage DNS caching effectively and monitor instance states, reconnecting automatically if the primary changes.

- Specify driver options: For AWS databases, consider AWS's JDBC Driver for MySQL and JDBC Driver for PostgreSQL. If smart drivers aren't available, manage connection lifetime settings so connections are refreshed in line with the DNS TTL.

- Configure connection max lifetime: If a smart database driver isn't an option, configure your connection pool with a maximum lifetime for each connection. Once a connection has been open longer than this threshold, the pool closes it and opens a new one. A setting like this helps ensure that connections pick up DNS changes after a failover.

Example Go service driver configuration:

    import (
        "database/sql"
        "time"
    )

    // DB driver configuration settings. Adjust db driver configuration and
    // connection pool behavior ref: https://go.dev/doc/database/manage-connections
    //
    // configurePool is an illustrative wrapper; call it on the *sql.DB returned by sql.Open.
    func configurePool(db *sql.DB) {
        // Limit the total number of connections this app instance
        // can open to the database server.
        db.SetMaxOpenConns(10)

        // Limit the number of idle connections maintained.
        db.SetMaxIdleConns(3)

        // Reopen connections periodically so RW failovers are followed within about 1m.
        db.SetConnMaxLifetime(60 * time.Second)

        // Close idle connections after 30 seconds of inactivity.
        db.SetConnMaxIdleTime(30 * time.Second)
    }

Observability, metrics, and alerts

In the chaotic environment of failover experiments, effective observability is your eyes and ears. Structured monitoring and alerting ensure you remain informed and ready to act.

- New Relic APM: Helps you understand the client-side viewpoint. What does the application see of its own activity against the database server? What were the response time, throughput, and error rate?

- Infrastructure agent with integrations for databases: Monitors your system's performance. With dedicated integrations for MySQL and PostgreSQL, it provides insights specific to those databases.

- New Relic Amazon CloudWatch Metric Streams integration using Kinesis Data Firehose: Use metric streams to send a continuous flow of CloudWatch metrics into New Relic, including near real-time monitoring data for Aurora databases.

- Alerting: Set up the alerts appropriate to your situation. Strong candidates are often error rate, throughput, and response time. For failover scenarios, error rate is particularly important, but monitoring write throughput and response time adds value in many situations.

This error rate represents the positive outcome: the errors are contained within a minute.
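An alert condition should distinguish a brief, contained spike from errors that persist after a failover. The Go sketch below evaluates a hypothetical series of per-minute error rates against a threshold that must be exceeded for N consecutive minutes. It only illustrates the idea and is not how New Relic evaluates alert conditions. The threshold and window are placeholders to tune for your service.

```go
package main

import "fmt"

// breachesFor reports whether the per-minute error rates exceed threshold for
// at least n consecutive minutes, and the index of the minute where that run
// reaches n (when an alert would open). It returns -1, false otherwise.
func breachesFor(errorRates []float64, threshold float64, n int) (int, bool) {
	run := 0
	for i, r := range errorRates {
		if r > threshold {
			run++
			if run >= n {
				return i, true
			}
		} else {
			run = 0 // a healthy minute resets the streak
		}
	}
	return -1, false
}

func main() {
	// Hypothetical per-minute error rates (fraction of requests failing).
	series := []struct {
		name  string
		rates []float64
	}{
		{"contained", []float64{0, 0, 0.12, 0.01, 0, 0}},
		{"persistent", []float64{0, 0, 0.35, 0.40, 0.38, 0.41}},
	}
	for _, s := range series {
		minute, alert := breachesFor(s.rates, 0.05, 2) // >5% for 2+ minutes
		fmt.Printf("%s: alert=%v at minute %d\n", s.name, alert, minute)
	}
}
```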

Future chaos experiments

As you continue to refine your resilience strategies, the scope and complexity of your chaos experiments can expand to new services, different scale and load patterns, and more sophisticated failure simulations.

Next steps

For further reading on Aurora's reliability best practices, AWS driver usage, or to deep-dive into the nuances of chaos engineering, here are some resources:

- Chaos engineering in the age of observability

- Achieving observability readiness using the New Relic observability platform

- Improve application availability on Amazon Aurora

- Best Practices - DNS caching: Amazon Aurora MySQL Database Administrator’s Handbook

- Amazon JDBC wrapper

- Why is my connection redirected to the reader instance when I try to connect to my Amazon Aurora writer endpoint?

The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. Please join us exclusively at the Explorers Hub (discuss.newrelic.com) for questions and support related to this blog post. This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve or endorse the information, views or products available on such sites.