As you’d expect, at New Relic, we’re experts at applying observability to the operational aspects of our systems. The Unified Data Streams (UDS) team takes great pride in our instrumentation and alerting practices. We have a keen focus on maintaining a reliable, highly available data platform. But beyond being highly available, our reliability practices must also ensure our platform provides accurate results at all times. Incorrect results, obviously, will only erode trust in the services we provide. If it’s incorrect, we might as well not produce any data at all.

Building reliability into our data platform is a big topic, especially when we’re talking about data streams. In this post, though, I’ll explain a critical step we took toward improving the reliability of our testing practices.

Finding room for improvement in testing

In a nutshell, the UDS team is responsible for filtering and aggregating all incoming New Relic customer data so it can be sent, at a volume they can handle, to the teams that manage the various products in our platform. When it comes to testing, our white box strategy (in which the tester is fully aware of the design and implementation of the code) requires a high percentage of unit test coverage for all code written by the team. We write our tests with JUnit and measure coverage with JaCoCo, and both run on every build. For a build to pass, the coverage threshold is typically set at 99%.
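
As a rough illustration of how a 99% gate behaves, here is a minimal Java sketch that sums the `LINE_MISSED` and `LINE_COVERED` columns of a JaCoCo CSV report and fails when the covered ratio drops below the threshold. It assumes line coverage is the counter being gated (the team may well gate on a different counter), and the `CoverageGate` class is hypothetical. In a real build, JaCoCo's own `check` goal for Maven, or the `jacocoTestCoverageVerification` task in Gradle, enforces this kind of rule without custom code; the sketch only shows the arithmetic.

```java
import java.util.List;

/**
 * Hypothetical coverage gate over a JaCoCo CSV report. It sums the line
 * counters only; which counter a team actually gates on is an assumption.
 */
final class CoverageGate {
    private CoverageGate() {}

    /** Returns covered / (covered + missed) lines across every row of the report. */
    static double lineCoverage(List<String> csvLines) {
        String[] header = csvLines.get(0).split(",");
        int missedCol = indexOf(header, "LINE_MISSED");
        int coveredCol = indexOf(header, "LINE_COVERED");
        long missed = 0;
        long covered = 0;
        // Skip the header row, then add up the line counters of each class row.
        for (String row : csvLines.subList(1, csvLines.size())) {
            if (row.isBlank()) {
                continue;
            }
            String[] cells = row.split(",");
            missed += Long.parseLong(cells[missedCol].trim());
            covered += Long.parseLong(cells[coveredCol].trim());
        }
        long total = missed + covered;
        // Treat a report with no executable lines as fully covered.
        return total == 0 ? 1.0 : (double) covered / total;
    }

    /** True when line coverage meets or exceeds the minimum ratio, e.g. 0.99. */
    static boolean passes(List<String> csvLines, double minimum) {
        return lineCoverage(csvLines) >= minimum;
    }

    /** Finds a column by name in the header row, failing loudly if it is absent. */
    private static int indexOf(String[] header, String column) {
        for (int i = 0; i < header.length; i++) {
            if (header[i].trim().equals(column)) {
                return i;
            }
        }
        throw new IllegalArgumentException("Missing column: " + column);
    }
}
```

With 990 of 1,000 lines covered, the ratio is exactly 0.99 and the gate passes; a single additional uncovered line (989 of 1,000) fails it.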

Our black box test strategy (in which the tester has no knowledge of the design and implementation of the code) hasn’t been as strong as our white box testing. As we began to grow more rapidly and scale our services, it became clear that we needed to increase confidence in our reliability practices by catching problems in our platform before our customers could.

To close this gap, we hired a software development engineer in test (SDET) to join the UDS team. SDETs have a rare blend of strong engineering skills and a passion for finding bugs in software. They not only design, write, and execute tests but can also take part in designing and building the software they’ll ultimately test. Our SDET’s primary responsibility, though, would be to build a functional test suite that tests the correctness of our systems from end to end.

A mission for our embedded SDET

In embedding an SDET on the team, my goal was simple: a dedicated test engineer who is less familiar with the internal workings of our service would bring a new perspective to testing on the UDS team, one closer to how our customers think things work. For example, a customer looks at an API definition and makes certain assumptions about what it does based on its name, its parameters, and their personal experience with other APIs. Our developers, on the other hand, make assumptions about how well our customers will understand that API. An SDET’s job is to identify and eliminate the developers’ assumptions through testing.

So, the UDS SDET’s mission would be two-fold:

- Set up an integration test environment in the public cloud to run tests on our streaming services platform. By using the public cloud, we wouldn’t be constrained by capacity in our data centers or by needing support from other New Relic teams.

- Build a functional test framework that includes an extensive suite of tests to validate the integrity of our customers' data. The SDET would create test data that replicates different accounts, so the tests can catch unexpected results and notify us when they occur. Functional tests are designed to test specific parts of a service to ensure they’re functioning as intended. For the UDS team, our functional tests would need to cover parts of our code that:

    - Perform create, read, update, and delete (CRUD) operations on internal APIs

    - Appropriately throttle accounts that hit data limits

    - Handle late- and early-arriving event data per agreed-upon business rules

    - Ensure API endpoints that integrate with GraphQL are functional
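
To make the black-box idea concrete, here is a minimal Java sketch of functional checks for two of the behaviors above: throttling an account that hits its limit, and rejecting events that arrive too late or too early. Everything in it is hypothetical: the `IngestService` interface, the `Outcome` values, the per-account limit, and the tolerance window are illustrative stand-ins, not the UDS API or its actual business rules. `FakeIngestService` exists only so the sketch runs on its own; the checks in `IngestFunctionalTests` talk to the `IngestService` interface alone, the way a black-box tester would.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.HashMap;
import java.util.Map;

/** Hypothetical outcome reported by the ingest API for each submitted event. */
enum Outcome { ACCEPTED, THROTTLED, REJECTED_OUT_OF_WINDOW }

/** The only surface a black-box functional test is allowed to touch. */
interface IngestService {
    Outcome submit(String accountId, Instant eventTime);
}

/**
 * In-memory stand-in for the real pipeline. Both rules are hypothetical:
 * a fixed per-account event limit, and an acceptance window reaching
 * lateTolerance into the past and earlyTolerance into the future.
 */
final class FakeIngestService implements IngestService {
    private final int perAccountLimit;
    private final Duration lateTolerance;
    private final Duration earlyTolerance;
    private final Instant now;
    private final Map<String, Integer> counts = new HashMap<>();

    FakeIngestService(int perAccountLimit, Duration lateTolerance, Duration earlyTolerance, Instant now) {
        this.perAccountLimit = perAccountLimit;
        this.lateTolerance = lateTolerance;
        this.earlyTolerance = earlyTolerance;
        this.now = now;
    }

    @Override
    public Outcome submit(String accountId, Instant eventTime) {
        // Out-of-window events are rejected and do not count toward the limit.
        if (eventTime.isBefore(now.minus(lateTolerance)) || eventTime.isAfter(now.plus(earlyTolerance))) {
            return Outcome.REJECTED_OUT_OF_WINDOW;
        }
        int seen = counts.merge(accountId, 1, Integer::sum);
        return seen > perAccountLimit ? Outcome.THROTTLED : Outcome.ACCEPTED;
    }
}

/** Functional checks written only against IngestService, never the fake's internals. */
final class IngestFunctionalTests {
    private IngestFunctionalTests() {}

    /** An account that exceeds its limit is throttled; other accounts are unaffected. */
    static void throttlesOnlyTheNoisyAccount(IngestService svc, int limit, Instant now) {
        for (int i = 0; i < limit; i++) {
            check(svc.submit("noisy", now) == Outcome.ACCEPTED, "events within the limit should be accepted");
        }
        check(svc.submit("noisy", now) == Outcome.THROTTLED, "the event over the limit should be throttled");
        check(svc.submit("quiet", now) == Outcome.ACCEPTED, "other accounts must not be throttled");
    }

    /** Events just inside the window are accepted; events just outside are rejected. */
    static void enforcesArrivalWindow(IngestService svc, Duration late, Duration early, Instant now) {
        check(svc.submit("acct-late", now.minus(late)) == Outcome.ACCEPTED, "oldest allowed event should be accepted");
        check(svc.submit("acct-late", now.minus(late).minusSeconds(1)) == Outcome.REJECTED_OUT_OF_WINDOW,
                "a too-late event should be rejected");
        check(svc.submit("acct-early", now.plus(early).plusSeconds(1)) == Outcome.REJECTED_OUT_OF_WINDOW,
                "a too-early event should be rejected");
    }

    private static void check(boolean condition, String message) {
        if (!condition) {
            throw new AssertionError(message);
        }
    }
}
```

Because the checks accept any `IngestService`, running them against a deployed test environment would, under these assumptions, only require passing in a client for that environment instead of the fake.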

Seeing the benefits of the SDET

Having worked with the SDET and the functional test framework for some time now, the UDS team has enjoyed a number of benefits. Specifically, we’ve:

- Reduced the scope and time it takes to run game days at the end of our feature development sprints, resulting in faster delivery of features to customers without compromising quality

- Improved our confidence in the software we ship thanks to increased coverage where it was formerly incomplete or missing

- Reduced the time to troubleshoot issues raised by customer support by using quick functional tests to reproduce the issue

- Resolved previously undetected problems, such as identifying and updating out-of-date software in our service that could have impacted customers

- Improved our deployment process, bringing it much closer to true CI/CD

- Isolated complexity by allowing the team to get the right data in the right place for feature testing when time was of the essence

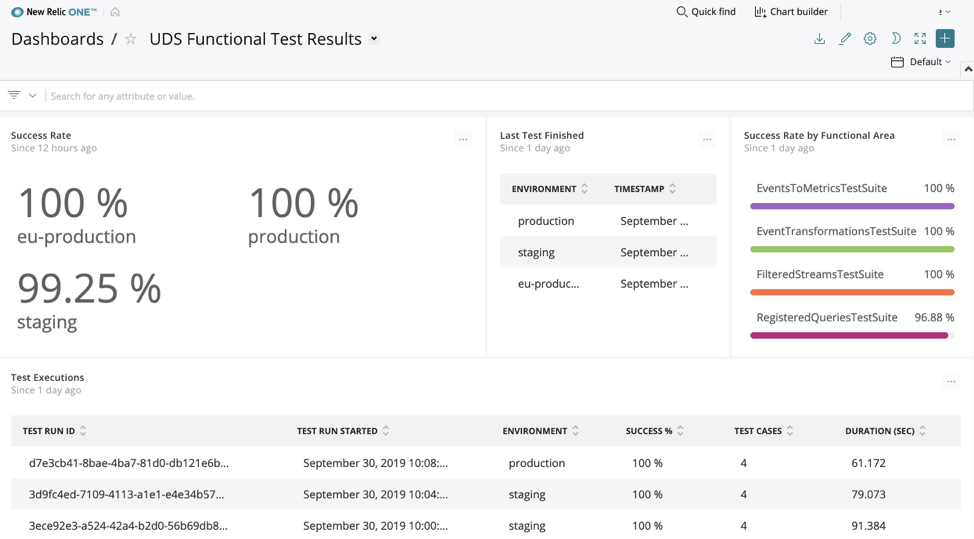

And in the spirit of drinking our champagne, our SDET built a New Relic One dashboard, so the team can track the full set of functional tests and their most current results:

The end result of the SDET’s work is a sophisticated functional test framework, called “Sea Dragon,” that now plays a pivotal role in the team's build and deploy process.

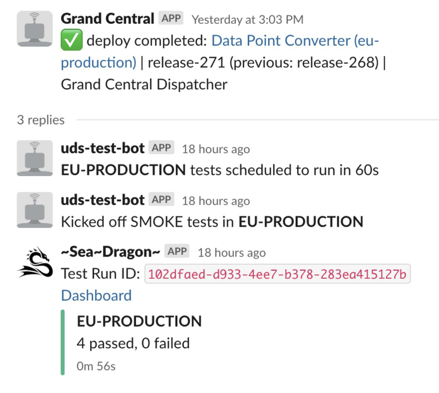

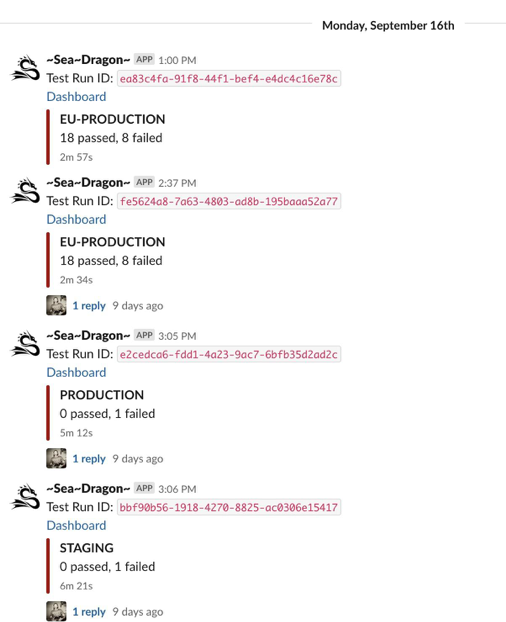

When a UDS team members starts a deployment in our deploy pipeline (called Grand Central), a test bot automatically kicks off the appropriate unit tests. If the deployment is successful, the bot then kicks off a series of smoke test:

Finally, an accompanying bot provides a handy Slack integration to keep the team up to date on deployments:
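
The deploy flow above can be sketched as a short sequence of gated stages: unit tests, then the deployment, then smoke tests only if the deployment succeeded, with every stage change posted to a chat channel. This is a minimal Java sketch under stated assumptions, not Grand Central or the team's actual bot: `DeployBot` is hypothetical, each stage is modeled as a `BooleanSupplier`, the notifier is a plain `Consumer<String>` standing in for a Slack integration, and the rule that failing unit tests block the deployment is our assumption.

```java
import java.util.function.BooleanSupplier;
import java.util.function.Consumer;

/** Hypothetical deploy bot that runs gated stages and reports each one. */
final class DeployBot {
    private final BooleanSupplier unitTests;
    private final BooleanSupplier deploy;
    private final BooleanSupplier smokeTests;
    private final Consumer<String> notifier; // stand-in for a chat integration

    DeployBot(BooleanSupplier unitTests, BooleanSupplier deploy,
              BooleanSupplier smokeTests, Consumer<String> notifier) {
        this.unitTests = unitTests;
        this.deploy = deploy;
        this.smokeTests = smokeTests;
        this.notifier = notifier;
    }

    /** Runs the stages in order, stopping at the first failure; true only if all pass. */
    boolean run(String service) {
        if (!stage(service, "unit tests", unitTests)) {
            return false;
        }
        if (!stage(service, "deploy", deploy)) {
            return false; // smoke tests run only after a successful deployment
        }
        return stage(service, "smoke tests", smokeTests);
    }

    /** Posts a start message, runs one stage, then posts its result. */
    private boolean stage(String service, String name, BooleanSupplier step) {
        notifier.accept(service + ": " + name + " started");
        boolean ok = step.getAsBoolean();
        notifier.accept(service + ": " + name + (ok ? " passed" : " FAILED"));
        return ok;
    }
}
```

Stopping at the first failed stage keeps smoke tests from running against a deployment that never completed, and posting both the start and the result of each stage gives the channel a running log of where a deploy stands.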

As a manager at New Relic, I’m responsible for making sure my team delivers new features for our platform while maintaining operational excellence, even as the platform (and the company) continues to grow at a rapid pace. Hiring an SDET and building a functional test suite has greatly improved our team’s velocity and build process, reduced our bug count, and increased our confidence that our software is reliable and delivers the value we promise our users. Running a functional test framework will remain a central part of our reliability work going forward.

The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. Please join us exclusively at the Explorers Hub (discuss.newrelic.com) for questions and support related to this blog post. This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve or endorse the information, views or products available on such sites.