Running observability at scale means handling massive streams of telemetry data, including millions of spans, traces, and metrics every second. This data powers New Relic’s ability to provide real-time insights into application and infrastructure performance for our customers. But ingesting and processing data in real time at that scale comes with a price tag, especially when it involves high-performance systems like Redis that sit at the core of our distributed tracing pipeline.

Distributed tracing tracks requests across multiple services to help find the root cause of errors or poor performance across an entire distributed system. Our Distributed Tracing Pipeline team manages the system that ingests, transforms, connects, and summarizes trace data from multiple agents. Last year, the team rolled out a new pipeline that simplified our overall architecture and reduced operational complexity. The redesign allowed us to deprecate older services, consolidate responsibilities into smaller, well-defined components, and, most importantly, run both Infinite Tracing and standard distributed tracing through a single, unified pipeline. The result was a cleaner, more maintainable, and more reliable system with fewer moving parts. But soon after the rollout, we noticed a significant increase in Redis storage and network costs.

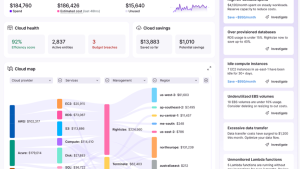

At New Relic, we use our own platform to monitor everything we build. So when costs spiked, we turned to New Relic itself to dig into the data. What followed was a two-part optimization journey: first, tuning our Redis infrastructure by upgrading and right-sizing clusters, and then rethinking how we store data to make compression and memory use far more efficient.

In this blog post, we’ll walk through that journey: what we changed, what we learned, and how those changes helped us cut Redis costs by more than half while improving overall performance and scalability.

Infrastructure optimization

When we first noticed the cost spike, our initial focus was on improving efficiency at the infrastructure level. We wanted to ensure our Redis setup was as optimized as possible for both performance and cost.

We took two immediate steps:

Migrate to better instance types

We upgraded our Redis clusters to the latest version and moved from m6g to r6g instance types. Both instance types belong to the same generation of ARM-based EC2 instances, but r6g is memory-optimized, with 8 GB of RAM per vCPU versus 4 GB on m6g. After running the new pipeline for a few months, we found that our CPU usage was very low relative to our memory utilization. Switching from m6g to r6g let us reduce the number of nodes in our clusters without sacrificing throughput, making it a better fit for our high-volume but simple Redis workload. The migration was also the perfect opportunity to upgrade Redis versions, giving us access to the stability and operational improvements in Redis 7.x.
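To make the node-count effect concrete, here is a back-of-the-envelope sizing sketch. It uses only the per-vCPU memory ratios quoted above; the 600 GB working set, the 4-vCPU node size, and the 75 percent fill target are hypothetical numbers chosen for illustration, and real usable memory per node is lower than the raw RAM figure once OS and Redis overhead are accounted for.

```python
import math

# RAM per vCPU for each instance family, as quoted above (GB).
GB_PER_VCPU = {"m6g": 4, "r6g": 8}


def nodes_needed(required_gb: float, family: str, vcpus_per_node: int,
                 fill_target: float = 0.75) -> int:
    """Minimum node count to hold `required_gb` of data.

    Simplified model (an assumption, not a vendor formula): each node has
    vcpus_per_node * GB_PER_VCPU[family] GB of RAM, and we plan to fill only
    `fill_target` of it, leaving the rest for overhead and traffic spikes.
    """
    usable_per_node = vcpus_per_node * GB_PER_VCPU[family] * fill_target
    return math.ceil(required_gb / usable_per_node)


# Hypothetical workload: 600 GB of Redis data on 4-vCPU nodes.
print(nodes_needed(600, "m6g", 4))  # 50
print(nodes_needed(600, "r6g", 4))  # 25
```

For a memory-bound workload, doubling memory per vCPU halves the node count, which also halves total vCPUs; that trade only makes sense because CPU utilization was already low.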

Right-size Redis clusters

In several production cells, Redis clusters had been deliberately over-provisioned to absorb traffic spikes. While this provided ample headroom, it also led to unnecessary cost. Using telemetry from our Infrastructure AWS integrations, we analyzed usage patterns and memory consumption. Based on those insights, we manually downscaled the clusters to match actual usage while maintaining safe performance margins.
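The right-sizing step can be expressed as a small calculation over observed memory samples. This sketch is an illustration, not our actual tooling: it assumes cluster-wide memory usage samples (in GB) exported from a dashboard, sizes for the observed peak, and keeps a utilization ceiling and a minimum node count as the safety margin. The 32 GB node size, 75 percent ceiling, and two-node floor are hypothetical values.

```python
import math


def right_size(samples_gb, node_memory_gb, max_utilization=0.75, min_nodes=2):
    """Smallest node count that keeps the observed peak under max_utilization.

    samples_gb: cluster-wide memory usage samples over a representative window.
    All thresholds are illustrative assumptions, not recommended values.
    """
    if not samples_gb:
        raise ValueError("need at least one memory sample")
    peak = max(samples_gb)
    nodes = math.ceil(peak / (node_memory_gb * max_utilization))
    return max(nodes, min_nodes)  # never drop below the redundancy floor


# Hypothetical samples: peak usage of 210 GB on 32 GB nodes.
print(right_size([120, 150, 210, 180], node_memory_gb=32))  # 9
```

Sizing to the peak of a window that includes known traffic spikes preserves the headroom that the over-provisioning was meant to provide, without paying for idle capacity beyond it.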

These two steps delivered the first round of optimization: cost savings and better resource utilization. During this process, we also identified another potential area for improvement, how data is stored in Redis, which later became the foundation for a much larger optimization effort.

Why we needed to rethink how we store data

After optimizing our Redis infrastructure, we continued to watch its performance closely in New Relic as we rolled the changes out across environments. Everything looked healthy: we saw immediate cost improvements, and memory and network usage matched what we expected for the incoming data volume.

At New Relic, however, we’re always looking for ways to make our systems more efficient. During one of those explorations, an engineer was studying how data was stored in Redis and noticed something interesting about how span data was being compressed.

Our tracing pipeline receives several spans per Kafka batch, groups them by trace key, and then pushes those spans to Redis. In Redis, each trace key maps to a list of spans, and our Redis client compressed each span individually when serializing in-memory objects for storage:

encodedTraceKey: String = [non-empty list of compressed spans: ByteArray]

This ensures that each span is compressed before being pushed to Redis.
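A minimal sketch of this original write path follows. A dictionary of lists stands in for Redis (appending plays the role of RPUSH), and JSON plus zlib stand in for our client's actual serializer and codec, which are assumptions here; the `trace_id` field name is likewise hypothetical.

```python
import json
import zlib
from collections import defaultdict

# Stand-in for Redis: trace key -> list of stored elements (like RPUSH/LRANGE).
fake_redis = defaultdict(list)


def push_spans_per_span(trace_key, spans):
    """Original layout: each span is serialized and compressed on its own."""
    for span in spans:
        payload = zlib.compress(json.dumps(span).encode("utf-8"))
        fake_redis[trace_key].append(payload)  # one list element per span


def read_spans_per_span(trace_key):
    """Decompress and deserialize every element stored under the key."""
    return [json.loads(zlib.decompress(p)) for p in fake_redis[trace_key]]


def ingest_kafka_batch(kafka_batch):
    """Group the spans from one Kafka batch by trace key, then push them."""
    by_trace = defaultdict(list)
    for span in kafka_batch:
        by_trace[span["trace_id"]].append(span)
    for trace_key, spans in by_trace.items():
        push_spans_per_span(trace_key, spans)
```

Every span pays its own compression framing, and the compressor never sees two spans at once, so content they share cannot be deduplicated.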

The setup was working as intended, but our engineers began to wonder whether there was room for optimization. What if, instead of storing individual spans, we stored batches of spans together? Could that make compression more efficient and reduce storage or memory overhead?

Rethinking storage with Redis batching

With that opportunity identified, our next step was to test whether grouping spans together could make compression more effective. This meant switching our data representation in Redis from a list of individual spans to a list of serialized lists of spans. In the new structure, multiple spans are stored together and compressed as a single unit, so fewer, larger payloads are written to and read from Redis. In theory, this should make compression more efficient and simplify Redis operations overall: spans from the same trace share a lot of repeated content, such as trace IDs, service names, and attribute keys, and a compressor can only exploit that redundancy when it sees those spans together.
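The batched layout can be sketched in the same style. A dictionary of lists stands in for Redis, and JSON plus zlib are assumed in place of the real serializer and codec. The key change is that each list element now holds one compressed, serialized list of spans rather than a single span, so readers must flatten the batches back out.

```python
import json
import zlib
from collections import defaultdict

# Stand-in for Redis: trace key -> list of compressed batches.
fake_redis = defaultdict(list)


def push_spans_batched(trace_key, spans):
    """New layout: all spans for this key from one Kafka batch are serialized
    together and compressed as a single list element."""
    if not spans:
        return  # keep the stored list free of empty batches
    payload = zlib.compress(json.dumps(spans).encode("utf-8"))
    fake_redis[trace_key].append(payload)


def read_spans_batched(trace_key):
    """Each element is a compressed batch; flatten them back into spans."""
    spans = []
    for payload in fake_redis[trace_key]:
        spans.extend(json.loads(zlib.decompress(payload)))
    return spans
```

Because the compressor sees every span in the batch at once, repeated strings can be encoded as back-references within its window, and the fixed per-payload framing is paid once per batch instead of once per span.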

To validate the idea, our engineers ran a controlled test to collect empirical data on how batching affected compression efficiency and resource usage. The experiment was deployed in one staging and one production cell and ran for a few hours to compare compression behavior between single-span and batched-span storage. The results were immediately encouraging. On average, the compression efficiency improved from about 20 percent to 42 percent in production and from 28 percent to 52 percent in staging.

| Cell | Avg batch length (spans) | Avg uncompressed size | Avg size, current per-span compression | Avg size, new batched compression | New compression ratio |
|---|---|---|---|---|---|
| test-cell-01 | 9.96 | 11.1 KB | 8.48 KB | 2.14 KB | 42.86% |
| test-cell-02 | 5.08 | 6.88 KB | 4.9 KB | 1.46 KB | 52.19% |
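A comparison like this can be reproduced offline by replaying captured batches through both encodings. The sketch below is a hypothetical harness, not the one our engineers used: it assumes JSON serialization and zlib compression, and defines compression efficiency as the fraction of bytes saved relative to the uncompressed size, which may differ from how the ratios in the table were computed.

```python
import json
import zlib


def encoded_sizes(batches):
    """Total bytes for one set of writes under three encodings.

    batches: list of span lists, one per (trace key, Kafka batch) write.
    Returns (uncompressed, per-span compressed, batched compressed).
    """
    raw = per_span = batched = 0
    for spans in batches:
        encoded = [json.dumps(s).encode("utf-8") for s in spans]
        raw += sum(len(e) for e in encoded)
        per_span += sum(len(zlib.compress(e)) for e in encoded)
        batched += len(zlib.compress(json.dumps(spans).encode("utf-8")))
    return raw, per_span, batched


def savings(raw, compressed):
    """Fraction of bytes saved; negative if compression expanded the data."""
    return 1 - compressed / raw


def compare(batches):
    """Return (per-span savings, batched savings) for the same writes."""
    raw, per_span, batched = encoded_sizes(batches)
    return savings(raw, per_span), savings(raw, batched)
```

Running both encodings over the same captured writes keeps the comparison fair: only the storage layout changes, not the data.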

Going further, we considered that trace sizes are highly variable, ranging from a single span or a few spans to several thousand. So we asked: what is the improvement if we exclude single-span traces? Under that premise, the compression ratio improves to about 68 percent for batches of more than one span.
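Single-span traces pull the average down because a batch of one has nothing to share redundancy with: it compresses essentially like the old per-span path, plus a few bytes of list framing. This hypothetical helper, assuming JSON serialization and zlib compression, shows how excluding those batches changes the aggregate.

```python
import json
import zlib


def batched_savings(batches, only_multi_span=False):
    """Fraction of bytes saved by batch compression across all writes.

    With only_multi_span=True, batches containing a single span are skipped,
    mirroring the "exclude single-span traces" analysis.
    """
    raw = compressed = 0
    for spans in batches:
        if only_multi_span and len(spans) < 2:
            continue  # a batch of one gains nothing from batching
        raw += sum(len(json.dumps(s).encode("utf-8")) for s in spans)
        compressed += len(zlib.compress(json.dumps(spans).encode("utf-8")))
    return 1 - compressed / raw if raw else 0.0
```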

These findings provided clear evidence that batching could substantially reduce Redis memory and bandwidth usage, giving the team the confidence to move forward with broader testing.

Measuring the impact

Once Redis batching was implemented, we rolled it out gradually across environments, starting in staging and then moving to production. The rollout was fully controlled by a feature flag, allowing us to enable or disable batching safely while monitoring results in real time. Using New Relic dashboards, we tracked Redis memory usage, network traffic, and overall system performance at each stage of deployment.
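One practical detail of a flag-controlled rollout is that a trace key can receive writes in both formats if the flag flips while the trace is still being assembled. The sketch below shows one way a reader could tolerate that. It assumes a hypothetical one-byte format tag on every element, plus JSON, zlib, and a dictionary standing in for Redis; treat it as an illustration of the concern rather than a description of the production implementation.

```python
import json
import zlib
from collections import defaultdict

# Hypothetical one-byte tags that say how each list element is encoded.
FORMAT_SINGLE = b"\x01"  # element holds one compressed span
FORMAT_BATCH = b"\x02"   # element holds a compressed list of spans

fake_redis = defaultdict(list)  # stand-in for Redis lists


def write_spans(trace_key, spans, batching_enabled):
    """Write path gated by a feature flag (how the flag is read is assumed)."""
    if not spans:
        return
    if batching_enabled:
        body = zlib.compress(json.dumps(spans).encode("utf-8"))
        fake_redis[trace_key].append(FORMAT_BATCH + body)
    else:
        for span in spans:
            body = zlib.compress(json.dumps(span).encode("utf-8"))
            fake_redis[trace_key].append(FORMAT_SINGLE + body)


def read_spans(trace_key):
    """Decode elements of either format, so keys written on both sides of a
    flag flip still return every span in write order."""
    spans = []
    for element in fake_redis[trace_key]:
        tag, body = element[:1], element[1:]
        decoded = json.loads(zlib.decompress(body))
        if tag == FORMAT_BATCH:
            spans.extend(decoded)
        elif tag == FORMAT_SINGLE:
            spans.append(decoded)
        else:
            raise ValueError(f"unknown element format: {tag!r}")
    return spans
```

With a reader like this, turning the flag back off is also safe, since elements already written in the batched format remain readable.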

And the results did not disappoint. New Relic’s data ingest operates on a cellular architecture, which means our ingest is split across many independent clusters, or cells, so we measured results at the cell level. The data shown here comes from a single staging cell, which gave a clear view of the performance gains we could expect. In that cell, Redis memory usage dropped significantly: the maximum memory used across the cluster fell by 66 percent. Network traffic between services fell by the same 66 percent.

The distributed tracing pipeline continued to perform reliably throughout, confirming that large efficiency gains can be achieved without compromising stability or throughput.

Key takeaways

This optimization journey showed that even small architectural adjustments can lead to meaningful cost and performance improvements at scale. By combining detailed observability data with a careful review of how data is stored and transmitted, we uncovered simple but high-impact opportunities for efficiency.

- Start with infrastructure fundamentals. Upgrading to better instance types and right-sizing clusters can deliver quick wins without deep code changes.

- Look beyond infrastructure. Sometimes, data representation matters just as much as hardware. In our case, switching from single-span compression to batched compression reduced Redis usage by more than half.

- Measure everything. Observability was key to identifying the issue, validating the fix, and quantifying its impact.

At New Relic, we believe in applying observability not just to help our customers, but to continuously improve how we run our own systems. This experience reinforced that visibility into every layer, from code to infrastructure, is the foundation of sustainable performance at scale.

Conclusion

Optimizing costs at scale is rarely about one big change. It’s about understanding how each layer of a system contributes to performance and finding practical ways to make those interactions more efficient. By combining infrastructure tuning with a smarter approach to data storage, we reduced Redis memory and bandwidth usage by 66 percent and cut our annual Redis costs in half, all without sacrificing reliability or performance.

This experience showed that meaningful gains often come from revisiting everyday design choices. The same approach can apply to any large-scale system. Explore your own Redis and storage metrics in New Relic, and you might find similar opportunities hiding in plain sight.

The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. Please join us exclusively at the Explorers Hub (discuss.newrelic.com) for questions and support related to this blog post. This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve or endorse the information, views or products available on such sites.