With the rise of large language models (LLMs) in almost every area of technology, monitoring how your LLMs perform is increasingly important. LLMs, the technology powering AI applications, are black boxes with unpredictable outputs: changing a single word in a prompt can return a completely different answer. Engineers running LLMs in production have to be prepared for the unpredictable. For example, users will submit prompts that break the system, a simple PR that fixes one issue can introduce four unforeseen ones, and latency can quickly get out of hand. However, abnormal behavior in production isn't specific to running LLMs; it's a reality for most modern software.

Observability lets engineers analyze the inputs and outputs of complex software, even black boxes like LLMs, and provides the signals they need to troubleshoot in production.

This blog post shows you how to set up LLM observability using OpenTelemetry for managed LLMs, like those from OpenAI. Then, we'll show how you can use the data you collect to debug and optimize your application in production.

Extending LLMs with AI frameworks like LangChain

LLMs, like GPT-4, are limited by the information they were trained on and cannot access or retrieve real-time or external data. For example, if a user asks ChatGPT about the latest developments in a particular scientific field, the model can only provide information up to its last training update, potentially missing recent breakthroughs or studies.

For instance, when I ask ChatGPT, “Who is Ice Spice?”, it's stumped because its training data cuts off in January 2022, when the Grammy-nominated rapper wasn't yet in the zeitgeist.

To extend what LLMs can do, frameworks like LangChain and LlamaIndex integrate LLMs with external databases or APIs through a technique called retrieval-augmented generation (RAG). With RAG, the application first retrieves relevant information from a database or a set of documents and then passes it to the language model along with the question, so the model can generate a response grounded in that information. This means that when asked about the latest pop stars, the model can draw on the most recent data from external databases or search engines and provide up-to-date, relevant information.
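To make the retrieve-then-generate pattern concrete, here is a minimal, framework-free sketch. It assumes a tiny in-memory list of documents and ranks them by simple word overlap with the question, a toy stand-in for the search engine or vector store a real RAG application would use. The function names (`tokenize`, `retrieve`, `build_augmented_prompt`) are hypothetical and not part of LangChain or LlamaIndex; the resulting prompt is what would then be sent to the LLM.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 1) -> List[str]:
    """Return the k documents sharing the most words with the query.

    Word overlap is a toy stand-in for a real search engine or vector store.
    Ties keep their original order because Python's sort is stable.
    """
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_augmented_prompt(query: str, documents: List[str], k: int = 1) -> str:
    """Combine the retrieved context with the user's question (the 'augmented' step)."""
    context = "\n".join(retrieve(query, documents, k))
    return f'Answer "{query}" using the following information:\n{context}'


if __name__ == "__main__":
    docs = [
        "Ice Spice is an American rapper born on January 1, 2000, in the Bronx, New York.",
        "The Bronx is a borough of New York City.",
    ]
    # This prompt would be sent to the LLM in place of the bare question.
    print(build_augmented_prompt("How old is Ice Spice?", docs))
```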

LangChain makes it easy to implement RAG with tools: out-of-the-box components that let an application interact with the outside world, such as search engines, weather APIs, and calculators.

With the DuckDuckGo search tool, a LangChain application goes through the following steps to answer the question, “How old is Ice Spice?”:

1. It creates a plan of action by asking the LLM which tool it needs to gather the additional information required to answer the query. In this case, the LLM responds that the application should use the search tool to look up Ice Spice.

2. Next, the LangChain application uses the DuckDuckGo search tool included with LangChain to query “How old is Ice Spice?” The tool returns relevant paragraphs about Ice Spice, including her age and biographical information:

“Ice Spice is an American rapper born on January 1, 2000, in the Bronx, New York. She rose to fame in 2022 with her viral songs ‘Munch (Feelin' U)’, ‘Bikini Bottom’ and ‘In Ha Mood’, and her collaborations with Lil Tjay, PinkPantheress, Nicki Minaj, and others. She has been nominated for several awards and honors.”

3. Then, with the additional context gathered by the search tool, the LangChain application queries the LLM again with the following prompt:

Answer “How old is Ice Spice?” using the following information: “Ice Spice is an American rapper born on January 1, 2000 in the Bronx, New York. She rose to fame in 2022 with her viral songs ‘Munch (Feelin' U)’, ‘Bikini Bottom’ and ‘In Ha Mood’, and her collaborations with Lil Tjay, PinkPantheress, Nicki Minaj and others. She has been nominated for several awards and honors.”

With this additional context included in the prompt, the LLM can deduce that Ice Spice is 23 years old (as of this writing).
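The three steps above can be sketched as a single function. This is a simplified, hypothetical illustration rather than LangChain's actual agent implementation, which can loop through several tool calls before answering. `plan`, `search`, and `llm` are placeholder callables that you would back with a real LLM client and a search tool such as DuckDuckGo; the prompt wording mirrors the example prompt shown above.

```python
from typing import Callable


def answer_with_search(
    question: str,
    plan: Callable[[str], str],
    search: Callable[[str], str],
    llm: Callable[[str], str],
) -> str:
    """Plan, call a tool, then answer with the tool's output as extra context."""
    # Step 1: ask the LLM which tool (if any) it needs to answer the question.
    tool = plan(question)
    if tool != "search":
        # No extra information needed: answer directly.
        return llm(question)

    # Step 2: run the search tool with the user's question.
    context = search(question)

    # Step 3: query the LLM again, this time with the retrieved context.
    prompt = f'Answer "{question}" using the following information "{context}".'
    return llm(prompt)
```

Because each step is a separate call, each one is also a natural place to start a trace span, which is what makes the debugging in the next section possible.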

Debugging LangChain applications

If a LangChain application experiences performance issues or unexpected behavior, traces can help identify the exact step or component responsible. Traces can highlight anomalous patterns or deviations in the workflow that might not be apparent through code analysis or standard logging. This level of detail is crucial for efficiently diagnosing and resolving issues in LangChain applications, where problems can arise from various tools, APIs, or LLM outputs.
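As a rough illustration of how trace data narrows down the culprit, the sketch below takes span records from a single run and flags the steps that errored or ran longer than a threshold you choose. The `SpanRecord` type, the step names, and the threshold are assumptions for illustration; in practice these fields would come from your tracing backend.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SpanRecord:
    """A simplified view of one span: the step name, its duration, and whether it failed."""
    name: str
    duration_ms: float
    error: bool = False


def suspicious_steps(spans: List[SpanRecord], slow_ms: float) -> List[str]:
    """Return names of failed or slow steps, failures first, then slowest first."""
    flagged = [s for s in spans if s.error or s.duration_ms > slow_ms]
    # Sort errors ahead of slow-but-successful steps, then by descending duration.
    flagged.sort(key=lambda s: (not s.error, -s.duration_ms))
    return [s.name for s in flagged]
```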

Let's say a user asks, “What is Ice Spice's age divided by the number of Nick Cannon's kids?” Using RAG with the DuckDuckGo search tool, the application should be able to retrieve both numbers. However, it returns an incorrect value.

When looking through the logs for the LangChain run, we can quickly see where things went wrong: the LLM incorrectly concludes that Ice Spice's age is 42, which is actually Nick Cannon's age. To prevent this in the future, we can change the prompt so the LLM breaks the query down further when multiple pieces of information are needed to answer it. But how can we scale this in production, where we need to keep track of thousands of queries that may be returning incorrect information?

OpenTelemetry to the rescue

OpenTelemetry is an open-source, vendor-neutral way to collect and export metrics, logs, and traces from your applications. Using its language-specific SDKs, you can collect and export traces to the backend of your choice, whether it’s a vendor like New Relic or an open-source backend like Jaeger or Zipkin. With Python specifically, you have multiple ways to get traces from your application.

Use OpenTelemetry Python auto instrumentation

With Python auto-instrumentation, OpenTelemetry injects tracing code into your application at runtime. This means that all you have to do to get data flowing from your application is install the relevant libraries and run your application through OpenTelemetry's opentelemetry-instrument command.

```shell
poetry add opentelemetry-instrumentation-requests
poetry add opentelemetry-exporter-otlp
poetry add opentelemetry-distro

poetry run opentelemetry-instrument --traces_exporter console,otlp \
  --metrics_exporter console \
  --service_name llm-playground \
  --exporter_otlp_endpoint 0.0.0.0:4317 \
  python main.py
```

Even though this is easy to get started with, the out-of-the-box data it collects is limited at best. In this example application, the only trace attributes collected are the endpoint that was hit and the duration of the call. Any additional attributes, like token usage and temperature, need to be attached manually.

Attaching custom attributes to your traces with manual instrumentation

Manual instrumentation in Python opens up a whole new world of customization, letting you document each step of your LangChain application in granular detail. To get started, here's how to add token usage attributes to a Python function that calls the OpenAI API. The token counts are taken from the usage object on the chat completion returned by the API.

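```python
from openai import OpenAI
from opentelemetry import trace

tracer = trace.get_tracer(__name__)
client = OpenAI()  # reads OPENAI_API_KEY from the environment


def do_work(model, conversation):
    with tracer.start_as_current_span("chat-completion") as span:
        # The span times the OpenAI call and carries its token usage
        response = client.chat.completions.create(model=model, messages=conversation)
        usage = response.usage  # an object with attributes, not a dict
        span.set_attribute("llm.usage.completion_tokens", usage.completion_tokens)
        span.set_attribute("llm.usage.prompt_tokens", usage.prompt_tokens)
        span.set_attribute("llm.usage.total_tokens", usage.total_tokens)
        return response
```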

Best of both worlds with OpenLLMetry

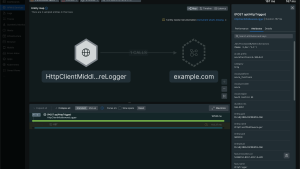

OpenLLMetry is an open-source project that helps auto-instrument LLM apps using OpenTelemetry through libraries for various components of the AI stack, including frameworks like LangChain all the way down to providers like OpenAI and Amazon Bedrock. The data that it collects can be exported via the OpenTelemetry Protocol (OTLP) to the OpenTelemetry collector or a vendor like New Relic.

To get started, install the SDK:

```shell
pip install traceloop-sdk
```

Then, to start instrumenting your code, add these lines to your application:

```python
from traceloop.sdk import Traceloop

Traceloop.init()
```

What can you do with the data?

Tracking errors

Tracking and addressing errors in production is a key way to iterate on and improve your application. Because LLMs are unpredictable, they can generate incorrect or incompatible responses. In the example above, the model returned a value that the application's math tool didn't recognize.
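One defensive pattern, sketched below under the assumption that your math tool expects a plain arithmetic expression, is to validate the LLM's output before handing it to the tool, so a malformed response fails with a clear error instead of a confusing downstream failure. `evaluate_llm_expression` is a hypothetical helper, not LangChain's math chain. In a traced application, you could attach the raised error to the active span with OpenTelemetry's `span.record_exception(err)` and `span.set_status(Status(StatusCode.ERROR))` (both from `opentelemetry.trace`), so these failures become searchable alongside the rest of the trace.

```python
import ast
import operator

# Only these arithmetic operators are accepted in LLM output.
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def evaluate_llm_expression(text: str) -> float:
    """Safely evaluate an arithmetic expression produced by an LLM.

    Raises ValueError if the text is anything other than numbers combined
    with + - * /, for example prose or a function call.
    """
    try:
        tree = ast.parse(text.strip(), mode="eval")
    except SyntaxError as exc:
        raise ValueError(f"LLM output is not a math expression: {text!r}") from exc

    def _eval(node: ast.AST) -> float:
        # Plain int/float literals only (bool is excluded by the exact type check).
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
            return _BINARY_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"Unsupported content in LLM output: {text!r}")

    return _eval(tree.body)
```

For example, `evaluate_llm_expression("23 / 2")` returns `11.5`, while a prose answer such as "Ice Spice is 23 years old" raises `ValueError`.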

Prompt engineering is crucial for addressing incorrect or incompatible outputs. By designing and refining the prompts you feed into the LLM, you can guide it toward producing compatible outputs. Including examples in your prompts, or specifying how certain complex scenarios should be approached, helps the LLM handle these situations better.
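For the multi-part question from the debugging example, one prompt-engineering approach is to tell the LLM to split the question into sub-questions and to show it an example of doing so. The template below is a hypothetical sketch: the instruction wording and the worked example are assumptions you would tune for your own application, and the resulting string would be sent to the LLM as usual.

```python
DECOMPOSITION_PROMPT = """\
Break the question into sub-questions that each need exactly one fact.
Answer each sub-question separately, then combine the answers.
Only use a fact for the entity it describes.

Example
Question: What is the height of Building A divided by the height of Building B?
Sub-questions:
1. What is the height of Building A?
2. What is the height of Building B?
Final step: divide answer 1 by answer 2.

Question: {question}
Sub-questions:
"""


def build_decomposition_prompt(question: str) -> str:
    """Fill the user's question into the few-shot decomposition template."""
    return DECOMPOSITION_PROMPT.format(question=question.strip())
```

The line "Only use a fact for the entity it describes" targets the failure seen earlier, where one person's age was attributed to another.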

Tracking cost

Tokens are units of text, usually a few characters or part of a word, that an LLM processes. The number of tokens determines how much text the model can analyze or generate in a given request. How text is split into tokens depends on the encoding used by the LLM; for instance, GPT-3 uses a tokenizer that breaks text down into tokens representing whole words, parts of words, or punctuation. Since LLM providers often charge based on the number of tokens processed, understanding and managing token usage is vital for cost control.
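Because billing is usually per token, a request's cost is roughly its prompt tokens times the input price plus its completion tokens times the output price. The sketch below encodes that arithmetic. The prices are placeholders, not any provider's actual rates, and input and output prices are kept separate because providers often charge different rates for each.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPricing:
    """Price in USD per 1,000 tokens. The values used below are placeholders."""
    input_per_1k: float
    output_per_1k: float


def request_cost(prompt_tokens: int, completion_tokens: int, pricing: ModelPricing) -> float:
    """Estimate the cost of one request from its token counts."""
    return (
        prompt_tokens / 1000 * pricing.input_per_1k
        + completion_tokens / 1000 * pricing.output_per_1k
    )


if __name__ == "__main__":
    # Made-up prices: 1,200 prompt tokens and 300 completion tokens.
    placeholder = ModelPricing(input_per_1k=0.01, output_per_1k=0.03)
    print(f"${request_cost(1200, 300, placeholder):.4f}")
```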

Efficient token management can lead to more optimized interactions with the model, because it lets you balance comprehensive, high-quality responses against the need to minimize unnecessary token consumption. By tracking both the number of tokens sent to and returned by your LLM, you can get a granular view of your costs and find ways to optimize your applications.
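Once token counts are recorded as span attributes (for example, `llm.usage.prompt_tokens` and `llm.usage.completion_tokens`, as in the manual instrumentation example earlier), you can roll them up to see where tokens go. This sketch assumes spans have already been exported as plain dictionaries of attributes and that each span carries a model name under a hypothetical `llm.request.model` key; most observability backends can run the same aggregation as a query.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping


def tokens_by_model(spans: Iterable[Mapping[str, object]]) -> Dict[str, Dict[str, int]]:
    """Sum prompt and completion tokens per model across exported span attributes."""
    totals: Dict[str, Dict[str, int]] = defaultdict(lambda: {"prompt": 0, "completion": 0})
    for attrs in spans:
        # Spans without a model attribute are grouped under "unknown".
        model = str(attrs.get("llm.request.model", "unknown"))
        totals[model]["prompt"] += int(attrs.get("llm.usage.prompt_tokens", 0))
        totals[model]["completion"] += int(attrs.get("llm.usage.completion_tokens", 0))
    return dict(totals)
```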

A/B testing LLM performance

The accelerating adoption of LLMs has brought a Cambrian explosion of both proprietary and open-source models. Each provider offers various models, which vary in pricing based on their training data, size, and capabilities. The latency, accuracy, and cost of your application can vary widely depending on the LLM chosen for your particular use case.

With access to tracing data, you can route different requests to different LLMs and measure each model's performance and cost. With real-time data from your application, you can find the optimal model for your use case.
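A simple way to run such a comparison, sketched below, is to assign each user to a model variant deterministically by hashing their ID, so the same user always hits the same model, and then summarize latency and token usage per variant from your trace data. The variant names, the record format, and both helper functions are illustrative assumptions; a real experiment would also need a measure of answer quality and enough traffic for the differences to be meaningful.

```python
from __future__ import annotations

import hashlib
from collections import defaultdict
from statistics import mean
from typing import Iterable, Sequence, Tuple


def assign_variant(user_id: str, variants: Sequence[str]) -> str:
    """Deterministically map a user to one of the variants via a stable hash."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


def summarize(records: Iterable[Tuple[str, float, int]]) -> dict:
    """Aggregate (variant, latency_ms, total_tokens) records per variant."""
    grouped = defaultdict(list)
    for variant, latency_ms, total_tokens in records:
        grouped[variant].append((latency_ms, total_tokens))
    return {
        variant: {
            "requests": len(rows),
            "mean_latency_ms": mean(latency for latency, _ in rows),
            "mean_total_tokens": mean(tokens for _, tokens in rows),
        }
        for variant, rows in grouped.items()
    }
```

The sketch uses `hashlib` rather than Python's built-in `hash()` because string hashing is randomized per process by default, which would reshuffle users between variants on every restart.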

Conclusion

LLMs are like Pandora's box. Building with these models can add a lot of value quickly, but the models themselves are unpredictable black boxes. Even though LLMs seem like magic, AI applications are still applications written in code. Therefore, many of the lessons we've learned maintaining code at scale in production still apply.

Tracing, and observability as a whole, gives you better visibility into your application's performance. With tracing data, you can not only track errors and cost but also experiment with various providers and variables to find the best model for your use case. Being able to constantly tweak and optimize your application with real-time data allows developers to build reliable systems with guardrails, even when using unpredictable technologies.

The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. Please join us exclusively at the Explorers Hub (discuss.newrelic.com) for questions and support related to this blog post. This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve or endorse the information, views or products available on such sites.