Log monitoring for modern web applications built on cloud-native architectures requires a different approach than log monitoring for traditional applications. In part, this is because the application landscape is much more complicated: data comes from microservices, Kubernetes and other container technologies, and, in many cases, integrated open source components. All of this complexity makes it necessary to rethink your strategy for aggregating, analyzing, and storing your application logs.

Interpreting logs is a great way to gauge the health of your application, especially if you want to know more about services that exist for only an instant. But new tools and technologies also provide you with unprecedented volumes of data, making it harder to filter out noise. In this blog post, we’ll look at some of the challenges with log monitoring for cloud-native architectures and describe four best practices that help you define an effective strategy for your applications.

Top takeaways

- The best practices for log monitoring in cloud-native environments include using open standards, implementing a central log management solution, and keeping personal information out of your logs.

- Taking the wrong approach to log management in cloud-native architectures can limit your ability to respond to issues effectively or lock you into a specific vendor.

What are the challenges with log monitoring for cloud-native architectures?

Historically, log monitoring was easier because most application logs had a consistent structure and format. Transforming and aggregating this data was simple, so teams could combine logs from different sources into a single view of the environment’s performance. In a cloud-native world, this is no longer the case.

Some of the major challenges that teams face today include:

Scale

Due to the number of microservices, containers, layers of infrastructure, and orchestration taking place in cloud-native architecture, teams could easily end up dealing with hundreds of thousands of individual logs.

Ephemeral storage

In containerized environments, logs are often written to the container’s internal file system, which may exist only while the container is running. Teams need to collect log data into persistent storage so they can analyze performance and troubleshoot issues later.
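A common way to sidestep ephemeral container storage is to have the application write structured logs to stdout instead of a local file, so the container runtime captures them and a node-level collector can forward them to persistent storage. The sketch below uses Python's standard `logging` module; the `JsonFormatter` class, the `build_stdout_logger` helper, and the field names are hypothetical choices for illustration, not a required schema.

```python
import json
import logging
import sys
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Hypothetical formatter: renders each record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        return json.dumps(payload)


def build_stdout_logger(name: str = "app") -> logging.Logger:
    # Write to stdout rather than a file inside the container's file system.
    # The container runtime captures stdout, where a collector can pick it up.
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    logger.propagate = False  # avoid duplicate lines via the root logger
    return logger


if __name__ == "__main__":
    build_stdout_logger().info("service started")
```

One JSON object per line keeps each event self-describing, which makes the later aggregation and parsing steps simpler.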

Log variety

Cloud-native applications generate a wide array of data from the app and server, and they also rely on cloud services, orchestrators, and APIs to function properly. Each of these components generates valuable information that you need to collect from different instances, nodes, gateways, hosts, or proxies.

Vendor lock-in

If you use only a specific vendor’s logging tools, you may become locked into that environment and its proprietary log management solution. In multi-cloud environments, using different logging tools for different service providers can hamper your ability to monitor performance, troubleshoot issues, and understand dependencies.

You can overcome these challenges if you take the right approach from the start.

Best practices for log monitoring in cloud-native architectures

Here are some of the best practices you should include in your log-monitoring strategy.

1. Implement a log management solution.

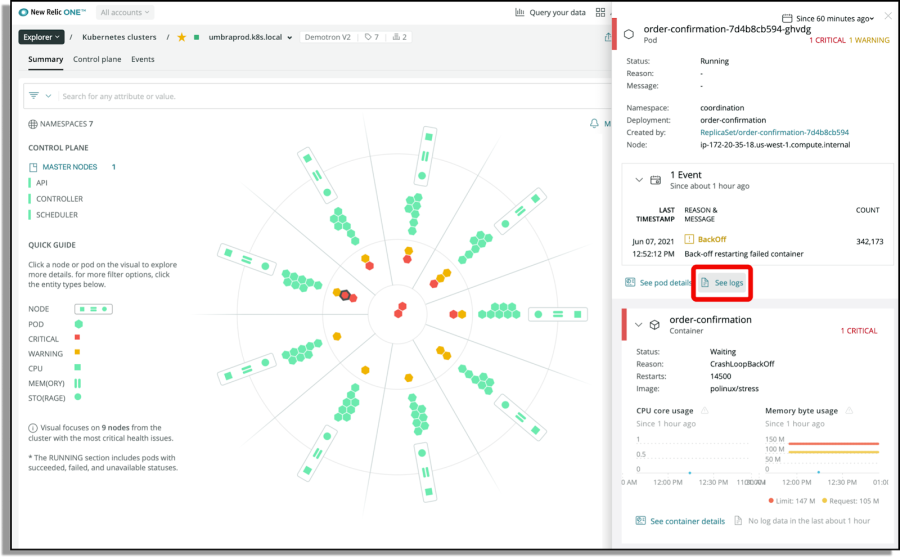

Due to the diversity of log data generated in your environment, the best option is to implement a log management solution that unifies all logs into a single collection. Managing logs from a centralized system lets you automatically aggregate all your logs into a manageable set of data for further analysis. Observability platforms let you collect and store all log data through a streamlined process, then visualize and analyze data from your applications, infrastructure, and end users.
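To make "a single collection" more concrete, here is a minimal sketch that merges log streams from several sources into one time-ordered view and tags each entry with its source. It assumes each source's stream is already sorted by timestamp; the `Entry` record, `merge_sources` function, and source names are hypothetical. A real log management platform does this at ingest time and at far larger scale.

```python
import heapq
from dataclasses import dataclass
from typing import Dict, Iterable, Iterator, Tuple


@dataclass(frozen=True)
class Entry:
    timestamp: float  # seconds since epoch
    source: str       # e.g. a service, node, or gateway name (hypothetical)
    message: str


def _tag(source: str, stream: Iterable[Tuple[float, str]]) -> Iterator[Entry]:
    # A separate function binds `source` per stream (avoids late-binding bugs).
    for ts, msg in stream:
        yield Entry(ts, source, msg)


def merge_sources(streams: Dict[str, Iterable[Tuple[float, str]]]) -> Iterator[Entry]:
    """Merge per-source (timestamp, message) streams, each already sorted by time."""
    tagged = [_tag(source, stream) for source, stream in streams.items()]
    # heapq.merge interleaves sorted inputs lazily, without loading everything into memory.
    return heapq.merge(*tagged, key=lambda e: e.timestamp)
```

For example, merging `{"api": [(1.0, "GET /orders"), (3.0, "200 OK")], "db": [(2.0, "SELECT orders")]}` yields the API request, then the database query, then the API response, in timestamp order.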

2. Adopt open standards for application logs.

Open standards, such as OpenTelemetry, help you avoid vendor lock-in and optimize your log monitoring processes by using vendor-neutral APIs. OpenTelemetry merges two earlier open standards (OpenTracing and OpenCensus) into a single collection of tools, SDKs, and APIs that let you instrument your code and generate, collect, and export logs, traces, and metrics.

With broad language support and integrations with popular frameworks, adopting open standards for your application telemetry will also simplify your log monitoring process. OpenTelemetry is free, open source, and supported by a variety of industry leaders. The maturity of its logs, traces, and metrics support varies by language, so check the current status for the languages you use.
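OpenTelemetry propagates request context using the W3C Trace Context `traceparent` header, which is what lets a log line be tied to a trace. The stdlib-only sketch below is not the OpenTelemetry SDK: it parses a `traceparent` value and attaches the IDs to every log record for a request through a `logging.LoggerAdapter`. The helper names `parse_traceparent` and `request_logger` are hypothetical.

```python
import logging
import re
from typing import Optional, Tuple

# version-traceid-parentid-flags, all lowercase hex (W3C Trace Context).
_TRACEPARENT = re.compile(r"^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def parse_traceparent(header: str) -> Optional[Tuple[str, str]]:
    """Return (trace_id, parent_id), or None if the header is invalid."""
    match = _TRACEPARENT.match(header.strip())
    if not match:
        return None
    version, trace_id, parent_id, _flags = match.groups()
    # Version "ff" and all-zero IDs are invalid per the specification.
    if version == "ff" or set(trace_id) == {"0"} or set(parent_id) == {"0"}:
        return None
    return trace_id, parent_id


def request_logger(base: logging.Logger, traceparent: str) -> logging.LoggerAdapter:
    """Attach trace context to every record logged for this request."""
    # parent_id is the caller's span; an OpenTelemetry SDK would start a child span.
    trace_id, parent_id = parse_traceparent(traceparent) or ("", "")
    return logging.LoggerAdapter(base, {"trace_id": trace_id, "parent_id": parent_id})
```

With a formatter such as `"%(trace_id)s %(message)s"`, every line logged through the adapter carries the trace ID, so a backend can join logs and traces on it.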

3. Embrace the latest tracing and logging technologies.

After you have a centralized log management solution from an observability platform, consider using new technologies like eBPF for collecting your data. Also, look for tools that provide no-code interfaces to visualize your data and custom log parsers that enable you to easily transform and shape log information into usable formats.
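As an illustration of what a custom log parser does, the sketch below turns an unstructured web server access-log line in Common Log Format into a structured record with typed fields. The function name `parse_clf` is hypothetical, and real platforms usually let you define parsing rules without writing code.

```python
import re
from datetime import datetime
from typing import Optional

# Common Log Format: host ident user [time] "request" status size
CLF = re.compile(
    r'(?P<host>\S+) \S+ (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-)'
)


def parse_clf(line: str) -> Optional[dict]:
    """Turn one access-log line into a structured record, or None if it doesn't match."""
    m = CLF.match(line)
    if m is None:
        return None
    rec: dict = m.groupdict()
    rec["time"] = datetime.strptime(rec["time"], "%d/%b/%Y:%H:%M:%S %z")
    rec["status"] = int(rec["status"])
    rec["size"] = 0 if rec["size"] == "-" else int(rec["size"])  # "-" means no body
    return rec
```

Because `re.match` anchors only at the start of the line, this pattern also accepts the longer "combined" format, which appends the referrer and user agent; those extra fields are simply ignored here.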

With improved log generation, collection, and visualization capabilities, you can:

- Troubleshoot your application performance by tracing each service request throughout the environment.

- Improve your capacity planning, load balancing, and application security.

- Correlate transaction data with operational data to see what is happening during each request.

- Ingest data and scale log monitoring to detect patterns in your data.
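Tracing requests and correlating transaction data with operational data usually comes down to a shared identifier. Assuming every record carries `trace_id` and `ts` fields (an assumption; field names vary by tool), a minimal sketch groups records into per-request timelines and computes how long each request spanned. The `timelines` and `durations` helpers are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def timelines(records: Iterable[dict]) -> Dict[str, List[dict]]:
    """Group records by trace_id and sort each group by timestamp."""
    groups: Dict[str, List[dict]] = defaultdict(list)
    for rec in records:
        trace_id = rec.get("trace_id")
        if trace_id:  # records without a trace ID can't be correlated this way
            groups[trace_id].append(rec)
    return {tid: sorted(recs, key=lambda r: r["ts"]) for tid, recs in groups.items()}


def durations(groups: Dict[str, List[dict]]) -> Dict[str, float]:
    """Elapsed time between the first and last record of each request."""
    return {tid: recs[-1]["ts"] - recs[0]["ts"] for tid, recs in groups.items()}
```

Records such as a node-level "cpu high" event have no trace ID, so they are left out of the timelines; joining them to requests would need another key, such as host and time window.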

4. Log only what you need.

Finally, logs need to contain enough metadata to provide context when you’re analyzing performance. With a log management solution, generating logs is easy, but logs won’t provide any benefit if the information they contain isn’t immediately useful. Log information should help you understand what is happening in the application or make decisions quickly.
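One way to log only what you need is to filter at the source: drop known noise and stamp the context you rely on onto everything else. Below is a sketch with a hypothetical `RelevanceFilter` for Python's `logging` module; the `/healthz` path and the `service` and `env` fields are illustrative assumptions.

```python
import logging
from typing import Iterable


class RelevanceFilter(logging.Filter):
    """Drop noisy low-severity records and add required metadata to the rest."""

    def __init__(self, noisy_substrings: Iterable[str], service: str, env: str):
        super().__init__()
        self.noisy = tuple(noisy_substrings)
        self.service = service
        self.env = env

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()
        # Keep warnings and errors even if they match a noisy pattern.
        if record.levelno < logging.WARNING and any(s in message for s in self.noisy):
            return False  # e.g. successful health checks add volume, not insight
        # Add context so the record can be analyzed on its own later.
        record.service = getattr(record, "service", self.service)
        record.env = getattr(record, "env", self.env)
        return True
```

Attach it with `handler.addFilter(RelevanceFilter(["/healthz"], service="checkout", env="prod"))`. A failing health check logged at ERROR still gets through, which is usually the point.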

Remember to exclude sensitive data from your logs by using anonymous identifiers for all private information. Use this Log Management Best Practices guide to develop your strategy and avoid common pitfalls of cloud-native log monitoring.
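The anonymous-identifier advice can be sketched as keyed hashing plus redaction: replace a user identifier with an HMAC-SHA256 token (the same input always yields the same token, so you can still follow one user's events) and mask email addresses that leak into free text. The helper names, the `user_` prefix, and the deliberately simple email pattern are assumptions. Keyed tokens are pseudonymous rather than fully anonymous, and the key belongs in a secret store, not in code.

```python
import hashlib
import hmac
import re

# Deliberately simple pattern; it will not catch every valid email address.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def pseudonymize(value: str, key: bytes) -> str:
    """Stable, non-reversible token for a private identifier (HMAC-SHA256)."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "user_" + digest[:16]


def redact(message: str) -> str:
    """Mask email addresses that slipped into free-text log messages."""
    return EMAIL.sub("[REDACTED_EMAIL]", message)
```

Using a keyed HMAC rather than a plain hash matters: without the key, an attacker can't rebuild the mapping by hashing a list of known email addresses.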

GCP-specific tips

Monitoring logs in Google Cloud Platform (GCP) is complicated. You have to deal with many challenges, such as a complex environment, large scale, and security and compliance requirements.

To address these challenges, it’s a best practice to invest in a specialized observability platform for monitoring GCP logs. Such a platform can provide centralized log management, log analysis tools, real-time monitoring, and automated alerting. Here are some best practices to support you:

Centralize logs

Centralize all logs in a single location for easy analysis, troubleshooting, and monitoring.

Define log sinks

Log sinks control where your logs are routed. Configuring sinks to route logs to the appropriate destinations makes your data much easier to analyze. Learn how to route GCP logs to New Relic with log sinks.
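Conceptually, each sink pairs a filter with a destination, and a log entry is sent to every sink whose filter it matches. The sketch below models only that idea in plain Python. It is not the Cloud Logging API or its filter language; the `Sink` class, the `route` function, the sink names, and the destination labels are all hypothetical. In GCP you define real sinks in the console, with `gcloud logging sinks create`, or through the client libraries.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Sink:
    name: str
    destination: str                 # hypothetical label, e.g. "audit-archive"
    matches: Callable[[dict], bool]  # stands in for a Cloud Logging filter


def route(entry: dict, sinks: List[Sink]) -> List[str]:
    """Return the destinations an entry would be sent to (one per matching sink)."""
    return [s.destination for s in sinks if s.matches(entry)]


SINKS = [
    Sink("errors", "alerting-pipeline", lambda e: e.get("severity") in {"ERROR", "CRITICAL"}),
    Sink("audit", "audit-archive", lambda e: "cloudaudit" in e.get("log_name", "")),
    Sink("all", "central-log-platform", lambda e: True),
]
```

With these example sinks, an ERROR entry goes to both the alerting pipeline and the central platform, while an ordinary INFO entry goes only to the central platform.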

Set up alerts

Use alerts that trigger on anomalous behavior in GCP. For instance, you’ll want to detect unusual traffic patterns (such as sudden spikes or drops in user traffic), unusual data access patterns, and potential attempts at unauthorized access, such as unusual login patterns. With New Relic, you can set up alerts based on static thresholds or use AIOps to help find unusual patterns.
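To illustrate the difference between a static threshold and pattern-based detection, here is a minimal sketch that checks the latest per-minute request count against an optional fixed limit and against a trailing baseline, flagging it when it deviates by more than `k` standard deviations. This is a plain z-score test, not New Relic's AIOps or any product's algorithm; the `check` function, window size, and `k` are assumptions.

```python
from statistics import mean, pstdev
from typing import List, Optional


def check(counts: List[float], window: int = 10, k: float = 3.0,
          static_limit: Optional[float] = None) -> List[str]:
    """Return reasons the latest count looks anomalous (empty list if none)."""
    latest = counts[-1]
    reasons = []
    if static_limit is not None and latest > static_limit:
        reasons.append("static")
    history = counts[-window - 1:-1]  # the `window` values before the latest one
    if len(history) >= 2:
        mu, sigma = mean(history), pstdev(history)
        if sigma > 0 and abs(latest - mu) > k * sigma:
            reasons.append("spike" if latest > mu else "drop")
    return reasons
```

With a steady baseline around 100 requests per minute, a count of 400 is reported as a spike and a count of 5 as a drop, even though a static upper limit alone would miss the drop.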

AWS-specific tips

While sharing some similarities with GCP, Amazon Web Services (AWS) presents unique metrics that require specialized monitoring. Here are some AWS-specific best practices:

Enable log rotation

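Enable log rotation so that log files don’t fill up your disk. Rotation eventually deletes the oldest files, so ship logs to persistent storage, such as CloudWatch Logs, if you need to keep them. With some services, log rotation may be enabled by default.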
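Where your application writes its own log files, Python's standard library includes a size-based rotating handler. A minimal sketch follows; the `rotating_logger` helper is hypothetical, and the 10 MiB size and five backups are illustrative defaults rather than recommendations.

```python
import logging
from logging.handlers import RotatingFileHandler


def rotating_logger(path: str, max_bytes: int = 10 * 1024 * 1024,
                    backups: int = 5) -> logging.Logger:
    """Keep disk usage for this log at roughly max_bytes * (backups + 1)."""
    # When the file would exceed max_bytes, it is renamed to path.1, path.1 to
    # path.2, and so on; files beyond `backups` are deleted.
    handler = RotatingFileHandler(path, maxBytes=max_bytes, backupCount=backups)
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
    logger = logging.getLogger(f"rotating:{path}")
    logger.setLevel(logging.INFO)
    logger.handlers = [handler]
    logger.propagate = False
    return logger
```

For time-based rotation, the same module provides `TimedRotatingFileHandler`.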

Create CloudWatch metric filters

Metric filters let you define specific patterns to look for in your CloudWatch Logs data and turn matching log events into CloudWatch metrics. You can then graph those metrics or set alarms on them.
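A metric filter essentially counts (or extracts values from) log events that match a pattern and publishes the result as a metric. The sketch below emulates that behavior locally with a regular expression and one-minute buckets. CloudWatch uses its own filter pattern syntax, not Python regular expressions, so treat this only as a model; the `metric_from_logs` function and the `ERROR` pattern are hypothetical.

```python
import re
from collections import Counter
from typing import Dict, Iterable, Tuple


def metric_from_logs(events: Iterable[Tuple[int, str]], pattern: str,
                     period_s: int = 60) -> Dict[int, int]:
    """Count matching events per period; keys are period start times (epoch seconds)."""
    regex = re.compile(pattern)
    counts: Counter = Counter()
    for ts, message in events:
        if regex.search(message):
            counts[ts - ts % period_s] += 1  # bucket by period start
    # Periods with no matches are omitted.
    return dict(sorted(counts.items()))
```

For example, two ERROR events in the first minute and one in the second produce the datapoints `{0: 2, 60: 1}`, which an alarm could then evaluate.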

Set up alarms

In AWS, CloudWatch alarms serve the same purpose as alerts. An alarm changes state when a metric breaches a threshold, such as high CPU usage or low free disk space, and can automatically trigger actions such as sending notifications or scaling resources.
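CloudWatch alarms can require several breaching datapoints before firing, configured as "M out of N" with the evaluation periods (N) and datapoints-to-alarm (M) settings. The sketch below is a simplified local model of that rule for a greater-than-threshold alarm; it ignores CloudWatch's options for treating missing data and simply skips `None` values. The `alarm_state` function is hypothetical.

```python
from typing import List, Optional


def alarm_state(datapoints: List[Optional[float]], threshold: float,
                evaluation_periods: int, datapoints_to_alarm: int) -> str:
    """Simplified 'M out of N' evaluation for a greater-than-threshold alarm."""
    if not 1 <= datapoints_to_alarm <= evaluation_periods:
        raise ValueError("need 1 <= datapoints_to_alarm <= evaluation_periods")
    window = datapoints[-evaluation_periods:]      # the most recent N periods
    present = [d for d in window if d is not None]  # missing data is skipped
    if not present:
        return "INSUFFICIENT_DATA"
    breaching = sum(1 for d in present if d > threshold)
    return "ALARM" if breaching >= datapoints_to_alarm else "OK"
```

Requiring two of the last three CPU datapoints above 80% filters out a single brief spike while still catching sustained load.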

Your team needs cloud-native log monitoring

Cloud-native log monitoring is even more essential as your environment scales and becomes more complex. By using a full-stack observability tool like New Relic, your team can gain real-time visibility into the behavior of your applications and services, respond quickly to issues, and continuously improve performance and reliability across your stack.

Gain full-stack observability with New Relic log management

New Relic helps teams understand every single request in an application and optimize performance using real-time data from cloud-native architectures. With AIOps capabilities, a centralized logging solution, and distributed tracing, you can uncover bottlenecks, quickly troubleshoot issues, and improve your performance for every commit.

Next steps

To get started with New Relic log management capabilities, sign up for a free account. You’ll get one free full platform user, 100 GB a month of free data ingest, and unlimited basic users.

The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. Please join us exclusively at the Explorers Hub (discuss.newrelic.com) for questions and support related to this blog post. This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve or endorse the information, views or products available on such sites.