At Pomelo, we are a team with years of experience building fintech products, and we are committed to creating backend infrastructure that customers can count on. Many of us came from startups that had become quite successful, and when we started out we wanted to learn from every mistake we had made in those previous roles. We all shared a common vision: to revolutionize the fintech industry and help any company in Latin America launch its own financial services swiftly and simply using our technology. We also wanted to make it possible for our clients to scale their businesses in a matter of weeks, not years.

Here are seven steps we took to create robust, reliable fintech infrastructure from the get-go:

1. Building an internal developer platform (IDP)

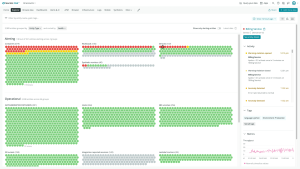

First, we built an internal developer platform (IDP) called Rocket. We use Backstage, an open-source project from Spotify, in combination with the Service Catalog feature on Amazon Web Services (AWS). This lets us share a full inventory of our services with our developers. Within our first three months, we had the first MVP of Rocket in place. We now use New Relic's APIs to feed observability metrics into Rocket so that our developers can see our quality assurance scoreboards. The developer-site unit team, for example, can access key metrics for service quality, coverage, alerts, and application time to recover.

2. Standardizing all assets on the IDP

On Rocket, we have standardized how we scaffold applications, how we deploy applications and databases, and which tools we use for observability and telemetry. We also decided which technologies we would work with: Java, Go, and Node.js, plus React for the front end. These are the only four that we use in the company. When adding APIs to the IDP, we build them with Swagger documentation, which is part of standardizing the scaffolding of applications.
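To make the idea of a standard concrete, here is a minimal sketch of how a platform could check a new service against rules like these before accepting it into a catalog. It is an illustration only, not how Rocket works: the `ServiceDescriptor` fields and the `check_standards` function are hypothetical names, and the only detail taken from this post is the list of four approved technologies.

```python
from dataclasses import dataclass

# The four technologies named in this post. Everything else here is hypothetical.
ALLOWED_STACKS = {"java", "go", "nodejs", "react"}


@dataclass
class ServiceDescriptor:
    """Hypothetical metadata a team would submit when registering a service."""
    name: str
    stack: str
    exposes_api: bool = True
    has_api_docs: bool = False  # e.g. a Swagger/OpenAPI document is attached


def check_standards(service: ServiceDescriptor) -> list:
    """Return human-readable violations; an empty list means compliant."""
    problems = []
    if service.stack.lower() not in ALLOWED_STACKS:
        problems.append(f"{service.name}: '{service.stack}' is not an approved stack")
    if service.exposes_api and not service.has_api_docs:
        problems.append(f"{service.name}: API is missing Swagger documentation")
    return problems


if __name__ == "__main__":
    for svc in (
        ServiceDescriptor("payments", "Go", has_api_docs=True),
        ServiceDescriptor("legacy-reports", "ruby"),
    ):
        print(svc.name, check_standards(svc) or "compliant")
```

A check like this could run as part of scaffolding or in CI, so that a deviation from the standard is caught before a service is deployed rather than during an incident.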

3. Using one monitoring tool

In some of my previous roles, one of the biggest mistakes I faced was having one application to monitor infrastructure, another to monitor logs, and another for application performance monitoring (APM). Investigating an incident and tracing the source of an anomaly across tools was annoying and a waste of time. When we started Pomelo, we decided on one tool because we wanted all of our developer teams to become proficient with it. That's why we chose New Relic. Besides making it easier to keep all of our information in one place, it also helped us maintain our standardization practices: every application that we build has a set of common alerts and key metrics.

4. Mapping the critical path for each business model—not each application

Common metrics for all applications will only take you so far. What we needed to do next was define the critical path for each business model, not for each application. This lets us build metrics in New Relic that match service levels for each business model. For example, some of our critical paths cover the transaction and authorization processes, flows that can span multiple applications. Each team can build its own dashboards, but we also use four general dashboards that measure the uptime, throughput, error rate, and latency of each critical path. From each one, we can drill into an individual application and see how the critical path is performing within that app.
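The difference between per-application and per-critical-path metrics is easier to see with a small example. The sketch below groups request records by critical path rather than by application and computes throughput, error rate, and 95th-percentile latency for each path. It is a simplified illustration in plain Python, not a New Relic query or Pomelo's implementation: the `Request` record, the `summarize` function, and the application names in the usage example are all hypothetical. Uptime is left out because it would need health-check data that this toy record does not carry.

```python
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Request:
    critical_path: str  # e.g. "authorization"; may span several applications
    app: str            # application that served this request
    duration_ms: float
    ok: bool            # False when the request ended in an error


def summarize(requests, window_seconds):
    """Compute throughput, error rate, and p95 latency per critical path."""
    by_path = defaultdict(list)
    for r in requests:
        by_path[r.critical_path].append(r)

    summary = {}
    for path, items in by_path.items():
        durations = sorted(r.duration_ms for r in items)
        # Nearest-rank 95th percentile.
        rank = max(1, math.ceil(0.95 * len(durations)))
        errors = sum(1 for r in items if not r.ok)
        summary[path] = {
            "throughput_rps": len(items) / window_seconds,
            "error_rate": errors / len(items),
            "p95_latency_ms": durations[rank - 1],
            # Which applications took part, for drilling down per app.
            "apps": sorted({r.app for r in items}),
        }
    return summary


if __name__ == "__main__":
    sample = [
        Request("authorization", "card-api", 100.0, True),
        Request("authorization", "auth-service", 300.0, False),
        Request("authorization", "card-api", 200.0, True),
        Request("transaction", "ledger", 50.0, True),
    ]
    for path, stats in summarize(sample, window_seconds=2).items():
        print(path, stats)
```

Keeping the list of participating applications next to each path's numbers mirrors the drill-down described above: the path-level view shows whether the business flow is healthy, and the per-app view shows where to look when it is not.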

5. Decentralizing and supporting teams

We have decentralized teams at Pomelo: each business unit works on its own apps. As a platform team, we built Rocket to decentralize our knowledge so that each team could own its work. We also have a centralized reliability team that helps individual teams get the most out of our IDP, understand which metrics can be created in New Relic and what they need to observe, and share good practices around those metrics.

6. Introducing controlled chaos

The reliability team also works with each individual team to introduce chaos engineering: once a week, we try to break one team's application, cycling through the decentralized teams. We also perform reliability surveillance: we look at a team's architecture, assess where the bottlenecks are, and work out what has to be measured. Our reliability team is small, but they are like superheroes for our organization.

We run chaos experiments in different ways: shutting down parts of a flow to see how the transaction flow changes, simulating traffic peaks, reducing or raising available memory, or injecting faults into the data, all in staging environments. Because we use Kubernetes and containers, we are confident that what we test in staging reflects what would happen in production, without having to introduce chaos into actual production. What is great is that we can measure a lot of these scenarios with metrics and features straight out of the box from New Relic, so we have all the monitoring we need inside the platform.
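For readers who have not tried fault injection before, the following is a minimal sketch of the general technique at the code level: a decorator that makes a call fail or slow down with a configurable probability. It is not the tooling we use, which is not described in this post. The `chaos` decorator, the `InjectedFault` exception, and the `authorize` function are hypothetical names, and the `enabled` flag stands in for whatever mechanism keeps such experiments restricted to staging.

```python
import random
import time
from functools import wraps


class InjectedFault(RuntimeError):
    """Raised in place of a real dependency failure during an experiment."""


def chaos(failure_rate=0.0, extra_latency_s=0.0, enabled=True, rng=None):
    """Wrap a function so that calls sometimes fail or slow down.

    failure_rate: probability (0..1) that a call raises InjectedFault.
    extra_latency_s: delay added before each call that is not failed.
    enabled: pass False (for example, outside staging) to make this a no-op.
    rng: optional random.Random, so an experiment can be replayed with a seed.
    """
    rng = rng or random.Random()

    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            if enabled:
                if rng.random() < failure_rate:
                    raise InjectedFault(f"injected fault in {func.__name__}")
                if extra_latency_s > 0:
                    time.sleep(extra_latency_s)
            return func(*args, **kwargs)
        return wrapper
    return decorator


if __name__ == "__main__":
    @chaos(failure_rate=0.3, rng=random.Random(42))
    def authorize(amount):
        return "approved"

    outcomes = []
    for _ in range(10):
        try:
            outcomes.append(authorize(100))
        except InjectedFault:
            outcomes.append("fault")
    print(outcomes)
```

The point of an experiment like this is less the failure itself than what the dashboards and alerts show while it happens: if an injected fault on a critical path does not move the error rate or trigger an alert, that is a gap in observability worth fixing.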

7. Knowing when to prioritize speed over quality

We are committed to creating quality solutions for our clients. Along the way, we also build internal processes and applications for our own use, many of them still under development. For those non-client-facing products, we occasionally sacrifice quality. This is especially the case if we are in the early stages of testing out new product ideas or features. But we never sacrifice quality around the critical path of applications that clients are using.

With these processes in place, our infrastructure can scale to millions of transactions per hour. These seven tactics have helped us become a fintech infrastructure leader in Latin America, one that our clients know they can rely on for their applications. Because they trust and rely on us, they can in turn help their own customers manage their financial health.
