Once upon a time, when I was but a wee monitoring engineer, I was noodling around in my monitoring solution and adjusted the trigger rules for an alert.

The result? I issued 772 tickets. Twice, in 15 minutes.

The worst part was that I didn't even know I'd done it until I got a visit from all seven of the NOC staff who were on helpdesk duty. They graciously "invited" me down to their area to help close the 1,544 tickets, and "encouraged" me never to do such a thing again.

With the benefit of time and experience, I can appreciate that day as a learning moment. While I’m certainly to blame for issuing the tickets, maybe the monitoring tool I was using at the time shouldn’t have automatically triggered alerts without so much as an “Are you sure about this?” warning pop-up. And maybe it should have had a solution for closing tickets en masse.

What is an alert?

An alert is a notice to get someone to do something about an immediate problem. It starts a chain of actions that culminates in a notification, such as a ticket, to us humans.

The “someone” part of the alert is just as vital as the message itself. If you create an alert that doesn’t consider when and how to engage a human, it's incomplete. Alerts should do a number of things:

- Respect employees’ time. People are the most expensive and constrained resource.

- Provide context. Alerts should show what actions have already been taken and any related data points so the person who picks up the baton can problem solve efficiently.

- Be urgent and non-optional. There should be no such thing as “for your information” alerts.

What alerts shouldn’t be

"But Leon," I hear you say, "With my current alert set up, I don't need to do anything the first time they come in. But if I see four alerts come in over 20 minutes, that's when I know there's a real problem."

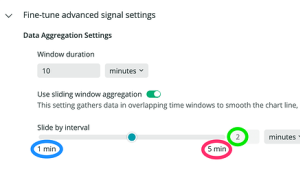

In my opinion, if you need an alert when a condition has occurred x times in y minutes, just set up the alert to trigger like that. Then when you get a notification, you know it's real and you need to act right away.
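Here's a minimal sketch of what "x times in y minutes" could look like as a trigger. It isn't tied to any particular monitoring tool; the class name `CountInWindowTrigger` and its parameters are my own inventions for illustration, and it assumes occurrences arrive in time order.

```python
from collections import deque


class CountInWindowTrigger:
    """Fires only when a condition has occurred `threshold` times
    within the last `window_seconds` (hypothetical helper)."""

    def __init__(self, threshold, window_seconds):
        self.threshold = threshold
        self.window_seconds = window_seconds
        self._occurrences = deque()  # timestamps, oldest first

    def record(self, timestamp):
        """Record one occurrence; return True if a human should be notified."""
        self._occurrences.append(timestamp)
        # Drop occurrences that have aged out of the window.
        while timestamp - self._occurrences[0] > self.window_seconds:
            self._occurrences.popleft()
        return len(self._occurrences) >= self.threshold


# Four occurrences within 20 minutes, as in the example above.
trigger = CountInWindowTrigger(threshold=4, window_seconds=20 * 60)
results = [trigger.record(t) for t in (0, 300, 600, 900)]
print(results)  # [False, False, False, True]
```

The first three occurrences stay silent, and only the fourth produces a notification, so nobody has to count alerts in their inbox to decide whether the problem is real.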

If you have a trigger but don't need a human to do something, then it's not an alert. What could it be? In the simplest possible terms:

- If no human needs to be involved, it's pure automation.

- If it doesn't need a response right now, it's a report.

- If the thing you're observing isn't a problem, it's a dashboard.

- If nothing actually needs to be done, you should delete it.

To elaborate on the last bullet point: If no action can be taken (at all, ever) on the data in question, then by definition you don't need the alert. Stop wasting cycles (and, likely, money) collecting, sending, and processing it.
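The list above can be read as a small decision procedure. The sketch below is one way to write it down; the function name, the four yes/no questions, and the order in which they're checked are my assumptions, not something any tool prescribes.

```python
def classify_trigger(action_possible, needs_human, is_problem, needs_response_now):
    """Decide what a trigger really is, following the four rules above.

    The ordering of the checks is an assumption made for this sketch.
    """
    if not action_possible:
        return "delete it"        # nothing can ever be done with the data
    if not needs_human:
        return "automation"       # a machine can handle it alone
    if not is_problem:
        return "dashboard"        # worth watching, but not a problem
    if not needs_response_now:
        return "report"           # a human cares, just not right now
    return "alert"                # a human must act on a problem, now


print(classify_trigger(True, True, True, True))    # alert
print(classify_trigger(True, True, True, False))   # report
print(classify_trigger(False, True, True, True))   # delete it
```

Only a trigger that survives all four questions earns the right to interrupt a person.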

Monitoring is more than setting up alerts

Monitoring and observability are hard concepts with a frequently shifting set of terms and methodologies. (And that's to say nothing of the vendor buzzwords and misuse of terms!)

Nevertheless, it's important to differentiate the end result (alerts) from the actions that enable those results. Your alerting maturity will suffer if you think of monitoring as simply the means by which you generate alerts. Monitoring is simply the ongoing, systematic collection of data from a set of target systems. Everything else—dashboards, reports, alerts, and the rest—is a happy byproduct of doing monitoring in the first place.

How do alerts relate to observability?

Without getting too deeply philosophical, observability is partially about how important data about your application comes to you and the level of sophistication used to process and analyze that data once it's collected. But alerts themselves aren’t the sum total of observability, nor are they synonymous with monitoring.

Over the course of my career, I've seen plenty of examples of entire organizations that drastically misunderstand this. At one job, I discovered that the calculation used for the system uptime report was this:

100% - (the number of tickets created in that period) = uptime percentage

If a system had an issue that cut a single ticket during a 30-day period, the uptime was reported as 99%. This is clearly nonsensical, but what should they have done? I find alerts can often be most valuable when they actually feed back into the monitoring system as events. These events can be displayed to help contextualize changes in performance, capacity, and so on.
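To see why the ticket-count formula breaks down, here's a small worked comparison. The time-based calculation is a common way to express uptime and is my assumption here, not what that organization used; the 10-minute outage is a made-up figure.

```python
MINUTES_IN_30_DAYS = 30 * 24 * 60  # 43,200 minutes


def ticket_based_uptime(tickets):
    """The flawed formula from the report: 100% minus the ticket count."""
    return 100.0 - tickets


def time_based_uptime(downtime_minutes, period_minutes=MINUTES_IN_30_DAYS):
    """Assumed alternative: share of the period the system was actually up."""
    return 100.0 * (period_minutes - downtime_minutes) / period_minutes


print(ticket_based_uptime(1))           # 99.0
print(round(time_based_uptime(10), 3))  # 99.977 (hypothetical 10-minute outage)
print(time_based_uptime(432))           # 99.0
print(ticket_based_uptime(772))         # -672.0
```

Measured by time, a 99% month means 432 minutes (over seven hours) of downtime, which is a very different claim than "one ticket was opened." And under the ticket formula, my 772-ticket mishap would have produced an uptime of -672%.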

Let’s walk through a hypothetical example.

Sample alert scenario

Let’s say your monitoring detects an issue with the online order entry system. Completed transactions (sales) have dropped by more than 20%, and the drop has persisted for over 30 minutes. Over the same period, the number of users connected to the web front end has dropped by more than 30%. The hardware utilization (CPU, RAM, etc.) hasn't dropped in parallel.
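As a rough sketch, the detection condition in this scenario could be expressed as a multi-element trigger like the one below. The `OrderSystemSnapshot` type, its field names, and the 5% tolerance for "hasn't dropped in parallel" are all hypothetical; a real tool would express this in its own query or condition language.

```python
from dataclasses import dataclass


@dataclass
class OrderSystemSnapshot:
    """Percentage drops versus a baseline, plus how long they have lasted."""
    sales_drop_pct: float
    users_drop_pct: float
    hardware_drop_pct: float
    minutes_sustained: float


def order_entry_trigger(snap, hardware_tolerance_pct=5.0):
    """Return True when the scenario's conditions all hold at once."""
    sales_down = snap.sales_drop_pct > 20
    users_down = snap.users_drop_pct > 30
    sustained = snap.minutes_sustained > 30
    # Assumption: "hasn't dropped in parallel" means within a small tolerance.
    hardware_steady = snap.hardware_drop_pct <= hardware_tolerance_pct
    return sales_down and users_down and sustained and hardware_steady


print(order_entry_trigger(OrderSystemSnapshot(25, 35, 1, 31)))  # True
print(order_entry_trigger(OrderSystemSnapshot(25, 35, 1, 10)))  # False
```

The second snapshot shows the same drops but only ten minutes in, so the trigger stays quiet until the condition has persisted.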

Let’s assume that your current monitoring system does a few things. First, it attempts evasive maneuvers by flushing the application pool, restarting key services, and using orchestration to rebuild the environment. Next, it creates an event that feeds back into the monitoring system as a flag to say "I did this thing at this time due to this trigger."

Let’s say that ten minutes later, conditions haven’t changed. Now the system increases capacity, in the form of additional containers in the load-balanced cluster or by re-provisioning the VM with additional CPU. Once again, the monitoring process also creates an event flag for the actions it took.

Ten minutes later, conditions still haven't changed. Now an alert notifies a human via a ticket and another event flag is inserted back into the monitoring pipeline.
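The escalation described so far (automated fix, then more capacity, then a human) can be sketched as a short ladder that records an event flag for every step it takes. Everything named here, including `Event`, `escalate`, and the `still_failing` callback, is hypothetical and stands in for whatever your monitoring and orchestration tools actually provide.

```python
from dataclasses import dataclass


@dataclass
class Event:
    """A flag saying: I did this thing at this time due to this trigger."""
    minute: int
    action: str
    trigger: str


STEPS = (
    "flush app pool, restart services, rebuild environment",
    "increase capacity",
    "open a ticket for a human",
)


def escalate(still_failing, trigger, step_minutes=10):
    """Walk the ladder, one step every `step_minutes`, while the problem lasts."""
    events = []
    for i, action in enumerate(STEPS):
        minute = i * step_minutes
        if not still_failing(minute):
            break  # an earlier step worked; no further escalation needed
        events.append(Event(minute, action, trigger))
    return events


# Nothing helps, as in the scenario: a human is ticketed at minute 20.
for event in escalate(lambda minute: True, "order entry degraded"):
    print(event.minute, event.action)
```

If the first automated fix had worked, the ladder would stop after a single event flag and no human would be interrupted at all.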

What’s causing these conditions? In my imaginary situation, two things went wrong. First, an orchestration rule to migrate the entire system to a new region failed, which meant that users experienced unacceptable delays in page-load and processing times. Second, a library update caused a couple of key subroutines to fail. Pages were loading, but items weren't displaying correctly, so customers couldn't actually select them to purchase.

A good monitoring tool would give enough context that the person on call could suss out this issue and resolve it. The event flags prove helpful after the fact, because months later when looking at performance graphs, sales charts, and so on, the flags provide instant insight into why there were spikes or dips, as well as what was done about it.

Improving your alerts

As I emphasized in my live talk at DevOpsDays, creating mature and effective alerts means committing to having an open, honest, and ongoing conversation with the folks who are receiving those alerts. Even when the recipient is yourself.

Every so often, you need to review whether your alerts are still functioning as expected because it's possible that the application has changed significantly and no longer behaves in the way the alert expects. There are innumerable reasons why that would be, such as a change in usage conditions.

You don’t actually need to know why the alert is no longer a good fit. Instead, the goal is to understand what isn't working and adjust. That could mean a minor tweak to trigger conditions or actions. Or it might mean scrapping the whole thing.

But if you never ask, you'll be stuck with an ever-growing list of alerts. At best, they do nothing but take up space. At worst, they become more of the background noise that causes devs, ops, and everyone in between to double down on their assertion that alerts in general suck.

Next steps

If you’re looking for the simplest example of setting up New Relic, check out this blog post. In my next blog on this topic, I’m going to dive into some specifics around alerting, such as multi-element triggers, trigger actions, and more. In the meantime, you can try your hand at building alerts by creating a New Relic account, checking out these instructions, and getting to work.

The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. Please join us exclusively at the Explorers Hub (discuss.newrelic.com) for questions and support related to this blog post. This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve or endorse the information, views or products available on such sites.