Enterprise data warehouses (EDWs) have been the bedrock of data analytics for decades. EDW technologies have continued to evolve to keep pace with the growing volume, variety, and velocity of data, enabling a broader set of business use cases.

The first generation of EDWs, which dominated for an extended period, relied on traditional SQL databases. These systems were effective for managing relatively small datasets that could comfortably reside on a single on-premises server running a database such as IBM Db2, Oracle, or SQL Server.

As businesses demanded the ability to process larger volumes of data with fast response times, the next generation of EDWs emerged. This generation was characterized by specialized appliances like Netezza (now part of IBM), Teradata, and Oracle Exadata. This was the era of high-performance, purpose-built systems that combined optimized hardware and software to deliver superior performance and scalability for data warehousing workloads.

Then came the era of Hadoop, a distributed computing framework that revolutionized big data processing. Hadoop was the first truly open-source massively parallel processing (MPP) software that could run on commodity hardware. It unlocked multiple big data processing use cases and spurred a new layer of tools that became part of its ecosystem.

Despite its revolutionary impact and widespread adoption, Hadoop had some drawbacks. It tightly coupled storage and compute, and it required a large data platform team with specialized expertise to set up, manage, and maintain the Hadoop clusters. This led to the rise of next-generation cloud-based EDWs and data lakes, such as Snowflake, BigQuery, and Databricks, which addressed some of these challenges.

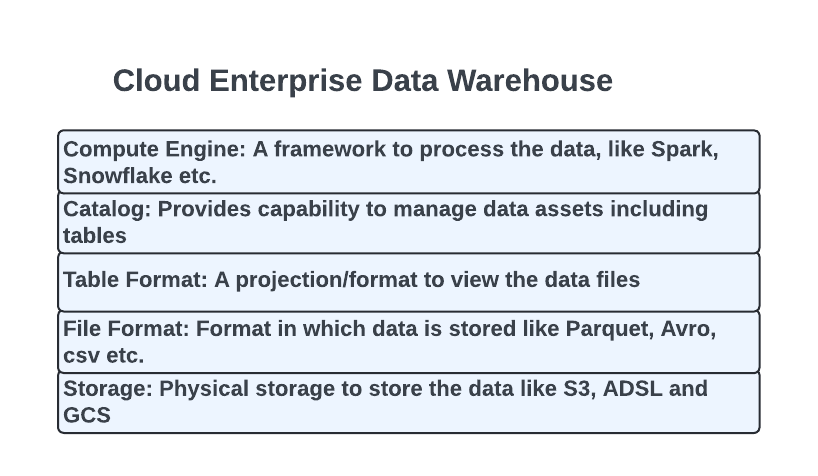

While the current generation of cloud EDW and data lake technologies has made significant strides in reducing complexity and improving speed and ease of management, the landscape is once again evolving. The industry is now prioritizing cost reduction, avoidance of vendor lock-in, interoperability, and the use of optimized compute engines tailored to specific workloads.

The factors that are driving the change include:

- Cost: While cloud data warehouse solutions enable quick onboarding and adoption, they can lead to higher costs over time as data volumes and workloads grow.

- Fit-for-purpose compute engines: Diverse workloads such as ingestion, transformation, artificial intelligence (AI)/machine learning (ML), ad-hoc querying, and reporting each call for different compute frameworks, and many frameworks are built from the ground up for a specific type of workload. For example, Spark, with its Resilient Distributed Datasets (RDDs), excels at processing large datasets in memory and performing iterative data operations.

- Vendor lock-in: In most cloud-based EDW technologies, all layers—such as storage, file format, table format, catalog, and compute engine—are managed by a single vendor. This setup can lead to vendor lock-in, limiting flexibility and potentially increasing costs.

Elements and layers of data lakes and EDW

What is changing now

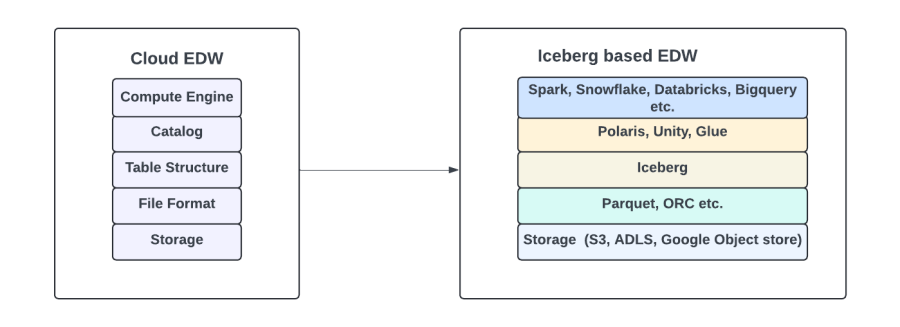

The emergence of open-source table formats such as Apache Iceberg has further decoupled the layers of an EDW. Iceberg is an open-source table format that offers a multitude of capabilities to support EDW features on cloud storage like S3. Key features include schema evolution, support for multiple compute engines, time travel, and ACID (atomicity, consistency, isolation, and durability) transactions, all of which contribute to its popularity.
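Time travel and ACID commits both follow from how Iceberg tracks table state: each commit writes a new, immutable metadata version that lists the table's snapshots, and the catalog atomically swaps its pointer from the old metadata to the new one (optimistic concurrency). The Python sketch below models that idea in memory. It is a simplified illustration under those assumptions, not Iceberg's or PyIceberg's API; the names `InMemoryCatalog`, `Snapshot`, `TableMetadata`, `append`, and `snapshot_as_of` are hypothetical.

```python
from dataclasses import dataclass
from threading import Lock
from typing import Optional, Tuple

# Simplified, hypothetical model of Iceberg-style commits and time travel (not the Iceberg API).


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    timestamp_ms: int                # commit time in milliseconds since the epoch
    data_files: Tuple[str, ...]      # every data file visible in this snapshot


@dataclass(frozen=True)
class TableMetadata:
    # Each commit produces a new, immutable metadata version.
    version: int
    snapshots: Tuple[Snapshot, ...] = ()

    @property
    def current_snapshot(self) -> Optional[Snapshot]:
        return self.snapshots[-1] if self.snapshots else None


class CommitConflict(Exception):
    """Another writer committed first; the caller should reload and retry."""


class InMemoryCatalog:
    """Hypothetical catalog: holds a pointer to the table's current metadata."""

    def __init__(self) -> None:
        self._current = TableMetadata(version=0)
        self._lock = Lock()

    def load(self) -> TableMetadata:
        return self._current

    def commit(self, base: TableMetadata, new: TableMetadata) -> None:
        # Atomic compare-and-swap: succeeds only if the table still points at `base`.
        with self._lock:
            if self._current is not base:
                raise CommitConflict(f"table changed since version {base.version}")
            self._current = new


def append(catalog: InMemoryCatalog, snapshot_id: int, timestamp_ms: int, new_files) -> None:
    """Commit a new snapshot containing the previous snapshot's files plus `new_files`."""
    base = catalog.load()
    previous = base.current_snapshot.data_files if base.current_snapshot else ()
    snap = Snapshot(snapshot_id, timestamp_ms, previous + tuple(new_files))
    catalog.commit(base, TableMetadata(base.version + 1, base.snapshots + (snap,)))


def snapshot_as_of(metadata: TableMetadata, timestamp_ms: int) -> Optional[Snapshot]:
    """Time travel: the latest snapshot committed at or before `timestamp_ms`."""
    candidates = [s for s in metadata.snapshots if s.timestamp_ms <= timestamp_ms]
    return max(candidates, key=lambda s: s.timestamp_ms) if candidates else None


if __name__ == "__main__":
    catalog = InMemoryCatalog()
    append(catalog, 1, 1_000, ["a.parquet"])
    append(catalog, 2, 2_000, ["b.parquet"])
    table = catalog.load()
    print(table.current_snapshot.data_files)        # ('a.parquet', 'b.parquet')
    print(snapshot_as_of(table, 1_500).data_files)  # ('a.parquet',)
```

Because metadata versions are never modified in place, a reader that loaded an older version keeps a consistent view while writers commit, and a writer working from a stale version gets a `CommitConflict` instead of silently overwriting someone else's changes.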


Iceberg-based data lake architecture

The primary objective of a data lake or an EDW is to provide capabilities that allow businesses to develop accurate and timely insights at the lowest possible cost, while optimizing data accessibility and promoting data-driven decision-making across the organization.

Iceberg allows you to store all your data in cloud object storage, such as Amazon S3, using open file formats like Parquet, ORC, and Avro.

Iceberg provides a table specification on top of the data files, and it also manages, organizes, and tracks all of the files that make up a table. Iceberg manages this metadata through three kinds of files:

- Metadata file: Contains table-wide metadata, including the unique table ID, the schema, the partition spec, and the current and previous snapshots.

- Manifest list: Contains a list of manifest files, the path to each manifest file, partition spec ID, etc.

- Manifest file: Contains a list of the data files that make up a table, along with statistics about each one; for example, the path to the data file, its file format (such as Parquet), record count, null value counts, and lower and upper bounds for column values.
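To make the three levels above concrete, the Python sketch below models them as plain dataclasses and shows why the per-file lower and upper bounds matter: a query engine can skip data files whose bounds cannot match a filter without ever opening them. This is a heavily simplified, hypothetical model (real metadata files are JSON, manifests are Avro, and the manifest list also carries partition summaries for coarser pruning); the class names, `plan_scan`, and the bucket paths are illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import date
from typing import Any, Dict, List

# Simplified, hypothetical model of Iceberg's metadata tree; only the fields
# needed for file pruning are included.


@dataclass(frozen=True)
class DataFile:
    path: str
    file_format: str
    record_count: int
    lower_bounds: Dict[str, Any]   # column name -> smallest value in the file
    upper_bounds: Dict[str, Any]   # column name -> largest value in the file


@dataclass(frozen=True)
class ManifestFile:
    path: str
    data_files: List[DataFile]


@dataclass(frozen=True)
class ManifestList:
    path: str
    manifests: List[ManifestFile]


@dataclass(frozen=True)
class MetadataFile:
    table_uuid: str
    current_snapshot_id: int
    manifest_lists: Dict[int, ManifestList]   # snapshot id -> that snapshot's manifest list


def plan_scan(metadata: MetadataFile, column: str, low: Any, high: Any) -> List[str]:
    """Walk metadata file -> manifest list -> manifests, keeping only data files whose
    [lower, upper] bounds for `column` can overlap the filter range [low, high]."""
    manifest_list = metadata.manifest_lists[metadata.current_snapshot_id]
    selected = []
    for manifest in manifest_list.manifests:
        for data_file in manifest.data_files:
            lower = data_file.lower_bounds.get(column)
            upper = data_file.upper_bounds.get(column)
            # Missing stats mean the file cannot be ruled out, so it is kept.
            if lower is None or upper is None or (lower <= high and upper >= low):
                selected.append(data_file.path)
    return selected


if __name__ == "__main__":
    jan = DataFile("s3://example-bucket/orders/data/00.parquet", "PARQUET", 1000,
                   {"order_date": date(2024, 1, 1)}, {"order_date": date(2024, 1, 31)})
    feb = DataFile("s3://example-bucket/orders/data/01.parquet", "PARQUET", 1000,
                   {"order_date": date(2024, 2, 1)}, {"order_date": date(2024, 2, 29)})
    metadata = MetadataFile(
        table_uuid="example-uuid",
        current_snapshot_id=2,
        manifest_lists={2: ManifestList("snap-2.avro", [ManifestFile("m0.avro", [jan, feb])])},
    )
    # Only the February file can contain rows for mid-February.
    print(plan_scan(metadata, "order_date", date(2024, 2, 10), date(2024, 2, 15)))
```

Running the demo prints only the `01.parquet` path: the January file is pruned from metadata alone, which is how column statistics in manifests cut the amount of data a compute engine has to read from object storage.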

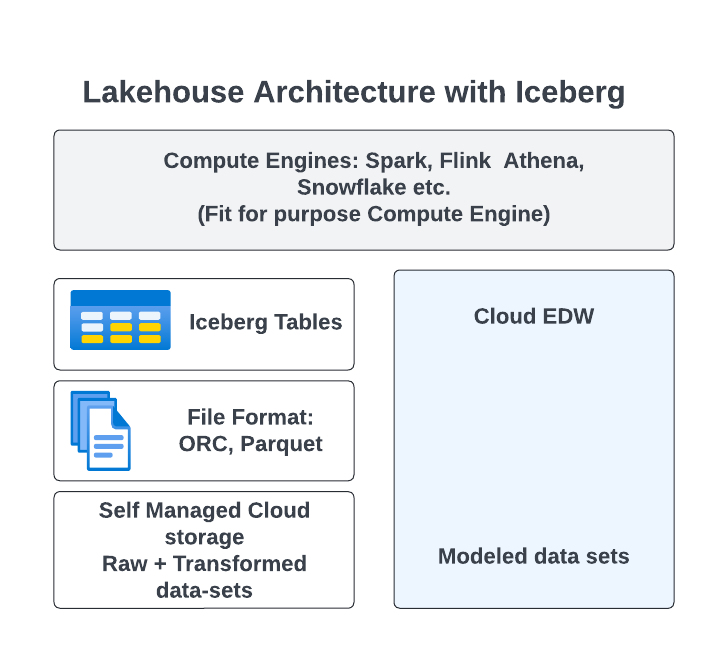

With Iceberg, companies can migrate to a hybrid lakehouse architecture that combines the scalability of data lakes with the performance and governance of data warehouses. This approach helps keep costs down and avoid vendor lock-in, offering the best of both worlds.

In the proposed architecture, companies can store raw and cleansed/transformed datasets in Iceberg tables on cloud storage such as S3 buckets. Modeled datasets, where deeper business logic is applied after the raw and cleansed stages, can be housed in cloud EDWs such as Snowflake, Databricks, or BigQuery.

This architecture gives organizations the following benefits:

- Fit-for-purpose compute engines: Pick the compute engine best suited to each workload, ensuring efficient resource utilization.

- Cost-effective data processing: Moving raw datasets to self-managed cloud storage and processing them with open-source compute frameworks can significantly reduce costs.

- Reduced vendor lock-in: Separating the storage, table format, catalog, and compute layers reduces dependence on any single vendor.

Conclusion

An architecture built on Iceberg or another open table format unbundles the EDW, enabling organizations to achieve greater flexibility, scalability, and cost-efficiency in their data management initiatives.

Next steps

As you migrate to a hybrid data lake architecture with Iceberg, one critical aspect is making sure your data lake stays healthy and performs optimally. That requires comprehensive monitoring and observability across all of the open-source components.

New Relic provides full-stack observability for the different layers of these open-source components, from compute frameworks like Spark to storage layers like S3. For Spark, the New Relic standalone integration polls the Apache Spark REST API for metrics and pushes them into New Relic using the Metric API. For S3, New Relic monitors metrics such as bucket size and bucket object counts.
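As a rough illustration of the poll-and-push pattern described above (not the New Relic integration's actual code), the Python sketch below reads executor summaries from Spark's monitoring REST API (`/api/v1/applications/{app-id}/executors` on the driver UI, typically port 4040) and reshapes them into the JSON body accepted by the New Relic Metric API. The metric names (`spark.executor.*`), attribute names, and driver URL are assumptions chosen for illustration; check the Spark monitoring and New Relic Metric API documentation before relying on any field names.

```python
import json
import time
import urllib.request

METRIC_API_URL = "https://metric-api.newrelic.com/metric/v1"

# Executor fields forwarded as gauges (field names from Spark's executor summary JSON).
EXECUTOR_GAUGES = ("activeTasks", "failedTasks", "completedTasks", "memoryUsed", "diskUsed")


def fetch_executors(driver_url, app_id):
    """Poll the Spark REST API, e.g. driver_url='http://localhost:4040' (assumed)."""
    url = f"{driver_url}/api/v1/applications/{app_id}/executors"
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


def to_metric_payload(app_id, executors, now_ms=None):
    """Convert executor summaries into a Metric API request body (a list of batches)."""
    now_ms = int(time.time() * 1000) if now_ms is None else now_ms
    metrics = []
    for executor in executors:
        for key in EXECUTOR_GAUGES:
            if key in executor:
                metrics.append({
                    "name": f"spark.executor.{key}",  # hypothetical naming scheme
                    "type": "gauge",
                    "value": executor[key],
                    "timestamp": now_ms,              # milliseconds since the epoch
                    "attributes": {"executor.id": executor.get("id")},
                })
    return [{"common": {"attributes": {"spark.app.id": app_id}}, "metrics": metrics}]


def push_metrics(payload, api_key):
    """POST the payload to the Metric API; `api_key` is a New Relic ingest (license) key."""
    request = urllib.request.Request(
        METRIC_API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Api-Key": api_key, "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as resp:
        return resp.status
```

A scheduler (cron, or a loop with `time.sleep`) would call `fetch_executors`, `to_metric_payload`, and `push_metrics` on an interval. Keeping the transformation in a pure function makes it easy to test without a running Spark driver or network access.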


The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. Please join us exclusively at the Explorers Hub (discuss.newrelic.com) for questions and support related to this blog post. This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve or endorse the information, views or products available on such sites.