Let's be honest. When a planned peak event hits—whether it's Black Friday, a major product launch, or that end-of-year scramble for tax returns—you can guarantee one thing: everyone is suddenly an expert on your system.

The "eyeball count" explodes. Questions fly in from all angles, from execs you never knew existed. Robust businesses run on data, and peak events ratchet up the pressure to provide answers that are quick, succinct and "actionable yesterday".

Observability is ideally suited to answer many of these questions, even those you weren’t expecting. It provides a wealth of real-time information to draw on. But how do you mine that data for actionable insight? How do you do it quickly and how do you present it in a way the rest of the business actually understands?

In this post, we’ll explore techniques to improve how you gather and present data to stakeholders outside your technical realm, be that reporting up to the business or across to other service teams, suppliers or even consumers. These skills won't just help you ride out the peak; they'll help you communicate better every day.

We’ll cover:

- Talking business currency: Learn how to communicate with the business in terms they readily understand.

- What does “normal” look like? What is good, and what is bad? Context is everything, and during peak the context can shift unexpectedly.

- Beyond data to wisdom: Provide actionable insight, not just data.

- Impactful dashboards: Less is more. Top tips for dashboards that drive action.

- Finding the data you need: You might be surprised by the insight you can glean from the data you already collect.

1. Talking business currency

Learn how to communicate with the business in terms they readily understand.

Clear communication is always essential, especially during busy times. Day-to-day, you're intimately familiar with your data and what it means for your services. But when you communicate beyond your team, misunderstandings, wrong assumptions, and bad decisions can creep in.

While you may be interested in pod eviction rates or LCP variations, your stakeholders probably aren’t. To improve collaboration, you must translate your technical metrics into their "business currency".

What is "business currency"? It's the pragmatic proxy for success your stakeholder already understands. While final success is financial, their daily priorities are more specific.

To find it, learn their priorities. Are they focused on release velocity, basket size, failed payments, or resource costs? Maybe it's flights booked or claims approved.

When you report in their currency, you remove friction. They can act on your data immediately without needing to understand your internal metrics first.
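The following Python code is a minimal sketch of that translation. It assumes a checkout service where each failed request is a lost order worth the average basket value, and turns an error count into figures a commercial stakeholder can act on. The class, the function and all the numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CheckoutWindow:
    """Hypothetical one-hour summary of checkout telemetry."""

    attempts: int  # checkout requests received in the hour
    errors: int  # checkout requests that failed


def to_business_currency(window: CheckoutWindow, avg_basket_value: float) -> dict:
    """Translate a technical error count into figures a stakeholder can act on.

    Simplifying assumption: every failed checkout is a lost order worth the
    average basket value (in reality some customers retry successfully).
    """
    error_rate = window.errors / window.attempts if window.attempts else 0.0
    return {
        "error_rate_pct": round(error_rate * 100, 2),  # the engineering view
        "failed_orders_per_hour": window.errors,  # the business view...
        "revenue_at_risk_per_hour": round(window.errors * avg_basket_value, 2),  # ...in money
    }


report = to_business_currency(CheckoutWindow(attempts=12_000, errors=180), avg_basket_value=42.50)
print(report)
```

The engineering view says "1.5% error rate". The business view says 180 failed orders and 7,650 in revenue at risk every hour, which is far easier to act on.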

2. What does “normal” look like? What is good, and what is bad?

Context is everything, and during peak the context can shift unexpectedly.

On a typical day, you know what "normal" looks like. You have dashboards, SLOs, and alerts for your error rates, average basket sizes, or sales per minute.

Peak events, however, are not typical. You'll face extreme throughput and unusual load patterns. Your risk appetite changes as the cost of downtime skyrockets. In these moments, the data you rely on must account for this new context.

Let's use an example: failed sign-in attempts. You're used to occasional failures. But during peak, this number skyrockets, dashboards turn red, and all your alerts fire.

Is this a real problem? Maybe the login service is broken. But it's also likely that the sheer volume of total logins has increased, driving the count of errors with it. The rate of failures might be perfectly healthy.

The fix is simple: normalise the signal. Convert the discrete count (failed logins) into a throughput-based rate (e.g., failed logins per 1,000 attempts). This immediately tells you if it's a real issue or just a side effect of peak traffic.
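Here is a quick worked example in Python. The numbers are made up, and peak traffic is assumed to be ten times a normal day. It shows why the normalised rate is the better signal.

```python
def failures_per_thousand(failed: int, total: int) -> float:
    """Normalise a raw failure count into failures per 1,000 attempts."""
    if total == 0:
        return 0.0  # no attempts, so there is no meaningful rate
    return failed / total * 1000


# Hypothetical sign-in numbers: peak traffic is ten times a normal day.
normal_day = failures_per_thousand(failed=50, total=20_000)
peak_day = failures_per_thousand(failed=500, total=200_000)  # 10x the failures...
peak_broken = failures_per_thousand(failed=5_000, total=200_000)  # ...versus a real fault

print(f"normal: {normal_day:.1f}, peak: {peak_day:.1f}, broken: {peak_broken:.1f} per 1,000")
```

Between the normal day and the peak day, the raw failure count rose tenfold but the rate stayed at 2.5 per 1,000, so peak traffic alone explains the increase. Only the third case, at 25 per 1,000, points to a genuine fault.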

When planning for peak, review your dashboards and alerts in that context. Do they still tell the correct story? Load testing is the perfect time to check this. You might find that "normal" thresholds need adjusting for high-load scenarios.

This isn't just a peak problem. An order rate that's perfectly normal at 3 AM would signal an outage at 3 PM, and you don't want a static threshold paging you every night.

You can address this in a few ways. For seasonal variation, anomaly detection or outlier detection can help. You could also use muting rules for out-of-hours notifications or even manipulate the signal to be time-aware.

For example, to only alert on low order rates during business hours, you could use a query like this:

FROM Orders SELECT if(hourOf(timestamp) NOT IN ('20:00','21:00',...,'07:00','08:00'), rate(count(*), 1 minute), 10)

This forces the signal to a safe value (10) outside business hours while reporting the true value within them.
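The same logic can be sketched in Python. This sketch makes three assumptions:

- Out-of-hours runs from 20:00 to 08:59. The query elides the full list, so this range is inferred from its first and last entries.
- The alert fires when the order rate drops below some threshold lower than 10.
- The function name is hypothetical.

```python
from datetime import datetime

# Assumed out-of-hours window, 20:00 to 08:59 (the full list is elided in the query).
OUT_OF_HOURS = set(range(20, 24)) | set(range(0, 9))
SAFE_VALUE = 10.0  # reported out of hours so a low-order-rate alert never fires


def time_aware_order_rate(observed_rate: float, at: datetime) -> float:
    """Return the real order rate in business hours and a safe constant otherwise."""
    if at.hour in OUT_OF_HOURS:
        return SAFE_VALUE
    return observed_rate


# 03:00: a near-zero rate is expected, so the safe value is reported instead.
print(time_aware_order_rate(0.5, datetime(2025, 11, 28, 3, 0)))
# 15:00: the same rate is reported as-is, so a low-rate alert can fire.
print(time_aware_order_rate(0.5, datetime(2025, 11, 28, 15, 0)))
```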

For a more comprehensive approach, use observability-as-code (e.g., Terraform). This lets you define and manage multiple "postures" for your alerts and dashboards. You could have configurations for "normal," "peak," and "holidays," and switch between them easily—or even automate the switch based on traffic.

You can find a basic example of posture switching with Terraform here: https://github.com/jsbnr/nr-terraform-posture-switch
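The linked repository uses Terraform. The Python sketch below only illustrates the underlying idea: named postures, plus an automated switch based on traffic. The posture names come from the text above. The thresholds, the "3x baseline" rule and the function name are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Posture:
    name: str
    max_error_rate_pct: float  # alert when the error rate rises above this
    min_orders_per_min: float  # alert when the order rate falls below this


# Hypothetical thresholds; real values would come from your baselines and load tests.
POSTURES = {
    "normal": Posture("normal", max_error_rate_pct=1.0, min_orders_per_min=20),
    "peak": Posture("peak", max_error_rate_pct=0.5, min_orders_per_min=200),
    "holidays": Posture("holidays", max_error_rate_pct=2.0, min_orders_per_min=5),
}


def choose_posture(current_rpm: float, baseline_rpm: float, on_holiday: bool = False) -> Posture:
    """Hypothetical rule: traffic at 3x baseline or more means 'peak', whatever the calendar says."""
    if current_rpm >= 3 * baseline_rpm:
        return POSTURES["peak"]
    if on_holiday:
        return POSTURES["holidays"]
    return POSTURES["normal"]


print(choose_posture(current_rpm=9_000, baseline_rpm=2_000).name)
print(choose_posture(current_rpm=2_500, baseline_rpm=2_000).name)
```

In practice, the selected posture would feed whatever applies your observability-as-code, for example a variable passed to Terraform.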

3. Beyond data to wisdom

Provide actionable insight, not just data.

You collect a huge variety of data, but raw data is hard for the human (or AI) brain to understand. Dashboards help by aggregating data into charts. But simply presenting data is only the first step. How do you make it meaningful and actionable?

A simple technique is the "Information Funnel." You gradually refine your raw data, improving its clarity with knowledge and wisdom until it becomes an accessible, meaningful, and actionable insight.

Let’s look at a simple example of this process in action…

INFORMATION

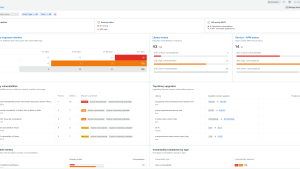

This bar chart shows service hosting costs, ordered from most to least expensive. Our attention immediately goes to the "product catalog" and "currency service." This is just information. The ordering helps, but are we looking at the real problem?

KNOWLEDGE

This second chart injects knowledge, specifically historical context: we now see the change in cost since last week. The "adservice" has increased the most, shifting our focus.

We've also added summary data ("3 services are more expensive") so the viewer doesn't have to count. We've defined what "more" and "less" expensive means, building our knowledge directly into the widget and reducing cognitive load on the viewer.

But we can take this one step further down the funnel…

WISDOM

This final widget applies wisdom: our understanding of business targets. Services fluctuate, but now we see costs relative to our budget. The "store-frontend" is critically over target, and two others need attention.

We've summarised the action ("1 service is critical and requires action") and, crucially, translated the risk back into business currency: "Total cost at risk."
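The whole funnel can be sketched in a few lines of Python. The cost figures are made up, and the service names only echo the charts described above. Two rules are hypothetical:

- The "attention" band is spend within 10% of budget.
- "Cost at risk" is total spend above budget.

```python
from dataclasses import dataclass


@dataclass
class ServiceCost:
    name: str
    this_week: float
    last_week: float
    budget: float


# Hypothetical weekly hosting costs; the service names echo the charts above.
costs = [
    ServiceCost("productcatalog", this_week=950, last_week=920, budget=1000),
    ServiceCost("currencyservice", this_week=850, last_week=860, budget=900),
    ServiceCost("adservice", this_week=600, last_week=350, budget=800),
    ServiceCost("store-frontend", this_week=700, last_week=560, budget=500),
]

# INFORMATION: raw cost, ordered from most to least expensive.
information = sorted(costs, key=lambda s: s.this_week, reverse=True)

# KNOWLEDGE: historical context (change since last week) plus a ready-made summary.
more_expensive = [s.name for s in costs if s.this_week > s.last_week]
biggest_increase = max(costs, key=lambda s: s.this_week - s.last_week)


# WISDOM: cost relative to budget. Hypothetical rule: over budget is critical,
# within 10% of budget needs attention.
def status(s: ServiceCost) -> str:
    if s.this_week > s.budget:
        return "critical"
    if s.this_week >= 0.9 * s.budget:
        return "attention"
    return "ok"


critical = [s.name for s in costs if status(s) == "critical"]
attention = [s.name for s in costs if status(s) == "attention"]
# Assumed definition of "cost at risk": total spend above budget.
cost_at_risk = sum(s.this_week - s.budget for s in costs if s.this_week > s.budget)

print("Most expensive:", [s.name for s in information[:2]])
print(f"{len(more_expensive)} services are more expensive; biggest rise: {biggest_increase.name}")
print(f"{len(critical)} service is critical and requires action: {critical}")
print(f"Total cost at risk: {cost_at_risk}")
```

Each stage reuses the same data. What changes is how much thinking has been done on the viewer's behalf.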

Take a look at your own dashboards, especially those shared outside your team. How can you apply the Information Funnel to move beyond raw data and provide clear, actionable wisdom?

4. Impactful dashboards

Less is more. Top tips for dashboards that drive action.

Dashboards are a common way to share information. New Relic makes it easy to surface telemetry and create engaging views. But it’s easy to get carried away and build a "kitchen sink" dashboard.

While this might work for you, it's crucial to consider how external stakeholders will consume it.

Who is this dashboard for?

It is crucial to answer this question before building. It’s incredibly difficult to build a dashboard that works for everyone.

Identify the specific stakeholders you're trying to communicate with and build a dashboard (or page) specifically for them.

Consistency and familiarity

When you need information quickly, a familiar format is essential. You don’t want to waste time trying to understand how the dashboard works.

Much like a car's dashboard, consistency in terminology, time frames, and business currency helps you comprehend data efficiently.

Consider implementing a style guide for widely-viewed dashboards. For example: important data is top-left. Detailed breakdowns are on a secondary tab. Include the on-call contact top-right. Whatever style you choose, consistency helps people action the data faster.

Operations or report?

Dashboards serve many purposes. One common differentiator is real-time operational data versus long-term trend reporting. These two goals rarely work well on a single dashboard and should be on different pages or separate dashboards entirely.

An operational dashboard is focused on what's happening right now. It should answer:

- Are we on fire?

- Are things getting worse or better?

- How widespread is the issue?

- What is the business impact?

A report dashboard looks over longer time windows, focusing on trends:

- Has performance degraded over time?

- Are we meeting our SLOs?

- How does today’s business compare with last week?

These two types of dashboards have different default time windows and priorities. Managing them separately ensures you aren’t confusing the viewer.

Is it actionable?

While vanity dashboards can be useful, it's best if a dashboard offers actionable insights. When adding a widget, ask: “What question is this chart answering?”

Suppose you have data on cancellation rates. You could list the rate for each restaurant, answering, “What is the cancellation rate for each restaurant?”

But a better, more actionable question is, “Which restaurants have a cancellation rate we should be concerned about?” As we learned from the Information Funnel, this extra pre-processed thought makes the data far more actionable.
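Here is a minimal Python sketch of the difference, using hypothetical restaurant data. Instead of listing every rate, the function answers only the actionable question. The 5% threshold and the minimum sample size are assumptions you would tune to your own business.

```python
# Hypothetical data: restaurant -> (orders, cancellations).
orders = {
    "Pizza Palace": (1200, 18),
    "Noodle Bar": (300, 27),
    "Curry House": (800, 12),
    "Taco Stand": (50, 6),
}

THRESHOLD_PCT = 5.0  # assumed level at which a cancellation rate becomes a concern
MIN_ORDERS = 100  # skip tiny samples, where one cancellation skews the rate


def concerning(data: dict[str, tuple[int, int]]) -> list[tuple[str, float]]:
    """Answer 'which restaurants should we worry about?', worst first."""
    flagged = []
    for name, (total, cancelled) in data.items():
        if total < MIN_ORDERS:
            continue
        rate = cancelled / total * 100
        if rate > THRESHOLD_PCT:
            flagged.append((name, round(rate, 1)))
    return sorted(flagged, key=lambda item: item[1], reverse=True)


print(concerning(orders))
```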

Does it inform a decision?

An individual chart might be actionable, but sometimes you need an aggregation of data to make a decision. A dashboard that gathers all necessary information in one place is very powerful.

For example, before a new feature deployment, you need to know the health of your dependencies. A "deployment readiness" dashboard could show this at a glance. You can even use the Information Funnel to present the simplest possible data: everything should be green!
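Sketched in Python, the roll-up behind such a dashboard can be as simple as "the worst status wins". The dependency names and green/amber/red statuses are hypothetical. In practice, each status might come from an alert condition or an SLO.

```python
# Hypothetical dependency health, e.g. derived from each service's alerts or SLOs.
dependencies = {
    "payments-api": "green",
    "inventory-service": "green",
    "search": "amber",
}

SEVERITY = {"green": 0, "amber": 1, "red": 2}


def readiness(statuses: dict[str, str]) -> tuple[str, list[str]]:
    """Roll many dependency statuses up into one answer: the worst status wins."""
    worst = max(statuses.values(), key=lambda s: SEVERITY[s], default="green")
    needs_checking = sorted(name for name, s in statuses.items() if s != "green")
    return worst, needs_checking


overall, to_check = readiness(dependencies)
print(f"Deployment readiness: {overall.upper()}; check: {to_check or 'nothing'}")
```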

Keep it simple, stupid!

The KISS principle is very relevant to dashboards. It's tempting to keep adding "useful" widgets, but they may just be confusing everyone else.

A good rule of thumb: the complexity of a dashboard should be inversely proportional to the size of its audience. If it's just for you, make it as busy as you like. If the whole organisation will see it, keep widgets to a minimum.

Clarity and documentation

“OMS overflow fall out rate: 6.8” — this means something to you, but how would anyone else know?

Try to convert this into business currency, such as “orders failing to dispatch on time”. If that isn't possible, provide hints (like guidelines or colour-coding) to show what's good or bad. You can also use a markdown widget to explain the metric, why it matters and what different values mean for the business operation.

Share more widely

Making dashboards available to a wider audience is easy. Some customers build an "index" dashboard that links to their external-facing dashboards. You can also share data with suppliers or partners outside of New Relic using the "share dashboard" feature.

5. Finding the data you need

You might be surprised by the insight you can glean from the data you already collect.

Observability data is rich with insight. Whether it's from an agent, OpenTelemetry, or a log forwarder, this data helps you understand both system performance and the business itself.

Day-to-day, you watch system metrics for health and availability. But as we've discussed, these metrics can be meaningless to external stakeholders. We need to explore our data for meaning that relates to our business currency. With a bit of creativity, you can often derive business-level data from the telemetry you're already collecting.

Let's explore some of these sources.

Real user monitoring (Browser and Mobile)

Our browser and mobile agents report data from your actual users. Events like PageView and Mobile carry a wealth of information about who is transacting with your service, and how. You can use this data to understand traffic geography, count unique users, interrogate sessions and more.

For example, we could count the number of users currently browsing the site based on their session ID using the uniqueCount() function:

FROM PageView SELECT uniqueCount(session) SINCE 10 minutes ago

APM Transactions

Transaction events from APM agents represent individual units of work in an application. This lets you explore database calls and the throughput and performance of specific activities.

Transaction names are often aligned to their business purpose. You could count the rate of the /payment/declined transaction, for example, and use it as a real-time proxy for failed payments.

Sometimes the information is buried deeper. Perhaps product details are embedded in the request.uri attribute. In this case, you need to extract that data. NRQL has functions like aparse() and capture() to help.
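The pattern language of aparse() is compact. As we understand it, `*` captures the text it matches and `%` matches text without capturing it. Purely to illustrate that behaviour, here is a rough Python approximation. `aparse_like` is a hypothetical helper, not a New Relic API. The sample URIs reuse the format from the example that follows, and the second product is made up.

```python
import re
from collections import Counter
from typing import Optional


def aparse_like(value: str, pattern: str) -> Optional[list]:
    """Hypothetical, rough stand-in for NRQL aparse(), for illustration only.

    Assumes '*' captures any run of characters, '%' matches any run without
    capturing, and every other character must match literally.
    """
    parts = []
    for ch in pattern:
        if ch == "*":
            parts.append("(.*?)")  # capturing wildcard
        elif ch == "%":
            parts.append(".*?")  # non-capturing wildcard
        else:
            parts.append(re.escape(ch))
    match = re.fullmatch("".join(parts), value)
    return list(match.groups()) if match else None


# Hypothetical request.uri values; the second product is made up.
uris = [
    "/product/detail/hitachi-air-fryer/242234/view",
    "/product/detail/hitachi-air-fryer/242234/view",
    "/product/detail/slow-cooker/118842/view",
]

# Counting by the extracted name plays the role of FACET productName.
views = Counter()
for uri in uris:
    captured = aparse_like(uri, "/product/detail/*/%/%")
    if captured:
        views[captured[0]] += 1

print(views.most_common())
```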

For example, if your URIs look like this: /product/detail/hitachi-air-fryer/242234/view, you could extract product names and chart the most popular ones:

WITH aparse(request.uri, '/product/detail/*/%/%') AS productName
FROM Transaction
SELECT count(*) AS productViews
FACET productName

Mining your logs

Application logs have always been a rich source of data. You will often find information that is directly relatable to a business operation.

You can use aparse() and capture() here, too. You can also use ingest-time parsing to convert logs into structured data, making them much easier to query.

Automated ingest-time parsing lets you define the structure of your logs and automatically extract data. For example, consider this log line:

claim submitted: type=insurance riskValue=400000 price=35.99 customerProfile=A457X

The following parsing rule would convert this into a structured record:

claim submitted: type=%{WORD:type} riskValue=%{NUMBER:riskValue} price=%{NUMBER:price} customerProfile=%{WORD:customerProfile}

The result:

{
  "riskValue": "400000",
  "price": "35.99",
  "customerProfile": "A457X",
  "type": "insurance"
}

Your log data might also contain JSON-encoded values. In that case, you can use jParse() to extract them at query time.
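New Relic applies the Grok rule at ingest time. To illustrate what the rule above extracts, here is a rough Python equivalent using a regular expression. `parse_claim` is a hypothetical helper. The simplified WORD and NUMBER sub-patterns are assumptions, not the exact Grok definitions.

```python
import re
from typing import Optional

# Simplified stand-ins for the Grok WORD and NUMBER patterns (assumptions, not
# the exact Grok definitions).
WORD = r"\w+"
NUMBER = r"[+-]?\d+(?:\.\d+)?"

CLAIM_RULE = re.compile(
    rf"claim submitted: type=(?P<type>{WORD}) riskValue=(?P<riskValue>{NUMBER}) "
    rf"price=(?P<price>{NUMBER}) customerProfile=(?P<customerProfile>{WORD})"
)


def parse_claim(line: str) -> Optional[dict]:
    """Turn one log line into named attributes, or None if it doesn't match."""
    match = CLAIM_RULE.fullmatch(line)
    # Captured values are strings, as in the result shown above.
    return match.groupdict() if match else None


record = parse_claim("claim submitted: type=insurance riskValue=400000 price=35.99 customerProfile=A457X")
print(record)
```

Once each line is structured like this, attributes such as price or riskValue can be summed, averaged or faceted like any other field.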

Enriching your data

While your telemetry has plenty of information, sometimes it needs more business context to be truly actionable. Enriching your data can significantly improve its value.

You can enrich data at collection time or query time. At collection time, you can use agent SDKs to add custom attributes or events. For example, you might include a user's loyalty status with their checkout operation.

At query time, you can use joins and lookups to combine datasets that share a key. For example, you could upload a lookup table with location data for your depots, then join it with your telemetry on depotID to plot the depots on a map.
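The join itself is easy to picture. The Python sketch below is not NRQL. It only shows the idea of enriching events from a lookup table keyed on depotID. The depot names, coordinates and event fields are hypothetical. As a design choice, this sketch keeps events with no matching depot (a left join) rather than dropping them.

```python
# Hypothetical lookup table keyed on depotID, as you might upload it for enrichment.
depots = {
    "D01": {"name": "Leeds", "lat": 53.80, "lon": -1.55},
    "D02": {"name": "Bristol", "lat": 51.45, "lon": -2.59},
}

# Hypothetical telemetry events that only carry the shared key.
events = [
    {"depotID": "D01", "parcelsDispatched": 120},
    {"depotID": "D02", "parcelsDispatched": 95},
    {"depotID": "D99", "parcelsDispatched": 4},  # no matching depot in the table
]


def enrich(rows: list[dict], lookup: dict[str, dict], key: str) -> list[dict]:
    """Left-join each event with the lookup row that shares its key."""
    enriched = []
    for row in rows:
        extra = lookup.get(row[key], {})  # unmatched keys keep only their original fields
        enriched.append({**row, **extra})
    return enriched


for row in enrich(events, depots, key="depotID"):
    print(row)
```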

Observability is far more than just a troubleshooting tool for engineers; it is the ultimate source of truth for the entire business, especially during the chaos of peak season. By translating technical metrics into business currency, setting clear context around what "normal" means and refining raw data into actionable wisdom, you stop simply presenting data and start driving action. Building simple, focused dashboards and creatively mining the data you already collect will not only help you successfully navigate peak season, but will build a foundation for clearer, more impactful communication with key stakeholders, every day of the year.

Ready to see how the retail world is mastering peak traffic? This guide provided the how-to for transforming chaos into clarity. Now, see the state of play across the retail industry.

Discover how your peers are tackling peak-traffic challenges, where they are investing their observability resources, and the clear business benefits they are realising.

Read the free report, The State of Observability for Retail 2025: https://newrelic.com/resources/report/state-of-observability-for-retail-2025

The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. Please join us exclusively at the Explorers Hub (discuss.newrelic.com) for questions and support related to this blog post. This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve or endorse the information, views or products available on such sites.