The best time to learn about fire is when you’re on fire.

—Jen Hammond, New Relic engineering manager

A few months ago, a Cassandra database node belonging to one of the New Relic engineering teams disappeared. Poof. Gone. At the time, the team had three new members, and the runbook and Ansible playbook they’d adopted for managing the Cassandra cluster, they quickly learned, were both out of date.

Nevertheless, they had to get the database node up and running again without any assistance. They did, but the task took more than half an hour: more than 30 minutes during which New Relic customers couldn’t access their data on that particular database node.

Except none of this is true. The Cassandra node failure was a simulation, part of what we call an “adversarial game day,” a term coined by New Relic Senior Site Reliability Engineer David Copeland.

Adversarial game days are a homespun subset of chaos engineering, the practice of deliberately introducing faults into your systems to see how those systems, and the teams managing them, recover. They’re part of New Relic’s site reliability best practices. During an adversarial game day, a malicious actor (the adversary) introduces faults into a non-production version of our system to see how well our teams are prepared to recover.

In our day-to-day operations, we don’t usually run into the situations a game day can throw at us, so we don’t see the potential problems. During adversarial game days at New Relic, for instance, we’ve discovered that a team didn’t have a rollback mechanism for failed deploys, and that cross-functional teams lacked access to the systems they needed to troubleshoot a failure.

We use game days to prove out our reliability practices. In the modern software world, failures are inevitable. All too often, we think of our systems’ incredible uptime and then get lulled into a state of complacency. That can impact our ability to respond to an unplanned incident, as we saw in our opening example. At the end of the day, chaos engineering and adversarial game days are all about managing the chaos—with workable plans and a clear sense of direction.

Read on to learn more about why and how New Relic runs adversarial game days. If you’re interested in using New Relic to collect data to prepare for game day, read our docs tutorial about using New Relic to prepare for a peak demand event.

Why run game days?

In the STELLA report, written by a group of researchers called the SNAFUcatchers, Dr. Richard Cook “reviewed postmortems to better understand how engineers cope with the complexity of anomalies … and how to support them.” The report found that team members who handle the same parts of a system often have different mental models of what the system looks like. This is a normal state of affairs.

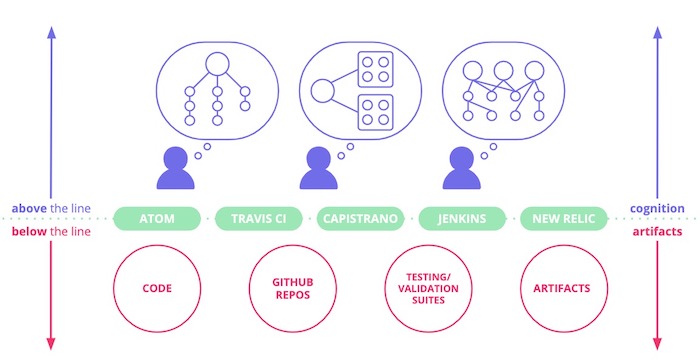

According to the STELLA report, you have your code and the repositories in which it lives; you have your testing and validation suites, the artifacts you produce, and the systems on which those artifacts run. All of these things are below the line of representation, which is composed of your deployment tools, your monitoring and observability tools, and your UIs and CLIs. You don’t interact with the systems directly, but with these representations. The only information you have about the system comes from these representations: you never actually “see” your code operating, only its reflection in them. And each team member sees a different reflection.

This is similar to Plato’s “Allegory of the Cave,” a philosophical allegory in which prisoners chained in a cave take the shadows cast on a wall by backlit projections to be reality; it’s not until they’re freed from the “chains of ignorance” and forced from the cave that they can properly see and rationalize the natural world. In the same way, we look at the representations our tools draw on our laptop monitors and call them reality. If we don’t have a complete picture of where our systems are unreliable, we ignore reality at our peril.

When should you have a game day?

At a minimum, you should run a game day once per quarter, particularly if your systems are outperforming their Service Level Objectives (SLOs). If the performance metrics behind your Service Level Agreements (SLAs) with your customers, such as response time, throughput, and availability, exceed the goals you set for the quarter, you should run a game day. Far too often, teams load more and more dependencies onto high-performing services without testing their resiliency, and those dependencies tumble like dominoes during incidents.
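To make that rule of thumb concrete, here’s a minimal Python sketch. It assumes you can summarize each SLI as one number per quarter and compare it with a target; the `Slo` record, the metric names, the 99.9% and 300 ms targets, and the 91-day quarter are illustrative assumptions, not part of any New Relic API.

```python
from __future__ import annotations

from dataclasses import dataclass

QUARTER_DAYS = 91  # rough length of a quarter; an assumption, adjust to your calendar


@dataclass(frozen=True)
class Slo:
    """A hypothetical SLO record; the field names are illustrative only."""

    name: str
    target: float
    higher_is_better: bool  # True for availability or throughput, False for latency


def beats_target(value: float, slo: Slo) -> bool:
    """True when the measured value is better than the SLO target."""
    return value > slo.target if slo.higher_is_better else value < slo.target


def game_day_reasons(days_since_last: int, measured: dict[str, float], slos: list[Slo]) -> list[str]:
    """Collect the reasons to schedule a game day now, per the rules of thumb above."""
    reasons = []
    if days_since_last >= QUARTER_DAYS:
        reasons.append("quarterly cadence is due")
    for slo in slos:
        if beats_target(measured[slo.name], slo):
            reasons.append(f"outperforming SLO: {slo.name}")
    return reasons


if __name__ == "__main__":
    slos = [Slo("availability_pct", 99.9, True), Slo("p95_latency_ms", 300.0, False)]
    measured = {"availability_pct": 99.97, "p95_latency_ms": 180.0}
    print(game_day_reasons(30, measured, slos))
    # ['outperforming SLO: availability_pct', 'outperforming SLO: p95_latency_ms']
```

An empty list means neither trigger applies yet; it doesn’t mean the system is safe from the domino effect described above.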

Onboarding new team members is a great excuse to hold a game day. At New Relic, we’ll often have an adversarial game day to prep a new team member for “pager duty.” Not only will this exercise their knowledge of the system, but it’ll familiarize them with our incident response processes.

Finally, you should definitely have an adversarial game day before releasing new features to users. Without an adversarial game day, you don’t know for sure what tripwires customers may hit. If you have a “rock-solid” product, a game day before general availability may turn out to be rather boring, but your on-call engineers will likely sleep easier on those first few nights after release. They’ll also be better equipped to handle an emergency should something go wrong with a new feature.

Who should play adversary?

The adversary is a critical role, and it is usually played by the person with the most complete mental model of the system you’ll attack. This is also a great way of testing the “bus factor”—what would happen if this (typically senior) person went on vacation (or got hit by a bus)? How would the rest of the team respond to a real-world incident without that person's knowledge?

You can also be creative when assigning the adversary role. Maybe your newest team member should be the adversary? Writing a plan to test a veteran team is a great way for new members to come up to speed quickly.

In creating an adversary plan, we often consider our incidents from the previous quarter. Did we actually fix the underlying issues? Did the incident improve or worsen our mental models of our systems? Replicating incidents is a good way to make sure your team's mental model actually reflects the reality of the system.

Ordinary software knowledge should not be part of your game day. In other words, if you have a question about your system that can be answered in five minutes, that's not an appropriate target for your adversarial game day. Remember, game days are about discovering what’s missing from your team’s collective knowledge; you want to uncover things that your team doesn't know about your system. With that in mind, a typical game day at New Relic can run up to three hours.

Who should participate?

The last time I put together a game day ahead of the general availability release of a major new feature, I included two engineering teams because I wanted them to practice managing incidents for other teams. In a real-world incident, the first responders may not be part of the team that owns the root cause.

In this particular game day, I mixed the teams to create a team of responders and a team of adversaries. The adversaries threw attacks at the new feature, and as the game day progressed, the response team discovered that even though they were using alerting best practices, they had alerts that weren’t sending notifications. The team that engineered the feature realized they had made some mistakes in their alerting. They went back to the drawing board, fixed their alerts, and then we had another game day in which their alerts behaved as expected.
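Some silent alerts can be caught before a game day with a static audit. The sketch below assumes you can export your alert conditions into a simple list; the `AlertCondition` shape is hypothetical and is not New Relic’s alerting API. It flags conditions that are disabled or have no notification channel attached.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AlertCondition:
    """A hypothetical, tool-agnostic alert definition exported for auditing."""

    name: str
    enabled: bool = True
    channels: list[str] = field(default_factory=list)  # e.g. pager, chat, email


def silent_alerts(conditions: list[AlertCondition]) -> list[str]:
    """Names of conditions that would notify no one if their threshold were crossed."""
    return [c.name for c in conditions if not c.enabled or not c.channels]


if __name__ == "__main__":
    conditions = [
        AlertCondition("api-error-rate", channels=["pager"]),
        AlertCondition("db-latency"),  # no channel attached
        AlertCondition("queue-depth", enabled=False, channels=["chat"]),
    ]
    print(silent_alerts(conditions))  # ['db-latency', 'queue-depth']
```

A check like this only catches configuration gaps. It can’t tell you whether a channel actually delivers, which is exactly the kind of problem the game day surfaced.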

Huddling together during an adversarial game day can be a lot of fun, but don't let geographical logistics get in the way. You're not necessarily going to be in the same room when you have an unplanned incident, so having remote participants is a great idea. In fact, including remote participants will help you test the communication tools you’d use during a real-world incident.

Your game day may have unintended consequences for other teams, so be sure to socialize your game day ahead of time. In fact, teams with downstream services dependent on yours may find that this is a good opportunity to see if their systems are resilient to upstream downtime, or if their alerting is appropriately configured.
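To work out which teams to tell, you can walk your service dependency graph. This minimal sketch assumes you have a map from each service to the services it calls (the service names are hypothetical) and returns everything that depends on the target, directly or transitively, using a breadth-first search over the reversed edges.

```python
from __future__ import annotations

from collections import deque


def downstream_of(target: str, depends_on: dict[str, set[str]]) -> set[str]:
    """Return every service that depends on `target`, directly or transitively.

    `depends_on` maps each service to the set of services it calls.
    """
    # Invert the edges so we can walk from a service to the services that call it.
    callers: dict[str, set[str]] = {}
    for service, upstreams in depends_on.items():
        for upstream in upstreams:
            callers.setdefault(upstream, set()).add(service)

    # Breadth-first search outward from the target.
    affected: set[str] = set()
    queue = deque([target])
    while queue:
        current = queue.popleft()
        for caller in callers.get(current, set()):
            if caller not in affected:
                affected.add(caller)
                queue.append(caller)
    affected.discard(target)  # a dependency cycle could lead back to the target
    return affected


if __name__ == "__main__":
    # Hypothetical service map: each key calls the services in its set.
    deps = {
        "web": {"api"},
        "reports": {"api"},
        "api": {"cassandra"},
        "billing": {"ledger"},
    }
    print(sorted(downstream_of("cassandra", deps)))  # ['api', 'reports', 'web']
```

Mapping the returned services to their owning teams gives you the list of people to notify before the game day starts.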

Wait, we can’t run a game day in production

In an ideal world, you’d run your adversarial game days in your production environment; however, you may not be able to introduce faults that could trip up your users. If you don’t have Service Level Indicators (SLIs) or error budgets, and you don’t know whether your uptime can support a game day, don’t let that deter you: run your game day in whatever environment you can. Use a dev or staging environment, or spin up a version of your system in a sandbox. The important thing is that you run your game day in a version of your system that closely mimics what your users experience day to day. Your goal for any adversarial game day is to improve your mental model of your system so that you improve your mean time to resolution (MTTR) when things inevitably go wrong.
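If you do have an availability SLO, a quick error-budget calculation shows how much deliberate impact production can absorb. This sketch assumes a time-based SLO (the fraction of minutes the service is up over a rolling window); request-based SLOs need a different calculation. For example, a 99.9% SLO over 30 days allows (1 − 0.999) × 30 × 24 × 60 = 43.2 minutes of downtime.

```python
MINUTES_PER_DAY = 24 * 60


def error_budget_minutes(slo_pct: float, window_days: int) -> float:
    """Minutes of downtime a time-based availability SLO allows per window."""
    return (1 - slo_pct / 100) * window_days * MINUTES_PER_DAY


def fits_in_budget(slo_pct: float, window_days: int, spent_minutes: float,
                   planned_impact_minutes: float) -> bool:
    """True if the planned game-day impact fits in what's left of the budget."""
    remaining = error_budget_minutes(slo_pct, window_days) - spent_minutes
    return planned_impact_minutes <= remaining


if __name__ == "__main__":
    print(round(error_budget_minutes(99.9, 30), 1))  # 43.2
    # 10 minutes already spent this window, 30 minutes of planned impact.
    print(fits_in_budget(99.9, 30, spent_minutes=10, planned_impact_minutes=30))  # True
    # A full three-hour outage does not fit.
    print(fits_in_budget(99.9, 30, spent_minutes=0, planned_impact_minutes=180))  # False
```

By that arithmetic, a full three-hour outage would consume roughly four times a 99.9% monthly budget, which is one more reason to start in a non-production environment.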

What tools do you use in an adversarial game day?

There are a lot of clever tools you can use to inject faults into your system, but sometimes simple is best. Look at your architectural diagram and then ask what could possibly go wrong. What mistaken assumptions could your customers make about your system? What could a malicious actor do? Figure out those answers and then write a simple bash script to emulate that behavior.
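A bash script works fine for this; the sketch below expresses the same idea in Python so it can wrap a dependency call directly. It’s a minimal example of in-process fault injection for a non-production build: a hypothetical `adversary` decorator that, with a configurable probability, raises an error instead of calling the dependency, and otherwise adds optional latency. The names are illustrative, not from any library.

```python
from __future__ import annotations

import functools
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class InjectedFault(RuntimeError):
    """Raised by the adversary's wrapper to simulate a failing dependency."""


def adversary(error_rate: float = 0.0, delay_s: float = 0.0, rng: random.Random | None = None):
    """Wrap a dependency call so it sometimes fails or slows down.

    error_rate: probability (0 to 1) that a call raises InjectedFault.
    delay_s: extra latency added to every call that is let through.
    """
    rng = rng or random.Random()

    def decorate(func: Callable[..., T]) -> Callable[..., T]:
        @functools.wraps(func)
        def wrapper(*args, **kwargs) -> T:
            if rng.random() < error_rate:
                raise InjectedFault(f"game day: {func.__name__} failed on purpose")
            if delay_s:
                time.sleep(delay_s)
            return func(*args, **kwargs)

        return wrapper

    return decorate


if __name__ == "__main__":
    # Hypothetical dependency; a seeded RNG makes the run repeatable.
    @adversary(error_rate=0.25, rng=random.Random(7))
    def lookup_account(account_id: int) -> dict:
        return {"id": account_id}

    failures = 0
    for i in range(100):
        try:
            lookup_account(i)
        except InjectedFault:
            failures += 1
    print(f"{failures} of 100 calls failed")  # roughly a quarter, depending on the seed
```

Passing a seeded `random.Random` lets the adversary replay exactly the same failure pattern in a follow-up game day.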

At New Relic, we’re fond of Toxiproxy, an open source tool from Shopify. Toxiproxy lets you “tamper” with network connections between interdependent services, via a proxy and client. For example, in a recent game day to test an API, we used Toxiproxy to cut off the connection between the API service and the database. When we did this, the API responded with an error message that included the IP address of the database—which was interesting but not something we’d want customers to see.
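Toxiproxy is driven through an HTTP API, by default on port 8474. The sketch below builds the requests for an attack like the one described above: create a proxy in front of the database, then disable it, which Toxiproxy treats as taking the service down. It assumes the API service is already configured to reach the database through the proxy’s listen address; the proxy name, ports, and hostnames are hypothetical. It also includes a small helper (not part of Toxiproxy) that scans an error body for IPv4 addresses like the one that leaked in that game day.

```python
from __future__ import annotations

import ipaddress
import json
import re
import urllib.request

# Toxiproxy's default API address; change it if your server listens elsewhere.
TOXIPROXY_API = "http://localhost:8474"


def _post(path: str, body: dict) -> urllib.request.Request:
    """Build (but don't send) a JSON POST to the Toxiproxy HTTP API."""
    return urllib.request.Request(
        TOXIPROXY_API + path,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def create_proxy(name: str, listen: str, upstream: str) -> urllib.request.Request:
    """Create a proxy that listens on `listen` and forwards to `upstream`."""
    return _post("/proxies", {"name": name, "listen": listen, "upstream": upstream})


def set_enabled(name: str, enabled: bool) -> urllib.request.Request:
    """Disable (or re-enable) a proxy; disabling it cuts the connection."""
    return _post(f"/proxies/{name}", {"enabled": enabled})


def add_latency(name: str, latency_ms: int, jitter_ms: int = 0) -> urllib.request.Request:
    """A gentler attack: delay data flowing from the database back to the API."""
    return _post(
        f"/proxies/{name}/toxics",
        {
            "type": "latency",
            "stream": "downstream",
            "attributes": {"latency": latency_ms, "jitter": jitter_ms},
        },
    )


def send(request: urllib.request.Request) -> dict:
    """Send a request to a running Toxiproxy server and decode its JSON reply."""
    with urllib.request.urlopen(request, timeout=5) as response:
        return json.loads(response.read())


# Helper for checking the API's error responses (not part of Toxiproxy).
_IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def leaked_ips(error_body: str) -> list[str]:
    """Return IPv4 addresses found in an error body that customers might see."""
    found = []
    for candidate in _IPV4.findall(error_body):
        try:
            ipaddress.IPv4Address(candidate)
        except ValueError:
            continue  # matched the pattern but isn't a valid address, e.g. 999.1.1.1
        found.append(candidate)
    return found


if __name__ == "__main__":
    # Print the attack plan without touching the network.
    plan = [
        create_proxy("cassandra", "127.0.0.1:19042", "cassandra.internal:9042"),
        set_enabled("cassandra", False),
    ]
    for request in plan:
        print(request.get_method(), request.full_url)
```

Building requests separately from `send` keeps the attack plan reviewable before anything touches the network. When the attack ends, `send(set_enabled("cassandra", True))` re-enables the proxy.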

There are plenty of tools you can adopt for your own game days. Check out this curated list of chaos engineering resources on GitHub.

Run your game day like an actual incident

Run your game day as if it were a real-world unplanned incident. Assign a scribe to take notes about the soft spots in your troubleshooting and the gaps in your monitoring and alerting. Invite customer-support representatives to participate, so they can explain likely customer impacts. You may be testing your team’s mental model of the system, but your team also needs to understand how system faults affect customers.

Within 48 hours after your game day, hold a retrospective, and ask plenty of questions. For example:

- What could have gone better? What did your team learn? Did your mental model of your system improve?

- Did your monitoring and alerting work as planned?

- What was your MTTR? Can you improve it?

- Can your team automate your runbooks to make mitigation faster?

- Should you update your architectural diagram?

- Did alerting and monitoring for dependent teams work?

- Do you need to refactor the relationship between these dependent services?
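For the MTTR question, the scribe’s timestamps are enough. This sketch assumes the scribe records, for each attack, when the fault was injected, when the team detected it, and when it was resolved; it measures MTTR from injection to resolution, which is one of several common definitions. The attack names, date, and times in the example are made up.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Attack:
    """One fault from the scribe's notes, with when each step happened."""

    name: str
    injected: datetime
    detected: datetime
    resolved: datetime


def _mean(deltas: list[timedelta]) -> timedelta:
    """Average a non-empty list of durations."""
    return sum(deltas, timedelta()) / len(deltas)


def mean_time_to_detect(attacks: list[Attack]) -> timedelta:
    """Average time from fault injection until someone (or an alert) noticed."""
    return _mean([a.detected - a.injected for a in attacks])


def mean_time_to_resolve(attacks: list[Attack]) -> timedelta:
    """MTTR, measured here from fault injection to recovery."""
    return _mean([a.resolved - a.injected for a in attacks])


if __name__ == "__main__":
    day = datetime(2024, 1, 15)  # hypothetical game-day date
    attacks = [
        Attack("cassandra node lost", day.replace(hour=10),
               day.replace(hour=10, minute=4), day.replace(hour=10, minute=35)),
        Attack("api-to-db link cut", day.replace(hour=11),
               day.replace(hour=11, minute=10), day.replace(hour=11, minute=25)),
    ]
    print(mean_time_to_detect(attacks))   # 0:07:00
    print(mean_time_to_resolve(attacks))  # 0:30:00
```

Tracking detection time separately shows whether a slow MTTR came from alerts that fired late or from a recovery that was hard to carry out.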

The key outcome of the retro is simple: You assign work to improve the resiliency of your system.

Share what you learn

Finally, share what you've learned. Hopefully, you’ll inspire other teams to hold their own adversarial game days.

All of this advice aside, there’s no right or wrong way to run an adversarial game day, and different teams will have different needs in terms of what they should test. Use the adversarial game day to force your teams out of the allegorical cave. A complete, unified view of your system may be hard to look at, but it’s usually the best way to reveal the truth.

The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. Please join us exclusively at the Explorers Hub (discuss.newrelic.com) for questions and support related to this blog post. This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve or endorse the information, views or products available on such sites.