Running a global observability platform means one thing above all: your infrastructure must never go down. When you're responsible for monitoring thousands of customers' applications 24/7, network failures aren't just inconvenient; they're existential threats.

At New Relic, hundreds of clusters run across multiple clouds and regions. These clusters depend on a complex web of network connections: regional transit gateways, inter-regional hubs, and cross-cloud links. While we have resiliency to avoid single points of failure, if too many connections fail, our ability to provide real-time observability is compromised.

The challenge? We needed to know instantly if connectivity fails at any layer: within availability zones, between regions, or across cloud providers.

So we did what we do best: we built a solution using New Relic's own platform to monitor our entire network. The result was Weather Station, our internal network monitoring system that now performs over 100,000 connectivity checks per hour across our entire multi-cloud infrastructure. Here's how we built it, and what we learned along the way.

Understanding the network visibility challenge at scale

In our large, complex environment, the challenge wasn't detecting complete network outages. Those were obvious. The challenge was answering specific diagnostic questions quickly: Can clusters in the same region communicate through the regional transit gateway? Is the hub gateway properly routing traffic between regions? Is the cross-cloud connection between clouds operational? Are we experiencing packet loss during peak traffic hours?

Without continuous validation of these paths, engineers would spend a lot of time manually testing connectivity: SSHing into instances, running ping and traceroute commands, checking route tables across multiple cloud provider consoles, and correlating timestamps to identify when possible failures occurred.

We needed a systematic approach to validate every critical network path continuously, detect failures quickly, and provide engineers with immediate context about which specific network segment had failed. The solution would need to scale alongside our infrastructure, automatically adapting as we added new regions, cells, or cloud providers.

Architecting a continuous network validation system

We called our continuous monitoring system Weather Station, a name inspired by how meteorologists use distributed sensors to monitor atmospheric conditions. Just as weather stations detect changes in temperature, pressure, and wind across geographic regions, our Weather Station detects connectivity changes across network regions, availability zones, and cloud providers.

The core concept is straightforward: if we deployed monitoring instances that mirrored our production network topology, we could have them continuously check connectivity to each other. Any failure in these synthetic checks would immediately signal a real network problem before it affected customer data.

Weather Station is a self-contained Go application designed around three core architectural principles:

Mirroring production topology

We created a dedicated monitoring network that replicates the exact network architecture used in production. These monitoring VPCs attach to the same shared infrastructure that production traffic flows through. This means we're testing real network paths through actual infrastructure components, not synthetic routes that bypass production.

The setup includes primary and secondary VPCs in each region to emulate different configurations, with one monitoring instance per availability zone. In total, we deployed 88 dedicated monitoring instances across our multi-cloud infrastructure, plus containerized deployments within production cells themselves.

Multiple monitoring perspectives

We monitor from two complementary vantage points. The dedicated monitoring network validates shared infrastructure: the regional gateways, inter-regional hubs, and cross-cloud provider connections. These instances exist solely for monitoring and aren't affected by production workload changes. Meanwhile, Weather Station also runs as Kubernetes pods within production workloads themselves, giving us an inside-out view of connectivity from the actual workload perspective.

Dynamic configuration and observability

Weather Station uses a hierarchical configuration system. When an instance starts, it receives parameters describing its location, cloud provider, region, availability zone, and environment. Based on these parameters, it loads the appropriate YAML configuration file that specifies which connectivity checks to run.
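As a minimal sketch of how such a lookup could work, the Go snippet below turns the startup parameters into a list of candidate YAML paths, ordered from most specific to least specific. The `Location` fields, the directory layout, the fallback order, and the example values are all assumptions made for illustration; the actual Weather Station layout isn't described here.

```go
package main

import (
	"fmt"
	"path/filepath"
)

// Location holds the startup parameters. Field names are hypothetical.
type Location struct {
	Provider    string // cloud provider, e.g. "cloud-a"
	Region      string // e.g. "region-1"
	Zone        string // availability zone, e.g. "region-1a"
	Environment string // e.g. "staging"
}

// configCandidates returns YAML paths ordered from most specific to least
// specific. A loader could use the first path that exists on disk.
// The directory layout is an assumption for this sketch.
func configCandidates(root string, loc Location) []string {
	return []string{
		filepath.Join(root, loc.Environment, loc.Provider, loc.Region, loc.Zone+".yaml"),
		filepath.Join(root, loc.Environment, loc.Provider, loc.Region+".yaml"),
		filepath.Join(root, loc.Environment, loc.Provider+".yaml"),
		filepath.Join(root, loc.Environment+".yaml"),
	}
}

func main() {
	loc := Location{
		Provider:    "cloud-a",
		Region:      "region-1",
		Zone:        "region-1a",
		Environment: "staging",
	}
	for _, p := range configCandidates("config", loc) {
		fmt.Println(p)
	}
}
```

Ordering the candidates this way lets a zone-level file override a region-level one, which is one common way to keep a hierarchy of defaults small.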

For example, an instance will ping other instances in the same availability zone to validate regional connectivity, ping instances in other regions to test inter-regional routing, and ping instances in the other cloud provider to verify cross-cloud connections. The configuration automatically adapts based on where the instance runs.
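One way to picture how the check type follows from location is the small Go sketch below. It labels a source and target pair as regional, inter-regional, or cross-cloud by comparing provider and region. The `Instance` type, the `Scope` names, and the `classify` function are hypothetical and are not taken from Weather Station itself.

```go
package main

import "fmt"

// Instance describes where a monitoring instance runs (hypothetical type).
type Instance struct {
	Name     string
	Provider string
	Region   string
}

// Scope names the network layer a check exercises.
type Scope string

const (
	Regional      Scope = "regional"
	InterRegional Scope = "inter-regional"
	CrossCloud    Scope = "cross-cloud"
)

// classify decides which layer a check from src to dst exercises.
// Provider is compared first, so a target in another cloud is always
// treated as cross-cloud, whatever its region is called.
func classify(src, dst Instance) Scope {
	switch {
	case src.Provider != dst.Provider:
		return CrossCloud
	case src.Region != dst.Region:
		return InterRegional
	default:
		return Regional
	}
}

func main() {
	self := Instance{Name: "ws-1", Provider: "cloud-a", Region: "region-1"}
	peers := []Instance{
		{Name: "ws-2", Provider: "cloud-a", Region: "region-1"},
		{Name: "ws-3", Provider: "cloud-a", Region: "region-2"},
		{Name: "ws-4", Provider: "cloud-b", Region: "region-1"},
	}
	for _, p := range peers {
		fmt.Printf("%s -> %s: %s\n", self.Name, p.Name, classify(self, p))
	}
}
```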

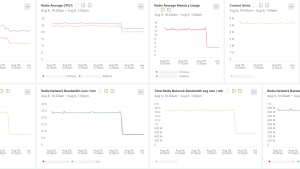

Every check result flows into New Relic as a metric with the prefix weather_station, tagged with rich metadata about the source instance such as cloud provider, region, availability zone, environment, and cluster name. This means we can query, visualize, and alert on network health using NRQL queries. When alert conditions are breached, New Relic automatically opens issues and notifies the right teams immediately.
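To make the shape of such a metric concrete, here is a hedged Go sketch that builds one tagged gauge as JSON. It assumes the payload format accepted by New Relic's Metric API (a list of batches, each with a `metrics` array). How Weather Station actually ships its results isn't stated, and the metric name `weather_station.ping.success`, the attribute keys, and the `buildPayload` helper are illustrative assumptions.

```go
package main

import (
	"encoding/json"
	"fmt"
	"time"
)

// metric is one data point in the assumed Metric API JSON shape.
type metric struct {
	Name       string            `json:"name"`
	Type       string            `json:"type"`
	Value      float64           `json:"value"`
	Timestamp  int64             `json:"timestamp"` // Unix time in milliseconds
	Attributes map[string]string `json:"attributes"`
}

// batch wraps a group of metrics.
type batch struct {
	Metrics []metric `json:"metrics"`
}

// buildPayload encodes a single check result as a gauge: 1 for success,
// 0 for failure. The "weather_station." prefix comes from the article;
// the rest of the metric name is hypothetical.
func buildPayload(check string, ok bool, unixMillis int64, tags map[string]string) ([]byte, error) {
	value := 0.0
	if ok {
		value = 1.0
	}
	m := metric{
		Name:       "weather_station." + check + ".success",
		Type:       "gauge",
		Value:      value,
		Timestamp:  unixMillis,
		Attributes: tags,
	}
	return json.Marshal([]batch{{Metrics: []metric{m}}})
}

func main() {
	tags := map[string]string{ // hypothetical attribute keys and values
		"cloud_provider":    "cloud-a",
		"region":            "region-1",
		"availability_zone": "region-1a",
		"environment":       "staging",
	}
	payload, err := buildPayload("ping", true, time.Now().UnixMilli(), tags)
	if err != nil {
		fmt.Println("encode failed:", err)
		return
	}
	fmt.Println(string(payload))
}
```

Encoding success as 1 or 0 keeps the data easy to average, so a success rate over any time window falls out of a simple aggregate query.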

Weather Station runs four types of connectivity checks, each targeting a different layer of our network architecture:

- Regional checks: validate that hosts in the same region can communicate through regional transit gateways or virtual hubs.

- Inter-regional checks: ensure hub transit gateways properly route traffic between regions.

- Cross-cloud checks: verify our cloud-to-cloud communication.

- Management network checks: confirm all instances can reach critical internal services like container registries and vault.

Each check is simple (primarily ICMP ping tests with some TCP port checks for specific services), but the aggregate of over 100,000 checks per hour provides comprehensive coverage of every network path our platform depends on.
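For a sense of how small an individual check can be, the Go sketch below implements a TCP port check with the standard library's `net.DialTimeout`. ICMP ping usually needs raw-socket privileges or an extra package, so only the TCP case is shown. The `checkTCP` name and its return values are assumptions for illustration, not Weather Station's actual code.

```go
package main

import (
	"fmt"
	"net"
	"time"
)

// checkTCP tries to open a TCP connection to addr ("host:port") within
// timeout. It returns how long the attempt took and any dial error;
// a nil error means the port was reachable.
func checkTCP(addr string, timeout time.Duration) (time.Duration, error) {
	start := time.Now()
	conn, err := net.DialTimeout("tcp", addr, timeout)
	elapsed := time.Since(start)
	if err != nil {
		return elapsed, err
	}
	conn.Close()
	return elapsed, nil
}

func main() {
	// Start a throwaway local listener so the example has a reachable target.
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		fmt.Println("listen failed:", err)
		return
	}
	defer ln.Close()

	took, err := checkTCP(ln.Addr().String(), time.Second)
	if err != nil {
		fmt.Println("check failed:", err)
		return
	}
	fmt.Println("reachable in", took)
}
```

In a real deployment the target would be a service endpoint, such as a container registry, rather than a local listener.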

Scaling Weather Station across regions and clouds

We took a methodical approach to deploying Weather Station, starting with a proof of concept in a single staging cell. The goal was simple: validate that the application ran reliably, sent metrics correctly, and that our configuration system worked as expected. Within days, we caught our first potential network issue, a misconfigured security group rule that would have blocked inter-region traffic in production. This early win gave us confidence to expand.

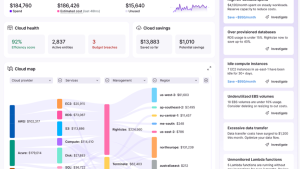

We then rolled out Weather Station systematically across our dedicated monitoring infrastructure, deploying iteratively in each region and validating metrics and alerts at each step. This phase revealed intermittent packet loss on cross-cloud connections during peak hours, asymmetric routing issues between availability zones, and BGP flapping on regional hubs. Each discovery led to infrastructure fixes before they could impact customers.

The final phase involved deploying Weather Station as Kubernetes pods within production cells themselves, using our standard automation tooling for consistent rollouts. We built a comprehensive Networking Status Dashboard to visualize all connectivity data and configured alerts using Terraform, ensuring they're versioned and consistently deployed. Each alert includes related context such as service tags and incident groupings, enabling immediate routing to the right teams with full information about which network segment failed.

Achieving 90% faster network issue detection

Weather Station transformed how we operate our network infrastructure. The impact was immediate and measurable:

- MTTD improvements: Our mean time to detect network issues dropped by 90%.

- MTTR improvements: Our mean time to resolve incidents improved by 50% because alerts contain precise context about the potential issue.

- Immediate root cause identification: The dashboard shows not just that connectivity failed, but exactly where in our network topology the failure occurred.

- Configuration issues caught before production: Security group misconfigurations, incorrect route table entries, and BGP configuration errors are now caught in staging during deployment validation.

- Operational confidence during infrastructure changes: We validate all Weather Station checks pass before routing production traffic to newly deployed cells, and can test provider failover scenarios instantly.

- Cost avoidance: While Weather Station requires infrastructure investment, it prevents costly outages. Each hour of network downtime affects customer data ingestion across multiple cells. Catching issues in staging avoids production incident costs, including customer impact, emergency rollbacks, and extensive incident response.

- Unified operational view: The Networking Status Dashboard became our single source of truth: one view instead of multiple cloud provider consoles, VPN configurations, and gateway route tables, with current status, historical trends, and drill-down investigation capabilities all in one place. The following image shows the Weather Station dashboard in New Relic.

Top takeaways

Building Weather Station exemplifies how we use our own platform to solve operational challenges. The same observability capabilities we provide to customers (custom metrics, dashboards, and alerts) enabled comprehensive network monitoring for our multi-cloud infrastructure.

Our experience building Weather Station yielded lessons that apply to any organization managing complex, multi-cloud infrastructure:

- Mirror your production topology. Test real network paths through actual infrastructure components: the same gateways, hubs, and provider connections that production traffic uses. Synthetic routes that bypass production won't catch real-world failures.

- Monitor from multiple perspectives. Dedicated monitoring instances validate shared infrastructure while in-cell pods validate connectivity from the workload's perspective. Both views are essential.

- Start simple, then expand. We began with basic ping checks to prove value quickly before adding TCP port checks for specific services.

- Treat network health as observable telemetry. Send connectivity results as metrics so you can use dashboards, queries, and alerts the same way you monitor applications.

- Automate everything. Configuration as code, infrastructure as code, and deployment automation let us scale across regions without manual work.

The key insight? Network monitoring isn't about collecting more data; it's about collecting the right data from the right places. The results speak for themselves, but the most important outcome is cultural: network monitoring is now proactive, data-driven, and integrated into every infrastructure change.

Next steps

If you're struggling with network visibility in complex, multi-cloud infrastructure, the patterns we used can help: deploy synthetic monitoring that mirrors your topology, treat network health as observable telemetry, and automate everything.

Explore New Relic's Infrastructure Monitoring and Synthetics to build similar visibility for your network.
