This is a guest post by Frederick Townes. Frederick was previously the Founding Chief Technical Officer of Mashable, one of the top independent media sites worldwide, and is currently the organization's Senior Technical Advisor. As a search and social media marketer and WordPress consultant, Frederick’s projects typically include WordPress as a core element. One of his largest contributions to the WordPress community is his web performance optimization framework, W3 Total Cache.

WordPress is the gold standard platform in democratized online publishing. In digital media, performance means delivering the most value possible using the fewest resources and least time. The better the site performance, the more usage and the higher the adoption rates. Every website needs a host. And selecting the right web hosting partner has always been a key starting point of success. The definition of the 'right' hosting partner has once again changed. Your web host is no longer just a provider of commodity hardware and resources at a competitive price point. Today, web hosting companies are software companies in disguise -- specializing in making sure that the applications you use scale, thereby assisting you in creating great end user (or customer) experiences.

Let's review some of the components in the hosting 'solution' stack that great hosts consider or that your organization would consider when trying to improve application/website performance.

The Foundation

Performance matters because a positive (fast) user experience facilitates all goals a publisher or application developer might have. WordPress, like most applications, has numerous moving parts that must be considered when trying to scale.

Disk

Quite a bit can be said about the impact of disk performance on applications. So much so, that there are many false notions about the value of memory-based caching as a silver bullet solution. Assuming your hardware is modern, here are some rules of thumb for thinking about your architecture:

* A non-shared local disk will always give the most consistent and reliable performance.

* Network (shared) storage definitely plays its role, but will not scale as far as or in the way that you would like. Always break up the problem you need to solve into pieces optimized for the need.

* Never compromise on data redundancy even when using cloud-based solutions.

MySQL

The query cache is your best friend. MySQL has been proven to be a scalable relational data store time and again. There’s no reason why it cannot continue to work for WordPress for years to come. You’ll want to ensure that the query cache is enabled and performing well for your site(s). That will make sure that MySQL is doing as little work as possible, freeing up resources (hopefully) for more important things than redundant queries.

You’ll also want to use a tool like mysqltuner to ensure that your table performance (buffers, etc.) is optimal. Switching engines to InnoDB, which now has support for full text search, is recommended for higher traffic sites. You won’t compromise the native WordPress search quality by making the switch. I recommend the Percona distribution of MySQL, especially if you expect to rely on functionality like sharding and read replicas to scale as your needs grow.

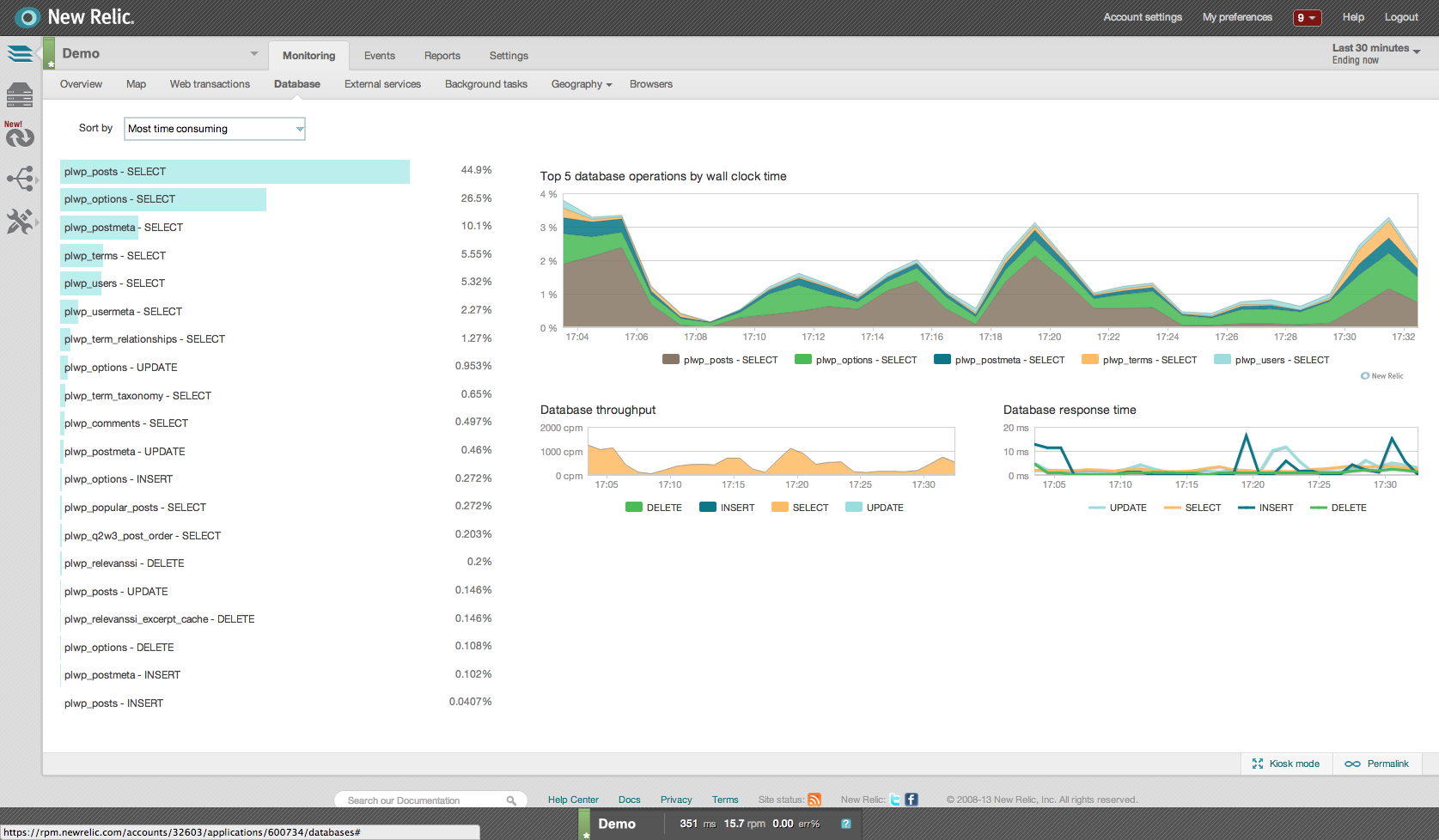

Database

Once you’ve ensured that MySQL, your data store, isn’t a bottleneck, you need to analyze the queries generated by your theme and plugins to either optimize them (reduce their response times) or reduce their number by refactoring code or applying various caching techniques. Remember that by default, using transients in WordPress 'moves the problem' of caching something like a time consuming API request (API requests become expensive because they block execution while waiting on and processing responses from third parties) and puts it into the database (wp_options table). That might not be such a bad thing if that data were not serialized and stored in the same table as various other settings in WordPress. Fortunately, there are new options for reducing database load that will be highlighted shortly. New Relic makes it trivial to dissect the goings on in your environment.
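To make the 'moves the problem' point concrete, here is a minimal Python sketch of the cache-aside pattern that a transient follows. It is not WordPress code: `TransientStore` and `get_or_compute` are hypothetical names, and the in-memory dictionary simply stands in for whatever backing store is in use (the wp_options table by default).

```python
import time


class TransientStore:
    """Hypothetical stand-in for a transient store: values expire after a TTL."""

    def __init__(self, clock=time.monotonic):
        self._data = {}      # key -> (expires_at, value)
        self._clock = clock  # injectable so expiry can be exercised in tests

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._data[key]  # expired: behave like a cache miss
            return None
        return value

    def set(self, key, value, ttl_seconds):
        self._data[key] = (self._clock() + ttl_seconds, value)


def get_or_compute(store, key, ttl_seconds, compute):
    """Return the cached value, or call compute() once and cache its result."""
    value = store.get(key)
    if value is None:
        value = compute()  # the expensive, blocking call (e.g. a third party API)
        store.set(key, value, ttl_seconds)
    return value
```

The expensive call runs at most once per TTL window. Where the entry is stored decides which part of the stack absorbs the load, which is why the choice of backing store matters.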

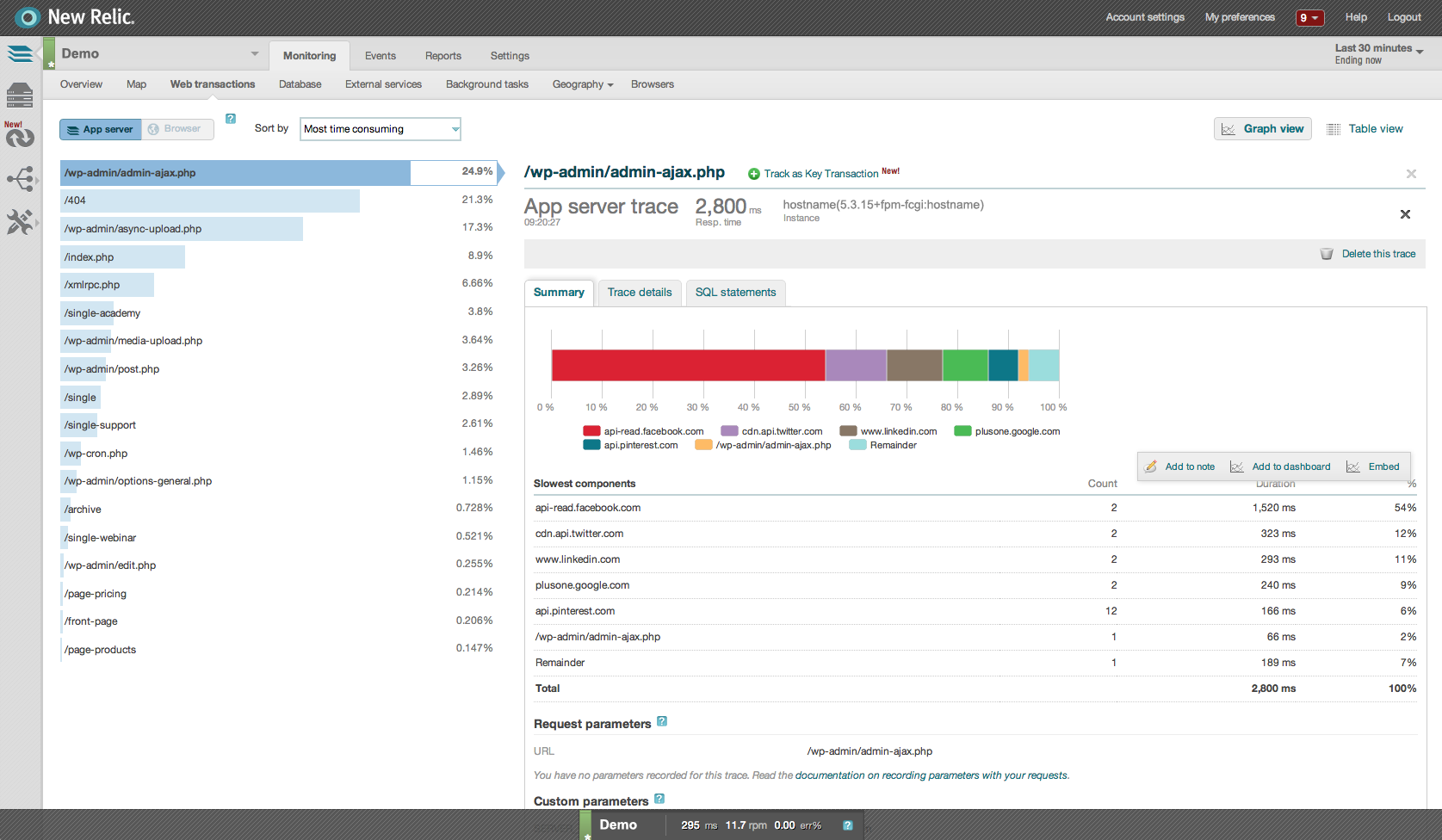

PHP

PHP is slow. That’s not the fault of WordPress, nor is it anyone else’s fault; technology simply keeps moving forward. What do we do about it? Use New Relic’s stack traces to get an empirical view on where optimizations can be made. Some rules of thumb to consider:

* The larger the memory usage, the greater the execution time

* Take advantage of PHP 5’s autoloading to reduce memory usage

* Use memoization and avoid loops wherever possible to reduce execution time and memory usage

* Avoid opening more files than necessary to reduce calls to disk and memory usage

* Use include and require rather than include_once and require_once
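The memoization rule of thumb can be sketched in a few lines. The example is written in Python rather than PHP purely for illustration, and `slugify_title` is a hypothetical function standing in for any pure computation that gets repeated during a request.

```python
from functools import lru_cache


@lru_cache(maxsize=1024)
def slugify_title(title: str) -> str:
    """Turn a title into a URL slug; the result is memoized per distinct title."""
    words = "".join(c.lower() if c.isalnum() else " " for c in title).split()
    return "-".join(words)


if __name__ == "__main__":
    for _ in range(3):
        print(slugify_title("Hello, World!"))  # computed once, then served from memory
    # cache_info() reports how many calls did real work (misses) versus cache hits
    print(slugify_title.cache_info())
```

The trade-off is the one noted in the first bullet: memoized results occupy memory, so it pays to bound the cache size, as `maxsize` does here.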

Opcode Cache

APC is a 'free, open, and robust framework for caching and optimizing PHP intermediate code.' That means that when added to your web server you can realize dramatic speed improvements (especially with well written code). APC keeps the compiled (intermediate) version of your code in memory so that scripts do not have to be parsed and compiled again on every request.

APC also offers an object store, allowing objects you create to be stored in memory right next door to the scripts that need them. The only drawback to this level of caching is that it’s local to each respective application server you have. That means that the cache for objects is rebuilt on each server (wasted CPU time) and, more importantly, purging of objects is tricky. There are multiple copies of objects everywhere, potentially with various 'states', but there are solutions to the purging issue.

Object Cache

WordPress’ object cache is where a lot of magic happens. There are various groups of objects that are generated by WordPress when it processes page requests. These groups serve numerous purposes, and depending on how you use WordPress, a very significant performance increase is realized by persistently storing the objects using memcached or APC backend stores for example.

Similar to APC, you can take advantage of the object cache either via the transients API mentioned earlier or directly. All of this depends on the wp_options table, so test carefully using New Relic’s reporting to ensure that the aggregate performance realizes positive results.

Fragment Cache

Some WordPress caching plugins implement this at the page caching level allowing for scenarios where portions of a given page can still be generated by PHP while larger portions of the page that are unchanged are cached and not regenerated. This definitely realizes some performance benefits -- especially when performed by a Content Delivery Network (CDN) that supports it (more on that later) -- but there are some opportunities for increasing performance using this technique at the application level, ultimately allowing you to have a fully dynamic eCommerce or personalized website without the need for page caching to speed things up.

A quick way to realize this is to use the latest version of W3 Total Cache (W3TC) along with the transient API mentioned earlier to cache the template parts that remain unchanged. W3TC will allow you to choose a data store other than MySQL, like memcached, which is designed for high-speed access from multiple application servers. So rather than rebuilding the unchanged parts on every page request, which already needs to fetch information about the visitor or other dynamic elements, MySQL is now able to focus on (and be tuned for) only the dynamic parts of your templates.
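As a rough illustration of the idea (not of W3TC's actual implementation), the following Python sketch caches one template part and renders the visitor-specific part on every request. All names are hypothetical, and the dictionary stands in for a shared store such as memcached.

```python
import html

fragment_cache = {}             # stand-in for a shared store such as memcached
render_counts = {"sidebar": 0}  # only here to show how often real work happens


def render_sidebar():
    """Pretend this is an expensive template part that rarely changes."""
    render_counts["sidebar"] += 1
    return "<aside>Popular posts</aside>"


def cached_fragment(key, render):
    """Return a cached fragment, rendering it only on a cache miss."""
    if key not in fragment_cache:
        fragment_cache[key] = render()
    return fragment_cache[key]


def render_page(visitor_name):
    """Assemble a page from a dynamic greeting and a cached static fragment."""
    greeting = "<p>Welcome back, %s</p>" % html.escape(visitor_name)  # always dynamic
    sidebar = cached_fragment("sidebar:v1", render_sidebar)           # cached
    return greeting + sidebar
```

Every visitor gets a personalized page, yet the expensive part is generated once. Changing the key (for example bumping `v1`) is one simple way to invalidate the fragment.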

Fragment caching will reduce your execution time by orders of magnitude as well as reduce the CPU utilization across an application pool of any size.

Minify

Its value may be reduced to some degree when HTTP 2.0 arrives, but until then, especially for mobile, minification is vital to improving end user experience. Reducing the file size and number of files (and therefore HTTP transactions) has a dramatic impact on the user’s experience with your site. Unfortunately, not all Cascading Style Sheets (CSS) or JavaScript (JS) lends itself to the various techniques in popular minify libraries. Developers should take steps using jQuery or WordPress Core to provide a fully optimized version of their files to take the load off the publishers.

There are various tools for WordPress that support the minification process. When making a selection, ensure you pick one that caches the minified files to disk, respects the differences between templates, supports local and remote files and most importantly is compatible with content delivery networks and mobile plugins.
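To show what 'reducing the file size and number of files' means in practice, here is a deliberately naive Python sketch. It is an assumption-laden toy, not how any particular minify library works: it only strips comments and whitespace, and it would mangle CSS that relies on whitespace inside string literals, which is exactly the kind of input that does not lend itself to simple techniques.

```python
import re


def minify_css(source: str) -> str:
    """Naive CSS minifier: strips comments and redundant whitespace only."""
    no_comments = re.sub(r"/\*.*?\*/", "", source, flags=re.DOTALL)
    collapsed = re.sub(r"\s+", " ", no_comments)
    # Remove spaces around braces and semicolons, and after colons and commas
    tightened = re.sub(r"\s*([{};])\s*", r"\1", collapsed)
    tightened = re.sub(r"([:,])\s+", r"\1", tightened)
    return tightened.strip()


def combine_and_minify(stylesheets):
    """Concatenate several stylesheets into one payload (one HTTP request)."""
    return "".join(minify_css(css) for css in stylesheets)
```

A real tool would tokenize the input properly and cache the combined output to disk so the work is done once rather than per request.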

Page Cache

The first line of defense and go-to tactic for scaling a typical website is the caching of pages. This particular optimization moves the problem of returning HTML via PHP and MySQL to the web server (e.g. Nginx or Apache), which adds orders of magnitude more scale because web servers are designed from the ground up to return files from disk to user agents. Some things to consider when caching pages are making sure that:

* Caches are primed to avoid traffic spikes

* Caches are purged when comments, revisions, etc. occur

* Caches are unique (if required) for various cookies, user agents and referrers
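The uniqueness and purging points above can be sketched as follows. This is a simplified Python model under stated assumptions: the dictionary stands in for cached files on disk, and `cache_key`, `get_page` and `purge_url` are hypothetical helpers rather than the API of any plugin.

```python
import hashlib

page_cache = {}  # cache key -> (url, html); stand-in for files on disk


def cache_key(url, is_mobile=False, vary_cookie=""):
    """Build a key that keeps variants (mobile, cookie-specific) separate."""
    raw = "|".join([url, "mobile" if is_mobile else "desktop", vary_cookie])
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()


def get_page(url, render, **variant):
    """Serve a page from cache, rendering it only when the variant is missing."""
    key = cache_key(url, **variant)
    if key not in page_cache:
        page_cache[key] = (url, render(url))
    return page_cache[key][1]


def purge_url(url):
    """Drop every cached variant of a URL, e.g. after a new comment is posted."""
    stale = [k for k, (cached_url, _) in page_cache.items() if cached_url == url]
    for k in stale:
        del page_cache[k]
    return len(stale)
```

Storing the URL next to the HTML is what makes purging tractable here: one event (a comment, a revision) can invalidate all variants of the affected page at once.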

New Relic provides some fantastic value around these pieces of the web server stack because it provides not only server monitoring but also analytics and monitoring around end user experience with your pages, which takes into account not just the HTML of your site but all of its assets.

Reverse Proxy

Reverse proxies often provide caching that sits between the web application and the public Internet. Their goal is to lighten the load on the web servers behind them. Reverse proxies are optimized for returning content generated by the underlying application. Varnish, Nginx or even Apache can be configured to provide reverse proxy functionality. As with other components of the stack, the goal is to optimize layers in the stack to do one job and do it well. Often there are multiple application servers that provide specific functionality; a reverse proxy can be used to marry those endpoints together under a single hostname. The browser caching policy (headers) set by your application then determines how the cache behaves, just as it would for the browser or CDN.
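To illustrate how the headers set by the application drive a shared cache, here is a simplified Python sketch of the decision a reverse proxy makes. It models only a handful of standard `Cache-Control` directives and is an assumption about the general approach, not the logic of Varnish, Nginx or Apache; `shared_cache_ttl` is a hypothetical helper.

```python
def shared_cache_ttl(cache_control: str):
    """Return how many seconds a shared cache may serve a response without
    revalidating, or None if (in this simplified model) it should not cache it."""
    directives = {}
    for part in cache_control.split(","):
        name, _, value = part.strip().partition("=")
        if name:
            directives[name.lower()] = value.strip('"')

    # Responses marked private, no-store or no-cache are left to the origin here
    if any(d in directives for d in ("no-store", "private", "no-cache")):
        return None

    # s-maxage applies to shared caches and takes precedence over max-age
    for name in ("s-maxage", "max-age"):
        if name in directives:
            try:
                return max(0, int(directives[name]))
            except ValueError:
                return None
    return None
```

For example, a response sent with `max-age=300, s-maxage=60` may be kept by browsers for five minutes while the proxy refreshes its copy every minute.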

Content Delivery Network

CDNs are yet another form of caching provided as a service for publishers. The performance key here is that they provide low latency access to media you choose to host with them. With a CDN, it doesn’t matter where your site is hosted. If you have visitors in other parts of the world, the CDN caches that content close to them and shaves seconds in aggregate off of the total page load time for your site.

As with all of the other types of caching, purging old content is tricky because we always want to make sure that users are downloading the latest versions of JS, CSS, images etc. So make sure that you have a mechanism to automate handling this before taking this step. Nearly every publisher will see a reduction in page load time using CDNs along with parallelization, image optimization and other techniques that help you extract the most from CDNs. Use New Relic’s RUM to track your progress.
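One common way to automate this, sketched here in Python as an assumption rather than as the mechanism of any specific plugin or CDN, is to embed a hash of the file's content in its name. When the content changes the URL changes, so stale copies are never requested again. `versioned_asset_name` is a hypothetical helper.

```python
import hashlib
from pathlib import PurePosixPath


def versioned_asset_name(path: str, content: bytes) -> str:
    """Embed a short content hash in the file name: app.js -> app.<hash>.js."""
    digest = hashlib.sha256(content).hexdigest()[:10]
    p = PurePosixPath(path)
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))


if __name__ == "__main__":
    print(versioned_asset_name("assets/app.js", b"console.log('v1');"))
    print(versioned_asset_name("assets/app.js", b"console.log('v2');"))
```

Because each version has its own URL, the files can be cached with a very long lifetime and no explicit purge request is needed when they change.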

Browser Cache

Leveraging the browser’s cache is one of the biggest performance wins available. Setting the expires headers will make sure that any caching proxies operated by Internet Service Providers (ISPs) also respect the policies of your content, so that stale content is automatically purged.
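As a small illustration, the following Python sketch builds the two standard response headers involved. The function name and the choice of a `public` policy are assumptions made for the example; in practice these headers are usually set in the web server or plugin configuration.

```python
from email.utils import formatdate


def caching_headers(max_age_seconds: int, now: float) -> dict:
    """Build caching headers for a static asset.

    `now` is a Unix timestamp, passed in so the output is reproducible.
    """
    return {
        # Modern clients and proxies use max-age
        "Cache-Control": f"public, max-age={max_age_seconds}",
        # Expires carries the same policy as an absolute HTTP date in GMT
        "Expires": formatdate(now + max_age_seconds, usegmt=True),
    }
```

Calling `caching_headers(86400, now=time.time())` would tell browsers and intermediate caches that the asset can be reused for one day.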

HTML5 provides very robust functionality that allows publishers to cache resources locally on devices, creating a 'native' app-like experience.

Things to Think About

End user experience is typically optimized by improving the performance of what is sent to the browser -- there are still lots of tools and tactics to consider, like mod_pagespeed and SPDY, neither of which is a silver bullet on its own because a well built site is always the starting point for success.

Do you have a site with many social layers? Are you using something like BuddyPress for forums, comments and personalization on a WordPress site? Because there’s more to great user experience than optimizing what is sent to your visitors, you have to ensure that the heavy lifting the server does is as performant as possible because a logged in user can never be sent a cached page.

Caching is nothing new; it’s at work all over the place. In computing, RAM is a cache. The trick is keeping caches fresh and purging them. That requires automation.

The Automation

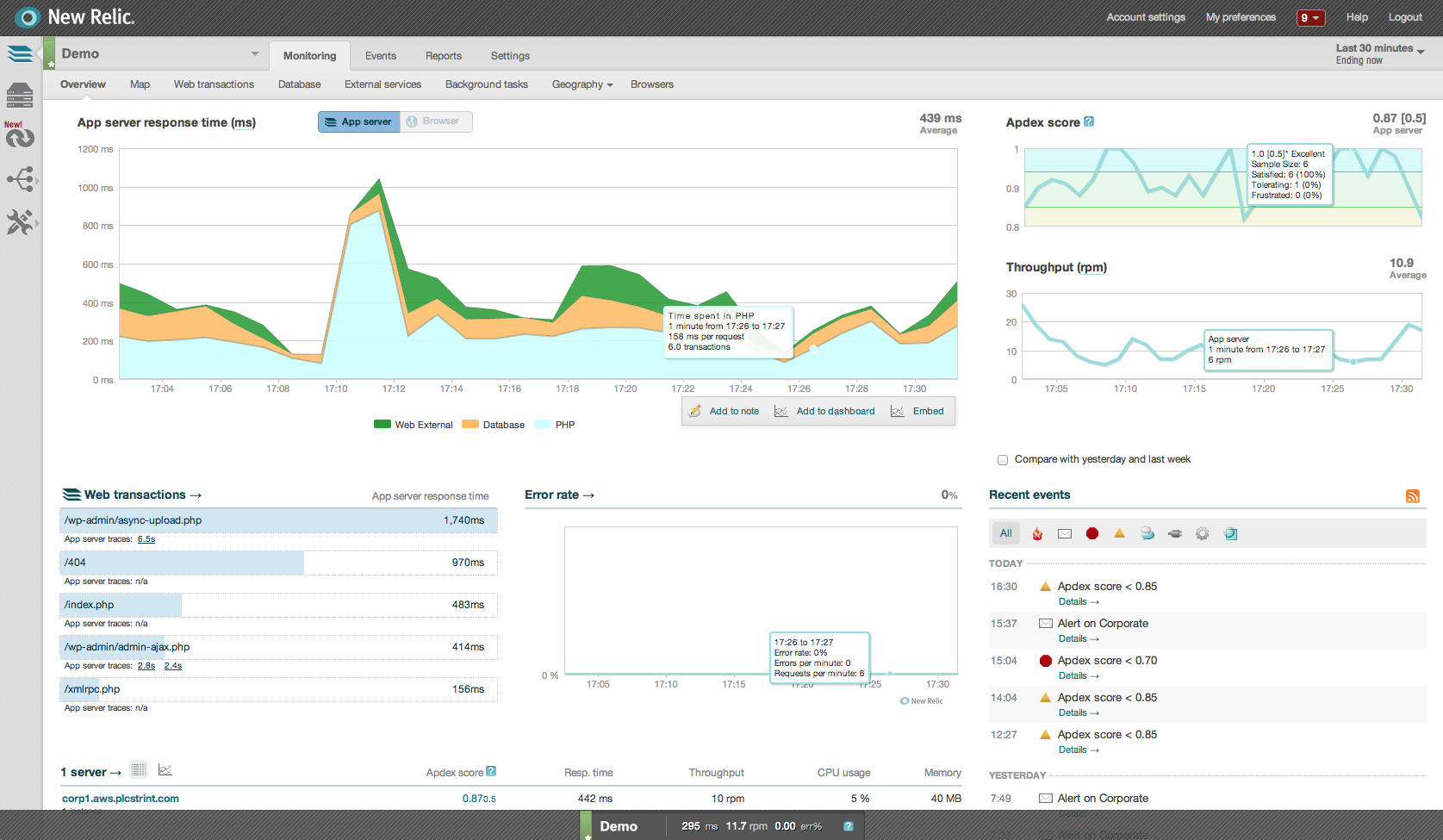

Enter New Relic. While working as CTO at Mashable.com, I started working with them when they first arrived on the scene. It was immediately clear they were more than an alternative to creating cachegrind files using Xdebug and instead offered real time insight into the behavior of not just WordPress itself, but the server software (third party resources, MySQL, etc.), as well as the end user experience.

New Relic closes the loop in Web Performance Optimization Automation by combining alerts, stack traces (insight into the processes the application is performing), visualizations, real time end user monitoring and server monitoring.

Using New Relic allows the publisher to focus on publishing rather than stretching WordPress into trying to achieve these things inside the same hosting account. In other words, plugins like P3, which are great for troubleshooting, fall by the wayside, as you no longer have to remember to run them and then use other tools to figure out how to address performance issues in your site.

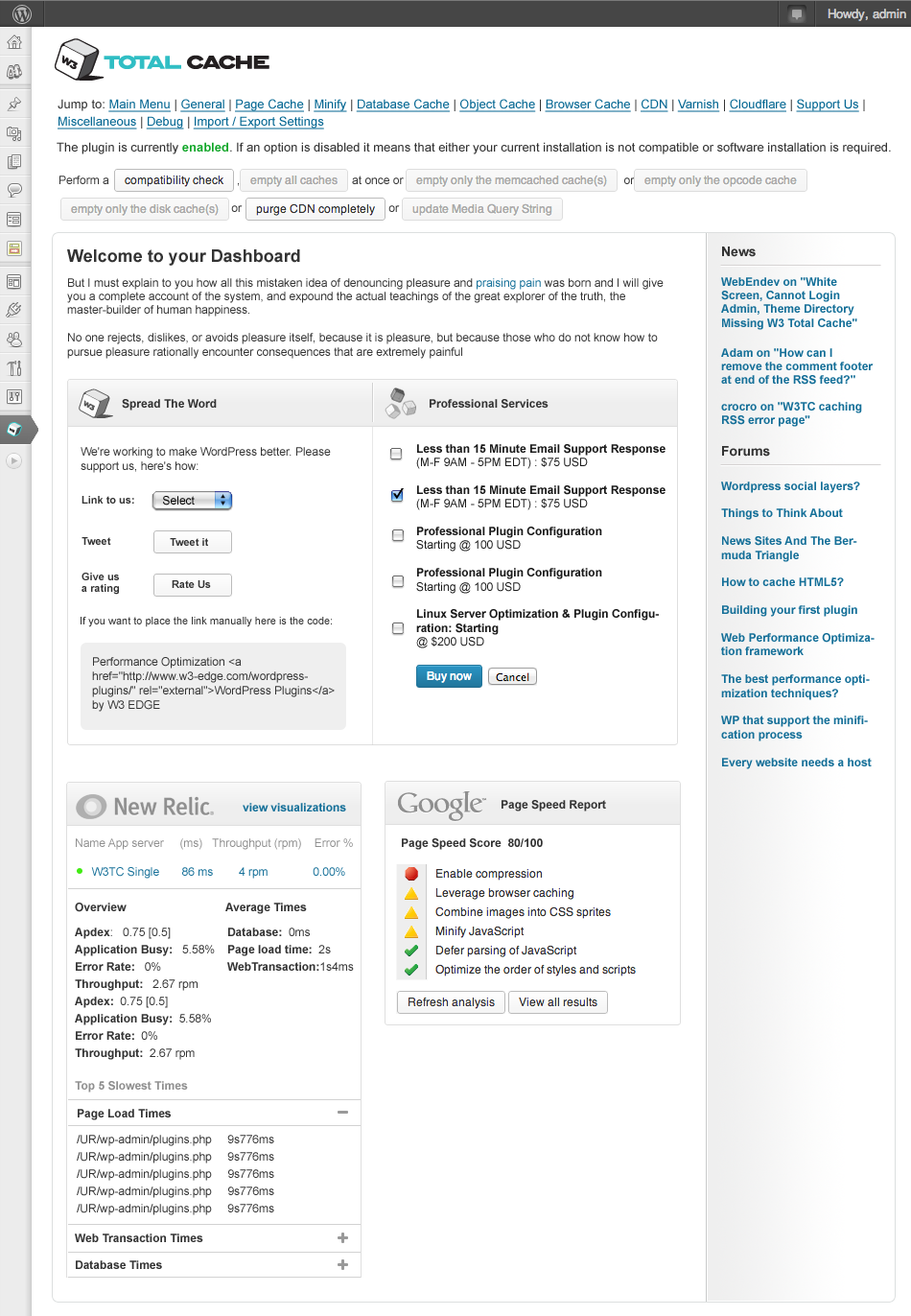

W3 Total Cache, the Web Performance Optimization framework for WordPress, now provides a robust integration with New Relic, exposing even more data and insight into the platform.

So, if you have a New Relic enabled host like Page.ly or Kinsta, you can put everything discussed here to use with ease. All of these performance optimization techniques and hundreds more best practices are packed and ready to be managed inside the W3 Total Cache framework.

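The views expressed in this blog are those of the author and do not necessarily reflect the official views of New Relic K.K. This blog may also contain links to external sites. New Relic makes no guarantees regarding the content of those linked sites.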