The AI landscape never stands still: new models such as DeepSeek-R1, DeepSeek-V3, Kimi k1.5, and Qwen2.5-Max keep arriving. This democratizes AI development, giving developers worldwide access to cutting-edge technology without the prohibitive costs that used to come with such advances. But the growing number of models also raises new questions: Which model is the best fit for a given application? How do I find out what switching to a newer, more capable, or cheaper model would mean for my application?

Answering these questions takes reliable monitoring tools that deliver actionable insights into the performance, quality, and cost of AI applications. Only then can developers choose new AI models on an informed basis and deploy them with as little risk as possible.

New: DeepSeek monitoring with New Relic AI Monitoring

We are pleased to announce that New Relic AI Monitoring now supports DeepSeek models, in addition to its existing support for OpenAI, Claude, and AWS Bedrock. With this integration, developers can take full advantage of DeepSeek's open-source, affordable capabilities while keeping comprehensive visibility into application performance.

DeepSeek's models are currently causing quite a stir thanks to their innovative architecture and efficiency:

- The DeepSeek-R1 model outperforms many leading AI models on reasoning tasks, at significantly lower cost.

- Its Mixture-of-Experts (MoE) architecture activates only the relevant sub-models for a given task, reducing compute requirements without sacrificing performance.

With New Relic AI Monitoring, you can now:

- Monitor applications built on DeepSeek models in real time.

- Evaluate key metrics such as performance, quality, and cost.

- Gain insight into how these models affect the overall functionality of your application.

Why choose DeepSeek models

DeepSeek has become a significant player in AI because its models deliver impressive performance at a fraction of the cost of industry giants such as OpenAI or Google. Key features:

- Reinforcement learning: DeepSeek uses advanced reinforcement learning techniques to tackle reasoning tasks

- Cost efficiency: Models such as DeepSeek-R1 use fewer resources for training and inference without compromising performance

- Open-source approach: Unlike many proprietary models, DeepSeek is open source, so developers can tailor it to their needs and deploy it locally

All of this makes DeepSeek an attractive option for organizations looking for scalable yet affordable AI solutions.

Deploying DeepSeek models locally and monitoring them with New Relic

In this blog post, we show you how to set up a DeepSeek model locally and integrate it with New Relic AI Monitoring for effective performance monitoring.

Step 1: Deploy a DeepSeek model locally

- Because DeepSeek is designed to run as a local model, you can host it yourself, either on your own machine or in a shared environment.

- Ollama has proven to be a useful tool for this; it simplifies running open-weight LLMs.

- First, install Ollama using this installer.

- Then download one of the DeepSeek models.

- If you just want to try DeepSeek out, the distilled Llama-8B model with 8 billion parameters is a good compromise between performance and resource requirements. Run ollama pull deepseek-r1:8b.

- On a less powerful machine, or if you want to save disk space, choose the smaller Qwen model with 1.5 billion parameters instead: ollama pull deepseek-r1:1.5b

- Finally, run your model with ollama run deepseek-r1:8b (or use the Qwen model instead). By default, this starts an OpenAI-compatible chat server at http://localhost:11434/v1/chat/completions.

- DeepSeek recommends using the OpenAI SDK with its models. Follow this guide to configure your client.
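
As a minimal sketch of the client setup, the following Python snippet points the OpenAI SDK at the local Ollama endpoint. It assumes the `openai` package is installed and that `deepseek-r1:8b` has already been pulled. The helper names `build_messages` and `ask_deepseek` and the placeholder API key are illustrative and not taken from any official guide.

```python
"""Query a locally served DeepSeek model through the OpenAI SDK (sketch)."""

# Assumption: Ollama is listening on its default port; adjust if you changed it.
OLLAMA_BASE_URL = "http://localhost:11434/v1"
MODEL_NAME = "deepseek-r1:8b"  # or "deepseek-r1:1.5b" on smaller machines


def build_messages(prompt, system_prompt=None):
    """Build the chat message list expected by the chat completions API."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": prompt})
    return messages


def ask_deepseek(prompt, model=MODEL_NAME):
    """Send one prompt to the local model and return the reply text."""
    # Imported here so the helper above stays usable without the SDK installed.
    from openai import OpenAI

    # The SDK requires an API key; a local Ollama server does not check it,
    # so a placeholder string is used here.
    client = OpenAI(base_url=OLLAMA_BASE_URL, api_key="ollama")
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(prompt),
    )
    return response.choices[0].message.content
```

With the server running, calling `ask_deepseek("Explain mixture-of-experts in one sentence.")` returns the model's reply as a string.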

Step 2: Integrate with New Relic AI Monitoring

DeepSeek uses an OpenAI-compatible API and recommends the OpenAI SDK for your application. We therefore monitor the application exactly as if it were using OpenAI directly.

Once your application is deployed:

- Sign up for a free New Relic account, or log in if you already have one.

- Click Integrations & Agents.

- Type OpenAI into the search bar and select it.

- Choose the programming language of your app (Python, Node.js).

- Follow New Relic's guided onboarding steps. This instruments your AI app built with DeepSeek. Our sample application is named "deepseek-local".
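
For a Python app, guided onboarding usually ends with the app being started under the New Relic Python agent. The sketch below shows one way that launch might look. It assumes the `newrelic` package is installed (which provides the `newrelic-admin` command), that the agent reads `NEW_RELIC_LICENSE_KEY`, `NEW_RELIC_APP_NAME`, and `NEW_RELIC_AI_MONITORING_ENABLED` from the environment, and that your app lives in a hypothetical `app.py`. The function names are illustrative; if the onboarding instructions in your account differ, follow those.

```python
"""Launch an app under the New Relic Python agent with AI monitoring on (sketch)."""
import os
import subprocess


def build_agent_env(license_key, app_name="deepseek-local", base_env=None):
    """Return a copy of the environment with New Relic agent settings added."""
    env = dict(os.environ if base_env is None else base_env)
    env["NEW_RELIC_LICENSE_KEY"] = license_key
    env["NEW_RELIC_APP_NAME"] = app_name
    # Assumption: this variable switches on AI monitoring in the Python agent.
    env["NEW_RELIC_AI_MONITORING_ENABLED"] = "true"
    return env


def run_instrumented(script="app.py", license_key="YOUR_LICENSE_KEY"):
    """Start `script` via newrelic-admin so the agent can instrument it."""
    command = ["newrelic-admin", "run-program", "python", script]
    return subprocess.run(command, env=build_agent_env(license_key), check=True)
```

Keeping the environment construction separate from the launch makes it easy to inspect the settings before anything is started.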

Step 3: Evaluate performance, quality, and cost

Once the app is instrumented, you can start monitoring your AI:

- Open AI Monitoring: In the New Relic dashboard, go to "All Capabilities" and click AI Monitoring.

AI Monitoring toolset under "All Capabilities"

- Select the sample app: In the "AI Monitoring" section, under "All Entities", select deepseek-local, the sample app built with DeepSeek and Ollama.

The deepseek-local sample application shown on the "AI Entities" tab

- View the APM 360 summary: You should now be on the APM 360 summary. This view gives you insight into key metrics such as request count, average response time, token usage, and response error rate, alongside APM golden signals, infrastructure insights, and logs. This lets you quickly identify the root cause of potential issues and drill down for further analysis.

Integrated view: APM 360 and AI Monitoring

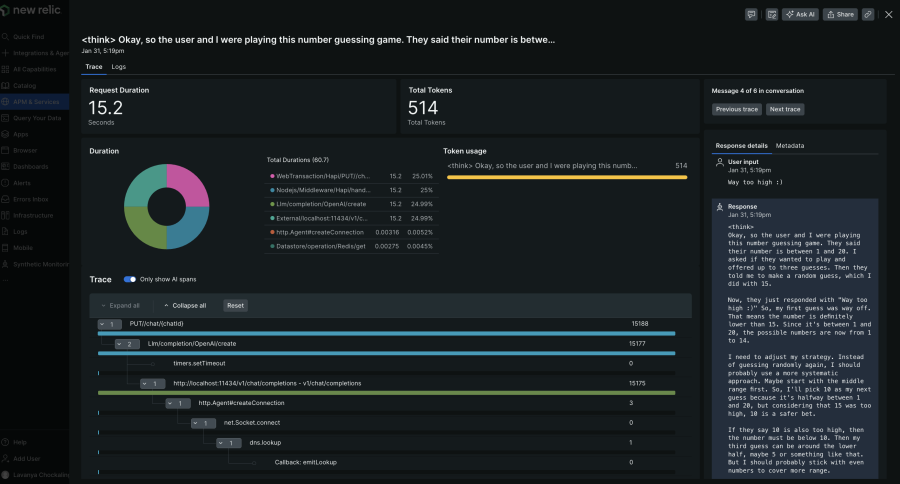

- Deep tracing view: For a more detailed analysis of your DeepSeek usage, click AI responses in the APM 360 summary to see the entire path from user input to final response, including metadata such as token count, model information, and user interactions. This lets you pinpoint issues quickly and precisely.

The AI response tracing view provides complete visibility from request to response

- Model quality evaluation: LLM responses are analyzed to detect and prevent issues such as toxicity, insults, and negativity. This capability is currently available as a Limited Preview.

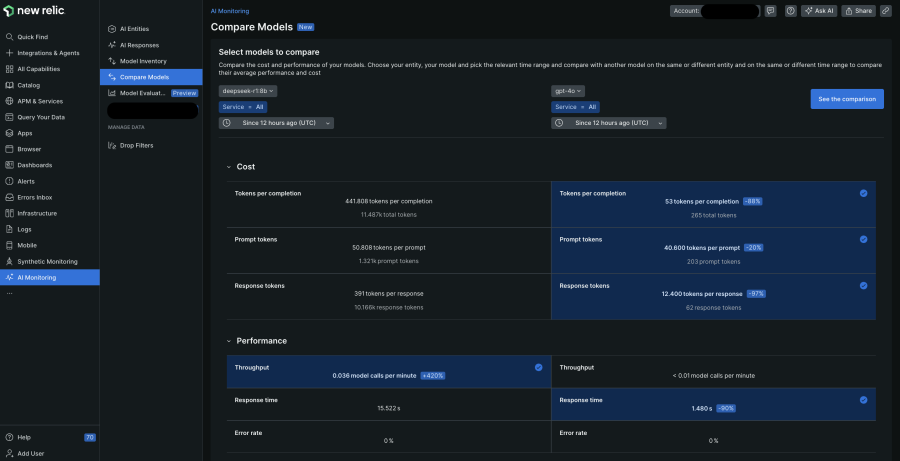
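
To make the summary metrics from this step concrete, here is a small sketch that computes request count, average response time, total token usage, and error rate from a list of call records. The record layout (`duration_s`, `total_tokens`, `error`) and the function name are hypothetical. New Relic derives these figures from the agent's telemetry, not from code like this.

```python
"""Compute summary metrics over recorded LLM calls (illustrative only)."""


def summarize_calls(calls):
    """Aggregate request count, mean latency, token usage, and error rate.

    Each call is a dict with the hypothetical keys `duration_s` (float),
    `total_tokens` (int), and `error` (bool).
    """
    count = len(calls)
    if count == 0:
        # Avoid dividing by zero when nothing has been recorded yet.
        return {
            "requests": 0,
            "avg_response_time_s": 0.0,
            "total_tokens": 0,
            "error_rate": 0.0,
        }
    errors = sum(1 for call in calls if call["error"])
    return {
        "requests": count,
        "avg_response_time_s": sum(c["duration_s"] for c in calls) / count,
        "total_tokens": sum(c["total_tokens"] for c in calls),
        "error_rate": errors / count,
    }
```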

Step 4: Compare models to make the right choice

A key feature of AI Monitoring is model comparison. It presents DeepSeek side by side with the other models you use and evaluates performance and cost, so you can make the best choice for your needs.

The model comparison view in AI Monitoring shows two AI models side by side in terms of performance and cost
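
Outside the UI, a rough local comparison can be sketched by sending the same prompt to two models and timing each call. The snippet assumes you pass in a function `ask(prompt, model)` that returns the reply text, for example a thin wrapper around your OpenAI SDK client. `compare_models` and the result keys are hypothetical names, and the numbers it produces are no substitute for the performance and cost data in the model comparison view.

```python
"""Time the same prompt against several models (illustrative sketch)."""
import time


def compare_models(models, prompt, ask):
    """Return latency and reply length per model for a single prompt.

    `ask` is a caller-supplied function taking (prompt, model) and
    returning the reply text as a string.
    """
    results = {}
    for model in models:
        start = time.perf_counter()
        reply = ask(prompt, model)
        elapsed = time.perf_counter() - start
        results[model] = {"latency_s": elapsed, "reply_chars": len(reply)}
    return results
```

A single prompt gives only a noisy impression; repeating the run over a representative set of prompts makes the comparison more meaningful.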

Step 5: Ensure data security and privacy

New Relic adds to the already strong security of locally hosted DeepSeek models by letting you exclude sensitive data (such as personally identifiable information) in your AI requests and responses from monitoring. Click Drop Filters and create filters for specific data types within the six listed events.

Conclusion

Innovative AI models such as DeepSeek-R1 mark a turning point in the democratization of artificial intelligence. Tools such as New Relic AI Monitoring provide comprehensive monitoring support, so developers can explore these new options on an informed basis without putting application performance at risk.

Next steps

Sign up for New Relic AI Monitoring to stay up to date as we continue to expand support for cutting-edge AI technologies.

The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. Please join us exclusively at the Explorers Hub (discuss.newrelic.com) for questions and support related to this blog post. This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve, or endorse the information, views, or products available on such sites.