The AI landscape is constantly evolving with the emergence of new models like DeepSeek-R1, DeepSeek-V3, Kimi k1.5, and Qwen2.5-Max. These advancements are democratizing AI development, giving innovators and developers worldwide access to cutting-edge technologies without the prohibitive costs traditionally associated with such breakthroughs. However, the growing number of available models raises critical questions: Which model is best suited for a specific application? How can one assess the impact of switching to a newer, higher-performing, or more cost-effective model?

To address these concerns, it is essential to have robust monitoring tools that provide actionable insights into AI application performance, quality, and cost. Such tools let developers confidently adopt or transition between AI models while minimizing risk.

Introducing DeepSeek monitoring with New Relic AI monitoring

We are excited to announce that New Relic now extends its AI monitoring support to DeepSeek models, adding to our existing coverage of OpenAI, Claude, and AWS Bedrock. This integration allows developers to fully leverage DeepSeek’s open-source, cost-effective capabilities while maintaining comprehensive visibility into application performance.

DeepSeek’s models have gained significant attention due to their innovative architecture and efficiency. For instance:

- The DeepSeek-R1 model outperforms many leading AI models in reasoning tasks while maintaining a significantly lower cost structure.

- Its mixture-of-experts (MoE) architecture activates only relevant submodels for specific tasks, reducing computational overhead without compromising performance.

With New Relic AI monitoring, you can now:

- Monitor applications built on DeepSeek models in real time.

- Evaluate key metrics such as performance, quality, and cost.

- Gain insights into how these models impact your application’s overall functionality.

Why choose DeepSeek models?

DeepSeek has emerged as a formidable player in the AI space by delivering high-performing models at a fraction of the cost required by larger competitors like OpenAI or Google. Key features include:

- Reinforcement learning: DeepSeek employs advanced reinforcement learning techniques for reasoning tasks.

- Cost efficiency: Models like DeepSeek-R1 use fewer resources during training and inference while maintaining state-of-the-art performance.

- Open source accessibility: Unlike many proprietary models, DeepSeek’s open-source nature allows developers to customize and deploy locally without restrictions.

These attributes make DeepSeek an attractive choice for businesses seeking scalable AI solutions without incurring exorbitant expenses.

Setting up and monitoring DeepSeek models locally with New Relic

In this blog post, we’ll guide you through setting up a DeepSeek model locally and integrating it with New Relic AI monitoring to monitor its performance effectively.

Step 1: Deploying a DeepSeek model locally

- Because DeepSeek released its model weights openly, you can host a model yourself, either on a personal machine or in a shared environment.

- One easy way to run DeepSeek models is with Ollama, a tool for running open-weight large language models.

- First, install Ollama using its installer here.

- Next, download one of the DeepSeek models.

- If you’re just testing DeepSeek, the 8B parameter Llama distilled model should strike a good balance between performance and resource demands. Run `ollama pull deepseek-r1:8b`.

- On a lower-power machine, or if you want to save disk space, try the smaller Qwen 1.5B parameter model: `ollama pull deepseek-r1:1.5b`.

- Finally, run your model with `ollama run deepseek-r1:8b` (or substitute the Qwen model if you downloaded that). By default, Ollama serves an OpenAI-compatible chat endpoint at http://localhost:11434/v1/chat/completions.

- DeepSeek recommends using the OpenAI SDK to interact with its model. To configure your client, follow the instructions here.
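Before wiring up the OpenAI SDK, it can help to confirm that the local endpoint answers at all. The following is a minimal sketch that uses only the Python standard library to build and send an OpenAI-style chat request to the URL above. The helper names `build_chat_request` and `ask` are our own, and the sketch assumes Ollama is listening on its default port with `deepseek-r1:8b` already pulled. It is a smoke test only; your application should still use the OpenAI SDK, since that is what the New Relic integration instruments.

```python
import json
import urllib.request

# Assumption: Ollama is serving on its default port, as described above.
OLLAMA_CHAT_URL = "http://localhost:11434/v1/chat/completions"


def build_chat_request(prompt, model="deepseek-r1:8b", url=OLLAMA_CHAT_URL):
    """Build an OpenAI-style chat completion request for the local server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model="deepseek-r1:8b", timeout=120):
    """Send the prompt to the local server and return the reply text."""
    request = build_chat_request(prompt, model=model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # OpenAI-compatible responses carry the reply in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the model running, calling `ask("Why is the sky blue?")` should return the model's answer as a string. If you downloaded the smaller model, pass `model="deepseek-r1:1.5b"` instead.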

Step 2: Integrating with New Relic AI monitoring

DeepSeek uses an OpenAI-compatible API and suggests using the OpenAI SDK in your application. We’ll therefore monitor the application exactly as we would an application that uses OpenAI directly.

Once your application is deployed:

- Sign up for a free New Relic account or log in if you have one already.

- Click on Integrations & Agents.

- In the search bar, type OpenAI and then select it.

- Choose your application’s programming language (Python, Node.js).

- Follow the guided onboarding process provided by New Relic. This process will walk you through instrumenting your AI application built with DeepSeek. We’ve named our sample app “deepseek-local”.
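For a Python application, the guided onboarding ends with an agent configuration file. As a rough sketch of what that file needs, the helper below renders a minimal `newrelic.ini` with AI monitoring switched on. The function name `render_agent_config` is hypothetical, and the setting names reflect our understanding of the Python agent configuration (AI monitoring is assumed to rely on distributed tracing being enabled); treat the onboarding flow and the agent documentation as the authoritative source.

```python
import configparser
import io


def render_agent_config(license_key, app_name="deepseek-local"):
    """Render a minimal newrelic.ini body with AI monitoring turned on."""
    config = configparser.ConfigParser()
    config["newrelic"] = {
        "license_key": license_key,
        "app_name": app_name,
        # Assumption: AI monitoring depends on distributed tracing.
        "distributed_tracing.enabled": "true",
        "ai_monitoring.enabled": "true",
    }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()
```

After saving the rendered text as `newrelic.ini`, you would typically start the app through the agent's wrapper, for example `NEW_RELIC_CONFIG_FILE=newrelic.ini newrelic-admin run-program python app.py`, where `app.py` stands in for your own entry point.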

Step 3: Evaluating performance, quality, and cost

Once your application is instrumented, you can start using AI monitoring:

- Access AI monitoring: Navigate to All Capabilities in your New Relic dashboard and click on AI Monitoring.

AI Monitoring capability under "All Capabilities"

- Select the sample app: In the AI Monitoring section, under All Entities, select the deepseek-local sample app built using DeepSeek and Ollama.

The deepseek-local sample app showing up under the AI Entities tab

- View APM 360 summary: After navigating, you will be on the APM 360 summary. This view gives you insights into key metrics like the number of requests, average response time, token usage, and response error rates, alongside your APM golden signals, infrastructure insights, and logs. You can quickly identify the source of issues and drill down for further analysis.

Integrated view of APM 360 and AI Monitoring

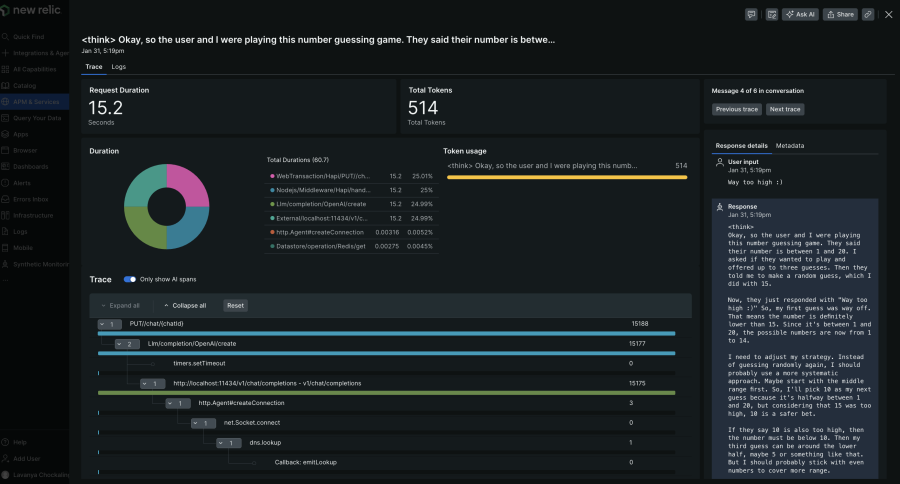
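To make the summary metrics concrete, here is an illustrative sketch of how request count, average response time, token usage, and error rate can be derived from a set of recorded LLM calls. The `LlmCall` record and `summarize` function are hypothetical names for this example and are not New Relic's internal data model or API.

```python
from dataclasses import dataclass


@dataclass
class LlmCall:
    """One recorded model call (hypothetical shape for illustration)."""
    duration_ms: float
    total_tokens: int
    failed: bool = False


def summarize(calls):
    """Aggregate per-call records into the four headline metrics."""
    count = len(calls)
    if count == 0:
        return {"requests": 0, "avg_response_ms": 0.0,
                "total_tokens": 0, "error_rate": 0.0}
    return {
        "requests": count,
        "avg_response_ms": sum(c.duration_ms for c in calls) / count,
        "total_tokens": sum(c.total_tokens for c in calls),
        "error_rate": sum(1 for c in calls if c.failed) / count,
    }
```

For two calls taking 100 ms and 300 ms, with 50 and 150 tokens and one failure, the summary reports 2 requests, a 200 ms average, 200 tokens, and a 0.5 error rate.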

- Deep tracing view: For more detailed analysis related to your DeepSeek usage, click on AI responses in the APM 360 summary to see the entire path from user input to final response, including metadata like token count, model information, and user interactions. This helps in quickly identifying root causes of issues.

AI Responses tracing view that provides end-to-end visibility of the request and response

- Model quality evaluation: This view analyzes LLM responses to help you identify and mitigate issues such as toxicity, insults, and negativity. It is currently available in limited preview.

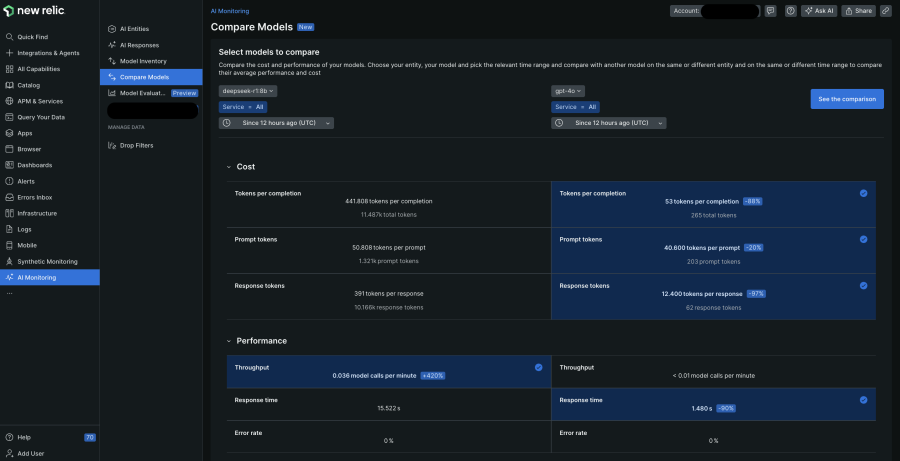

Step 4: Compare models to pick the right one

Model comparison is a key part of AI monitoring. It allows for a simple comparison of DeepSeek models with other models you’ve used, evaluating performance and cost to make the best choice for your needs.

AI Monitoring's unique model comparison view that shows how two AI models compare against performance and cost
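The comparison boils down to weighing cost against speed for the same workload. The sketch below shows one simple way to reason about it, assuming you know each model's token usage, average response time, and per-token price. The `ModelUsage` class, the `compare` function, and any prices you plug in are hypothetical and for illustration only; they are not taken from New Relic or from any provider's price list.

```python
from dataclasses import dataclass


@dataclass
class ModelUsage:
    """Observed usage for one model (hypothetical shape for illustration)."""
    name: str
    avg_response_ms: float
    prompt_tokens: int
    completion_tokens: int
    input_usd_per_million: float   # placeholder price, not a real quote
    output_usd_per_million: float  # placeholder price, not a real quote

    def cost_usd(self):
        """Total cost of the observed tokens at the given prices."""
        return (self.prompt_tokens * self.input_usd_per_million
                + self.completion_tokens * self.output_usd_per_million) / 1_000_000


def compare(a, b):
    """Report which of two models was cheaper and which was faster."""
    return {
        "cheaper": min(a, b, key=lambda m: m.cost_usd()).name,
        "faster": min(a, b, key=lambda m: m.avg_response_ms).name,
    }
```

A model can win on one axis and lose on the other, which is why it helps to look at both side by side rather than at a single number.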

Step 5: Security and data privacy

Complementing the security advantage of locally hosted DeepSeek models, New Relic allows you to exclude sensitive data (such as personally identifiable information, or PII) in your AI requests and responses from monitoring. Click on Drop Filters and then create filters to target specific data types within the six events offered.

Conclusion

The emergence of innovative AI models like DeepSeek-R1 marks a pivotal moment in the democratization of artificial intelligence. With tools like New Relic AI monitoring providing comprehensive monitoring support, developers can confidently explore these new horizons while ensuring optimal application performance.

Next steps

Sign up for New Relic AI monitoring and stay ahead of this rapidly evolving field as we continue expanding support for cutting-edge AI technologies.

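The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. Please join us exclusively at the Explorers Hub (discuss.newrelic.com) for questions and support related to this blog post. This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve, or endorse the information, views, or products available on such sites.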