Imagine this familiar scenario: a new incident hits production, your team gets paged, and more and more people join the conference bridge or the Slack channel to troubleshoot. There's a lot of chatter as everyone tries to figure out what went wrong.

But almost immediately, it feels like far more people are involved than necessary. If I had to put a number on it, I'd say about 50% more. There's too much noise and not enough clear information about the root cause. Involving so many people isn't efficient, and it lengthens the time it takes to resolve the incident.

As an incident first responder, my top priority is always to understand what went wrong and where, so that I can involve the right people. At the start, these questions aren't always easy to answer, so we bring in more people, thinking we'll collaborate and mitigate the incident quickly. But it never works out that way. Instead, it ends up reducing the team's productivity and effectiveness.

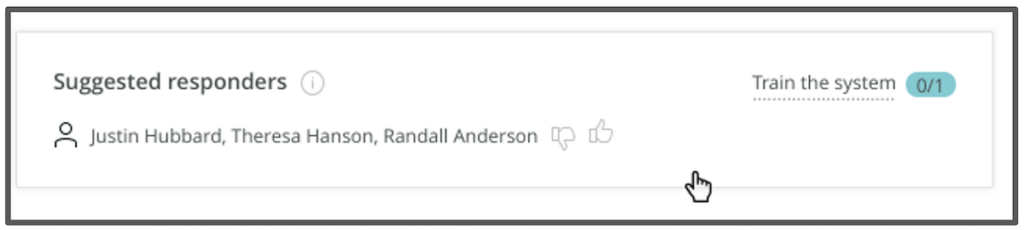

In this blog post, I'll show you how to address incidents and resolve production issues faster with a feature in our Applied Intelligence product, and I'll share some details about how it works. The feature, called suggested responders, is part of the Incident Intelligence capability. It applies machine learning (ML) to your past interactions to identify, in real time, the users in your organization who are most relevant to helping resolve each new incident.

If you’re an Incident Intelligence customer, suggested responders is automatically available with no configuration or setup needed.

Powered by machine learning

When you opt in to Incident Intelligence, New Relic automatically trains an ML model on your account's historical incident data and on how users interact with New Relic. Training completes as users interact with the system; from then on, new incidents are augmented in real time with the people best suited to help resolve them. The list of suggested responders appears both on the New Relic issue page and in the issue notification payload, so you can see it wherever you usually respond to incidents.

You can give feedback on each suggestion with a thumbs-up or thumbs-down vote, which helps the model become more accurate.

How the machine learning model works

The suggested responders model uses past violations, combined with user analytics data, to predict the most likely responders for new violations. The model consists of three phases: supervised pattern recognition, label spreading, and a recommender engine.

Supervised pattern recognition: Based on how users interacted with the New Relic platform in the past, this step predicts whether a given user closed a given violation. It first curates a labeled dataset that includes only violations for which we know for certain which user closed them. It then trains a binary classifier that uses each user's actions as features and whether that user closed the violation as the target. Suggested responders uses this trained model in the next step.
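As a rough illustration of this step, here is a minimal sketch that trains a binary classifier in plain Python. The post does not say which classifier or features New Relic uses, so the choice of logistic regression, the per-user action counts used as features, the feature order, and the example data are all assumptions.

```python
import math
from dataclasses import dataclass

# Hypothetical feature order: viewed_issue, acknowledged, opened_entity, ran_query.
# The real features used by suggested responders are not published.


@dataclass
class Example:
    features: list  # count of each action for one (violation, user) pair
    closed: int     # target: 1 if this user closed the violation, else 0


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w, b, features):
    """Estimated probability that the user closed the violation."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, features)) + b)


def train_logistic(examples, lr=0.3, epochs=2000):
    """Fit a logistic-regression binary classifier with full-batch gradient descent."""
    n, m = len(examples[0].features), len(examples)
    w, b = [0.0] * n, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n, 0.0
        for ex in examples:
            err = predict_proba(w, b, ex.features) - ex.closed  # d(log loss)/dz
            for i, xi in enumerate(ex.features):
                grad_w[i] += err * xi
            grad_b += err
        # Step against the mean gradient of the log loss.
        w = [wi - lr * gi / m for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b


# Curated labeled dataset: only violations whose closing user is known.
labeled = [
    Example([3, 1, 2, 1], 1),
    Example([0, 0, 1, 0], 0),
    Example([4, 1, 1, 2], 1),
    Example([1, 0, 0, 0], 0),
]
w, b = train_logistic(labeled)
```

Logistic regression is used here only because it is a simple, self-contained binary classifier; any classifier that outputs a probability would fit the role this step plays.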

Label spreading: This step increases the coverage of our labeled dataset by augmenting it with violations for which we don't know the user who closed them. We construct an unlabeled dataset that links each violation to the user actions that occurred while it was still open, and then run that dataset through the trained model. The output of this stage is a responders table that assigns to each violation-user pair the likelihood that the user closed the violation. The next step uses this table to identify the users most likely to resolve a given violation. We update the table periodically so that it reflects the most recent violations.
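A minimal sketch of this step follows, assuming violations with open and close timestamps, a log of user actions, and per-user action counts as features. The weights and bias are made-up stand-ins for a classifier like the one trained in the previous step, and all names are hypothetical. As described here, the step scores unlabeled violation-user pairs with the trained classifier (similar to pseudo-labeling); the sketch does not implement graph-based label spreading such as scikit-learn's `LabelSpreading`.

```python
import math
from collections import Counter, defaultdict
from dataclasses import dataclass

# Hypothetical feature order and classifier weights, standing in for the
# model trained in the supervised step; the real features are not published.
ACTIONS = ["viewed_issue", "acknowledged", "opened_entity", "ran_query"]
WEIGHTS = [0.9, 0.6, 0.3, 0.4]
BIAS = -3.0


@dataclass
class Violation:
    violation_id: str
    opened_at: int  # epoch seconds
    closed_at: int  # epoch seconds


@dataclass
class UserAction:
    user: str
    action: str
    at: int  # epoch seconds


def predict_proba(features):
    """Logistic score: estimated probability that the user closed the violation."""
    z = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def build_responders_table(unlabeled, actions):
    """Map each (violation_id, user) pair to the likelihood that the user closed it."""
    table = {}
    for v in unlabeled:
        # Link the violation to the actions each user took while it was still open.
        per_user = defaultdict(Counter)
        for a in actions:
            if v.opened_at <= a.at <= v.closed_at:
                per_user[a.user][a.action] += 1
        for user, counts in per_user.items():
            features = [counts[name] for name in ACTIONS]
            table[(v.violation_id, user)] = predict_proba(features)
    return table


unlabeled = [Violation("v1", opened_at=100, closed_at=400)]
actions = [
    UserAction("alice", "viewed_issue", 120),
    UserAction("alice", "acknowledged", 130),
    UserAction("alice", "ran_query", 200),
    UserAction("bob", "viewed_issue", 150),
    UserAction("carol", "viewed_issue", 500),  # after v1 closed, so ignored
]
table = build_responders_table(unlabeled, actions)
```

Rebuilding the table over a recent window of violations is one simple way to mirror the periodic update mentioned above.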

Recommender engine: The third and final step uses the responders table generated in the previous stage to suggest responders for new violations. When a new violation occurs, the model computes a similarity score between it and each violation in the responders table. The similarity score is based on many fields, such as the product type, target ID, policy ID, condition ID, golden signal, and components of the new violation. We then use the similarity score as a weight to compute a weighted score for each user across all the violations in the table. This weighted score can be interpreted as the (predicted) level of involvement a user had with similar violations in the past. The model returns the users with the highest weighted scores: these are the suggested responders.
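The scoring described above can be sketched as follows. The post lists the fields that similarity is based on but not how they are combined, so the per-field match (exact equality for scalar fields, Jaccard overlap for the set of components), the equal weighting of fields, the dictionary keys, and the `top_k` cutoff are all assumptions.

```python
from collections import defaultdict

# Hypothetical keys for the fields the post says similarity is based on.
SCALAR_FIELDS = ["product", "target_id", "policy_id", "condition_id", "golden_signal"]


def similarity(a, b):
    """Average per-field match in [0, 1]: exact match for scalar fields,
    Jaccard overlap for the set of components."""
    score = sum(1.0 for f in SCALAR_FIELDS
                if a.get(f) is not None and a.get(f) == b.get(f))
    ca, cb = set(a.get("components", [])), set(b.get("components", []))
    if ca | cb:
        score += len(ca & cb) / len(ca | cb)
    return score / (len(SCALAR_FIELDS) + 1)


def suggest_responders(new_violation, history, responders_table, top_k=3):
    """Rank users by similarity-weighted closing likelihood across past violations."""
    sims = {vid: similarity(new_violation, past) for vid, past in history.items()}
    scores = defaultdict(float)
    for (vid, user), likelihood in responders_table.items():
        scores[user] += sims[vid] * likelihood  # similarity acts as the weight
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    return [user for user, score in ranked[:top_k] if score > 0]


history = {
    "v1": {"product": "APM", "target_id": "checkout", "policy_id": "p1",
           "condition_id": "c1", "golden_signal": "latency", "components": ["db"]},
    "v2": {"product": "Infra", "target_id": "host-7", "policy_id": "p2",
           "condition_id": "c9", "golden_signal": "saturation", "components": ["cpu"]},
}
responders_table = {("v1", "alice"): 0.9, ("v1", "bob"): 0.2, ("v2", "bob"): 0.8}
incoming = {"product": "APM", "target_id": "checkout", "policy_id": "p1",
            "condition_id": "c2", "golden_signal": "latency", "components": ["db"]}
print(suggest_responders(incoming, history, responders_table))  # → ['alice', 'bob']
```

Here `bob` ranks below `alice` even though he likely closed `v2`, because `v2` shares no fields with the incoming violation and so contributes nothing. Dividing every user's score by the same total similarity (a weighted average) would not change the ranking.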

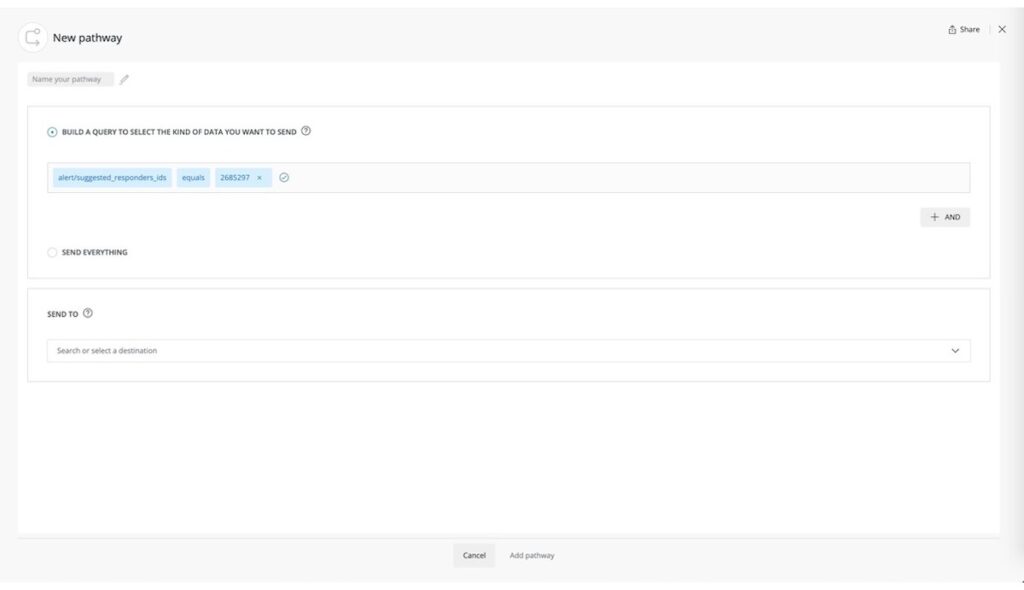

How to use suggested responders to route incident notifications

Now that you know how suggested responders works, you can use it in new ways to further improve your response efficiency and resolve production issues faster. For instance, you can configure pathway logic that routes the alert notification to a specific channel whenever certain users are predicted as suggested responders for an incident. This helps ensure that the right people are notified about the right incidents.
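The routing idea can be sketched as plain decision logic. This is hypothetical: the rule format, channel names, and fallback channel are illustrations only, not New Relic's pathway configuration syntax, and the sketch assumes the list of suggested responders has already been read from the issue notification payload.

```python
# Hypothetical routing rules: (users to watch for, channel to notify).
# These names and this format are illustrations, not New Relic configuration.
ROUTES = [
    ({"alice", "bob"}, "#payments-oncall"),
    ({"carol"}, "#infra-oncall"),
]
DEFAULT_CHANNEL = "#incidents"  # hypothetical fallback


def route_notification(suggested_responders, routes=ROUTES, default=DEFAULT_CHANNEL):
    """Return every channel whose watched users include a suggested responder."""
    suggested = set(suggested_responders)
    channels = [channel for users, channel in routes if users & suggested]
    return channels or [default]


print(route_notification(["bob", "dave"]))  # → ['#payments-oncall']
print(route_notification(["dave"]))         # → ['#incidents']
```

Returning every matching channel, rather than only the first, means an incident that involves people from two teams notifies both.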

Get started now and resolve production issues faster

To get up and running with suggested responders, all you need to do is opt in to Incident Intelligence and start ingesting your violations. The model trains itself and starts making suggestions about 24 hours after you opt in. The more you engage, the more recommendations you'll get. For instance, interacting with New Relic One entities or defining more alert conditions and policies in your account increases the model's recommendations.

If you’re not a New Relic Applied Intelligence customer and would like to experience its simplicity yourself, sign up for a forever free account and check out New Relic Applied Intelligence.

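The views expressed in this blog are those of the author and do not necessarily reflect the official views of New Relic K.K. This blog may also contain links to external sites; New Relic makes no guarantees regarding the content of those linked sites.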