Observability in serverless environments can be challenging, but AWS Distro for OpenTelemetry (ADOT) simplifies this by providing a standardized, vendor-neutral way to collect and export telemetry. ADOT allows you to leverage industry-standard OpenTelemetry APIs to instrument your applications without being locked into a single observability backend.

The challenge with containerized Lambdas is that they do not support standard Lambda Layers. Since ADOT is typically deployed as a layer for Lambda functions, we need an alternative way to get the telemetry agent into our execution environment. This post walks through a practical workaround by embedding ADOT directly into your container image using a multi-stage Docker build, followed by exporting that telemetry to New Relic.

Why Container Images?

AWS Lambda supports two deployment types: ZIP packages (with Lambda Layers) and container images. Container images offer significant advantages:

- Larger deployment packages: up to 10GB for images vs. 250MB (unzipped) for ZIP packages

- Consistent tooling with your existing CI/CD pipelines

- Full control over runtime dependencies and versions

- Pre-built dependencies that reduce cold start times for complex applications

The Problem

Container images can't use Lambda Layers directly, because layers are a ZIP-deployment feature. When you deploy a ZIP package, Lambda extracts each layer's contents to /opt in the execution environment. Container images bypass this mechanism entirely: they are self-contained filesystems where you control everything, so there is no hook for Lambda to inject layer content. For ADOT, this means we need another way to get the layer into the image.

The Solution: Multi-Stage Docker Build

The key insight is that Lambda Layers are simply ZIP archives extracted to /opt at runtime. We can replicate this by downloading and extracting the ADOT layer content during the Docker build process.

Project Structure

```
├── Dockerfile
├── template.yaml
└── src/
    ├── index.js
    └── package.json
```

Step 1: Create the Dockerfile

The multi-stage build downloads the ADOT layer in a lightweight Alpine container, then copies the contents to your Lambda image:

```dockerfile
# Stage 1: Builder - download and extract the ADOT Lambda layer
# Alpine keeps this stage small and fast to pull
FROM alpine AS builder

# Install the tools needed to fetch and extract the layer
RUN apk add --no-cache curl unzip

# ADOT layer URL - update this if you use a different language runtime
ARG ADOT_LAYER_URL="https://github.com/aws-observability/aws-otel-js-instrumentation/releases/latest/download/layer.zip"

# Download and extract to /opt (the same location Lambda uses for layers)
# -f makes curl fail the build on HTTP errors instead of saving an error page
RUN curl -fLo /tmp/layer.zip "${ADOT_LAYER_URL}" && \
    unzip /tmp/layer.zip -d /opt && \
    rm /tmp/layer.zip

# Stage 2: Final Lambda image
FROM public.ecr.aws/lambda/nodejs:22

# Copy the ADOT layer contents from the builder stage
COPY --from=builder /opt /opt

# Make the ADOT wrapper script executable
RUN chmod +x /opt/otel-instrument

# Install dependencies, then copy the function code
# (src/*.js also picks up metrics.js if you add it in Step 3)
COPY src/package.json ${LAMBDA_TASK_ROOT}/
RUN npm install
COPY src/*.js ${LAMBDA_TASK_ROOT}/

CMD [ "index.handler" ]
```

Note: The chmod +x command is essential. The wrapper script must be executable, or Lambda cannot launch it through AWS_LAMBDA_EXEC_WRAPPER and ADOT never gets a chance to instrument your function.
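If the wrapper lacks the execute bit, Lambda cannot start it, so it may be worth checking the permissions inside the built image. The following is a minimal sketch, not part of ADOT or the Lambda runtime: `hasExecuteBit` and `describeMode` are hypothetical helpers that take a numeric POSIX file mode, such as the `mode` field returned by `fs.statSync('/opt/otel-instrument')` when run inside the container.

```javascript
// Hypothetical helpers (not part of ADOT or Lambda) for checking that a
// file such as /opt/otel-instrument is executable.

// True if any execute bit (owner, group, or other) is set.
// File-type bits (e.g. 0o100000 for a regular file) fall outside the mask.
function hasExecuteBit(mode) {
  return (mode & 0o111) !== 0;
}

// Render the permission bits the way `ls -l` does, e.g. "rwxr-xr-x".
function describeMode(mode) {
  const letters = ['r', 'w', 'x'];
  let out = '';
  for (let shift = 6; shift >= 0; shift -= 3) {
    const triplet = (mode >> shift) & 0o7;
    out += letters.map((ch, i) => ((triplet & (4 >> i)) ? ch : '-')).join('');
  }
  return out;
}

// Inside the container you might run, for example:
//   const { mode } = require('node:fs').statSync('/opt/otel-instrument');
//   console.log(describeMode(mode), hasExecuteBit(mode));
```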

Step 2: Configure SAM Template

The SAM template configures the Lambda function and sets the environment variables needed for ADOT. By default, ADOT exports to AWS X-Ray, but we'll configure New Relic as our destination.

```yaml
AWSTemplateFormatVersion: '2010-09-09'
Transform: AWS::Serverless-2016-10-31
Description: Container Lambda with ADOT and New Relic

Parameters:
  NewRelicLicenseKey:
    Type: String
    Description: "New Relic Ingest License Key"
    NoEcho: true

Globals:
  Function:
    Timeout: 30
    MemorySize: 256
    LoggingConfig:
      LogFormat: JSON
    Environment:
      Variables:
        AWS_LAMBDA_EXEC_WRAPPER: /opt/otel-instrument
        # Disable AWS Application Signals to prevent interference
        OTEL_AWS_APPLICATION_SIGNALS_ENABLED: 'false'
        # Enable specific instrumentations (Optional)
        OTEL_NODE_ENABLED_INSTRUMENTATIONS: 'aws-sdk,aws-lambda,http,pino'
        OTEL_SERVICE_NAME: container-lambda-hello
        OTEL_PROPAGATORS: 'tracecontext,baggage'
        # Exporters
        OTEL_TRACES_EXPORTER: otlp
        OTEL_METRICS_EXPORTER: otlp
        OTEL_LOGS_EXPORTER: otlp
        # OTLP Endpoints (signal-specific)
        OTEL_EXPORTER_OTLP_PROTOCOL: http/protobuf
        OTEL_EXPORTER_OTLP_TRACES_ENDPOINT: https://otlp.nr-data.net:4318/v1/traces
        OTEL_EXPORTER_OTLP_METRICS_ENDPOINT: https://otlp.nr-data.net:4318/v1/metrics
        OTEL_EXPORTER_OTLP_LOGS_ENDPOINT: https://otlp.nr-data.net:4318/v1/logs
        OTEL_EXPORTER_OTLP_HEADERS: !Sub "api-key=${NewRelicLicenseKey}"

Resources:
  MyFunction:
    Type: AWS::Serverless::Function
    Properties:
      PackageType: Image
      Architectures:
        - x86_64
      Events:
        Api:
          Type: HttpApi
    Metadata:
      DockerTag: latest
      DockerContext: .
      Dockerfile: Dockerfile

Outputs:
  ApiEndpoint:
    Description: "API Gateway endpoint URL"
    Value: !Sub "https://${ServerlessHttpApi}.execute-api.${AWS::Region}.amazonaws.com/"
```

Key environment variables for New Relic:

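- OTEL_EXPORTER_OTLP_TRACES_ENDPOINT, OTEL_EXPORTER_OTLP_METRICS_ENDPOINT, OTEL_EXPORTER_OTLP_LOGS_ENDPOINT: Signal-specific New Relic OTLP endpoints for traces, metrics, and logs

- OTEL_EXPORTER_OTLP_HEADERS: Sends your New Relic Ingest License Key as the api-key header (supplied through the NewRelicLicenseKey CloudFormation parameter)

- OTEL_SERVICE_NAME: Identifies your service in New Relic

- OTEL_NODE_ENABLED_INSTRUMENTATIONS: (Optional) Restricts auto-instrumentation to the listed libraries, including pino for structured logging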
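The OpenTelemetry specification defines OTEL_EXPORTER_OTLP_HEADERS as a comma-separated list of key=value pairs with percent-encoded values, which is why the template builds the string `api-key=<license key>`. The sketch below is a hypothetical pre-flight check, not ADOT's own parser: `parseOtlpHeaders` and `checkNewRelicKey` split the variable the same way and confirm an `api-key` entry is present before you go looking for missing data in New Relic.

```javascript
// Hypothetical sketch: parse OTEL_EXPORTER_OTLP_HEADERS as the OpenTelemetry
// spec describes it (comma-separated key=value pairs, percent-encoded values).
// This is an illustration, not ADOT's own parser.
function parseOtlpHeaders(raw) {
  const headers = {};
  if (!raw) return headers;
  for (const pair of raw.split(',')) {
    const idx = pair.indexOf('=');
    if (idx <= 0) continue; // skip entries without a key
    const key = pair.slice(0, idx).trim();
    // Split on the first '=' only, so values that contain '=' survive.
    headers[key] = decodeURIComponent(pair.slice(idx + 1).trim());
  }
  return headers;
}

// Returns a masked form of the New Relic key that is safe to log,
// or throws if the api-key header is missing.
function checkNewRelicKey(env) {
  const key = parseOtlpHeaders(env.OTEL_EXPORTER_OTLP_HEADERS)['api-key'];
  if (!key) {
    throw new Error('OTEL_EXPORTER_OTLP_HEADERS has no api-key entry');
  }
  return '*'.repeat(Math.max(key.length - 4, 0)) + key.slice(-4);
}

// Example: console.log(checkNewRelicKey(process.env));
```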

Known Issue: As of writing, the ADOT Node.js layer ignores OTEL_EXPORTER_OTLP_ENDPOINT in favor of signal-specific endpoints like OTEL_EXPORTER_OTLP_TRACES_ENDPOINT. This is why we use separate endpoints for traces, metrics, and logs.

See GitHub Issue #297 for details.
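Because the signal-specific variables are used exactly as given, each one must carry its own `/v1/traces`, `/v1/metrics`, or `/v1/logs` path; the OpenTelemetry specification only appends these paths automatically to the generic OTEL_EXPORTER_OTLP_ENDPOINT. A small helper such as the hypothetical `signalEndpoints` below can derive all three from one base URL, which keeps them consistent if you later change endpoints. It is a convenience sketch, not part of ADOT.

```javascript
// Hypothetical helper: derive the three signal-specific OTLP/HTTP endpoint
// variables from a single base URL, mirroring how the OpenTelemetry spec
// builds them from OTEL_EXPORTER_OTLP_ENDPOINT.
function signalEndpoints(base) {
  const root = base.endsWith('/') ? base : `${base}/`;
  return {
    OTEL_EXPORTER_OTLP_TRACES_ENDPOINT: `${root}v1/traces`,
    OTEL_EXPORTER_OTLP_METRICS_ENDPOINT: `${root}v1/metrics`,
    OTEL_EXPORTER_OTLP_LOGS_ENDPOINT: `${root}v1/logs`,
  };
}

// Print YAML-style lines that can be pasted into the template's Variables.
for (const [name, url] of Object.entries(signalEndpoints('https://otlp.nr-data.net:4318'))) {
  console.log(`${name}: ${url}`);
}
```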

Step 3: Add Custom Instrumentation and Metrics (Optional)

For richer observability, add custom spans, metrics, and structured logging using the OpenTelemetry API and pino. Add the following to src/package.json:

```json
{
  "type": "module",
  "dependencies": {
    "@opentelemetry/api": "^1.9.0",
    "pino": "^9.0.0"
  }
}
```

Create a metrics helper (src/metrics.js):

```javascript
import { metrics } from '@opentelemetry/api';

const meter = metrics.getMeter('my-lambda-metrics');

const workDurationHistogram = meter.createHistogram('work_item_duration', {
  description: 'Duration of work items in milliseconds',
  unit: 'ms',
});

const workItemCounter = meter.createCounter('work_item_count', {
  description: 'Count of work items processed',
});

export const recordWorkMetrics = (id, duration, success = true) => {
  workDurationHistogram.record(duration, { 'work.item.id': id, 'work.success': success });
  workItemCounter.add(1, { 'work.item.id': id, 'work.success': success });
};
```

Then instrument your handler (src/index.js) with pino logging. Pino is one of the libraries covered by OpenTelemetry's auto-instrumentation (enabled above through OTEL_NODE_ENABLED_INSTRUMENTATIONS), which patches it at runtime:

```javascript
import { trace, SpanStatusCode } from '@opentelemetry/api';
import pino from 'pino';
import { recordWorkMetrics } from './metrics.js';

// Pino logger - ADOT auto-instruments this
const logger = pino({ level: 'info' });

const doWork = async (id) => {
  const tracer = trace.getTracer('my-lambda-tracer');
  const span = tracer.startSpan(`doWork-${id}`);
  span.setAttribute('work-item-id', id);

  try {
    logger.info({ itemId: id }, `Starting work item ${id}`);
    const delay = Math.floor(Math.random() * 200) + 100;
    await new Promise(resolve => setTimeout(resolve, delay));
    logger.info({ itemId: id, duration: delay }, `Completed work item ${id}`);
    recordWorkMetrics(id, delay, true);
    span.setStatus({ code: SpanStatusCode.OK });
    return { id, duration: delay };
  } catch (error) {
    logger.error({ itemId: id, error: error.message }, `Work item ${id} failed`);
    recordWorkMetrics(id, 0, false);
    span.recordException(error);
    span.setStatus({ code: SpanStatusCode.ERROR, message: error.message });
    return { id, error: error.message };
  } finally {
    span.end();
  }
};

export const handler = async (event) => {
  const tracer = trace.getTracer('my-lambda-tracer');

  return tracer.startActiveSpan('handler-span', async (span) => {
    logger.info({ event }, 'Event received');

    try {
      logger.info('Initializing processing...');
      const results = await Promise.all([1, 2, 3].map(doWork));
      span.setStatus({ code: SpanStatusCode.OK });
      span.end();
      return { statusCode: 200, body: JSON.stringify({ results }) };
    } catch (error) {
      logger.error({ error: error.message }, 'Handler failed');
      span.recordException(error);
      span.setStatus({ code: SpanStatusCode.ERROR, message: error.message });
      span.end();
      return { statusCode: 502, body: JSON.stringify({ error: 'Bad Gateway' }) };
    }
  });
};
```

Step 4: Build and Deploy

```shell
# Build the container image
sam build

# Deploy to AWS
sam deploy --guided
```

During guided deployment, SAM will:

- Create an ECR repository for your container image

- Build and push the image

- Deploy the Lambda function with API Gateway

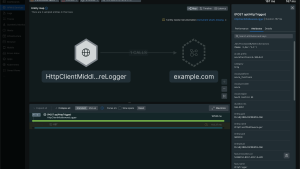
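Once the stack is deployed, the function needs some traffic before there is anything to see in New Relic. One option is a small Node.js script that calls the ApiEndpoint output a few times. `invokeRepeatedly` and the `API_URL` environment variable are hypothetical names for this sketch; it relies on the global `fetch` available in Node.js 18 and later, and accepts a replacement `fetch` so it can be exercised without network access.

```javascript
// Hypothetical helper: call the deployed endpoint `count` times in sequence
// and collect the HTTP status codes. Sequential calls typically reuse one
// warm execution environment after the first (cold) invocation.
async function invokeRepeatedly(url, count, fetchImpl = globalThis.fetch) {
  const statuses = [];
  for (let i = 0; i < count; i++) {
    const res = await fetchImpl(url);
    await res.text(); // read the body so the connection can be released
    statuses.push(res.status);
  }
  return statuses;
}

// Usage, with API_URL set to the ApiEndpoint stack output (hypothetical):
// invokeRepeatedly(process.env.API_URL, 5).then((s) => console.log(s));
```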

Step 5: Verify in New Relic

After invoking your function a few times, navigate to New Relic to view your telemetry:

1. APM & Services: Find your service by the OTEL_SERVICE_NAME you configured

2. Distributed Tracing: View the complete trace waterfall, including custom spans

3. Errors & Custom Instrumentation: Any exceptions recorded with span.recordException() appear in the Errors inbox. Custom spans created with the OpenTelemetry API (like our doWork-{id} spans) are visible in traces with their attributes.
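Because the template sets OTEL_PROPAGATORS to include tracecontext, one way to locate a specific request in Distributed Tracing is to send it with a W3C `traceparent` header and then search for that trace ID. Whether the Lambda span actually joins the incoming trace depends on how ADOT's aws-lambda instrumentation extracts context (it may prefer the X-Ray trace header), so treat this as an experiment rather than a guarantee. `makeTraceparent` is a hypothetical helper that follows the W3C Trace Context header format.

```javascript
// Hypothetical helper: build a W3C Trace Context `traceparent` header
// (version 00, 16-byte trace ID, 8-byte parent ID, sampled flag 01).
function randomHex(byteCount) {
  const bytes = new Uint8Array(byteCount);
  if (globalThis.crypto?.getRandomValues) {
    globalThis.crypto.getRandomValues(bytes);
  } else {
    // Fallback for older runtimes; fine for test IDs, not for security.
    for (let i = 0; i < byteCount; i++) bytes[i] = Math.floor(Math.random() * 256);
  }
  return Array.from(bytes, (b) => b.toString(16).padStart(2, '0')).join('');
}

function makeTraceparent() {
  // The spec forbids all-zero trace and parent IDs, so retry in that case.
  let traceId;
  do { traceId = randomHex(16); } while (/^0+$/.test(traceId));
  let parentId;
  do { parentId = randomHex(8); } while (/^0+$/.test(parentId));
  return { traceId, header: `00-${traceId}-${parentId}-01` };
}

// Usage: send the header, then search New Relic for traceId.
// const { traceId, header } = makeTraceparent();
// await fetch(url, { headers: { traceparent: header } });
```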

Considerations

ADOT introduces some operational overhead to your Lambda function that is worth noting.

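- Cold Starts: ADOT is a repackaged version of the OpenTelemetry Lambda Layer, and like it, it loads and initializes the OpenTelemetry SDK and its instrumentations when the execution environment starts, which adds some overhead to cold starts.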

- Memory Limits: We recommend starting with 256MB and adjusting based on your workload. Monitor memory usage in New Relic's Lambda monitoring to right-size your allocation.

Note: These memory recommendations are based on the Node.js ADOT layer. Memory requirements vary between supported languages. Python and Java runtimes may have different overhead profiles. Always benchmark your specific language and workload.
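To benchmark your own workload, it helps to separate cold and warm invocations. Lambda's REPORT log line already includes an Init Duration on cold starts; the sketch below adds a per-invocation log with a cold-start flag and the process's resident memory, which you can compare against MemorySize. `withColdStartInfo` is a hypothetical wrapper, not an ADOT or Lambda API, and `rssMb` only approximates what Lambda reports as max memory used.

```javascript
// Hypothetical wrapper: marks the first invocation in each execution
// environment as a cold start and reports duration and resident memory.
let isColdStart = true; // module scope: true only until the first invocation

function withColdStartInfo(handler, report = (info) => console.log(JSON.stringify(info))) {
  return async (event, context) => {
    const coldStart = isColdStart;
    isColdStart = false;
    const started = Date.now();
    try {
      return await handler(event, context);
    } finally {
      // Report even if the handler throws.
      const rssMb = Math.round(process.memoryUsage().rss / (1024 * 1024));
      report({ coldStart, durationMs: Date.now() - started, rssMb });
    }
  };
}

// Usage: export const handler = withColdStartInfo(async (event) => { /* ... */ });
```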

Key Takeaways

- Container-based Lambdas can use ADOT via multi-stage Docker builds

- The ADOT layer content is extracted to /opt just like native Lambda Layers

- New Relic's OTLP endpoint provides seamless integration with ADOT

- Custom instrumentation enhances trace detail beyond auto-instrumentation

Conclusion

Container images and OpenTelemetry are a powerful combination for serverless observability. By embedding ADOT directly in your container build and exporting to New Relic, you get the best of both worlds: the flexibility of container deployments and deep, actionable telemetry.

Ready to get started? Check out these resources:

The views expressed in this blog are those of the author and do not necessarily reflect the official views of New Relic K.K. This blog may contain links to external sites; New Relic makes no guarantees regarding the content of those linked sites.