What is software risk analysis?

Software risk analysis identifies, assesses, and prioritizes potential risks associated with a software project. The goal is to anticipate and mitigate potential problems that may arise during the development, deployment, or maintenance of software. Risk analysis is an integral part of the overall risk management process in software development and is aimed at improving the chances of successful project completion.

Key steps in software risk analysis include:

Identification of risks:

Identifying potential risks involves brainstorming and analyzing various aspects of the software project. This may include technical, operational, organizational, and external factors that could impact the project.

Risk assessment:

Once risks are identified, they need to be assessed in terms of their probability of occurrence and potential impact on the project. This assessment helps prioritize risks based on their severity and likelihood.

Risk mitigation planning:

After assessing the risks, strategies for mitigating or managing those risks are developed. This involves creating plans to reduce the probability of the risk occurring or minimizing its impact if it does occur.

Monitoring and control:

Throughout the software development lifecycle, risks are continuously monitored to track changes in their status. Regular updates and adjustments to risk management plans may be necessary based on the evolving nature of the project.

The need for software risk analysis

Performing software risk analysis is crucial because it helps identify, assess, and manage potential challenges that may impact the success of a software project. Systematically analyzing technical, operational, organizational, and external factors helps development teams proactively anticipate and address issues before they escalate.

This process aids in making informed decisions, allocating resources effectively, and establishing mitigation strategies to handle unforeseen circumstances. This blog post will focus on building a risk matrix to help software teams identify potential threats early, enabling the team to take preventive actions and, ultimately, increasing the likelihood of delivering a successful and high-quality software product.

New Relic's risk matrix

One of the most important artifacts to come out of our site reliability work at New Relic is something we call the risk matrix. Essentially, a risk matrix is a list of things teams identify that could go wrong with their services and components. Teams then categorize those risks according to their likelihood and impact.

In the STELLA report, the research group SNAFUcatchers determined that team members who handle the same parts of a system often have different mental models of what the system looks like. How each member of a team thinks their system works, as opposed to how it actually works, can quickly get out of sync as members commit various changes to the system’s code base. All too often, this is a normal state of affairs.


Aligning on the inherent risks in our services and components can help sync our teams on the actual shape of our ecosystem. Achieving this alignment also makes it easier to prioritize reliability work—and actually get it done. Even more important, that clarity translates into business value to the rest of the organization.

So, creating and reviewing risk matrices helps our teams:

- Synchronize our drifting mental models

- Onboard new team members

- Recognize and address hotspots or unexpected gotchas in our systems

- See new risks when things change

- Prioritize reliability work to avoid future disasters

How to create a risk matrix for software risk analysis

New Relic publishes an internal “risk matrix how-to guide” designed to help teams create and maintain their own matrices. The following four steps for creating a risk matrix were adapted from that guide.

Step 1: Modeling the risk matrix

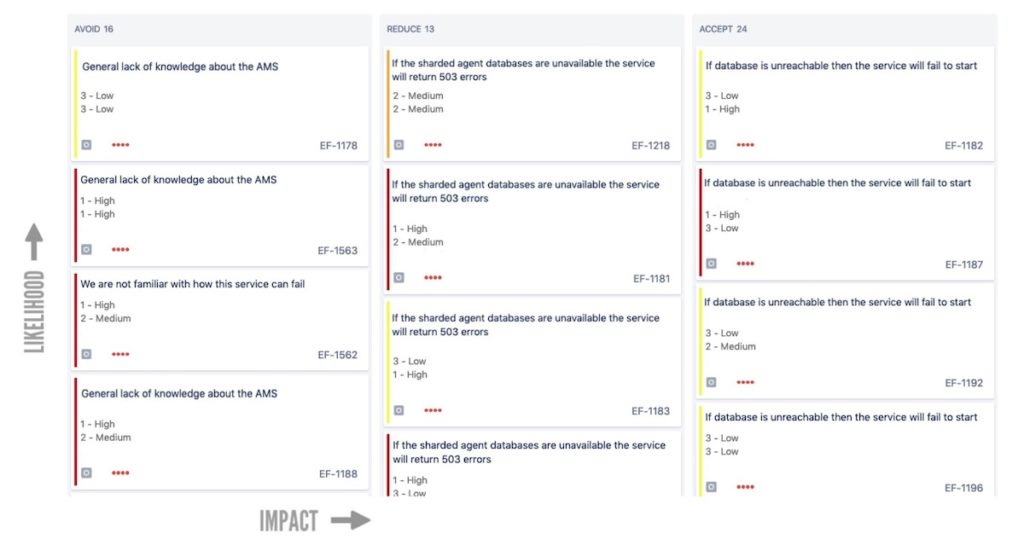

We organize our risk matrices using the Threat Assessment and Remediation Analysis (TARA) methodology, originally designed to help engineering teams “identify, prioritize, and respond to cyber threats through the application of countermeasures that reduce susceptibility to cyber attack.” More colloquially, TARA is short for “Transfer, Avoid, Reduce, and Accept.” While we don’t use the Transfer category at New Relic, our risk matrices are designed so teams identify how they will take steps to avoid, reduce, or accept a risk.

Avoid: Risks classified here are those a team can fix completely. For example, if a team uses libraries or other dependencies in its service, the team may need to schedule regular maintenance windows during which it can upgrade those libraries or dependencies.

Reduce: These are risks a team cannot completely avoid, but the team can reduce the impact or likelihood of such risks with additional engineering effort. For example, network outages are a risk for all teams, but we can reduce that risk by working to ensure our services gracefully recover after an outage.

Accept: In some cases a team may accept a risk; these are risks a team knows about, but there is little it can do to prevent them. For example, a team has to worry about network downtime, but if its system recovers gracefully after a network outage, the team can categorize that risk as one it accepts. (But the risk of the system not being able to recover gracefully after an outage would belong in the “Reduce” category.)
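As a rough illustration of the three categories, the sketch below models risks and groups them into Avoid, Reduce, and Accept columns. The `Risk` class, its `summary` field, and `group_by_category` are hypothetical names chosen for this example; they are not part of New Relic's tooling.

```python
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    """The three TARA categories used in this post (Transfer is left out)."""
    AVOID = "Avoid"
    REDUCE = "Reduce"
    ACCEPT = "Accept"


@dataclass(frozen=True)
class Risk:
    summary: str        # hypothetical field: one-line description of the risk
    category: Category


def group_by_category(risks):
    """Return a dict mapping every Category to the list of risks filed under it."""
    columns = {category: [] for category in Category}
    for risk in risks:
        columns[risk.category].append(risk)
    return columns


# Example risks paraphrased from the category descriptions above.
risks = [
    Risk("Service depends on libraries that need regular upgrades", Category.AVOID),
    Risk("Service does not recover gracefully after a network outage", Category.REDUCE),
    Risk("Network outage (service already recovers gracefully)", Category.ACCEPT),
]
columns = group_by_category(risks)
for category, items in columns.items():
    print(category.value, [r.summary for r in items])
```

Keeping the category as an enum rather than a free-form string makes it harder for a risk to end up in a column that does not exist.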

Step 2: Building the risk matrix

There are many ways to build a risk matrix. At New Relic, we use a kanban board in Jira. The board has three columns for our Avoid, Reduce, and Accept categories. We categorize risks along two axes, Impact and Likelihood, and each axis has three levels: High, Medium, and Low (more on these below).

To build an effective risk matrix, teams need to concentrate on their systems’ capabilities and what their customers (internal or external) expect from those capabilities. Asking what can degrade or interrupt the delivery of those capabilities helps teams focus on the risks that matter instead of trying to “boil the ocean” by addressing every possible risk.

If a team has fewer than 10 risks, it might want to think more deeply about its system. Conversely, if it has identified 50 or more risks, it might need to reduce scope and focus more strictly on risks that threaten its ability to deliver customer-facing capabilities. In most cases, we advise teams to toss aside risks that are too general.
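The rule of thumb above can be written as a small check. This is only a sketch: the function name `scope_hint` and the wording of the returned hints are made up for illustration, while the thresholds (fewer than 10, 50 or more) come from the guidance above.

```python
def scope_hint(risk_count: int) -> str:
    """Return a hint about whether a risk matrix looks too thin or too broad."""
    if risk_count < 0:
        raise ValueError("risk_count must not be negative")
    if risk_count < 10:
        return "think more deeply about the system"
    if risk_count >= 50:
        return "reduce scope to customer-facing capabilities"
    return "scope looks reasonable"


for count in (4, 23, 61):
    print(count, "->", scope_hint(count))
```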

Finally, we ask teams to identify risks inherent in their upstream and downstream dependencies, and in the links among their services. A team should also categorize these risks and add them to the kanban board.

When a team is ready to build out its board/risk matrix, we ask the team to schedule at least an hour to do so—and the team must have its engineering manager and product manager present for the exercise. The questions in the table below are designed to guide these discussions:

| Risk area | Questions |
|---|---|
| Incidents | ● How many follow-up incident tickets do you have in your backlog? ● What services have had the most incidents and why? ● Do services need to be restarted after dependent services are restored? ● Is there commonality between team incidents? |
| Process | ● Does the team have any error-prone manual toil? ● Can the team deploy daily to both staging and production? ● What is the state of the team’s runbooks and documentation? |
| Code | ● Does the team own any legacy code, and, if so, is it understood? ● How many inherited services/systems does the team own and are they understood? ● How many languages are represented in the codebase? |
| Testing | ● Is testing coverage comprehensive enough? ● Are regression tests automated and kept up to date? ● What is the number of false positives? ● Are failure modes meaningful and actionable? |
| Dependencies | ● What are the upstream dependencies and are they well supported? ● Are upcoming changes API-compatible, or are there End-of-Life plans for any of the dependencies? ● What are the downstream dependencies? ● Are libraries up to date and supported long-term? ● Is there reliance on third-party tooling? Is that tooling still supported? |
| Unexpected user interactions | ● Are requests rate limited, easily configurable, documented, and appropriate? ● Are incoming requests checked for accuracy to make sure they are well-formed to prevent abuse? ● Is there monitoring and alerting on system throughput? ● Are requests authenticated? |
| Capacity and scaling | ● Have systems been designed with capacity and scaling in mind? ● Is alerting set up to sound an alarm when throughput is consistently at 75% of anticipated capacity? ● Does the team participate in quarterly capacity-planning exercises? |
| Monitoring and alerting | ● What is the ratio of false positives to real alerts? ● How often does the team learn about incidents from support or end users instead of being alerted by pages? ● Are the same monitoring alerts set up for staging and production? ● Has the team recently verified that its monitoring alerts are still active? ● Is there enough visibility into the system’s failure modes and are there alerts for those modes? |
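One of the capacity questions in the table asks whether an alarm sounds when throughput is consistently at 75% of anticipated capacity. The sketch below shows one possible reading of "consistently": every one of the most recent samples is at or above the threshold. The function name, the `window` parameter, and the sample values are assumptions for illustration and do not describe any particular alerting product.

```python
def sustained_high_throughput(samples, capacity, threshold=0.75, window=5):
    """Return True if the last `window` samples are all >= threshold * capacity.

    `samples` is a list of throughput readings, oldest first, in the same
    unit as `capacity`. With fewer than `window` readings, return False.
    """
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    if len(samples) < window:
        return False
    limit = threshold * capacity
    return all(sample >= limit for sample in samples[-window:])


# Hypothetical readings against an anticipated capacity of 1000 requests/s.
readings = [700, 760, 780, 800, 790, 770]
print(sustained_high_throughput(readings, capacity=1000))
```

Requiring a full window of high readings avoids alarming on a single spike, at the cost of reacting a little later.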

We want teams to generate real work from these risks; the risk-reduction workload needs to be manageable, so that a team actually stands a chance of completing it.

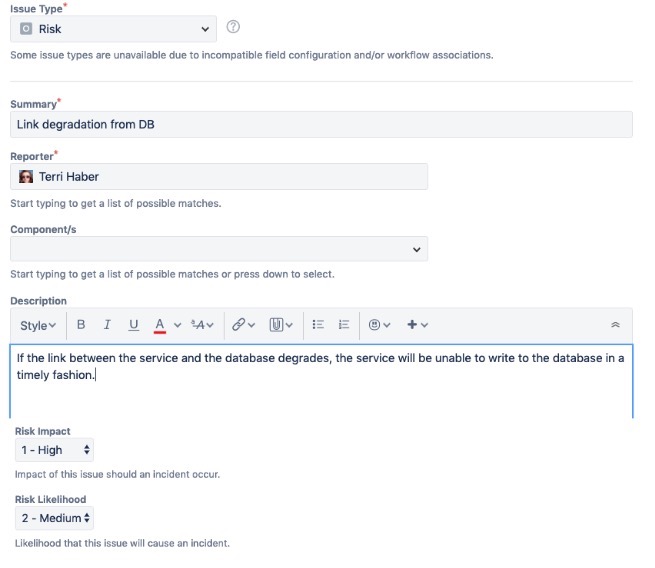

For each risk, teams create a “Risk”-type Jira ticket, and assign it an Impact and Likelihood rating, each on a scale from 1 (high) to 3 (low).

Let’s take a closer look at how we define Likelihood and Impact.

Defining risk likelihood

To avoid subjective assessments of likelihood, we ask teams to write concrete definitions of low, medium, and high likelihood that suit the team, and then to apply those definitions consistently.

For example:

- High likelihood: The event that created the risk happened in the last six months.

- Medium likelihood: The event that created the risk happened in the last year.

- Low likelihood: A particular event that could cause a risk hasn’t happened yet, but the team predicts the event could happen.
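The example definitions above can be turned into a small helper so that every team member rates likelihood the same way. This is a sketch under two assumptions that the definitions do not spell out: "six months" is approximated as 182 days, and an event last seen more than a year ago is rated Low. The function name `classify_likelihood` is hypothetical.

```python
from datetime import date, timedelta
from typing import Optional

SIX_MONTHS = timedelta(days=182)  # assumption: approximate length of six months
ONE_YEAR = timedelta(days=365)


def classify_likelihood(last_occurrence: Optional[date], today: date) -> str:
    """Rate likelihood from the date the triggering event last happened.

    `last_occurrence` is None when the event has not happened yet.
    """
    if last_occurrence is None:
        return "Low"
    age = today - last_occurrence
    if age <= SIX_MONTHS:
        return "High"
    if age <= ONE_YEAR:
        return "Medium"
    # Assumption: the example definitions do not cover older events.
    return "Low"


today = date(2024, 7, 1)
print(classify_likelihood(date(2024, 5, 1), today))
print(classify_likelihood(date(2023, 10, 1), today))
print(classify_likelihood(None, today))
```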

Defining risk impact

Defining impact can also be slippery and subjective. In this case, we refer teams to New Relic’s incident severity levels: If a risk were to happen, what would be the severity of the incident it would cause? Teams also factor in potential incident duration, for example by assessing whether the service can recover when a dependency fails and comes back into service, or whether the team's monitoring and alerting is comprehensive enough.

This table shows how we might apply incident severity to impact:

[table id=23 /]

Step 3: Working through the risks

After a team has built a kanban board/risk matrix, it works with its product and engineering managers to prioritize the work in the Avoid and Reduce columns. Any risks marked as high likelihood/high impact should get the highest prioritization. We expect that all teams will have one sprint (work a team can do in one to three weeks that stands on its own, delivers durable business value, and could be shipped to its intended audience when it’s complete) per quarter to reduce the risk count in the Avoid column. During the sprint, the team should include a gameday to test assumptions about the risks it’s resolving and to make sure the team's mental models are aligned with the reality of its systems.
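A minimal sketch of this prioritization follows, using the 1 (high) to 3 (low) ratings from the Risk tickets. The ordering rule is an assumption made for the example: tickets are sorted by the sum of their two ratings, with ties broken in favor of higher impact, so high likelihood/high impact risks always come first. The `RiskTicket` class and the ticket keys are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskTicket:
    key: str          # hypothetical ticket key
    column: str       # "Avoid", "Reduce", or "Accept"
    impact: int       # 1 (high) to 3 (low)
    likelihood: int   # 1 (high) to 3 (low)


def prioritize(tickets):
    """Order Avoid and Reduce tickets so the most urgent risks come first."""
    actionable = [t for t in tickets if t.column in ("Avoid", "Reduce")]
    # Lower numbers mean higher urgency, so an ascending sort is what we want.
    return sorted(actionable, key=lambda t: (t.impact + t.likelihood, t.impact))


tickets = [
    RiskTicket("RISK-1", "Avoid", impact=2, likelihood=1),
    RiskTicket("RISK-2", "Reduce", impact=1, likelihood=1),
    RiskTicket("RISK-3", "Accept", impact=1, likelihood=1),
    RiskTicket("RISK-4", "Avoid", impact=1, likelihood=2),
    RiskTicket("RISK-5", "Reduce", impact=3, likelihood=3),
]
print([t.key for t in prioritize(tickets)])
# prints ['RISK-2', 'RISK-4', 'RISK-1', 'RISK-5']
```

Accepted risks are filtered out because the prioritization described here covers only the Avoid and Reduce columns.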

It’s important to note that this scheduled work may not be able to completely eliminate all of the most urgent risks, but the work should dial down the risks’ probability or impact.

Step 4: Reviewing the risk matrix

New Relic expects each team to review its risk matrix at least every eight months, or whenever it onboards a new team member or releases a new service or product.

If a team has existing risks, and it’s not sure if those risks are still relevant, the team should work through the following rules:

- Is the risk too vague? If yes, delete it.

- Does this risk actually belong to a different team? If yes, transfer it.

- Is this risk a security issue rather than an availability issue? If yes, transfer it to the Security team.

- Does the team have no control of this risk, or has it done as much work as possible to mitigate it? If yes, review the risk’s impact and likelihood, and put it in the Accept column.

- If the team can’t fix the risk, does it have a plan to monitor the risk so the team doesn’t make it worse? If yes, review the risk’s impact and likelihood, and put it in the Reduce column.

- Can the team schedule work to mitigate this risk? If yes, review the risk’s impact and likelihood, and put it in the Avoid column.
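The review rules above read naturally as an ordered series of checks, where the first "yes" decides the outcome. The sketch below encodes them that way. It assumes the Accept rule applies when there is nothing more the team can do about a risk, in line with the Accept category described in Step 1. The class and field names are hypothetical, and the final "needs discussion" fallback is an addition for the case where no rule matches.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewAnswers:
    """Yes/no answers to the review questions for one existing risk."""
    too_vague: bool = False
    belongs_to_other_team: bool = False
    is_security_issue: bool = False
    nothing_more_to_do: bool = False   # no control, or mitigation exhausted
    has_monitoring_plan: bool = False  # cannot fix, but can watch it
    can_schedule_work: bool = False


def review_outcome(answers: ReviewAnswers) -> str:
    """Apply the review rules in order and return the first matching outcome."""
    if answers.too_vague:
        return "delete"
    if answers.belongs_to_other_team:
        return "transfer to owning team"
    if answers.is_security_issue:
        return "transfer to Security team"
    if answers.nothing_more_to_do:
        return "Accept"
    if answers.has_monitoring_plan:
        return "Reduce"
    if answers.can_schedule_work:
        return "Avoid"
    return "needs discussion"  # assumption: not covered by the rules above


print(review_outcome(ReviewAnswers(can_schedule_work=True)))
print(review_outcome(ReviewAnswers(too_vague=True, can_schedule_work=True)))
```

For the three column outcomes, the team still re-rates the risk's impact and likelihood before moving the ticket.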

Reviewing risk matrices on a regular basis keeps teams honest as they work to maintain the reliability of their systems.

Best practices for software risk analysis

The following best practices will help software development teams enhance their ability to identify, assess, and manage risks, improving the chances of successful project outcomes.

Early identification

Initiate the risk analysis process early in the project to detect potential issues before they become critical, facilitating more effective planning and mitigation strategies.

Comprehensive approach

Take a comprehensive approach by considering a broad range of risks, including technical, operational, organizational, and external factors, ensuring a thorough understanding of potential challenges.

Stakeholder involvement

Involve key stakeholders, including developers, project managers, and end-users, to gain diverse perspectives and create a more comprehensive and accurate risk assessment.

Regular review and updates

Continuously review and update the risk analysis throughout the project lifecycle to adapt to evolving risks and ensure that mitigation strategies remain effective.

Risk prioritization

Prioritize risks based on severity and likelihood of occurrence, enabling the team to focus efforts on addressing the most critical issues that could significantly impact the project's success.

Clear documentation

Document identified risks, their assessments, and mitigation strategies clearly to serve as a reference for the team and facilitate communication with stakeholders.

Contingency planning

Develop contingency plans for high-priority risks, providing predefined responses for potential issues and allowing for a faster and more effective reaction when risks materialize.

Reliability is a feature

The risk matrix raises the visibility of risks and exposes them to the entire organization so that we can make better decisions as we prioritize work. We’ve tried to make this process as simple as possible. Ideally, teams should see fewer items in the Reduce and Avoid columns every quarter.

If that improvement isn’t happening, the team may be struggling to prioritize its reliability work, and that should lead to a conversation with the team’s Site Reliability Champion. Prioritizing work that reduces documented risk is how we recognize that reliability delivers business value.
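A team could track this trend with something as simple as the sketch below, which flags quarters where the combined number of open Avoid and Reduce risks did not drop. The function name and the sample counts are invented for illustration.

```python
def quarters_without_improvement(open_counts):
    """Return indexes of quarters whose count did not drop versus the prior one.

    `open_counts` lists the number of open Avoid + Reduce risks per quarter,
    oldest first. Index 0 has no prior quarter and is never flagged.
    """
    return [
        i
        for i in range(1, len(open_counts))
        if open_counts[i] >= open_counts[i - 1]
    ]


# Hypothetical counts for five consecutive quarters.
counts = [12, 9, 9, 7, 8]
print(quarters_without_improvement(counts))
# prints [2, 4]
```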
