Microservices architectures enhance the ability for modern software teams to deliver applications at scale, but as an application’s footprint grows, the challenge is to maintain a network between services. Service meshes provide service discovery, load balancing, and authentication capabilities for microservices, but just like the architectures they support, service meshes also present plenty of new management concerns.

This is where Istio comes into play. Developed through a collaboration among Google, IBM, and Lyft, Istio is an open-source service mesh that lets you connect, monitor, and secure microservices deployed on-premises, in the cloud, or with orchestration platforms like Kubernetes and Mesos. Announced less than two years ago, Istio has a growing user base that includes giants like eBay and Auto Trader UK.

Google crashed KubeCon in 2018 when the company announced a public beta of Istio on Google Cloud. VMware, F5 Networks, and Twistlock have introduced either managed Istio services or full support for the service mesh platform. “When you're moving towards modern apps that use APIs with microservices, it becomes natural that you will at some point need a service mesh layer. Istio is such a hot project: it’s become a natural solution,” explains Adilson Somensari, a Senior Solutions Architect at New Relic. “I believe eventually Istio will become the de facto standard for managing service meshes running on Kubernetes.”

Kubernetes isn’t the only way to deploy microservices, and Istio isn’t the only service mesh, but current thinking from tech leaders like Google and IBM seems to suggest that the two are becoming increasingly inseparable.

To understand why that’s happening, we need to look a little deeper into what a service mesh is and what it does, and how Istio extends the service mesh model.

What is a service mesh?

A microservices architecture splits software functionality into multiple services that are independently deployable, highly maintainable and testable, and organized around specific business capabilities. These services communicate with each other through simple, universally accessible APIs. On a technical level, microservices enable continuous delivery and deployment of large, complex applications. On a higher business level, microservices help deliver speed, scalability, and flexibility to companies trying to achieve agility in rapidly evolving markets.

But, as noted earlier, a microservices architecture can get complex, quickly. How do you manage that complexity?

A service mesh is an infrastructure layer that allows your service instances to communicate with one another. The service mesh also lets you configure how your service instances perform critical actions such as service discovery, load balancing, data encryption, and authentication and authorization.

Because the service mesh provides a layer of abstraction—the application code typically has no knowledge of the work the service mesh performs—you get serious flexibility; you can move a microservice to a different server or cluster, for example, without having to rewrite your application. In effect, the service mesh automates the most boring and repetitive work of managing microservices.
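To make that abstraction concrete, here is a minimal sketch of name-based service discovery. Everything in it (the `ServiceRegistry` class, the `call_service` helper, the service name, and the addresses) is hypothetical and invented for illustration; a real mesh does this work inside its proxies rather than in your application.

```python
from dataclasses import dataclass, field


@dataclass
class ServiceRegistry:
    """Hypothetical in-memory registry: logical service name -> addresses."""
    endpoints: dict = field(default_factory=dict)

    def register(self, name: str, address: str) -> None:
        self.endpoints.setdefault(name, []).append(address)

    def deregister(self, name: str, address: str) -> None:
        self.endpoints[name].remove(address)

    def resolve(self, name: str) -> list:
        # Return a copy so callers cannot mutate the registry by accident.
        return list(self.endpoints.get(name, []))


def call_service(registry: ServiceRegistry, name: str) -> str:
    """Application-side code: it only ever knows the logical name."""
    addresses = registry.resolve(name)
    if not addresses:
        raise LookupError(f"no instances registered for {name!r}")
    return f"request sent to {name} at {addresses[0]}"


registry = ServiceRegistry()
registry.register("cart", "10.0.0.1:8080")
before_move = call_service(registry, "cart")

# Move the service to another host: only the registry changes,
# while call_service() stays exactly the same.
registry.deregister("cart", "10.0.0.1:8080")
registry.register("cart", "10.0.1.7:8080")
after_move = call_service(registry, "cart")
```

The point of the sketch is the last few lines: the calling code is untouched when the service moves, which is the flexibility the abstraction layer buys you.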

How does a service mesh work?

The architecture of a service mesh is split into two distinct pieces: the data plane and the control plane.

The data plane is essentially a proxy service that handles communications between services. In Istio, the data plane is deployed as a “sidecar proxy,” a supporting service added to the primary application; for example, in a Kubernetes infrastructure, proxies are deployed in the same pod as an application with a shared network namespace.

Data planes also provide observability into your microservices, particularly in the form of logs and metric aggregation.

NGINX, HAProxy, and Envoy all provide data-plane functionality. Envoy, in particular, has become a wildly popular proxy because it’s intended specifically for use in microservice architectures, provides dynamic APIs for configuration, and has enhanced observability.

The control plane, meanwhile, oversees policies and configurations for the data plane—it does not handle any data. Tools like Nelson, SmartStack, and Istio all provide control-plane functionality in some form, and each has its own strategy for managing the relationship with proxies. In Kubernetes, for example, the control plane works in conjunction with the orchestration system to schedule services and their proxies, track service discovery, and configure proxies via API.
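The split between the two planes can be sketched in a few lines. This is a toy model under stated assumptions, not how Istio or Envoy is implemented: the `ControlPlane` and `SidecarProxy` classes, their methods, and the route names are all hypothetical. What it tries to show is that configuration flows from the control plane to the proxies, while request payloads only ever pass through the proxies.

```python
class SidecarProxy:
    """Toy data plane: forwards requests using whatever routes it was given."""

    def __init__(self, service: str):
        self.service = service
        self.routes = {}
        self.handled = 0

    def apply_config(self, routes: dict) -> None:
        # Called by the control plane; replaces the proxy's routing table.
        self.routes = dict(routes)

    def forward(self, destination: str, payload: bytes):
        target = self.routes.get(destination)
        if target is None:
            raise LookupError(f"no route for {destination!r}")
        self.handled += 1
        return target, payload


class ControlPlane:
    """Toy control plane: owns configuration, never touches request data."""

    def __init__(self):
        self.proxies = []
        self.routes = {}

    def attach(self, proxy: SidecarProxy) -> None:
        self.proxies.append(proxy)
        proxy.apply_config(self.routes)

    def set_route(self, destination: str, target: str) -> None:
        self.routes[destination] = target
        # Push the updated configuration to every attached proxy.
        for proxy in self.proxies:
            proxy.apply_config(self.routes)


control_plane = ControlPlane()
proxy = SidecarProxy("productpage")
control_plane.attach(proxy)

control_plane.set_route("reviews", "reviews-v1:9080")
first = proxy.forward("reviews", b"GET /reviews/1")

control_plane.set_route("reviews", "reviews-v2:9080")
second = proxy.forward("reviews", b"GET /reviews/1")
```

Note that `ControlPlane` has no method that accepts a payload at all; that is the "does not handle any data" property expressed in code.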

You can run Envoy as a standalone proxy without a control plane, but it’s Istio’s unique approach to the control plane/data plane workflow, as well as its core features (traffic management, security, observability) that, when combined with Envoy, makes it increasingly appealing to many users as a fully functional service mesh.

Inside the Istio service mesh

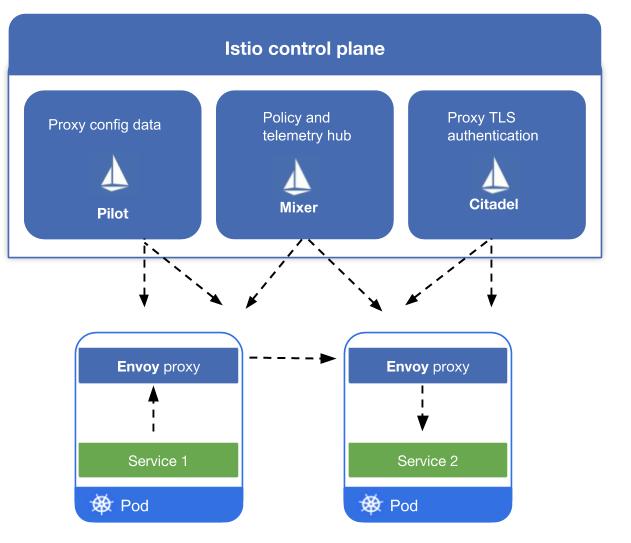

Istio’s architecture includes four main components. Istio uses Envoy sidecar proxies as its data plane, and three other tools make up the Istio control plane. Let’s look at each one:

Envoy: Envoy sidecar proxies serve as Istio’s data plane. Built-in features such as failure handling (for example, health checks and bounded retries), dynamic service discovery, and load balancing make Envoy a powerful tool. Envoy also provides information about service requests through attributes.

Mixer: Istio’s policy and telemetry hub gathers Envoy attributes about service requests within the mesh, and provides an API so DevOps teams can build plugins (or adapters) to repurpose those attributes within any number of third-party backends, including logging, authorization, or monitoring tools—such as New Relic (more on this below). Mixer also handles authorization between proxies using mutual TLS.

Pilot: Istio uses Pilot to manage load balancing traffic controls based on your Envoy configurations. As with Mixer, you can include adapters so Pilot can communicate via API with your Kubernetes infrastructure about deployment changes affecting traffic. Pilot also distributes authentication rules to proxies.

Citadel: With Citadel, Istio provides a robust, policy-driven security layer for authentication and credential management between Envoy proxies. Citadel manages keys and certificates across the mesh.
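The adapter idea behind Mixer can be illustrated with a small sketch. This is not Mixer's actual adapter interface: the `TelemetryHub` class, the attribute names, and the two handlers are hypothetical stand-ins. It only shows the general pattern of one hub fanning request attributes out to any number of pluggable backends.

```python
class TelemetryHub:
    """Toy policy/telemetry hub that fans attributes out to adapters."""

    def __init__(self):
        self.adapters = {}

    def register_adapter(self, name: str, handler) -> None:
        # handler is any callable that accepts a dict of attributes.
        self.adapters[name] = handler

    def report(self, attributes: dict) -> None:
        for handler in self.adapters.values():
            # Each adapter gets its own copy, so one backend cannot
            # alter what the next backend sees.
            handler(dict(attributes))


log_lines = []
request_counts = {}


def logging_adapter(attrs: dict) -> None:
    log_lines.append(f"{attrs['source']} -> {attrs['destination']} {attrs['code']}")


def metrics_adapter(attrs: dict) -> None:
    key = attrs["destination"]
    request_counts[key] = request_counts.get(key, 0) + 1


hub = TelemetryHub()
hub.register_adapter("logging", logging_adapter)
hub.register_adapter("metrics", metrics_adapter)

hub.report({"source": "productpage", "destination": "reviews", "code": 200})
hub.report({"source": "productpage", "destination": "reviews", "code": 503})
```

Adding a third backend means registering one more handler; the proxies that produce the attributes do not need to change.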

(For more specifics about how Istio works, refer to the documentation.)

Istio’s flexible traffic management

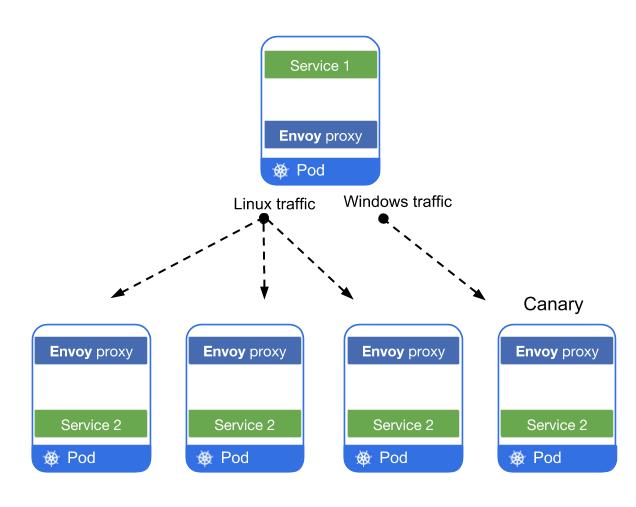

In Istio, you manage proxy traffic with Pilot’s traffic routing rules API. With Istio traffic management, you don’t have to worry as your applications scale—traffic is directed based on the rules you provide rather than according to pre-configured host settings.

Rule-based traffic control means you can route a specific portion of traffic to a specific version of a service (for example, specify the percentage of traffic that should hit a canary deploy), or set routing rules based on the content of a request (for example, specify that requests from Windows clients should be routed to a canary deploy).
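Both kinds of rule can be sketched in a single function. This is an illustration of the idea only, not Istio's routing API: the `choose_destination` function, the rule tuple format, the `user-agent` match, and the 90/10 split are all assumptions made for the example.

```python
import random


def choose_destination(headers: dict, rules: list, weights: dict, rng: random.Random) -> str:
    """Pick a destination for one request.

    rules   -- (header name, substring to look for, destination) tuples,
               checked in order; the first match wins.
    weights -- destination -> relative weight, used when no rule matches.
    """
    # Content-based routing: inspect the request itself.
    for header, substring, destination in rules:
        if substring in headers.get(header, ""):
            return destination

    # Weight-based routing: split the remaining traffic by percentage.
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


rules = [("user-agent", "Windows", "canary")]
weights = {"stable": 90, "canary": 10}
rng = random.Random(42)  # seeded only to make the sketch repeatable

windows_pick = choose_destination(
    {"user-agent": "Mozilla/5.0 (Windows NT 10.0)"}, rules, weights, rng
)
other_picks = [choose_destination({}, rules, weights, rng) for _ in range(10_000)]
canary_share = other_picks.count("canary") / len(other_picks)
```

With these inputs, the header rule always sends the matching request to the canary, while the rest of the traffic lands on the canary roughly one time in ten.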

When an orchestration platform like Kubernetes registers a service, the proxy for that service assumes the service’s DNS name, and the service’s HTTP traffic is routed through the proxy and through the available load balancing pool. (Istio supports round robin, random, and weighted least request load-balancing modes.) Additionally, Envoy runs periodic health checks on the instances in the pool and adds or removes them accordingly. These health checks are based on predefined thresholds for additions or removals that you configure in Pilot.
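Here is a rough sketch of a health-checked load balancing pool with the three selection modes. It is a simplification under stated assumptions: the class names, the default thresholds, and the unweighted least-request logic are invented for illustration and are not Envoy's actual algorithms or Pilot's configuration format.

```python
import random
from dataclasses import dataclass


@dataclass
class Instance:
    address: str
    healthy: bool = True
    consecutive_failures: int = 0
    consecutive_successes: int = 0
    active_requests: int = 0


class LoadBalancingPool:
    def __init__(self, addresses, unhealthy_threshold=3, healthy_threshold=2):
        self.instances = [Instance(a) for a in addresses]
        self.unhealthy_threshold = unhealthy_threshold
        self.healthy_threshold = healthy_threshold
        self._next = 0

    def record_health_check(self, address: str, passed: bool) -> None:
        inst = next(i for i in self.instances if i.address == address)
        if passed:
            inst.consecutive_successes += 1
            inst.consecutive_failures = 0
            if inst.consecutive_successes >= self.healthy_threshold:
                inst.healthy = True   # add back to the pool
        else:
            inst.consecutive_failures += 1
            inst.consecutive_successes = 0
            if inst.consecutive_failures >= self.unhealthy_threshold:
                inst.healthy = False  # remove from the pool

    def candidates(self):
        healthy = [i for i in self.instances if i.healthy]
        if not healthy:
            raise RuntimeError("no healthy instances in the pool")
        return healthy

    def round_robin(self) -> Instance:
        healthy = self.candidates()
        inst = healthy[self._next % len(healthy)]
        self._next += 1
        return inst

    def pick_random(self, rng: random.Random) -> Instance:
        return rng.choice(self.candidates())

    def least_requests(self) -> Instance:
        # Simplified: picks the healthy instance with the fewest
        # in-flight requests and ignores instance weights.
        return min(self.candidates(), key=lambda i: i.active_requests)


pool = LoadBalancingPool(["a:80", "b:80", "c:80"])
rotation = [pool.round_robin().address for _ in range(4)]

# Three failed checks in a row take "b:80" out of rotation...
for _ in range(3):
    pool.record_health_check("b:80", passed=False)
after_failures = [i.address for i in pool.candidates()]

# ...and two passing checks in a row bring it back.
for _ in range(2):
    pool.record_health_check("b:80", passed=True)
after_recovery = [i.address for i in pool.candidates()]
```

Using separate thresholds for removal and re-admission keeps a single slow or lucky check from flapping an instance in and out of the pool.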

Along with health checks, Istio includes a number of other traffic-management tasks, including circuit breaking, which limits the impact of networking issues like latency spikes; and traffic shifting, to let you move traffic across multiple versions of a service.
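Circuit breaking is easiest to understand as a small state machine. The sketch below shows the generic pattern, not Istio's configuration or Envoy's implementation; the `CircuitBreaker` class, its parameter names, and the default values are hypothetical.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                # Fail fast instead of piling more load on a struggling service.
                raise CircuitOpenError("circuit is open")
            # Timeout elapsed: fall through and allow one trial call.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        # Success: close the circuit and forget past failures.
        self.failures = 0
        self.opened_at = None
        return result
```

A caller wraps each outbound request, for example `breaker.call(send_request, payload)` where `send_request` is whatever function performs the call. Once the failure threshold is reached, further calls are rejected immediately until the reset timeout passes, which is how a breaker limits the blast radius of a latency spike or outage.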

Flexible traffic management is but one feature that makes Istio so useful in a microservices environment. But how are teams using Istio in actual production deployments?

The newrelic-istio-adapter

Istio also produces a significant amount of telemetry information that organizations want to collect. The newrelic-istio-adapter uses the go-telemetry-sdk, an open source set of API client libraries that send your metric and trace data to the New Relic platform. Using the SDK, the adapter integrates with Mixer to gather two types of telemetry data:

- Istio’s metric telemetry to send that open source metric data to New Relic

- Istio’s trace telemetry to send spans of distributed traces traversing the service mesh to New Relic

The newrelic-istio-adapter sits alongside Istio in an isolated environment to ensure no interference with the core service mesh functionality of Istio. Once the adapter is up and running, you configure Mixer to send telemetry about events within the service mesh to the adapter. The adapter transforms and delivers this telemetry data as curated open source (multi-dimensional) metrics—essentially, metrics with multiple key-value pairs—to New Relic.
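To show what "multi-dimensional" means in practice, here is a small sketch of a metric that carries key-value attributes and can be sliced along any of them. It does not use the adapter or the SDK: the `Metric` class, the `sum_by` helper, the metric name, and the attribute keys are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Metric:
    name: str
    value: float
    # The "dimensions": arbitrary key-value pairs attached to the data point.
    attributes: dict = field(default_factory=dict)


def sum_by(metrics, name: str, dimension: str) -> dict:
    """Total the values of one metric, grouped by one attribute key."""
    totals = defaultdict(float)
    for metric in metrics:
        if metric.name == name and dimension in metric.attributes:
            totals[metric.attributes[dimension]] += metric.value
    return dict(totals)


samples = [
    Metric("request.count", 12, {"source": "productpage", "destination": "reviews", "code": "200"}),
    Metric("request.count", 3, {"source": "productpage", "destination": "reviews", "code": "503"}),
    Metric("request.count", 7, {"source": "reviews", "destination": "ratings", "code": "200"}),
]

by_destination = sum_by(samples, "request.count", "destination")
by_code = sum_by(samples, "request.count", "code")
```

The same three data points answer two different questions (traffic per destination, traffic per response code) without being recorded twice, which is the practical benefit of attaching dimensions to each metric.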

Read more about getting started with the newrelic-istio-adapter.

Is Istio ready for prime time?

Like Kubernetes five years ago, Istio is still in its infancy when it comes to widespread enterprise adoption. After all, the idea of the service mesh itself is relatively new. Many companies are still taking initial steps toward shifting to modern software practices including microservice development—but Istio is definitely in the right place at the right time.


In addition to original Istio sponsors Google and IBM, Cisco and Red Hat OpenShift already offer Istio support within their platforms, while AWS has built its own service mesh to work with Envoy. This will be one development to watch in the evolution and adoption of Istio as more cloud providers add managed versions to their offerings.

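The views expressed in this blog are those of the author and do not necessarily reflect the official views of New Relic K.K. This blog may also contain links to external sites. New Relic makes no guarantees regarding the content of those linked sites.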