With New Relic, you can collect, process, explore, query, and create alerts on your log data at whatever scale you need. There are various options for getting logs into New Relic, such as an agent or one of the open source plugins like Fluent Bit. In a serverless architecture, though, it's hard to rely on installed agents to forward logs. This is where an agentless approach helps: you can send all your logs to New Relic without installing any agent.

In this blog post, we'll use only native Google Cloud services to set up an agentless log forwarder. By default, Google Cloud services write logs to Google Cloud Logging (formerly Stackdriver). In the Logs Router, we'll configure a sink whose destination is a Cloud Pub/Sub topic. Whenever a matching log entry is published to this topic, it triggers a linked Cloud Function through the topic's push subscription. The Cloud Function receives each log entry and forwards it to New Relic through the New Relic Log API.
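To make the data flow concrete, here is a minimal sketch, using only Node.js built-ins, that simulates what happens to one log entry between the sink and the function. It assumes, as the function in step 4 does, that the entry arrives as JSON that is base64-encoded in the message's data field. The names sampleEntry, toPubSubMessage, and fromPubSubMessage are hypothetical, not Google Cloud APIs, and no cloud resources are involved.

```javascript
// Hypothetical log entry, shaped like a Cloud Logging LogEntry (fields trimmed).
const sampleEntry = {
  logName: "projects/my-project/logs/winston_log",
  severity: "INFO",
  textPayload: "checkout completed",
  timestamp: "2023-01-01T00:00:00Z",
};

// Hop 1 (sink -> Pub/Sub -> function event): the entry is serialized as JSON
// and appears base64-encoded in the message's `data` field.
function toPubSubMessage(entry) {
  return { data: Buffer.from(JSON.stringify(entry)).toString("base64") };
}

// Hop 2 (inside the Cloud Function): decode `data` back into the JSON string
// that is then sent to the New Relic Log API.
function fromPubSubMessage(message) {
  return Buffer.from(message.data, "base64").toString();
}

const message = toPubSubMessage(sampleEntry);
const decoded = fromPubSubMessage(message);
console.log(decoded === JSON.stringify(sampleEntry)); // true
```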

Before you begin

Before you start this tutorial, make sure you have completed these prerequisites:

- Enable the Google Cloud APIs for the services used in this tutorial: Cloud Functions, Cloud Pub/Sub, and Cloud Logging.

- Get your New Relic Insights Insert Key, which is different from your ingest license key. This is explained in step 3 of Configure log forwarding from Google Cloud.

During the configuration, make sure you do these tasks:

- Set up the correct inclusion and exclusion filters on the Google Cloud Logging sink, which you configure in the Logs Router. You'll do this in steps 10 and 11 of Configure log forwarding from Google Cloud.

- Create a runtime environment variable to store your New Relic Insights Insert Key securely. Follow the instructions in step 3 of Configure log forwarding from Google Cloud.

Now let's get started implementing and configuring log forwarding from Google Cloud to New Relic.

Configure log forwarding from Google Cloud to New Relic

1. Create a Cloud Function in Google Cloud and enter its basic details, such as the function name and region.

2. Select Pub/Sub as the trigger for your Cloud Function, and create a new topic for it.

3. In the Cloud Function configuration, add a runtime environment variable named API_KEY and set it to your Insights Insert Key. Storing the key here keeps it out of your Cloud Function's source code. Generate this key from the link in the right panel of the Account Settings > API Keys page.

Note: This Insights Insert Key is not the Insights License Key or the Browser API Key on the Account Settings > API page.
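Because the function reads the key from process.env.API_KEY, a missing or misnamed variable would only show up later as rejected requests. As an optional hardening step, you could add a guard like the one below. The getApiKey and buildHeaders helpers are hypothetical and not part of the original sample; they simply fail fast with a clear error.

```javascript
// Hypothetical helper: read the New Relic key from the runtime environment
// and fail fast if it is missing, so the key never has to live in source code.
function getApiKey(env = process.env) {
  const key = env.API_KEY;
  if (typeof key !== "string" || key.trim() === "") {
    throw new Error("API_KEY runtime environment variable is not set");
  }
  return key.trim(); // tolerate stray whitespace from copy and paste
}

// Hypothetical helper: build the request headers used by the forwarder.
function buildHeaders(env = process.env) {
  return {
    "Content-Type": "application/json",
    "Api-Key": getApiKey(env),
  };
}

console.log(buildHeaders({ API_KEY: "example-key" })["Api-Key"]); // example-key
```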

4. Now, copy this Node.js code sample and paste it into your Google Cloud Functions source.

```javascript
const https = require("https");

// Background function triggered by Pub/Sub. Returning a Promise tells
// Cloud Functions to wait until the request to New Relic has finished.
exports.nrLogForwarder = (message, context) => {
  // Pub/Sub delivers the log entry base64-encoded in message.data.
  const pubSubMessage = Buffer.from(message.data, "base64").toString();

  /* Set up the payload for New Relic with the decoded Pub/Sub message,
     using "message" and "logtype" as attributes. */
  const logPayload = {
    message: pubSubMessage,
    logtype: "gcpStackdriverLogs",
  };
  const body = JSON.stringify(logPayload);

  // Configure the HTTP options for a POST to the New Relic Log API.
  const options = {
    hostname: "log-api.newrelic.com",
    port: 443,
    path: "/log/v1",
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      "Content-Length": Buffer.byteLength(body),
      // Insights Insert Key, read from the API_KEY runtime environment variable.
      "Api-Key": process.env.API_KEY,
    },
  };

  return new Promise((resolve, reject) => {
    // HTTP request with the configured options.
    const req = https.request(options, (res) => {
      console.log(`statusCode: ${res.statusCode}`);
      const chunks = [];
      res.on("data", (d) => chunks.push(d));
      res.on("end", () => {
        console.log(`res: ${Buffer.concat(chunks).toString()}`);
        resolve();
      });
    });

    // Surface network errors so the invocation is reported as failed.
    req.on("error", (error) => {
      console.error(error);
      reject(error);
    });

    // Write the payload to the request and send it.
    req.write(body);
    req.end();
  });
};
```

In Google Cloud Functions, this code accepts the message from the Pub/Sub topic and decodes it. Pub/Sub delivers the log entry base64-encoded in the message's data field, alongside metadata such as attributes that isn't relevant to the logs themselves. After the message is decoded, the extracted log entry is forwarded through the New Relic Log API. The function returns a Promise so that Cloud Functions waits for the request to complete before the invocation ends.
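If your log entries are large, you might also compress the request body. The following minimal sketch assumes the Log API accepts gzip-compressed bodies sent with a Content-Encoding: gzip header; check the New Relic Log API documentation before relying on it. The buildLogPayload and gzipBody helpers are hypothetical, reuse the payload shape from the function above, and send no request.

```javascript
const zlib = require("zlib");

// Hypothetical helper: build the same simplified payload as the forwarder
// from a Pub/Sub message whose data field is base64-encoded.
function buildLogPayload(message) {
  return {
    message: Buffer.from(message.data, "base64").toString(),
    logtype: "gcpStackdriverLogs",
  };
}

// Hypothetical helper: gzip the JSON body. When sending it, you would also set
// the header "Content-Encoding: gzip" (an assumption to verify in the docs).
function gzipBody(payload) {
  return zlib.gzipSync(JSON.stringify(payload));
}

// Usage with a fake Pub/Sub message; nothing is sent over the network.
const fakeMessage = { data: Buffer.from('{"severity":"INFO"}').toString("base64") };
const compressed = gzipBody(buildLogPayload(fakeMessage));
console.log(JSON.parse(zlib.gunzipSync(compressed).toString()).message); // {"severity":"INFO"}
```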

5. Make sure the entry point exactly matches the exported function name in the code (nrLogForwarder in this sample). Then deploy the Cloud Function.
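If the entry point and the exported name differ, the deployment typically fails because Cloud Functions can't find the function. To check this before deploying, a minimal sketch like the following compares the configured entry point against a module's exports. checkEntryPoint is a hypothetical helper, and fakeModule stands in for what require("./index.js") would return for the code in step 4.

```javascript
// Hypothetical pre-deploy check: does the configured entry point name an
// exported function? Matching is case-sensitive, like the deployment itself.
function checkEntryPoint(moduleExports, entryPoint) {
  if (typeof moduleExports[entryPoint] !== "function") {
    const available = Object.keys(moduleExports).join(", ") || "(none)";
    throw new Error(
      `Entry point "${entryPoint}" not found; exports: ${available}`
    );
  }
  return true;
}

// Usage with a stand-in for the forwarder module.
const fakeModule = { nrLogForwarder: () => {} };
console.log(checkEntryPoint(fakeModule, "nrLogForwarder")); // true
```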

6. Verify that your function deployed successfully by looking for a green check mark. Deployment can take a few minutes after you select Deploy in the previous step.

7. After the function is deployed, open Logs Explorer under Logging in the Google Cloud console. From there, create a sink that forwards the logs to the Pub/Sub topic you created earlier.

8. On the next screen, add the sink details and configure the sink to forward the logs. In this example, the sink is named NRLogForwarder.

9. For the Sink Destination, choose Cloud Pub/Sub Topic and then select the topic we created in step 2.

10. Optionally, you can add an inclusion filter. By default, the sink is configured to forward all the logs that appear in the Logs Explorer.

You can configure it to forward only the logs for the services that you want to monitor with New Relic. This is especially helpful when you have many services running on Google Cloud.

For example, you might have a microservices architecture and want to capture all the logs generated by those services, which could be running on serverless platforms such as Cloud Functions, Cloud Run, or App Engine. These logs can provide valuable insights into your customers' journeys on your platform, helping you debug issues and identify bottlenecks.

In this example, I used the Winston logger in my Node.js apps with the Google Cloud Logging transport for Winston, and I wanted to forward all the logs written by that transport to New Relic.

Copy this snippet and replace the placeholder with your project ID:

```
log_name="projects/<Your Project ID>/logs/winston_log"
```

Then add it as the inclusion filter under Sink Configuration, like this example:

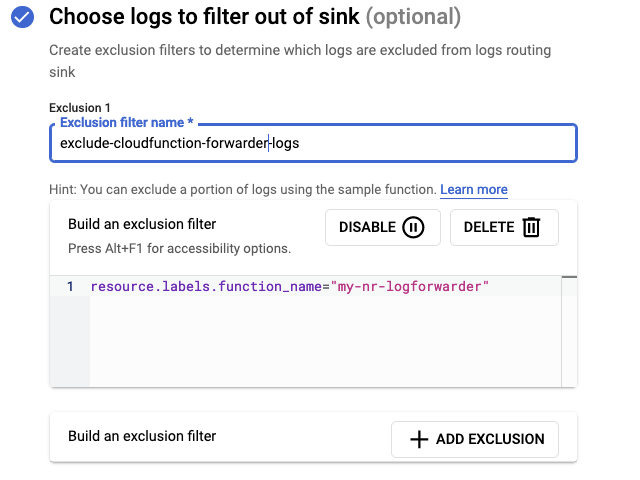
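If you forward logs from several sources, you can build the filter string programmatically instead of typing it. Here is a minimal sketch; logNameFilter and anyOf are hypothetical helpers. It reflects the Cloud Logging rule that the log ID part of a log name is URL-encoded (so a / inside a log ID becomes %2F), and it combines filters with the query language's OR operator.

```javascript
// Hypothetical helper: build an inclusion filter that matches one log.
// encodeURIComponent turns "/" into "%2F"; "winston_log" is left unchanged.
function logNameFilter(projectId, logId) {
  return `log_name="projects/${projectId}/logs/${encodeURIComponent(logId)}"`;
}

// Hypothetical helper: match any of several filters.
function anyOf(filters) {
  return filters.map((f) => `(${f})`).join(" OR ");
}

console.log(logNameFilter("my-project", "winston_log"));
// log_name="projects/my-project/logs/winston_log"

console.log(
  anyOf([
    logNameFilter("my-project", "winston_log"),
    logNameFilter("my-project", "cloudaudit.googleapis.com/activity"),
  ])
);
```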

11. Required step: Add an exclusion filter.

If you skip this step, your sink, Cloud Function, and Pub/Sub topic will enter an infinite loop of capturing and forwarding logs, which increases your bill.

Because Cloud Function executions also generate logs, you need to add an exclusion filter in the sink configuration that excludes the logs generated by the forwarding Cloud Function itself.

Copy this filter:

```
resource.labels.function_name="my-nr-logforwarder"
```

Add it as your exclusion filter, replacing my-nr-logforwarder with your function's name.
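To see why the exclusion filter matters, here is an illustration only: a plain JavaScript predicate that mimics what the sink's inclusion and exclusion filters decide for one log entry. The real filtering is done by Cloud Logging, not by your code; wouldForward and its options are hypothetical names.

```javascript
// Illustration only: mimics the sink's filter decision for one log entry.
function wouldForward(entry, { includeLogName, excludeFunctionName } = {}) {
  // No inclusion filter means every entry is included (the sink default).
  const included =
    includeLogName === undefined || entry.logName === includeLogName;
  const fnName = entry.resource?.labels?.function_name;
  const excluded =
    excludeFunctionName !== undefined && fnName === excludeFunctionName;
  return included && !excluded;
}

// A log line written by the forwarder itself while it runs.
const ownLog = { resource: { labels: { function_name: "my-nr-logforwarder" } } };

// Without an exclusion filter, the forwarder's own logs are forwarded again,
// which triggers the function again: the infinite loop described above.
console.log(wouldForward(ownLog)); // true
console.log(wouldForward(ownLog, { excludeFunctionName: "my-nr-logforwarder" })); // false
```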

12. Now let's go to New Relic, verify the implementation, and explore the forwarded logs!

Pub/Sub publishes the messages as base64, and the code in the Cloud Function converts them back into a readable string. New Relic's built-in log parsing then recognizes the message as JSON and parses it, which gives us access to attributes such as authorizationinfo, emailid, and method names that we can use to query the logs. With this data, we can monitor our services, make sure they're running optimally, and troubleshoot any issues that arise.
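To get a rough idea of which attribute names a nested JSON log entry turns into, here is an approximation that flattens nested objects into dot-separated keys. It is not New Relic's parser, only a sketch under the assumption that nested fields surface as dot-separated attribute names; flatten and the sample entry are hypothetical.

```javascript
// Approximation only: flatten nested JSON into dot-separated keys.
// Arrays, null, and primitives are kept as leaf values in this sketch.
function flatten(obj, prefix = "", out = {}) {
  for (const [key, value] of Object.entries(obj)) {
    const name = prefix ? `${prefix}.${key}` : key;
    if (value !== null && typeof value === "object" && !Array.isArray(value)) {
      flatten(value, name, out);
    } else {
      out[name] = value;
    }
  }
  return out;
}

// Usage with a hypothetical audit-style entry, as decoded by the function.
const entry = JSON.parse(
  '{"severity":"NOTICE","protoPayload":{"methodName":"example.method",' +
    '"authenticationInfo":{"principalEmail":"dev@example.com"}}}'
);
console.log(Object.keys(flatten(entry)).join(", "));
// severity, protoPayload.methodName, protoPayload.authenticationInfo.principalEmail
```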

You’ve seen that by setting up and using native services from Google Cloud, you can forward all your logs to New Relic. Then you can enjoy the benefits of enhanced log monitoring and explore other capabilities of New Relic. Want to brush up more on log integration? Learn about Cloudflare log integration.

Next steps

Learn more about the New Relic Log API in our documentation.

If you aren't using New Relic yet but want to try out log monitoring and explore what else you can do, sign up for a free New Relic account. Your free account includes 100 GB/month of free data ingest, one full-platform user, and unlimited free basic users.

The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. Please join us exclusively at the Explorers Hub (discuss.newrelic.com) for questions and support related to this blog post. This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve, or endorse the information, views, or products available on such sites.