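Over the past 20 years, technological change has carried the power of distributed systems, once confined to specialized fields such as telecommunications, into the everyday operations of many companies. With this shift comes the need to understand and monitor large-scale distributed systems. In a distributed architecture with many dependencies, working out where a particular error or a rise in latency affects users can become complex and difficult. Let's explore how New Relic can help you understand hard-to-trace problems in a distributed world.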

Metrics

DevOps engineers typically keep an eye on a few performance metrics, such as:

- Payload size (size)

- Time taken by a given request (duration)

- Whether the request succeeded (error)
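As a minimal sketch of what these three metrics mean in practice, the Ruby below times an arbitrary block, measures the size of what it returns, and notes whether it raised. `measure_request` and its `:size`, `:duration`, and `:error` keys are hypothetical names chosen for illustration, not part of any New Relic API.

```ruby
# Hypothetical helper: run a request handler (the block) and record
# the three basic metrics: payload size, duration, and error.
def measure_request
  started = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  body = nil
  error = false
  begin
    body = yield
  rescue StandardError
    # This sketch records the failure and returns; real instrumentation
    # would normally re-raise after recording it.
    error = true
  end
  duration = Process.clock_gettime(Process::CLOCK_MONOTONIC) - started
  {
    size: body.to_s.bytesize, # payload size in bytes (0 when nothing was returned)
    duration: duration,       # elapsed wall-clock seconds
    error: error              # true if the handler raised
  }
end

ok = measure_request { '{"status":"ok"}' }
failed = measure_request { raise "upstream timeout" }
```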

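To improve debuggability, it is also common to enrich the metrics above with additional attributes:

- Upstream application: records which upstream service generated this request.

- Trace ID: an identifier used to follow an individual request across many systems.

If you run a multi-tenant application, you can also include tenant-related attributes such as "userId" or "tenantId" so that you can debug individual requests for a specific customer.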
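A sketch of this enrichment step, assuming the attributes arrive as request headers. `enrich` and the header-to-attribute mapping are hypothetical; the `x-tenant-id` and `x-user-id` header names in particular are assumptions, since only `x-application-context` and `x-trace-id` appear in this article's examples.

```ruby
# Hypothetical mapping from request header to debugging attribute.
# x-tenant-id and x-user-id are assumed names for the multi-tenant case.
ATTRIBUTE_HEADERS = {
  "x-application-context" => :upstreamApplication,
  "x-trace-id"            => :traceId,
  "x-tenant-id"           => :tenantId,
  "x-user-id"             => :userId
}.freeze

# Return a copy of the metrics hash with any attributes found in the headers.
def enrich(metrics, headers)
  # HTTP header names are case-insensitive, so normalise before lookup.
  normalised = headers.transform_keys { |k| k.to_s.downcase }
  ATTRIBUTE_HEADERS.each_with_object(metrics.dup) do |(header, attr), out|
    value = normalised[header]
    out[attr] = value unless value.nil? # skip attributes the caller did not send
  end
end

enriched = enrich(
  { size: 15, duration: 0.012, error: false },
  { "X-Trace-Id" => "abc123", "x-application-context" => "web-dashboard", "X-Tenant-Id" => "tenant-42" }
)
```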

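A common way to propagate this information across distributed applications is to include it in headers when making a request. Downstream applications can then tell who the client is and understand the semantics of the call. This pattern works well for both synchronous and event-driven architectures.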
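For a synchronous HTTP call, propagation might look like the following minimal sketch using Ruby's standard `Net::HTTP` library. `build_downstream_request`, `APP_NAME`, and the URL are hypothetical, and the request is only constructed, not sent. In an event-driven system the same two keys would be written into the message headers or metadata instead.

```ruby
require "net/http"
require "securerandom"

# Hypothetical name of the service making the call.
APP_NAME = "api-gateway"

# Build (but do not send) a downstream request that carries the trace context.
def build_downstream_request(uri, incoming_trace_id)
  req = Net::HTTP::Post.new(uri)
  # Reuse the caller's trace ID so every hop shares it; start a new one otherwise.
  req["x-trace-id"] = incoming_trace_id || SecureRandom.hex(16)
  # Tell the downstream service who is calling.
  req["x-application-context"] = APP_NAME
  req
end

req = build_downstream_request(URI("http://auth-service.internal/token/refresh"), "abc123")
# Sending it would be: Net::HTTP.start(uri.host, uri.port) { |http| http.request(req) }
```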

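Instrumentation

New Relic offers many ways to instrument your applications with the relevant data. Power users can take advantage of custom events, but for simpler use cases it may be enough to add custom attributes to "Transaction" events. Let's look at a few simple examples.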

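Ruby on Rails

In a Ruby on Rails application, the New Relic agent lets you add custom attributes to the current transaction, as in the following example:

```ruby
class MyController < ApplicationController
  def do_action
    # Attach the caller's identity and trace ID to the current New Relic transaction.
    # A header the client did not send is read as nil.
    NewRelic::Agent.add_custom_attributes({
      upstreamApplication: request.headers['x-application-context'],
      traceId: request.headers['x-trace-id']
    })

    # ...
  end
end
```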
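If many actions need the same attributes, one option is to set them once per request in Rack middleware rather than in every controller. The sketch below assumes a Rack-compatible stack; `UpstreamAttributesMiddleware` is hypothetical, and the recorder is injected so the code runs without the agent. Passing `NewRelic::Agent.method(:add_custom_attributes)` as the recorder is an untested assumption: whether a New Relic transaction is already active at that point depends on where the middleware sits in the stack, so verify it in your own application.

```ruby
# Hypothetical Rack middleware that records upstream attributes once per request.
class UpstreamAttributesMiddleware
  def initialize(app, recorder)
    @app = app
    @recorder = recorder # any callable that accepts an attributes hash
  end

  def call(env)
    # Rack exposes request headers as HTTP_* keys in env.
    attrs = {
      upstreamApplication: env["HTTP_X_APPLICATION_CONTEXT"],
      traceId: env["HTTP_X_TRACE_ID"]
    }.compact # drop headers the caller did not send
    @recorder.call(attrs) unless attrs.empty?
    @app.call(env)
  end
end

# Usage with a stub app and an in-memory recorder.
recorded = []
app = ->(_env) { [200, { "content-type" => "text/plain" }, ["ok"]] }
mw = UpstreamAttributesMiddleware.new(app, ->(attrs) { recorded << attrs })
status, _headers, _body = mw.call({ "HTTP_X_TRACE_ID" => "abc123" })
```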

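Java Spring

For Java Spring developers the process is just as simple. The example below shows how to achieve the same instrumentation in a Java Spring application:

```java
import com.newrelic.api.agent.NewRelic;
import org.springframework.web.bind.annotation.RequestHeader;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestMethod;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class MyController {

  // Both headers are required by default (Spring responds 400 if one is missing);
  // add required = false to @RequestHeader to make them optional.
  @RequestMapping(path = "/api/doAction", method = RequestMethod.POST)
  public void doAction(
      @RequestHeader("x-application-context") String applicationContext,
      @RequestHeader("x-trace-id") String traceId) {
    // Attach the caller's identity and trace ID to the current New Relic transaction.
    NewRelic.addCustomParameter("upstreamApplication", applicationContext);
    NewRelic.addCustomParameter("traceId", traceId);
    // ...
  }
}
```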

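The New Relic SDK lets you quickly instrument information about upstream applications, which helps when debugging complex problems.

Distributed tracing

If you have distributed tracing enabled, New Relic automatically adds equivalent trace attributes, such as a trace ID, along with the headers that carry them, and propagates them between applications. You don't need to worry about instrumenting both client and server with matching headers; you can simply rely on the New Relic agent's built-in capabilities.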
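To see what such propagated context looks like, here is a minimal parser for the W3C Trace Context `traceparent` header, one of the formats New Relic agents can use for distributed tracing (which headers are actually sent depends on the agent version and configuration). The sketch handles only the version `00` layout; `parse_traceparent` is a hypothetical helper, and the sample value is the example from the W3C specification.

```ruby
# version-trace_id-parent_id-flags, all lowercase hex (version 00 layout only).
TRACEPARENT = /\A(?<version>[0-9a-f]{2})-(?<trace_id>[0-9a-f]{32})-(?<parent_id>[0-9a-f]{16})-(?<flags>[0-9a-f]{2})\z/

# Returns a hash describing the trace context, or nil if the header is malformed.
def parse_traceparent(header)
  m = TRACEPARENT.match(header.to_s.strip)
  return nil unless m
  return nil unless m[:version] == "00"    # this sketch understands version 00 only
  return nil if m[:trace_id] == "0" * 32   # an all-zero trace ID is invalid
  return nil if m[:parent_id] == "0" * 16  # an all-zero parent ID is invalid
  {
    trace_id: m[:trace_id],
    parent_id: m[:parent_id],
    sampled: (m[:flags].to_i(16) & 0x01) == 1 # lowest flag bit means "sampled"
  }
end

ctx = parse_traceparent("00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01")
```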

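Monitoring

In New Relic, you can run a query like the following:

```
FROM Transaction SELECT traceId, appName, upstreamApplication
```

This shows the various transactions that occurred and where each one came from.

To follow a specific failed request across multiple services, run a query that filters on traceId, for example by appending WHERE traceId = '<trace ID>' to the query above. This gives you a table of the request's path from client to server.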

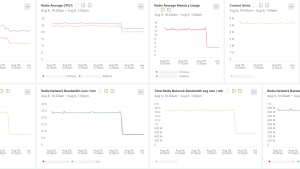

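Combining this with attributes already present on Transaction events, such as whether the transaction errored, you can determine that an error surfacing in an upstream application actually originated in one of its dependencies. In this example, the error actually occurred in the "api-gateway" service, which sits between "web-dashboard" and "auth-service".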
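The reasoning above can be sketched in a few lines of Ruby. The rows are hypothetical stand-ins for Transaction events returned by a traceId query, modeled on this example (web-dashboard calls api-gateway, which calls auth-service); `error_origins` is a hypothetical helper that treats a failing service as the origin when none of the services it calls also failed.

```ruby
# Hypothetical Transaction rows (traceId, appName, upstreamApplication, error).
Row = Struct.new(:trace_id, :app_name, :upstream_application, :error, keyword_init: true)

ROWS = [
  Row.new(trace_id: "abc123", app_name: "web-dashboard", upstream_application: nil,             error: true),
  Row.new(trace_id: "abc123", app_name: "api-gateway",   upstream_application: "web-dashboard", error: true),
  Row.new(trace_id: "abc123", app_name: "auth-service",  upstream_application: "api-gateway",   error: false),
  Row.new(trace_id: "zzz999", app_name: "web-dashboard", upstream_application: nil,             error: false)
].freeze

# Failing services whose own downstream calls did not fail,
# i.e. where the error most likely originated.
def error_origins(rows, trace_id)
  trace = rows.select { |r| r.trace_id == trace_id } # the WHERE traceId filter
  failing = trace.select(&:error)
  origins = failing.reject do |f|
    # A downstream call is a row whose upstream is this service.
    trace.any? { |r| r.upstream_application == f.app_name && r.error }
  end
  origins.map(&:app_name)
end

error_origins(ROWS, "abc123")
```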

With that understanding, you can investigate the failing application in APM and confirm that the error occurred while the api-gateway service was refreshing a token.

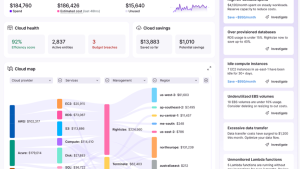

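The built-in distributed tracing UI also lets you view a map of your application's dependencies, as well as individual transactions and their dependencies, alongside key performance information such as span durations.

Here, for example, you can see how New Relic distributed tracing is used to follow a sign-up flow that ends up calling several APIs in the user service.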
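Spans in a trace form a tree: each span records its parent, and the UI draws that tree with each span's duration. The sketch below renders the same structure as indented text. The span names and durations are hypothetical, loosely modeled on the sign-up flow described above, and `render_tree` is an illustrative helper, not a New Relic API.

```ruby
# Hypothetical spans for one trace; each span points at its parent by ID.
Span = Struct.new(:id, :parent_id, :name, :duration_ms, keyword_init: true)

SPANS = [
  Span.new(id: "a", parent_id: nil, name: "POST /signup",                   duration_ms: 180),
  Span.new(id: "b", parent_id: "a", name: "user-service POST /users",       duration_ms: 95),
  Span.new(id: "c", parent_id: "b", name: "user-service GET /users/lookup", duration_ms: 20),
  Span.new(id: "d", parent_id: "a", name: "user-service POST /users/verify", duration_ms: 40)
].freeze

# Render spans as an indented tree; children keep their input order.
def render_tree(spans)
  children = spans.group_by(&:parent_id)
  lines = []
  walk = lambda do |span, depth|
    lines << "#{'  ' * depth}#{span.name} (#{span.duration_ms} ms)"
    (children[span.id] || []).each { |child| walk.call(child, depth + 1) }
  end
  (children[nil] || []).each { |root| walk.call(root, 0) } # root spans have no parent
  lines.join("\n")
end

puts render_tree(SPANS)
```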

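Conclusion

When your distributed infrastructure consists of many microservices, debugging even a simple error can be hard. Adding debugging information such as "traceId" and "upstreamApplication" lets you track down the source of an error quickly and efficiently within a complex mesh of service-to-service calls. New Relic distributed tracing makes this much easier still.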

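Next steps

Want better visibility into your distributed systems? Sign up for New Relic today and start tracking down complex issues with ease.

For more detailed guidance on using New Relic with distributed systems, see the following resources.

Alternatively, you can take a self-paced course on distributed tracing.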

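The views expressed on this blog are those of the author and do not necessarily reflect the official views of New Relic K.K. This blog may also contain links to external sites; New Relic makes no guarantees regarding the content of those linked sites.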