When monitoring the performance of your tech stack, you’re likely collecting telemetry data in the form of metrics, events, logs, and traces, all of which can contain data in the form of URLs. These URL attributes are usually stored as standard strings, which, in the past, posed challenges for querying using New Relic Query Language (NRQL) because of the limited functions available for strings. And while you could theoretically add specific instrumentation to address these challenges, knowing what to instrument in advance isn’t always easy or even possible.

To demonstrate some of the challenges of querying this data, let’s look at the following example.

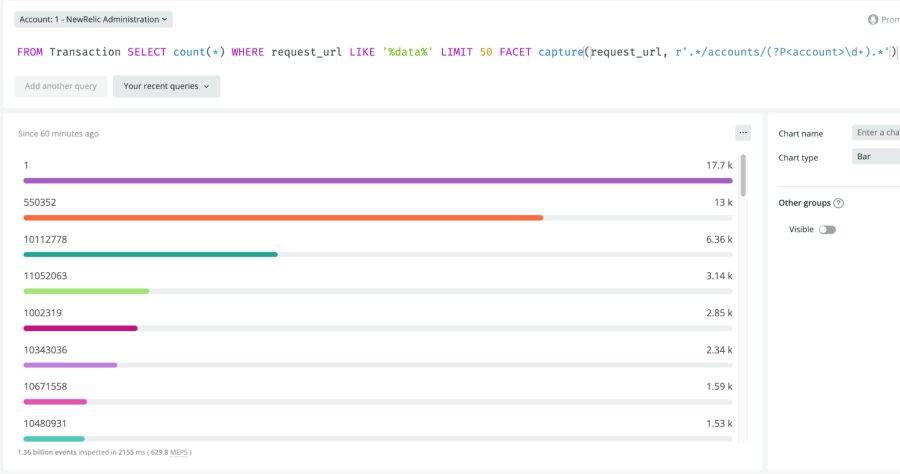

The URLs in the image above are being published as attributes on the transaction event for an example service. By faceting on the attribute request_url, you only see aggregated data by the full string (in this case, request count). But what if you wanted to facet by the account within the path? As it stands, it would be nearly impossible to find which account is using each endpoint the most, how many unique accounts are using each endpoint, which endpoint has the highest traffic irrespective of account, and so on.

That’s why we’ve released regex capture, making it easier than ever to query and extract useful data from strings such as URLs, log messages, and more.

How regex capture works

You can use regex capture in NRQL with the capture function. The capture function allows you to use regular expressions in your queries and extract focused data. Here's an example of the capture function:

capture(request_url, r'.*/accounts/(?P<account>\d+).*')

The capture function takes two arguments. The first, request_url, is the attribute containing the URL string. The second is a regular expression pattern written with the special literal syntax r'...pattern...', which has more accommodating escaping than a string literal argument. Regex within NRQL is built on the RE2 syntax.

On its own, a regex match only tells you whether a string matches the pattern (true or false). If that boolean result is all you need, take a look at RLIKE. As shown above, the part of the pattern to be captured is defined by a named capture group: (?P<name>pattern). The entire pattern is used for matching, and the capture group defines which subpattern to extract. We will go into more detail about how it works for the example in the next section. As it stands, only a single capture is allowed. We are planning to extend to multi-capture in the future!
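To experiment with a pattern before putting it in a query, the behavior described above can be approximated locally. The following is a minimal Python sketch, not New Relic's implementation: the capture helper and the sample URLs are hypothetical, Python's re module is not RE2 (though it accepts the same (?P<name>...) group syntax), and returning None for a non-match is an assumption about how a missing capture is represented.

```python
import re
from typing import Optional

# The pattern from the article, written as a Python raw string.
ACCOUNT_PATTERN = r".*/accounts/(?P<account>\d+).*"


def capture(value: str, pattern: str) -> Optional[str]:
    """Hypothetical local stand-in for NRQL's capture().

    Matches the whole string against the pattern and returns the text of
    the single named group, or None when the string does not match.
    """
    compiled = re.compile(pattern)
    # Mirror the article's "only a single capture is allowed" restriction.
    if len(compiled.groupindex) != 1:
        raise ValueError("expected exactly one named capture group")
    match = compiled.fullmatch(value)
    if match is None:
        return None
    (name,) = compiled.groupindex  # the one and only group name
    return match.group(name)


# Made-up URLs for illustration only.
print(capture("/api/v1/accounts/507990/data/query", ACCOUNT_PATTERN))  # 507990
print(capture("/api/v1/status", ACCOUNT_PATTERN))                      # None
```

The whole URL has to match for anything to be returned, but only the text inside the named group comes back.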

Regex capture in action

Regex capture makes it simple to query data from the example above. While regex can be intimidating to work with at first, RE2 has clear documentation, and Stack Overflow has great answers on how to formulate more complex regular expressions.

Let’s walk through an example. This query uses regex capture to find which accounts are using specific service endpoints (the request_url) most frequently:

FROM Transaction SELECT count(*) WHERE request_url LIKE '%data%' LIMIT 50 FACET capture(request_url, r'.*/accounts/(?P<account>\d+).*')

In the query above, SELECT count(*) returns the number of transactions for each facet. The WHERE clause limits the results to URLs that contain data, and the FACET clause uses regex capture to group the results by the captured account ID.

The regex itself is composed of the following sections:

.*/accounts/ matches any leading characters up to and including /accounts/. This way, you can find the piece of the string just before the account ID.

(?P<account>\d+) is the required capture group, which extracts one or more digits under the name account. Doing this extracts the embedded account ID.

.* matches any trailing characters, allowing you to match any URL path. This is necessary because all the URLs have paths following the account ID and the pattern should match across different URL paths. In this case, we don't care which endpoint is being used.
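The faceted count can also be approximated outside New Relic to check that the pattern groups URLs the way you expect. This is a rough Python sketch under stated assumptions: the request_urls list is made up, the substring check only mimics LIKE '%data%', and URLs without an account segment are simply skipped, which may not be how NRQL treats a facet with no captured value.

```python
import re
from collections import Counter

ACCOUNT_RE = re.compile(r".*/accounts/(?P<account>\d+).*")

# Hypothetical request_url values; real data would come from Transaction events.
request_urls = [
    "/api/v1/accounts/101/data/query",
    "/api/v1/accounts/101/data/export",
    "/api/v1/accounts/202/data/query",
    "/api/v1/accounts/101/users",  # filtered out: does not contain "data"
    "/api/v1/health/data",         # skipped: no /accounts/<id> segment
]


def facet_counts(urls):
    """Count requests per captured account ID."""
    counts = Counter()
    for url in urls:
        if "data" not in url:  # rough equivalent of WHERE request_url LIKE '%data%'
            continue
        match = ACCOUNT_RE.fullmatch(url)
        if match:
            counts[match.group("account")] += 1
    return counts


# most_common(50) plays the role of LIMIT 50, ordered by request count.
for account, requests in facet_counts(request_urls).most_common(50):
    print(account, requests)
```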

Below are the results of the query. Note the specific numbers identifying each account. The capture function is only able to return these values because a named capture group was specified in the second argument.

Next, let's take a look at how many unique accounts are hitting these endpoints. Regex capture can be used in the FACET, WHERE, and SELECT clauses. This example uses the capture function in the SELECT clause as an input argument to uniqueCount:

FROM Transaction SELECT uniqueCount(capture(request_url, r'.*/accounts/(?P<account>\d+).*')) WHERE request_url LIKE '%data%'

With uniqueCount, each account is counted only once, no matter how many requests it makes. In other words, this query returns the total number of distinct accounts that use any of the endpoints matched by the WHERE clause.
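The difference between counting requests and counting accounts is easy to see in a small local sketch. The Python below is illustrative only: the sample URLs are hypothetical, and a plain set stands in for uniqueCount.

```python
import re

ACCOUNT_RE = re.compile(r".*/accounts/(?P<account>\d+).*")


def unique_account_count(urls):
    """Return how many distinct account IDs appear in the matching URLs."""
    accounts = set()
    for url in urls:
        if "data" not in url:  # rough equivalent of WHERE request_url LIKE '%data%'
            continue
        match = ACCOUNT_RE.fullmatch(url)
        if match:
            accounts.add(match.group("account"))  # repeats collapse in the set
    return len(accounts)


# Hypothetical sample: three requests, but only two distinct accounts.
sample = [
    "/api/v1/accounts/101/data/query",
    "/api/v1/accounts/101/data/export",
    "/api/v1/accounts/202/data/query",
]
print(unique_account_count(sample))  # 2
```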

Here are the results:

With the ability to apply these captures to different segments of the URL, it becomes much easier to discover interesting data points. For instance, you can drill down even further and see how many unique accounts are using each of the endpoints. You just need to facet on another capture, which extracts the path following the account. Here’s the query:

FROM Transaction SELECT uniqueCount(capture(request_url, r'.*/accounts/(?P<account>\d+).*')) WHERE request_url LIKE '%data%' FACET capture(request_url, r'.*/accounts/\d+/(?P<path>.*)')

This example shows how you can use the capture function in both the SELECT and FACET clauses of the same query. With this facet added to your query, you get more nuanced results:
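Combining two captures, one for the value being counted and one for the grouping key, can be sketched locally as well. As before, this Python is an approximation under assumptions: the sample URLs are invented, and a dictionary of sets stands in for uniqueCount with a FACET.

```python
import re
from collections import defaultdict

ACCOUNT_RE = re.compile(r".*/accounts/(?P<account>\d+).*")
PATH_RE = re.compile(r".*/accounts/\d+/(?P<path>.*)")


def unique_accounts_by_path(urls):
    """Map each path after the account ID to its number of distinct accounts."""
    accounts_by_path = defaultdict(set)
    for url in urls:
        if "data" not in url:  # rough equivalent of WHERE request_url LIKE '%data%'
            continue
        account = ACCOUNT_RE.fullmatch(url)
        path = PATH_RE.fullmatch(url)
        if account and path:
            accounts_by_path[path.group("path")].add(account.group("account"))
    return {path: len(accounts) for path, accounts in accounts_by_path.items()}


# Hypothetical sample data.
sample = [
    "/api/v1/accounts/101/data/query",
    "/api/v1/accounts/202/data/query",
    "/api/v1/accounts/101/data/query",   # repeat request from account 101
    "/api/v1/accounts/303/data/export",
]
print(unique_accounts_by_path(sample))
```

In this sample, data/query is used by two distinct accounts even though it receives three requests.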

You can also discover which accounts are having the worst performance with these endpoints. This query looks at all accounts where the average request duration for the specified request URLs is in the 99.9th percentile. These are extreme outliers where performance is lagging.

Here’s what the query returns:

Thankfully, most of these endpoints are performing well! However, 507990 is an outlier, so it might be worth investigating.

Regular expressions are an extremely powerful tool for finding patterns in strings—and with regex capture, you can now leverage that power to improve your NRQL queries. Try out this new feature and discover new insights on your data.

Next steps

Learn more about using regex capture with New Relic One in our docs. If you want to learn more about regex syntax, check out the RE2 syntax documentation.

The views expressed in this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. Please join us exclusively at the Explorers Hub (discuss.newrelic.com) for questions and support related to this blog post. This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve, or endorse the information, views, or products available on such sites.