In my experience as an Observability Architect, guiding teams to adopt best practices, one of the most common questions I get is 'What exactly is good telemetry?' And honestly, it's not always easy to answer.

We all know telemetry data is crucial for understanding how our applications are performing in real time, but defining what 'good' looks like can be tricky. This is because 'good' can be defined at multiple levels: how scalable data production is within your applications, how well your telemetry describes your system and business logic, and how reliably you can transport that data to your observability platform. 'Good' also depends greatly on the specific system, team, and organization using it. What works for a small startup might not work for a large enterprise, and a system focused on real-time data processing will have different needs than one that handles batch jobs.

However, I strongly believe that if you don’t measure something, you cannot improve it, and this principle holds true for observability. After all, observability isn't an action, like monitoring; observability is an inherent quality of a system, like security or reliability. If we want to be serious about improving how we observe our systems, we need to establish agreed-upon standard measurements that tell us if we’re heading in the right direction.

This belief is why I'm excited to announce a new project in the observability space: Instrumentation Score. This open standard aims to measure how well our applications are set up for observability. It's a team effort, initially including engineers from New Relic, Ollygarden, Splunk, and Dash0, among others, and I'm super keen to help lead its initial development.

The right tool for the job

Let's dive in and talk about why this matters. You’ve often heard that you need multiple signals – traces, metrics, logs/events, and profiles – to get a complete view of your system. OpenTelemetry supports you in generating those in a standard, vendor-neutral way, whether you are an end-user or an open-source library owner – something we covered recently at KubeCon + CloudNativeCon, in OpenTelemetry in 5 Minutes. But how do you know when to use each of those signals? And how do you know if you’re doing it for its intended purpose?

To illustrate this, let’s go through a thought experiment, shown in the image below. Let’s assume someone visits your website and has a poor experience at the checkout. You see this reflected as an increase in the number of ‘checkout failed’ events emitted from your users' browsers. These may be discrete events, but you can use a common "session.id" attribute to correlate them to the distributed traces generated during the same user session. These traces allow you to follow individual requests through the system, all the way down to your backend services, each represented below with a different colour. This gives you a deeper understanding of how and where things are failing, lets you identify anomalies across all requests, and ultimately helps you find the root cause of those errors.
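To make that correlation step a bit more concrete, here is a minimal sketch in plain Python. The event and span records, the trace IDs, and the helper names (`traces_for_event`, `failing_services`) are all made up for illustration; in practice this join would be a query in your observability platform rather than code you write yourself.

```python
from collections import Counter, defaultdict

# Hypothetical, simplified telemetry records sharing a "session.id" attribute.
events = [
    {"name": "checkout failed", "session.id": "s-1"},
    {"name": "checkout failed", "session.id": "s-3"},
    {"name": "page view", "session.id": "s-2"},
]
spans = [
    {"trace_id": "t-100", "session.id": "s-1", "service": "frontend", "error": False},
    {"trace_id": "t-100", "session.id": "s-1", "service": "payments", "error": True},
    {"trace_id": "t-200", "session.id": "s-2", "service": "frontend", "error": False},
    {"trace_id": "t-300", "session.id": "s-3", "service": "payments", "error": True},
]


def traces_for_event(event_name, events, spans):
    """Map each session that emitted `event_name` to the trace IDs seen in it."""
    sessions = {e["session.id"] for e in events if e["name"] == event_name}
    by_session = defaultdict(set)
    for span in spans:
        if span["session.id"] in sessions:
            by_session[span["session.id"]].add(span["trace_id"])
    return {sid: sorted(ids) for sid, ids in by_session.items()}


def failing_services(trace_ids, spans):
    """Count error spans per service within the given traces."""
    return Counter(
        s["service"] for s in spans if s["trace_id"] in trace_ids and s["error"]
    )


by_session = traces_for_event("checkout failed", events, spans)
trace_ids = {tid for ids in by_session.values() for tid in ids}
suspects = failing_services(trace_ids, spans)
```

Starting from the browser events, the shared attribute leads to the traces of the affected sessions, and from there to the backend service where the errors concentrate.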

At the same time, a team operating one of those backend services may have been alerted to a potentially related regression. This time, instead of starting from the end-user perspective, this team starts from a high-level, standard metric showing them the number of "5xx" responses in their service. Thanks to OpenTelemetry concepts like “exemplars”, they can find individual traces that were recorded at the same time those error metrics were produced. Using this context, they can swiftly understand what the impact was on their end-users, and also identify the problematic dependency that’s making their service fail. Everything is part of the same holistic description of the system, and every engineer involved in troubleshooting it understands it.

The same trace context can also be used to decorate logs that were generated as part of individual transactions, or profiles that allow us to dig deeper into issues that may only be identified by looking at the call stack within a given replica. Different levels of granularity, yet again the same holistic context.
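As a rough illustration of decorating logs with trace context, the sketch below uses only the Python standard library. The `current_trace_id` context variable stands in for the active span context that an OpenTelemetry SDK would normally manage for you, and the logger name and log format are my own assumptions.

```python
import contextvars
import io
import logging

# Stand-in for the active trace context (normally provided by a tracing SDK).
current_trace_id = contextvars.ContextVar("current_trace_id", default="-")


class TraceContextFilter(logging.Filter):
    """Attach the current trace ID to every log record that passes through."""

    def filter(self, record):
        record.trace_id = current_trace_id.get()
        return True


def build_logger(stream):
    """Create a logger whose output lines carry the trace ID."""
    logger = logging.getLogger("checkout-sketch")  # hypothetical logger name
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(
        logging.Formatter("%(levelname)s trace_id=%(trace_id)s %(message)s")
    )
    handler.addFilter(TraceContextFilter())
    logger.handlers = [handler]
    return logger


buffer = io.StringIO()
log = build_logger(buffer)

token = current_trace_id.set("t-100")  # entering a traced request
log.info("payment declined")
current_trace_id.reset(token)          # leaving the traced request
log.info("idle")
```

Every log line written while a request is being handled carries that request's trace ID, so it can later be looked up alongside the spans of the same transaction.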

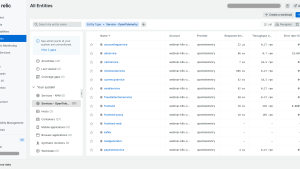

Finally, using standard Semantic Conventions across all these signals allows observability platforms, like New Relic, to understand relationships and causality between different measurements. For example, it’s possible that all these failed requests were handled by the same Kubernetes pod, which may point to memory contention in a particular replica as a potential root cause.

I’m not an expert in AI, but I know one thing: the better the context, the better the insights. If all these signals share the same context, and the same set of semantic conventions, an observability platform that can correlate all of them with a single query allows us to answer questions like “What was the memory utilisation in the replicas of my service that handled requests for these failed checkouts?”. Or, even better, not having to ask the question in the first place and letting our new AI overlords take care of it!

Ultimately, the goal of observability is to understand what makes our users happy and our systems efficient in providing a good experience for them, and for that we need quality data. Data that describes the system in a way that allows us to answer questions with evidence, not intuition, relying on correlation and not on the fact that what happened before may happen again.

Not all data is created equal

But here's the thing: not all data is created equal. Observability data has different requirements than data used for auditing or other kinds of analysis. For instance, we may value freshness over completeness. We also know that the majority of transactions going through our systems are really not that interesting. They complete successfully in a normal amount of time. So, do we need to transport and store debug-level information for all of them just in case something fails? In most cases, we don’t. We need to focus on collecting the right data for troubleshooting and performance monitoring, and no more. Our planet will love you for it.

Even within telemetry itself, each signal inherently has different levels of reliability, making them suitable for different purposes. For instance, metric streams produce predictable, highly reliable signals. A metric that aggregates the number of requests per endpoint as a counter will generate roughly the same amount of data whether the service is processing ten or one million requests per second. This allows clients implementing protocols like OTLP to buffer and retry metric exports for longer than they can for traces or logs, which generate a record per observed request. This makes metrics great for long-term trend analysis, and for alerts, as they provide highly aggregated views. However, they’re not great for other cases like debugging or understanding anomalies, as they inherently lack context.
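A small simulation shows why the export size of a counter stays flat. This is only a sketch: the endpoint list and the `simulate_interval` helper are hypothetical, and requests are spread round-robin across endpoints to keep the result deterministic.

```python
from collections import Counter

ENDPOINTS = ["/cart", "/checkout", "/search"]  # hypothetical endpoints


def simulate_interval(request_count):
    """Return (metric data points, per-request records) for one export interval."""
    requests_per_endpoint = Counter()
    per_request_records = 0
    for i in range(request_count):
        endpoint = ENDPOINTS[i % len(ENDPOINTS)]
        requests_per_endpoint[endpoint] += 1  # aggregated in memory
        per_request_records += 1              # one span or log record per request
    # The counter exports one data point per attribute combination (here, per endpoint).
    return len(requests_per_endpoint), per_request_records


quiet = simulate_interval(10)
busy = simulate_interval(1_000_000)
```

In both cases the counter exports three data points, one per endpoint, while the number of per-request records grows from ten to one million. That bounded size is what makes buffering and retrying metric exports affordable.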

Tracing, on the other hand, with its high granularity, is great to deeply understand the system and the interdependencies within it. It’s vital to truly understand a distributed system. However, due to its larger data volumes, buffering, retrying, and traffic egress can become prohibitively expensive if one were to implement the same level of reliability as metrics. Luckily, context helps here too, allowing us to use smart sampling techniques to store only what matters, and then correlate to other signals.
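To give a flavour of what such a sampling decision might look like, here is a simplified sketch. It assumes the decision is made once the outcome of the whole trace is known (a tail-based approach), and the threshold, ratio, and `keep_trace` function name are illustrative values of mine, not recommendations.

```python
import hashlib


def keep_trace(trace_id, has_error, duration_ms,
               slow_threshold_ms=1000, baseline_ratio=0.01):
    """Decide whether to store a trace: keep the interesting ones, sample the rest."""
    # Always keep traces that failed or were unusually slow.
    if has_error or duration_ms >= slow_threshold_ms:
        return True
    # For uninteresting traces, derive a stable value from the trace ID, so the
    # same trace always gets the same decision wherever it is evaluated.
    digest = hashlib.sha256(trace_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big")  # 0 <= bucket < 2**64
    return bucket < int(baseline_ratio * 2**64)


decisions = {
    "failed checkout": keep_trace("t-300", has_error=True, duration_ms=120),
    "slow search": keep_trace("t-400", has_error=False, duration_ms=4200),
}
```

Errors and slow requests are always kept, while only a small, consistent fraction of the fast and successful ones is stored as a baseline for comparison.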

This is where Instrumentation Score comes in: it tells us more about how all these signals fit together, and measures how well the resulting telemetry describes our system in a holistic way. It's a way to measure how well our telemetry meets those specific observability needs, and whether it does so effectively and efficiently, avoiding large bills or operational surprises.

The road to observability maturity

Now, I know what you're thinking: 'Isn't that what OpenTelemetry Semantic Conventions are for?' And you're right! Semantic Conventions define what good telemetry looks like from the producer's perspective. OpenTelemetry tools like Weaver allow us to measure compliance with those schemas, and even extend them for our own use cases. However, Instrumentation Score takes it a step further, or rather wider. It's a higher-level view that considers telemetry quality at the point of use. We're not just looking at how well the telemetry is produced at source, but how well it actually helps us understand and improve our systems within a specific environment, composed of the applications observed and the observability platform in use.

Finally, let's talk about observability maturity. We mentioned that observability is an inherent quality of an observed system, which empowers us to drive specific business outcomes. However, one does not go from zero to hero overnight, so it’s important to understand the most important actions we can take to incrementally improve our posture. Slow and steady wins the race!

If an observability expert were to give the average team a large list of all actions they can take to improve their observability, they’d probably drown in advice without understanding the impact. They’d close that list, and never open it again. This is where Instrumentation Score, and the scorecards that can be built upon it, are crucial. By providing different levels of impact for each rule, and weighted scores, one can build maturity models, and promote adoption of best practices in an organization, allowing teams to prioritise the most valuable actions to increase the observability for their systems. Also, who doesn’t love a little bit of gamification in their operational excellence?
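Here is a rough sketch of how impact levels and weights could turn into a score and a prioritised to-do list. The impact names, the weights, the rule IDs, and the formula are all assumptions made for illustration; the actual definitions are for the spec to settle.

```python
from dataclasses import dataclass

# Hypothetical impact levels and weights, for illustration only.
IMPACT_WEIGHTS = {"critical": 40, "important": 30, "normal": 20, "low": 10}


@dataclass
class RuleResult:
    rule_id: str   # hypothetical rule identifier
    impact: str    # one of IMPACT_WEIGHTS
    passed: bool


def weighted_score(results):
    """Share of the total rule weight that passed, on a 0 to 100 scale."""
    total = sum(IMPACT_WEIGHTS[r.impact] for r in results)
    if total == 0:
        return 0.0  # nothing was evaluated, so there is nothing to score
    achieved = sum(IMPACT_WEIGHTS[r.impact] for r in results if r.passed)
    return round(100 * achieved / total, 1)


def next_actions(results):
    """Failed rules ordered by impact, most valuable fix first."""
    failed = [r for r in results if not r.passed]
    return sorted(failed, key=lambda r: IMPACT_WEIGHTS[r.impact], reverse=True)


results = [
    RuleResult("RULE-A", "critical", passed=True),
    RuleResult("RULE-B", "important", passed=False),
    RuleResult("RULE-C", "low", passed=False),
    RuleResult("RULE-D", "normal", passed=True),
]
score = weighted_score(results)
todo = [r.rule_id for r in next_actions(results)]
```

Instead of a long, flat list of advice, a team gets a single number to track over time and a short list that starts with the fix that moves the number the most.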

Join us!

So, what do you think? I'd love to hear your thoughts on this concept. Let's start a conversation about how we can make observability more effective for everyone. Instrumentation Score is still in its infancy, as we’re trying to capture some of the most important aspects to measure, and how to measure them, as you can see by the ongoing discussions in https://github.com/instrumentation-score/spec. Join us, and help us drive the future of better observability data!

The views expressed in this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. Please join us exclusively at the Explorers Hub (discuss.newrelic.com) for questions and support related to this blog post. This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve or endorse the information, views or products available on such sites.