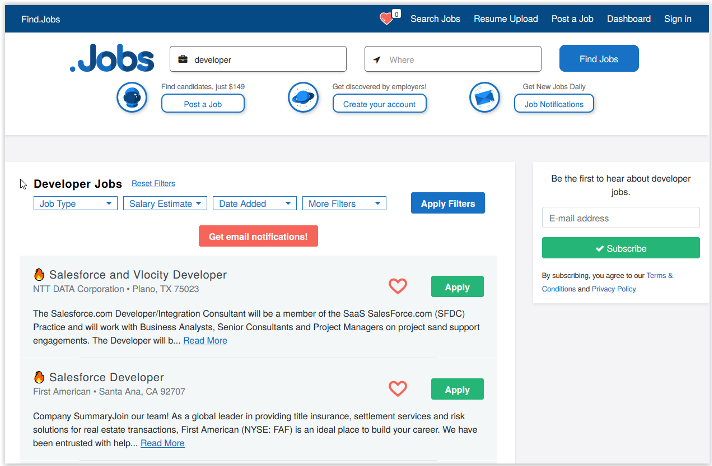

If you’re looking for a job, chances are good that you’ve landed on one of 30,000 different job boards owned and operated by Find.Jobs, a top-level domain and publisher serving job seekers and hiring companies.

We operate in every state and geography, helping people (including 300 million job seekers in 150 countries) find the right positions. Because our business model depends on tying search results to the appropriate domain (such as California.jobs), it’s critical that we engineer our sites so Google’s web crawler can process them quickly and navigate them thoroughly enough to index all of the content.

Before we deployed New Relic, Google’s web crawlers weren’t finding jobs on any page of a site beyond the first. We’d had no idea this was happening since our founding, and it probably cost us hundreds of thousands of dollars in lost revenue.

Keeping 30,000 domains performing well

Ours is a unique space in that we must make sure our websites are performing well for both end users and crawler bots that hit our sites and index what they find. The problem is that Google's Crawl Stats are not helpful for us at the scale at which we operate.

Beyond the IP address, we had no way to tell whether a visitor was a bot or a human, what that visitor was doing on the site, or how the site was responding. We couldn’t see which pages bots were hitting or when bot traffic arrived. We had to guesstimate, because we couldn’t catalog, classify, or facet the data in an understandable way.

We had been using other monitoring products for logging and performance tracking. After one developer turned on logging for a new Elasticsearch query type, we were hit with unexpectedly large bills of $20,000 to $40,000 per month for log data. We reached the point of narrowing down what we logged, and for a data-driven organization, saving money on volume by logging less is a dangerous place to be.

Logging everything without breaking the bank

Around this time, New Relic released its logs capability. As longtime users of New Relic APM 360, we wanted a way to log everything without enormous and unpredictable costs. We were also looking for a way to improve testing of our domains with synthetic traffic. New Relic met those needs, along with use cases across the whole company.

Logging in New Relic immediately gave us capabilities we didn’t have before and now can’t live without. From a logging perspective, the ability to parse records into fields so the data is queryable and facetable is incredibly important. Being able to click a button while looking at logs and turn them into a dashboard chart is indispensable and saves enormous amounts of time. The logging capabilities in New Relic help us be as proactive as possible about application health.
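To illustrate what parsing records into fields means in practice, here is a minimal Python sketch. It is not New Relic’s parser: it assumes access logs in the common Apache/Nginx "combined" format, and the regex, the `parse_line` and `facet` helpers, and the sample lines are all hypothetical. Once a raw line becomes a dictionary of named fields, counting by any field (the idea behind faceting) is a one-liner.

```python
import re
from collections import Counter
from typing import Dict, Iterable, Optional

# Hypothetical pattern for the Apache/Nginx "combined" access-log format.
# This is an illustration, not New Relic's parsing rule set.
COMBINED_LOG = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<bytes>\S+) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


def parse_line(line: str) -> Optional[Dict[str, object]]:
    """Turn one raw log line into named fields, or return None if it doesn't match."""
    match = COMBINED_LOG.match(line)
    if match is None:
        return None
    record: Dict[str, object] = dict(match.groupdict())
    record["status"] = int(match.group("status"))  # numeric, so it can be compared
    return record


def facet(records: Iterable[Optional[Dict[str, object]]], field: str) -> Counter:
    """Count parsed records by the value of one field, skipping unparsed lines."""
    return Counter(r[field] for r in records if r is not None)


# Usage with two made-up log lines.
sample = [
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /jobs?page=1 HTTP/1.1" 200 5120 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Oct/2023:13:55:40 +0000] "GET /jobs/123 HTTP/1.1" 404 312 '
    '"-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
records = [parse_line(line) for line in sample]
print(facet(records, "status"))
```

Returning `None` for lines that don't match keeps malformed entries from crashing a report; a real pipeline would probably count them separately rather than drop them silently.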

Discovering the crawlability issue

Using New Relic, we built reporting on Googlebot traffic to understand Google’s impact on our sites, which wasn’t possible before. With logs in New Relic, we can facet the bots hitting us along every dimension and see what each one is doing.
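A minimal Python sketch of separating bot traffic from human traffic and faceting it, assuming each parsed log record is a dictionary with `user_agent` and `path` keys. The `KNOWN_BOTS` table and the helper names are hypothetical. User-agent strings are self-reported and easy to spoof, so before trusting a "Googlebot" label, Google documents verifying the requesting IP with a reverse DNS lookup followed by a forward lookup.

```python
from collections import Counter
from typing import Dict, Iterable

# Hypothetical lookup of user-agent substrings to bot names (illustrative only).
KNOWN_BOTS: Dict[str, str] = {
    "googlebot": "Googlebot",
    "bingbot": "Bingbot",
    "ahrefsbot": "AhrefsBot",
    "semrushbot": "SemrushBot",
}


def classify_agent(user_agent: str) -> str:
    """Return a known bot name, 'other_bot' for self-identified crawlers, or 'human'."""
    ua = user_agent.lower()
    for token, name in KNOWN_BOTS.items():
        if token in ua:
            return name
    # Rough heuristic: many crawlers include one of these words in their user agent.
    if any(word in ua for word in ("bot", "crawler", "spider")):
        return "other_bot"
    return "human"


def facet_bots_by_path(records: Iterable[Dict[str, str]]) -> Counter:
    """Count bot hits per (bot name, path), ignoring human traffic."""
    counts: Counter = Counter()
    for record in records:
        kind = classify_agent(record.get("user_agent", ""))
        if kind != "human":
            counts[(kind, record["path"])] += 1
    return counts


records = [
    {"user_agent": "Mozilla/5.0 (compatible; Googlebot/2.1)", "path": "/jobs"},
    {"user_agent": "Mozilla/5.0 (compatible; YandexBot/3.0)", "path": "/jobs"},
    {"user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)", "path": "/jobs"},
]
print(facet_bots_by_path(records))
```

The substring heuristic is deliberately coarse; swapping the `(kind, path)` key for `(kind, hour)` would give the timing view of bot traffic instead.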

We immediately saw that Googlebot was hitting the first page of a site and then not continuing to the subsequent pages of jobs. We stopped all other development to focus on fixing the issue, and the fix helped us generate 20% more revenue in the first month afterward than in any previous month.
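A first-page-only crawl pattern becomes obvious once crawler hits are grouped by page number. The Python sketch below assumes, hypothetically, that job listings paginate with a `?page=N` query parameter and that the input paths come from requests already classified as Googlebot; the URL scheme and helper names are not from the source. A deep-crawl ratio near zero means the crawler is rarely getting past page 1.

```python
from collections import Counter
from typing import Iterable
from urllib.parse import parse_qs, urlsplit


def page_number(path: str) -> int:
    """Read the hypothetical ?page=N parameter; missing or invalid values count as page 1."""
    values = parse_qs(urlsplit(path).query).get("page", ["1"])
    try:
        return max(1, int(values[0]))
    except ValueError:
        return 1


def crawl_depth(paths: Iterable[str]) -> Counter:
    """Count crawler hits per page number of a paginated job listing."""
    return Counter(page_number(p) for p in paths)


def deep_crawl_ratio(depth: Counter) -> float:
    """Fraction of hits that reached page 2 or later (0.0 when there are no hits)."""
    total = sum(depth.values())
    if total == 0:
        return 0.0
    beyond_first = sum(count for page, count in depth.items() if page > 1)
    return beyond_first / total


googlebot_paths = ["/jobs", "/jobs?page=1", "/california/jobs", "/jobs?page=2"]
depth = crawl_depth(googlebot_paths)
print(depth, deep_crawl_ratio(depth))
```

Tracking this ratio over time (for example, per day) would show whether a pagination fix actually changed crawler behavior.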

Saving infrastructure costs

Google’s crawlers aren’t the only bots that regularly generate traffic on our sites. Using New Relic, we realized that many other bots were generating large amounts of traffic, which drove up our cloud infrastructure costs. These are all bots that provide information about the health of websites, so blocking them was a no-brainer, and doing so let us reduce the number of servers we need to serve all our other requests by 30%.
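The post doesn’t say how the bots were blocked. One common option, sketched here in Python as WSGI middleware, answers blocklisted user agents with `403 Forbidden` before the application does any work. The `BLOCKED_AGENT_TOKENS` values, the `BlockBotsMiddleware` class, and the stand-in `jobs_app` are hypothetical examples, not the list or setup Find.Jobs used.

```python
from typing import Callable, Iterable, List, Tuple
from wsgiref.util import setup_testing_defaults

# Hypothetical blocklist of user-agent substrings (illustrative, not from the source).
BLOCKED_AGENT_TOKENS = ("ahrefsbot", "semrushbot", "mj12bot")


class BlockBotsMiddleware:
    """WSGI middleware that answers 403 to blocklisted user agents before the app runs."""

    def __init__(self, app: Callable, blocked_tokens: Iterable[str] = BLOCKED_AGENT_TOKENS):
        self.app = app
        self.blocked_tokens = tuple(token.lower() for token in blocked_tokens)

    def __call__(self, environ: dict, start_response: Callable) -> Iterable[bytes]:
        user_agent = environ.get("HTTP_USER_AGENT", "").lower()
        if any(token in user_agent for token in self.blocked_tokens):
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [b"Forbidden"]
        return self.app(environ, start_response)


def jobs_app(environ: dict, start_response: Callable) -> List[bytes]:
    """Stand-in for the real application."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"job listings"]


def call(app: Callable, user_agent: str) -> Tuple[str, bytes]:
    """Invoke a WSGI app with a test environ and return (status, body)."""
    environ: dict = {"HTTP_USER_AGENT": user_agent}
    setup_testing_defaults(environ)  # fills in the remaining required WSGI keys
    captured: List[str] = []

    def start_response(status, headers, exc_info=None):
        captured.append(status)  # record the status line for inspection

    body = b"".join(app(environ, start_response))
    return captured[0], body


app = BlockBotsMiddleware(jobs_app)
print(call(app, "Mozilla/5.0 (compatible; AhrefsBot/7.0)"))
print(call(app, "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"))
```

Because this check runs inside the application process, each blocked request still costs a little; blocking at a CDN, load balancer, or web-server layer, or disallowing compliant crawlers in `robots.txt`, keeps that traffic off application servers entirely.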

Sharing data with the rest of the company

As a data-driven company, we rely on data for decision making across every aspect of our business. For us, every piece of data is important, which is why New Relic has a strategic impact on our business, even beyond helping us keep our websites healthy and performing well.

Every person in our company uses New Relic. The marketing team uses it to understand the effect of a change. Salespeople use it to see what types of jobs people click on and which companies are getting clicks, and they start that research inside New Relic.

The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. Please join us exclusively at the Explorers Hub (discuss.newrelic.com) for questions and support related to this blog post. This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve, or endorse the information, views, or products available on such sites.