DORA metrics are four key measurements developed by the DevOps Research and Assessment (DORA) team, now part of Google Cloud, that help evaluate software delivery performance. Based on research spanning more than seven years, these metrics provide a data-driven approach to measuring how effectively your teams deliver software, focusing on both velocity and stability. They’ve become the industry standard for assessing DevOps maturity and identifying pathways to improvement.

Benefits of implementing DORA metrics include:

- Objective measurement of DevOps performance

- Clear identification of improvement areas in your delivery pipeline

- Data-driven decisions for technical investments

- Ability to benchmark against industry standards

What makes DORA metrics particularly valuable is their focus on balancing speed with reliability. By tracking these metrics, you can pinpoint bottlenecks, set realistic goals, and make informed decisions about your development processes. This guide explains the four DORA metrics, how to measure them, and practical strategies for using them to transform your DevOps performance.

Understanding the four key DORA metrics

DORA metrics measure two critical aspects of software delivery: velocity (how quickly you deliver) and stability (how reliable your deliveries are). Each metric serves as an indicator of specific aspects of your development process.

| Metric | Elite | High | Medium | Low |
|---|---|---|---|---|
| Deployment frequency (DF) | Multiple times per day | Between once per day and once per week | Between once per week and once per month | Less than once per month |
| Lead time for changes (LT) | Less than one hour | Between one day and one week | Between one month and six months | More than six months |
| Change failure rate (CFR) | 0-15% | 16-30% | 31-45% | 46-60% |
| Mean time to restore service (MTTR) | Less than one hour | Less than one day | Between one day and one week | More than six months |
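
As a rough illustration of how the deployment frequency row in the table above could be applied, here is a minimal Python sketch. The function name `deployment_frequency_tier` is hypothetical, and the thresholds assume a week is 7 days and a month is 30 days, which the table itself does not specify.

```python
def deployment_frequency_tier(deploy_count: int, window_days: int) -> str:
    """Map a deployment count over a window to a tier from the benchmark table.

    Assumptions (not from the table): 1 week = 7 days, 1 month = 30 days,
    and exactly one deployment per day counts as High rather than Elite.
    """
    if window_days <= 0:
        raise ValueError("window_days must be positive")
    if deploy_count < 0:
        raise ValueError("deploy_count cannot be negative")

    per_day = deploy_count / window_days
    if per_day > 1:            # multiple times per day
        return "Elite"
    if per_day >= 1 / 7:       # between once per day and once per week
        return "High"
    if per_day >= 1 / 30:      # between once per week and once per month
        return "Medium"
    return "Low"               # less than once per month
```

For example, 90 deployments in a 30-day window averages three per day, which this sketch would label Elite, while 2 deployments in the same window would land in Medium.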

Beyond these four key metrics, the notion of reliability has emerged as an additional measurement to help balance speed with stability.

Deployment frequency

Deployment frequency measures how often your team successfully deploys code to production. Elite DevOps teams deploy multiple times per day through mature CI/CD pipelines. These teams implement fast feedback loops in which automated tests provide immediate validation, allowing developers to quickly identify and fix issues before they reach production.

To increase your deployment frequency:

- Implement automated CI/CD pipelines

- Break down large changes into smaller increments

- Adopt trunk-based development

- Automate testing to build deployment confidence

Lead time for changes

Lead time for changes measures how long it takes for code to go from commit to running in production. This metric reflects your team’s ability to respond to business needs quickly. Elite teams can achieve a lead time of less than one hour.

Common bottlenecks include manual approval processes, infrequent code integration, and lack of test automation. To improve lead time, focus on eliminating wait states in your pipeline. Automate where possible, reduce code review cycles with smaller changes, and ensure your testing strategy doesn’t create unnecessary delays.

Change failure rate

Change failure rate measures the percentage of deployments that result in degraded service or require remediation. This metric helps teams understand the balance between moving quickly and maintaining stability. Elite performers maintain a rate of 15% or lower.

A high change failure rate signals problems with code quality or insufficient testing. To reduce your change failure rate, implement robust automated testing and use feature flags to control rollouts and quickly disable problematic changes without full rollbacks.
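
To make the feature flag idea concrete, here is a minimal in-memory sketch in Python. The `FeatureFlag` class and its fields are hypothetical and not tied to any particular feature-flag product; a real system would typically load flag state from a shared service so it can change without a redeploy.

```python
import hashlib


class FeatureFlag:
    """Hypothetical flag with a percentage rollout and a kill switch."""

    def __init__(self, name: str, rollout_percent: int = 0, enabled: bool = True):
        if not 0 <= rollout_percent <= 100:
            raise ValueError("rollout_percent must be between 0 and 100")
        self.name = name
        self.rollout_percent = rollout_percent
        self.enabled = enabled

    def _bucket(self, user_id: str) -> int:
        # Hash the flag name and user ID so each user gets a stable bucket 0-99.
        digest = hashlib.sha256(f"{self.name}:{user_id}".encode()).hexdigest()
        return int(digest, 16) % 100

    def is_on(self, user_id: str) -> bool:
        # The kill switch takes priority over the rollout percentage.
        if not self.enabled:
            return False
        return self._bucket(user_id) < self.rollout_percent
```

Raising `rollout_percent` gradually exposes the change to more users, and setting `enabled = False` turns it off for everyone without rolling back the deployment.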

Time to restore service

Time to restore service measures how quickly your team can recover from incidents or failures. Elite teams can restore service in less than an hour. Modern monitoring tools can help minimize downtime by providing real-time alerts and detailed diagnostics when issues occur. With automated rollbacks, teams can quickly revert to the previous stable version without complex manual processes.

To improve your time to restore service:

- Implement comprehensive monitoring and alerting

- Create detailed incident response playbooks

- Use automated rollback capabilities

- Invest in observability tools for system insights

Reliability, the emerging key metric

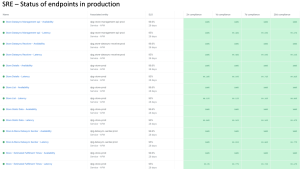

Beyond the four key DORA metrics, reliability has emerged as an additional complementary metric. It evaluates how well your system meets its service level objectives (SLOs) and maintains availability for users, encompassing factors like uptime, error rates, and performance. While the “traditional” DORA metrics focus on software delivery processes, reliability directly measures the outcome that matters most to your users: consistent system performance. This metric helps validate whether faster deployments and quicker recovery times are actually translating to better service quality.

To improve reliability, implement SRE practices like error budgets, SLOs, and automated remediation, which can help you to balance velocity with stability in your deployment strategies. By tracking reliability alongside other DORA metrics, organizations gain a more complete picture of their DevOps performance—not just how quickly they can ship code, but how effectively that code meets users’ needs.
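
As a worked example of the error budget idea, the sketch below converts an availability SLO into allowed downtime. The function names are hypothetical, and it assumes the SLO is expressed as a fraction of time the service is available over a fixed window. Under that assumption, a 99.9% SLO over 30 days leaves roughly 43.2 minutes of budget.

```python
def error_budget_minutes(slo_target: float, window_days: int) -> float:
    """Allowed downtime in minutes for an availability SLO over a window.

    slo_target is a fraction, for example 0.999 for 99.9% availability.
    """
    if not 0 < slo_target < 1:
        raise ValueError("slo_target must be between 0 and 1 (exclusive)")
    if window_days <= 0:
        raise ValueError("window_days must be positive")
    total_minutes = window_days * 24 * 60
    return total_minutes * (1 - slo_target)


def budget_remaining(slo_target: float, window_days: int,
                     downtime_minutes: float) -> float:
    """Fraction of the error budget still unspent (negative if overspent)."""
    budget = error_budget_minutes(slo_target, window_days)
    return (budget - downtime_minutes) / budget
```

A team might slow down risky releases when the remaining fraction approaches zero and ship more freely when most of the budget is intact.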

Why are DORA metrics important?

DORA metrics provide a data-driven framework for measuring and improving software delivery performance. By tracking these metrics, organizations can move beyond subjective opinions about process efficiency and instead rely on concrete measurements to guide improvement efforts. Their importance extends beyond measurement: they also change how teams collaborate. By creating shared visibility into the software delivery process, these metrics break down silos between development and operations teams.

Onefootball’s journey illustrates how observability platforms like New Relic can contribute to improving DORA metrics, resulting in a more resilient service that can withstand massive traffic spikes during sports events. Before New Relic, Onefootball’s engineering team struggled with limited visibility into application performance and often compensated by over-scaling Amazon Web Services resources. After migrating to Kubernetes and monitoring that environment properly, the team reported an 80% reduction in incidents and reclaimed 40% of the developer time previously spent troubleshooting.

How to measure DORA metrics

Tracking DORA metrics effectively requires integration across your DevOps toolchain. It’s preferable to collect this data automatically rather than through manual processes, which can be time-consuming and less accurate.

Here’s how to calculate each metric:

- Deployment frequency – Using your CI/CD and deployment infrastructure tools, count the number of deployment events over a specific period of time.

- Lead time for changes – Measure the time between code commit and successful production deployment. Track when commits occur and when those specific commits are deployed to production.

- Change failure rate – Track the percentage of deployments that cause service degradation: divide the number of deployments that led to incidents by the total number of deployments, then multiply by 100.

- Time to restore service – Capture a timestamp when an incident begins and when it’s resolved; this is your restore time for a given incident. Average these times across all incidents to get the mean time to restore service.
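
The four calculations above can be sketched in Python as follows. This is a minimal illustration, not a reference implementation: the `Deployment` and `Incident` record shapes are hypothetical, each deployment is assumed to carry a single commit timestamp, and lead time is averaged with a simple mean (a median is another reasonable choice).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Sequence


@dataclass
class Deployment:
    commit_time: datetime    # when the change was committed
    deploy_time: datetime    # when it was successfully deployed to production
    caused_incident: bool    # whether the deployment degraded service


@dataclass
class Incident:
    started: datetime
    resolved: datetime


def _require_non_empty(items: Sequence, label: str) -> None:
    if not items:
        raise ValueError(f"need at least one {label}")


def deployment_frequency(deployments: Sequence[Deployment], period_days: int) -> float:
    """Deployments per day over the observed period."""
    if period_days <= 0:
        raise ValueError("period_days must be positive")
    return len(deployments) / period_days


def mean_lead_time(deployments: Sequence[Deployment]) -> timedelta:
    """Average time from commit to production deployment."""
    _require_non_empty(deployments, "deployment")
    total = sum((d.deploy_time - d.commit_time for d in deployments), timedelta())
    return total / len(deployments)


def change_failure_rate(deployments: Sequence[Deployment]) -> float:
    """Percentage of deployments that caused an incident."""
    _require_non_empty(deployments, "deployment")
    failed = sum(1 for d in deployments if d.caused_incident)
    return 100 * failed / len(deployments)


def mean_time_to_restore(incidents: Sequence[Incident]) -> timedelta:
    """Average time from incident start to resolution."""
    _require_non_empty(incidents, "incident")
    total = sum((i.resolved - i.started for i in incidents), timedelta())
    return total / len(incidents)
```

In practice the records would come from your CI/CD, version control, and incident management systems. The functions only assume that each record carries the timestamps described in the list above.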

New Relic’s observability platform simplifies this data collection and analysis by connecting to your version control, CI/CD, and incident management systems to provide a comprehensive view of your delivery performance.

Common challenges in implementing DORA metrics

Implementing DORA metrics brings significant benefits, but organizations may encounter several hurdles along the way. Many teams struggle with the complexity of data collection, as these metrics require information from multiple systems that operate more or less independently of one another. This fragmentation can lead to inconsistent data and misinterpretation of results. Additionally, teams may face cultural resistance when introducing new measurements, as engineers might worry the metrics will be used to evaluate individual performance rather than improve collective processes.

Another common challenge is defining what, exactly, constitutes a “deployment” or a “failure” across different teams and systems. Without standardized definitions, comparisons become meaningless and can lead to misleading conclusions. Organizations with legacy systems or limited tooling may also find it difficult to gather the necessary data automatically, resulting in manual processes that can be time-consuming and error-prone.

Here are some common misconceptions about DORA metrics, and how to proactively address them:

- DORA metrics do not require perfect data – It’s perfectly fine to start with approximations and refine your data collection over time.

- DORA metrics are not intended for evaluating individuals – The purpose is to assess how teams are performing as a whole; the goal is to improve processes, not punish individuals.

- Implementing tracking does not require expensive tooling – You can start with manual tracking before investing in automation.

- There is no one result that’s ideal for all teams – An Elite score in any given category may not be necessary or feasible depending on how your team operates; what’s important is to discover the areas where you can make meaningful improvements.

- Improving metrics does not always improve outcomes – Speed of deployment for its own sake is of little use to the end user if you’re not shipping anything that improves their experience. Don’t lose sight of why you’re evaluating these metrics in the first place.

FAQs on DORA metrics

What are the flow metrics for DORA?

Flow metrics complement DORA metrics by measuring how work moves through your development pipeline. They include flow velocity, flow time, flow efficiency, and flow load, which can help to identify bottlenecks in your processes.

What are DORA metric KPIs?

DORA metrics themselves can serve as key performance indicators for DevOps teams. Organizations set targets based on current performance and industry benchmarks with the aim of improving over time.

What is DORA metrics benchmarking?

DORA metrics benchmark a DevOps team’s performance against industry standards categorized as Elite, High, Medium, or Low, helping to identify specific and measurable areas for improvement.

How often should teams review their DORA metrics?

Teams should review DORA metrics at least monthly to identify trends and improvement opportunities. Elite performers often examine these metrics weekly as part of their continuous improvement process.

How does New Relic help track DORA metrics?

New Relic’s observability platform captures and analyzes data across your entire DevOps toolchain. It tracks deployment events, correlates code changes with performance impacts, identifies incidents, and measures recovery time.

To take your DevOps performance to the next level, learn how to automate, measure, and track DORA metrics across multiple teams using New Relic Scorecards.

Next steps

DORA metrics provide a solid framework for evaluating and improving DevOps performance. High-performing teams use these metrics as guides for continuous improvement rather than targets to game. The goal isn’t just to improve the metrics themselves, but to meaningfully enhance your ability to deliver value to your users quickly and reliably. With proper tracking of DORA metrics, your organization can work toward tangible benefits: faster releases, fewer production issues, quicker recovery times, and ultimately, more satisfied customers. To learn more about how elite DevOps teams operate, explore our resources on CI/CD optimization, infrastructure monitoring, and incident response.

With New Relic’s observability platform, you gain full-stack visibility across your entire development and delivery pipeline. It collects data from your CI/CD systems, tracks code changes through your version control system, and monitors application performance in real-time to automatically detect and analyze issues. Taken together, these capabilities provide accurate measurements for all four DORA metrics in unified dashboards that highlight trends and improvement opportunities. See how New Relic’s observability platform can help you track and optimize DORA metrics to reach elite status.

The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. Please join us exclusively at the Explorers Hub (discuss.newrelic.com) for questions and support related to this blog post. This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve, or endorse the information, views, or products available on such sites.