When we first rolled out Dynamic Baseline Alerts late last year, we used a single algorithm that covered a lot of bases and worked well in a wide variety of situations.

Since then, we’ve been talking with customers and doing even more math to find additional ways to make Dynamic Baseline Alerts even better. Of course, we weren’t interested in doing the math for its own sake. Our dedicated applied intelligence engineering team focused on finding the methods that solve our customers’ real-world problems—all at the massive scale of data that New Relic deals with every day. That means monitoring more than a billion events and metrics per minute for more than 15,000 customers.

As usual, our goal is to do as much of the work for you as possible, so you can focus on your systems and your customers. Some solutions still make you select the seasonality for a metric, or pick a particular algorithm to suit a particular metric. With our latest improvements we now do all of that for you.

Automatically discovered seasonality

Seasonality is the periodic pattern underlying a time series. Our first version of baselines used a seasonality system that could identify patterns related to the day of the week, hour of the day, and minute of the hour. This let us find typical usage patterns that vary by day and hour (such as people using a website during working hours Monday through Friday) and also cyclical patterns (such as a database aggregation job that runs at the top of every hour). That covered a lot of bases!

But many other types of seasonality are also possible, and we wanted to support those as well. Enter auto-discovered seasonality. New Relic’s applied intelligence engineering team used a technique common in signal processing called the fast Fourier transform (FFT), which can identify the underlying frequencies in a time series. Our systems use FFTs to sniff out good candidates for seasonality, typically cycles that don’t line up with the time of day (such as something that happens every 3 hours). They then evaluate each candidate against the historical metric data to see if it works better than the default seasonality.
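To make the idea concrete, here is a minimal sketch of FFT-based period detection. It is not our production code: the function names, the textbook radix-2 FFT, and the power-of-two length requirement are all assumptions made for illustration.

```python
import cmath
import math


def fft(values):
    """Recursive radix-2 Cooley-Tukey FFT; len(values) must be a power of two."""
    n = len(values)
    if n == 1:
        return [complex(values[0])]
    if n % 2:
        raise ValueError("length must be a power of two")
    even = fft(values[0::2])
    odd = fft(values[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def candidate_period(series):
    """Return the period, in samples, of the strongest non-constant frequency.

    Hypothetical helper: a real system would likely return several
    candidates and validate each one against historical data.
    """
    n = len(series)
    mean = sum(series) / n
    # Subtract the mean so the constant (zero-frequency) term does not dominate.
    spectrum = fft([x - mean for x in series])
    # For real-valued input only bins 1..n/2 carry independent information.
    best_bin = max(range(1, n // 2 + 1), key=lambda k: abs(spectrum[k]))
    return n / best_bin
```

For a clean synthetic signal that repeats every 16 samples, the strongest bin maps back to a period of 16 samples. Real metrics are noisier, which is why a detected period is only a candidate until it has been checked against the historical data.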

Ensemble algorithm chooses the best algorithm—every time

Once we implemented auto-discovered seasonality, we developed a method to find the best fit. Our base algorithm uses three factors: recency, trend, and seasonality of the time series data. However, for some data streams another algorithm may provide a better prediction. With our new unsupervised ensemble system we now select the algorithm that best fits that particular time series.

Every minute, the ensemble selector evaluates the performance of the alternative algorithms and selects the one with the best performance. We weight the evaluation toward recent performance, applying an exponential decay so that older data counts for less. Our evaluator determines best fit using the mean absolute scaled error (MASE). (For more on MASE, see our blog post on How We Find the Best Algorithms for Dynamic Baseline Alerts.)
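The selection step can be sketched roughly as follows. This is an illustration only: the `decay` value, the function names, and the exact way the exponential weights enter the MASE calculation are assumptions, not a description of our evaluator.

```python
def weighted_mase(actual, forecast, decay=0.95):
    """Exponentially weighted MASE; a value below 1 beats the naive forecast.

    The naive forecast simply repeats the previous observation. The decay
    factor (an assumed value) makes older errors count for less.
    """
    n = len(actual)
    if n < 2 or len(forecast) != n:
        raise ValueError("need at least two points and equal-length inputs")
    model_error = naive_error = 0.0
    for t in range(1, n):
        weight = decay ** (n - 1 - t)  # the newest point gets weight 1
        model_error += weight * abs(actual[t] - forecast[t])
        naive_error += weight * abs(actual[t] - actual[t - 1])
    if naive_error == 0:
        return float("inf")  # flat series: the scaled error is undefined
    return model_error / naive_error


def select_best(actual, forecasts):
    """Return the name of the candidate with the lowest weighted MASE.

    `forecasts` maps a hypothetical algorithm name to its one-step-ahead
    predictions for the same timestamps as `actual`.
    """
    return min(forecasts, key=lambda name: weighted_mase(actual, forecasts[name]))
```

Because MASE scales each algorithm's error by the error of the naive forecast, scores are comparable across metrics with very different magnitudes.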

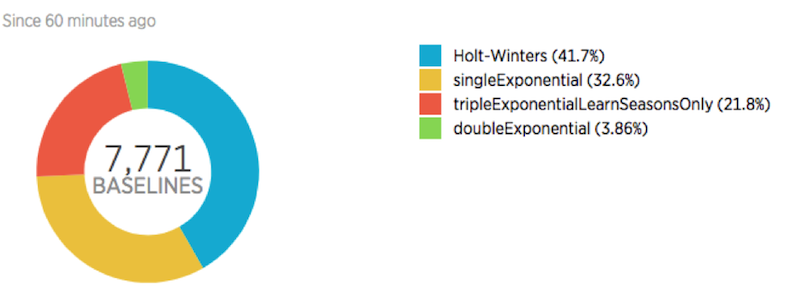

Currently we evaluate four options: triple exponential smoothing with the discovered seasonality, triple exponential smoothing with the default seasonality, double exponential smoothing (recency and trend factors only), and single exponential smoothing (recency only).
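For readers who want to see what the two simplest candidates look like, here is a textbook sketch of single and double exponential smoothing producing one-step-ahead forecasts. The smoothing parameters (`alpha`, `beta`), the initialization, and the function names are assumptions for illustration. The triple (seasonal) variants are omitted for brevity.

```python
def single_exponential_forecasts(series, alpha=0.5):
    """One-step-ahead forecasts from the smoothed level (recency only)."""
    level = series[0]
    forecasts = []
    for x in series:
        forecasts.append(level)  # predict before seeing x
        level = alpha * x + (1 - alpha) * level
    return forecasts


def double_exponential_forecasts(series, alpha=0.5, beta=0.5):
    """One-step-ahead forecasts from level plus trend (Holt's linear method)."""
    if len(series) < 2:
        raise ValueError("need at least two points to estimate a trend")
    level, trend = series[0], series[1] - series[0]
    forecasts = [series[0]]  # no history yet for the first point
    for x in series[1:]:
        forecasts.append(level + trend)  # predict before seeing x
        previous_level = level
        level = alpha * x + (1 - alpha) * (level + trend)
        trend = beta * (level - previous_level) + (1 - beta) * trend
    return forecasts
```

On a steadily rising series, the double version tracks the trend while the single version lags behind it. On a flat, noisy series the extra trend term has nothing useful to learn, which is one reason the simpler model can score better.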

Interestingly, it’s not unusual for a simpler algorithm, like single exponential smoothing, to be a better fit than the “fancier” triple exponential smoothing. This is because for data with no appreciable seasonality, the seasonality factor in triple exponential smoothing can actually amplify noise that is not relevant to the data’s behavior.

For example, when we evaluated a sample of several thousand metric time series, we saw that Holt-Winters (triple exponential smoothing with the default seasonality) was most often the best fit, followed by the much simpler single exponential smoothing. The learned seasons we’d recently implemented came in third.

As a further bonus, with our automatic ensemble selection we can add new algorithms whenever we see an opportunity to further improve accuracy.

The math nerds working on New Relic’s applied intelligence engine are always looking for new ways to improve our systems. Since we’re a pure SaaS company, we love that as soon as we build a solid new improvement, we can ship it so that all our customers get the benefit right away.

Stay tuned for more ways New Relic hopes to use data science and artificial intelligence to help our customers run and manage their systems!

The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. Please join us exclusively at the Explorers Hub ( discuss.newrelic.com ) for questions and support related to this blog post. This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve, or endorse the information, views, or products available on such sites.