Learn how to gain Kubernetes observability by reading the Kubernetes monitoring guide.

What is Kubernetes?

You have probably heard the term Kubernetes in connection with containerization, but what is Kubernetes, and how does it reduce the manual effort those processes involve? Originally developed by Google, Kubernetes is an open source container orchestration platform designed to automate the deployment, scaling, and management of containerized applications.

In fact, Kubernetes has established itself as the standard for container orchestration and is the flagship project of the Cloud Native Computing Foundation (CNCF), backed by major players such as Google, Amazon Web Services (AWS), Microsoft, IBM, Intel, Cisco, and Red Hat.

Although it is commonly associated with the cloud, Kubernetes (sometimes called K8s) runs in a variety of environments, from cloud-based ones to traditional servers and hybrid cloud infrastructure.

Container-based microservices architectures have profoundly changed the way development and operations teams test and deploy modern software. Containers help companies modernize by making it easier to scale and deploy applications, but they have also introduced new challenges and more complexity by creating an entirely new infrastructure ecosystem.

Software companies large and small are deploying thousands of container instances every day, and that is a complexity of scale they have to manage. So how do they do it?

Kubernetes makes it easier to deploy and operate applications in a microservices architecture. It does so by creating an abstraction layer on top of a group of hosts, so that development teams can deploy their applications and let Kubernetes manage the following activities:

- Controlling resource consumption by application or team

- Spreading application load evenly across a host infrastructure

- Automatically load balancing requests across the different instances of an application

- Monitoring resource consumption and resource limits to automatically stop an application from consuming too many resources, and then restarting the application

- Moving an application instance from one host to another if a host runs short of resources or goes down

- Automatically taking advantage of additional resources made available when a new host is added to the cluster

- Easily performing canary deployments and rollbacks

In this article, we take a deeper look at the world of Kubernetes: what it is, why organizations use it, and best practices for using this innovative, time-saving technology to its full potential.

What is Kubernetes used for, and why use it?

You now know what Kubernetes is, but what does Kubernetes actually do? Kubernetes automates many aspects of containerized applications, giving developers a scalable and streamlined way to manage a network of complex applications across clustered servers.

Kubernetes simplifies container operations by distributing traffic for higher availability. Depending on how much traffic your site or server receives, Kubernetes lets the application adapt to changes automatically, directing the right amount of resources to workloads as needed. This helps reduce site downtime caused by traffic spikes or network outages.

Kubernetes also helps with service discovery, allowing clustered applications to communicate with each other without prior knowledge of IP addresses or endpoint configuration. In addition, Kubernetes continuously monitors the health of your containers, automatically detecting and replacing failed containers or restarting stalled ones to keep operations running smoothly.

So, why use Kubernetes?

As more and more organizations move to microservices and cloud native architectures that make use of containers, they look for proven, solid platforms. Practitioners are adopting Kubernetes for four main reasons:

1. Kubernetes helps you move faster. Kubernetes lets you deliver a self-service platform as a service (PaaS) that creates a hardware abstraction layer for development teams. Your development teams can request the resources they need quickly and efficiently. If they need more resources to handle additional load, they can get them just as quickly, since all resources come from an infrastructure shared across all your teams.

No more filling out forms to request new machines to run your application! Just provision and go, taking advantage of the tooling developed around Kubernetes to automate packaging, deployment, and testing. (We'll talk more about Helm in an upcoming section.)

2. Kubernetes is cost-efficient. Kubernetes and containers allow much better resource utilization than hypervisors and VMs. Because containers are lightweight, they require fewer CPU and memory resources to run.

3. Kubernetes is cloud agnostic. Kubernetes runs on Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP), and you can also run it on-premises. You can move workloads without having to redesign your application or completely rethink your infrastructure, which lets you standardize on one platform and avoid vendor lock-in.

In fact, companies such as Kublr, Cloud Foundry, and Rancher provide tooling to help you deploy and manage your Kubernetes cluster on-premises or on whichever cloud provider you want.

4. Cloud providers will manage Kubernetes for you. As noted earlier, Kubernetes is currently the standard for container orchestration tools. It's no surprise that the major cloud providers offer plenty of Kubernetes-as-a-service options. Amazon EKS, Google Kubernetes Engine (GKE), Azure Kubernetes Service (AKS), Red Hat OpenShift, and IBM Cloud Kubernetes Service all provide full management of the Kubernetes platform, so you can focus on what matters most to you: shipping applications that delight your users.

So, how does Kubernetes work?

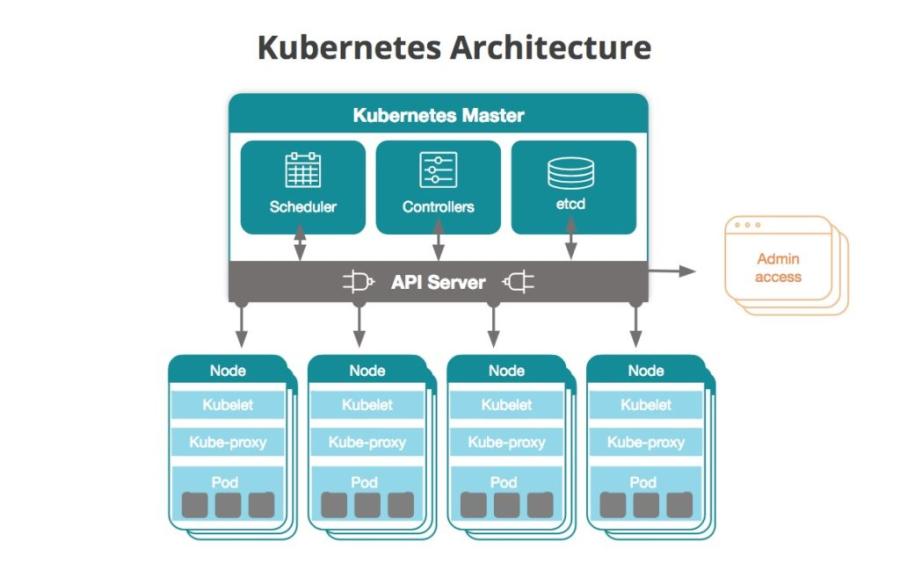

Within the Kubernetes hierarchy, there are clusters, nodes, and pods. These components work together to support containerized applications. A cluster is made up of multiple nodes responsible for running the application. Each node represents a physical (on-premises) or virtual (cloud-based) machine that helps the application run. Each node runs one or more pods, the smallest deployable units in Kubernetes. Each pod holds one or more containers. Think of a pod as a "container for containers" that provides the resources its containers need to run smoothly, including networking, storage, and scheduling information.
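To make the hierarchy concrete, here is a minimal Python sketch that models clusters, nodes, pods, and containers as nested data classes. It is only an illustration of the containment relationships described above, not how Kubernetes represents them internally; every class name, pod name, and image tag is a hypothetical example.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    name: str
    image: str


@dataclass
class Pod:
    name: str
    containers: list[Container] = field(default_factory=list)


@dataclass
class Node:
    name: str
    pods: list[Pod] = field(default_factory=list)


@dataclass
class Cluster:
    name: str
    nodes: list[Node] = field(default_factory=list)

    def container_count(self) -> int:
        """Count every container in every pod on every node."""
        return sum(len(pod.containers) for node in self.nodes for pod in node.pods)


# Hypothetical example data: one cluster, two nodes, three pods.
web = Pod("web", [Container("nginx", "nginx:1.25"),
                  Container("log-shipper", "example/shipper:1.0")])
api = Pod("api", [Container("api", "example/api:2.3")])
db = Pod("db", [Container("postgres", "postgres:16")])

cluster = Cluster("demo", [Node("node-a", [web, api]), Node("node-b", [db])])
total = cluster.container_count()
print(f"{cluster.name}: {len(cluster.nodes)} nodes, {total} containers")
```

Note that the `web` pod holds two containers, which mirrors the "container for containers" idea: both would share the pod's network and storage context.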

That is a somewhat simplified explanation of how Kubernetes works. At a deeper level, the central component of Kubernetes is the cluster. A cluster is made up of many virtual or physical machines, each serving a specialized function, either as a master or as a node. Each node hosts groups of one or more containers (which contain your applications), and the master communicates with nodes about when to create or destroy containers. At the same time, it tells nodes how to reroute traffic based on new container alignments.

The following diagram shows a high-level overview of a Kubernetes cluster:

Kubernetes master

The Kubernetes master is the access point (or control plane) from which administrators and other users interact with the cluster to manage the scheduling and deployment of containers. A cluster always has at least one master, but may have more depending on the cluster's replication pattern.

The master stores the state and configuration data for the entire cluster in etcd, a persistent and distributed key-value data store. Nodes do not read etcd directly: they learn how to maintain the configuration of the containers they run through the API server, which sits in front of etcd. You can run etcd on the Kubernetes master or in a standalone configuration.

Masters communicate with the rest of the cluster through the kube-apiserver, the main access point to the control plane. For example, the kube-apiserver makes sure that the configuration in etcd matches the configuration of the containers deployed in the cluster.

The kube-controller-manager runs control loops that manage the state of the cluster through the Kubernetes API server. Deployments, replicas, and nodes have controllers handled by this service. For example, the node controller is responsible for registering a node and monitoring its health throughout its lifecycle.
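The control-loop idea can be sketched in a few lines of Python: compare the desired state with the observed state and emit the actions that close the gap. This is a toy model under simplifying assumptions, not the kube-controller-manager's actual code; the `reconcile` function and the pod naming scheme are hypothetical.

```python
def reconcile(desired_replicas: int, running_pods: list[str]) -> list[str]:
    """Return the actions needed to move the observed state to the desired state."""
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods: create the missing ones (names are illustrative only).
        return [f"create pod-{len(running_pods) + i}" for i in range(diff)]
    if diff < 0:
        # Too many pods: delete the surplus, taking the last ones in the list.
        return [f"delete {name}" for name in running_pods[diff:]]
    return []  # Observed state already matches desired state.


scale_up = reconcile(3, ["pod-0"])
scale_down = reconcile(1, ["pod-0", "pod-1", "pod-2"])
steady = reconcile(2, ["pod-0", "pod-1"])
print(scale_up, scale_down, steady)
```

A real controller runs this comparison continuously and talks to the API server rather than returning strings, but the shape of the loop is the same: observe, diff, act.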

Node workloads in the cluster are tracked and managed by the kube-scheduler. This service keeps track of the capacity and resources of nodes and assigns work to nodes based on their availability.
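As a rough illustration of availability-based placement, the following Python sketch filters out nodes that cannot fit a pod and then picks the one with the most free CPU. The real kube-scheduler weighs many more factors; this single-metric heuristic, the `pick_node` function, and the node names are assumptions made for the example.

```python
from typing import Optional


def pick_node(free_cpu_millicores: dict[str, int], pod_request: int) -> Optional[str]:
    """Pick the node with the most free CPU that can still fit the pod."""
    # Filter step: keep only nodes with enough free capacity.
    candidates = {
        node: free for node, free in free_cpu_millicores.items() if free >= pod_request
    }
    if not candidates:
        return None  # No node fits; in Kubernetes the pod would stay pending.
    # Score step: prefer the node with the most free capacity.
    return max(candidates, key=candidates.get)


# Hypothetical free CPU per node, in millicores.
nodes = {"node-a": 500, "node-b": 1500, "node-c": 200}
placed = pick_node(nodes, 400)
unplaceable = pick_node(nodes, 2000)
print(placed, unplaceable)
```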

The cloud-controller-manager is a service running in Kubernetes that helps keep it cloud agnostic. The cloud-controller-manager serves as an abstraction layer between the APIs and tools of a cloud provider (for example, storage volumes or load balancers) and their representational counterparts in Kubernetes.

Nodes

All nodes in a Kubernetes cluster must be configured with a container runtime, such as containerd or CRI-O (historically this was most often Docker). The container runtime starts and manages the containers as Kubernetes deploys them to nodes in the cluster. Your applications (web servers, databases, API servers, and so on) run inside the containers.

Each Kubernetes node runs an agent process called the kubelet, which is responsible for managing the state of the node: starting, stopping, and maintaining application containers based on instructions from the control plane. The kubelet collects performance and health information from the node, pods, and containers it runs. It shares that information with the control plane to help it make scheduling decisions.

The kube-proxy is a network proxy that runs on nodes in the cluster. It also works as a load balancer for services running on a node.

The basic scheduling unit is a pod, which consists of one or more containers guaranteed to be co-located on the host machine and able to share resources. Each pod is assigned a unique IP address within the cluster, allowing the application to use ports without conflict.

You describe the desired state of the containers in a pod through a YAML or JSON object called a Pod Spec. These objects are passed to the kubelet through the API server.
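Since a pod definition can be expressed as JSON, a small Python sketch can build one as a dictionary and serialize it. The field names below follow the core `v1` Pod schema; the pod name, label, and image tag are hypothetical values chosen for illustration.

```python
import json

# A minimal Pod manifest. The name, label, and image are example values.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web", "labels": {"app": "web"}},
    "spec": {
        "containers": [
            {
                "name": "nginx",
                "image": "nginx:1.25",
                "ports": [{"containerPort": 80}],
            }
        ]
    },
}

pod_json = json.dumps(pod_manifest, indent=2)
print(pod_json)
```

Saved to a file, output like this could typically be submitted to a cluster with `kubectl apply -f`, which accepts JSON as well as YAML.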

A pod can define one or more volumes, such as a local disk or network disk, and expose them to the containers in the pod, which lets different containers share storage space. For example, volumes can be used when one container downloads content and another container uploads that content somewhere else.

Because the containers inside pods are often ephemeral, Kubernetes offers a type of load balancer, called a service, to simplify sending requests to a group of pods. A service targets a logical set of pods selected based on labels (explained below). By default, services can be accessed only from within the cluster, but you can also enable public access to them if you want them to receive requests from outside the cluster.
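The way a service targets pods by label can be sketched as a simple filter followed by a rotation over the matching pods. This is a toy model: the pod records and IP addresses are invented, and strict round-robin is used only for simplicity, since the actual distribution of requests depends on how kube-proxy is configured.

```python
from itertools import cycle


def select_pods(pods: list[dict], selector: dict[str, str]) -> list[dict]:
    """Return pods whose labels contain every key/value pair in the selector."""
    return [
        pod for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


# Hypothetical pods with example IPs and labels.
pods = [
    {"name": "web-1", "ip": "10.0.0.1", "labels": {"app": "web"}},
    {"name": "web-2", "ip": "10.0.0.2", "labels": {"app": "web"}},
    {"name": "db-1", "ip": "10.0.0.3", "labels": {"app": "db"}},
]

backends = select_pods(pods, {"app": "web"})
rotation = cycle(pod["ip"] for pod in backends)
first_three = [next(rotation) for _ in range(3)]
print(first_three)
```

Because selection is by label rather than by pod name or IP, pods can come and go without callers of the service having to change anything.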

Deployments and replicas

A deployment is a YAML object that defines the pods and the number of pod instances, called replicas, to run for each pod. You define the number of replicas you want running in the cluster through a ReplicaSet, which is part of the deployment object. So, for example, if a node running a pod dies, the ReplicaSet ensures that another pod is scheduled on another available node.
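As an illustration, here is a Deployment manifest written as a Python dictionary, with a small check that its selector matches the labels on its pod template (the API rejects a Deployment where they do not match). The field names follow the `apps/v1` Deployment schema; the names, labels, image, and the `selector_matches_template` helper are hypothetical.

```python
import json

# A minimal Deployment asking for three replicas of one pod template.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 3,
        "selector": {"matchLabels": {"app": "web"}},
        "template": {
            "metadata": {"labels": {"app": "web"}},
            "spec": {"containers": [{"name": "nginx", "image": "nginx:1.25"}]},
        },
    },
}


def selector_matches_template(manifest: dict) -> bool:
    """Check that every selector label is present on the pod template."""
    selector = manifest["spec"]["selector"]["matchLabels"]
    labels = manifest["spec"]["template"]["metadata"]["labels"]
    return all(labels.get(key) == value for key, value in selector.items())


is_consistent = selector_matches_template(deployment)
print(json.dumps(deployment, indent=2))
```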

A DaemonSet deploys and runs a specific daemon (in a pod) on nodes you specify. DaemonSets are most often used to provide services or maintenance to pods. For example, a DaemonSet is how New Relic Infrastructure gets the infrastructure agent deployed across all nodes in a cluster.

Namespaces

Namespaces let you create virtual clusters on top of a physical cluster. Namespaces are intended for use in environments with many users spread across multiple teams or projects. They assign resource quotas and logically isolate cluster resources.

Labels

Labels are key/value pairs that you can assign to pods and other objects in Kubernetes. Labels let Kubernetes operators organize and select a subset of objects. For example, when monitoring Kubernetes objects, labels let you quickly drill down to the information you care about most.

StatefulSets and persistent storage volumes

StatefulSets give you the ability to assign unique IDs to pods in case you need to move pods to other nodes, maintain networking between pods, or persist data between them. Similarly, persistent storage volumes provide storage resources for a cluster to which pods can request access as they are deployed.

Other useful components

The following Kubernetes components are useful but not required for regular Kubernetes functionality.

Kubernetes DNS

Kubernetes provides this mechanism for DNS-based service discovery between pods. This DNS server works alongside any other DNS servers you may use in your infrastructure.
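In practice, DNS-based discovery means a service is reachable by a predictable name. The sketch below builds that name using the conventional `<service>.<namespace>.svc.<cluster-domain>` pattern, assuming the default cluster domain `cluster.local`; the helper function and the service and namespace names are hypothetical.

```python
def service_dns_name(
    service: str,
    namespace: str = "default",
    cluster_domain: str = "cluster.local",  # assumed default; clusters can override it
) -> str:
    """Build the fully qualified in-cluster DNS name for a service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


fqdn = service_dns_name("payments", "shop")
print(fqdn)
```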

Cluster-level logs

If you have a logging tool, you can integrate it with Kubernetes to extract and store application and system logs from within a cluster, written to standard output and standard error. If you want to use cluster-level logs, note that Kubernetes does not provide native log storage; you must provide your own log storage solution.

Helm: managing Kubernetes applications

Helm is an application package manager for Kubernetes, maintained by the CNCF. Helm charts are preconfigured software application resources that you can download and deploy in your Kubernetes environment. According to a 2020 CNCF survey, 63% of respondents said Helm was the preferred package management tool for Kubernetes applications. Helm charts can help DevOps teams get up to speed more quickly with managing applications in Kubernetes. They let teams use existing charts that they can share, version, and deploy into their development and production environments.

Kubernetes and Istio: a popular pairing

In a microservices architecture like those that run in Kubernetes, a service mesh is an infrastructure layer that lets your service instances communicate with one another. The service mesh also lets you configure how your service instances perform critical actions such as service discovery, load balancing, data encryption, and authentication and authorization. Istio is one such service mesh, and current thinking from tech leaders such as Google and IBM suggests that Kubernetes and Istio are becoming increasingly inseparable.

The IBM Cloud team, for example, uses Istio to address the control, visibility, and security issues it encountered while deploying Kubernetes at scale. More specifically, Istio helps IBM:

- Connect services together and control the flow of traffic

- Secure interactions between microservices with flexible authorization and authentication policies

- Provide a control point so IBM can manage services in production

- Observe what is happening in its services, through an adapter that sends Istio data to New Relic, making it possible to monitor Kubernetes microservices performance data alongside the application data it already collects

Challenges to Kubernetes adoption

The Kubernetes platform has come a long way since its initial release. That kind of rapid growth, though, also involves occasional growing pains. Here are a few challenges with Kubernetes adoption:

1. The Kubernetes technology landscape can be confusing. One thing developers love about open source technologies like Kubernetes is the potential for fast-paced innovation. But sometimes too much innovation creates confusion, especially when the core Kubernetes code base moves faster than users can keep up with. Add a plethora of platforms and managed service providers, and it can be hard for new adopters to make sense of the landscape.

2. Forward-thinking development and IT teams are not always aligned with business priorities. When budgets are allocated only to maintain the status quo, it can be hard for teams to get funding to experiment with Kubernetes adoption initiatives, because such experiments often absorb a significant amount of the team's time and resources. In addition, enterprise IT teams are often averse to risk and slow to change.

3. Teams are still acquiring the skills required to benefit from Kubernetes. It was only a few years ago that developers and IT operations staff had to readjust their practices to adopt containers, and now they have to adopt container orchestration too. Companies that want to adopt Kubernetes need to hire professionals who can code as well as manage operations and understand application architecture, storage, and data workflows.

4. Kubernetes can be difficult to manage. In fact, you can read any number of Kubernetes horror stories, from DNS outages to "a cascading failure of distributed systems," in the Kubernetes Failure Stories GitHub repository.

Kubernetes best practices

Optimize your Kubernetes infrastructure for performance, reliability, security, and scalability by following these best practices:

- Resource requests and limits: set resource requests and limits for containers. Requests specify the minimum resources a container needs, while limits prevent the container from using excessive resources. This supports fair resource allocation and avoids resource contention.

- Liveness and readiness probes: implement liveness and readiness probes in your containers. Liveness probes determine whether a container is running, and readiness probes indicate when a container is ready to serve traffic. Correctly configured probes improve application reliability.

- Secrets management: use Kubernetes Secrets to store sensitive information such as passwords and API keys. Avoid hard-coding secrets in configuration files or Docker images, which improves security.

- Network policies: implement network policies to control communication between pods. Network policies help define rules for ingress and egress traffic, improving cluster security.

- Role-based access control (RBAC): implement RBAC to restrict access to the cluster. Define roles and role bindings to grant users and services the minimum privileges they need, improving security.

- Immutable infrastructure: treat your infrastructure as immutable. Avoid making changes directly to running containers. Instead, redeploy with the necessary modifications. Immutable infrastructure simplifies management and reduces the risk of configuration drift.

- Backup and disaster recovery: regularly back up critical data and configurations. Implement disaster recovery plans to restore your cluster in case of failures.

- Horizontal pod autoscaling (HPA): use horizontal pod autoscaling to automatically scale the number of pods in a deployment based on CPU utilization or custom metrics. HPA helps keep resource usage and application performance in balance.

- Monitoring and logging: set up monitoring and logging solutions to gain insight into cluster health and application behavior.
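To show how horizontal pod autoscaling arrives at a replica count, here is a small Python sketch of the core calculation described in the Kubernetes documentation: desired replicas = ceil(current replicas × current metric ÷ target metric). It is a simplification that leaves out details of the real autoscaler, such as its tolerance band and stabilization behavior; the `min_replicas` and `max_replicas` bounds and all numbers are example assumptions.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Scale the replica count in proportion to how far the metric is from target."""
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    # Clamp to the configured bounds so the workload never scales past them.
    return max(min_replicas, min(max_replicas, raw))


# Example: 4 pods with a 60% average CPU utilization target.
scale_out = desired_replicas(4, current_metric=90, target_metric=60)
scale_in = desired_replicas(4, current_metric=30, target_metric=60)
capped = desired_replicas(4, current_metric=300, target_metric=60)
print(scale_out, scale_in, capped)
```

Because utilization is measured relative to each container's resource request, autoscaling on CPU only behaves sensibly when requests are set, which ties this practice back to the first item in the list.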

New Relic can support your Kubernetes journey

You need to fully understand the performance of your cluster and workloads, and you need to be able to do so quickly and easily. With New Relic, you can troubleshoot faster by analyzing your entire cluster in a single user interface, without having to update code, redeploy your applications, or go through long standardization processes within your teams. Auto-telemetry with Pixie gives you instant visibility into Kubernetes clusters and workloads in just a few minutes.

The Kubernetes cluster explorer provides one place where you can analyze all your Kubernetes entities: nodes, namespaces, deployments, ReplicaSets, pods, containers, and workloads. Each slice of the "pie" represents a node, and each hexagon represents a pod. Select a pod to analyze the performance of your application, including access to related log files.

Analyze pod and application performance

You can analyze distributed traces from each application. When you click an individual span, you see the related Kubernetes attributes (for example, related pods, clusters, and deployments).

And you can also get distributed tracing data from the applications running in your cluster.

Next steps

Get started today by deploying auto-telemetry with Pixie (EU link).

This post was updated from a previous version published in July 2018.

The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. Please join us exclusively at the Explorers Hub (discuss.newrelic.com) for questions and support related to this blog post. This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve, or endorse the information, views, or products available on such sites.