A modern technology stack is a marvel of ingenuity, imagination, and engineering prowess. Most of these environments, however, share another key trait: fragility. As it turns out, keeping a complex system running can be more challenging (and stressful!) than building it in the first place.

That's why New Relic, like most technology firms, takes its incident response processes very seriously. But there's always room for improvement, both in how teams prepare for and respond to incidents and in how they use retrospectives to learn from those incidents.

That was a key message at a recent FutureTalk—New Relic's monthly tech-talk series—on "Incident Lessons and Learning from SNAFUs." It's also the basis for New Relic's partnership with the SNAFUcatchers project, which brings together experts from a number of disciplines to help tech companies cope with complexity, especially around topics related to incidents and incident response.

Watch the video here, and read on for four key insights from the presentation:

1. Incident response involves working at the ‘sharp end’

The FutureTalk featured Beth Long, formerly an engineer with New Relic's Reliability Awareness team and now a DevOps Solutions Strategist, and Tim Tischler, a New Relic Site Reliability Champion. Long and Tischler discussed the special role that incident response plays in maintaining highly complex yet mission-critical systems. These environments may be inherently unstable, but unplanned downtime is not an option.

This is what development and operations teams call the "sharp end" of their fields. It's also where, as Long pointed out, there are important lessons to learn from other professionals, including first responders and medical professionals. "Firefighters are the sharp end; they're not sitting in an office" running risk models, Long said. "They're on the ground, they're dealing with the blaze in real time."

"Medical professionals, especially ER doctors and surgeons and anesthesiologists, are the sharp end of medicine," Long added, citing the work of anesthesiologist Dr. Richard Cook—a SNAFUcatchers co-founder and a key figure in the field of incident management—as an especially important influence.

2. Incident response is a team sport

"Incidents are almost always group activities," Long stated. "You have a group that's coming together to deal with it, especially the more interesting incidents that cross team boundaries and require a lot of collaboration."

The collaborative nature of the incident response process raises another issue. "Collaboration is expensive," she explained, "so the theme for the research that we're doing with SNAFUcatchers is the cost of coordination: How do you pay the cost of getting the resources that you need into the room in the first place?"

3. It’s important to recognize—and avoid—the post-mortem ‘cycle of death’

Ironically, the real site reliability work often begins after a team resolves an incident. The post-mortem, or what New Relic calls the retrospective phase, is the point where a team gathers incident response data, interviews participants, compiles its knowledge and lessons learned, and applies these insights to make lasting improvements.

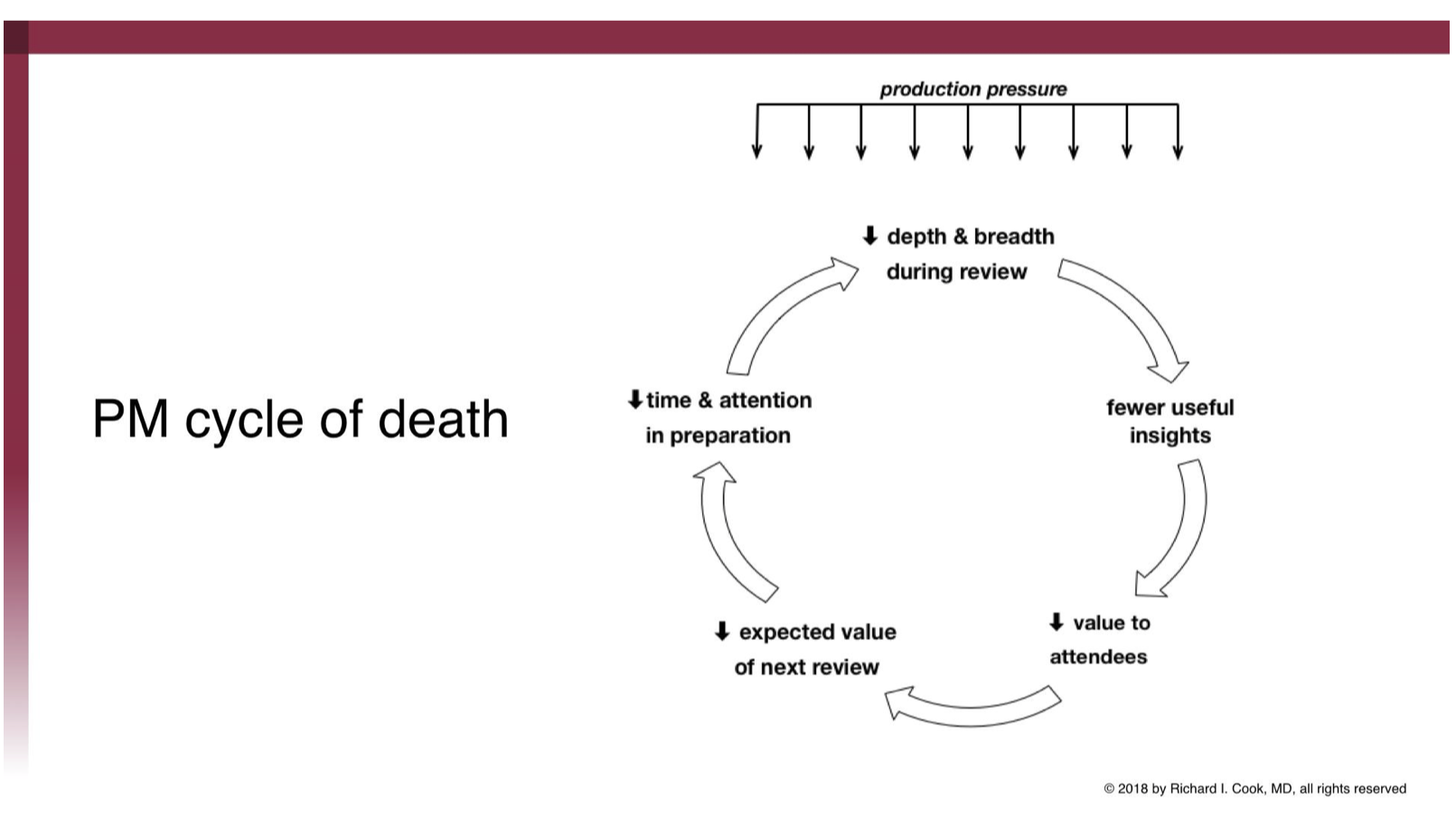

That's what a retrospective should accomplish, at any rate. The reality, however, is that over time, the retrospective process often degenerates into an empty formality—the victim of what Dr. Cook terms the "post-mortem cycle of death."

"We're all under production pressure," Long told the FutureTalk attendees. "We're constantly making this tradeoff: How much time and energy do we spend comparing perspectives and talking, and how much time do we spend doing the real work? There's a natural tendency to skimp a little on the retrospective, and so we don't get quite as much out of it."

Over time, that tendency creates a feedback loop. "Eventually, you get retrospectives that are busywork," Long said. "That's the unfortunate end of the spiral of death."

It's not easy to reverse that process, but New Relic's approach is to tread lightly on emotionally charged, high-impact cases and to dive deep into retrospectives on incidents involving many participants, cross-team dependencies, and interesting scenarios.

This in-depth process involves something called process-tracing, which New Relic has been learning from the team at SNAFUcatchers, Long explained. In addition to a canonical post-mortem document and extensive interviews with participants, process-tracing also incorporates perspectives from outside the New Relic team.

"This is the work with SNAFUcatchers," Long said, "getting experts to come in and look at the artifacts that we've generated and help us understand how to study what is happening."

4. Learn how to maximize the value and impact of incident retrospectives

Long and Tischler also shared several other keys to improving the quality and lasting impact of a team's incident retrospectives:

One common incident-response error, Tischler said, is the search for a single "root cause" of an incident. "The root cause fallacy is basically saying X caused Y, even though in reality ... so did A and B and C and D and E," he explained. "If any of those things hadn't been true, there wouldn't have been an incident, but it's the one that changed last that makes us go, 'Aha!'"

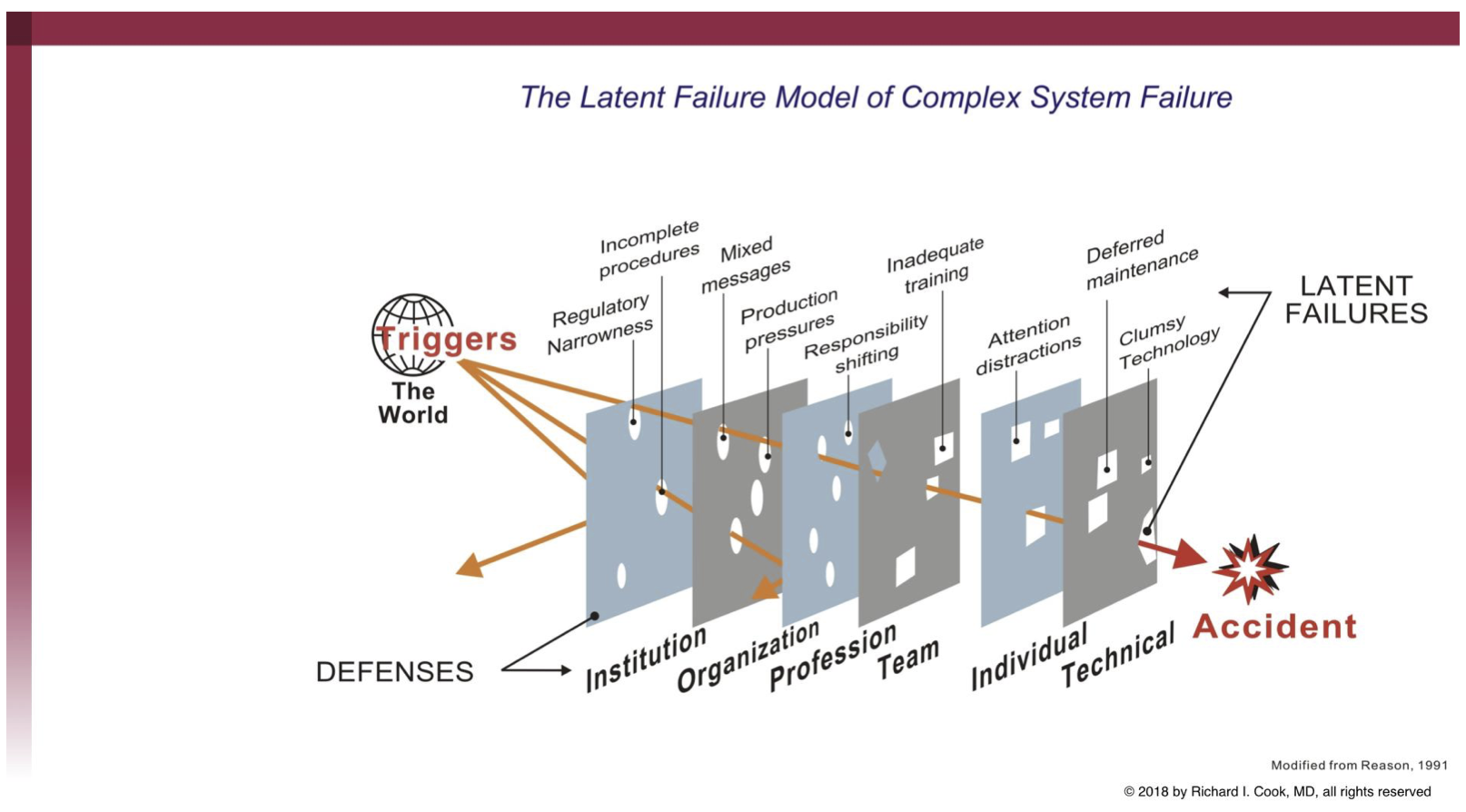

The visual summary above, known as the Latent Failure or "Swiss Cheese" model, shows how multiple failures, across many different teams and capabilities, must align for a major failure to happen. "There is something to be learned at every single step along the way," Tischler said, "because any one of these capabilities could have done something different that might have stopped the incident before it occurred."

Another frequently cited factor in many incidents, Long pointed out, is human error. Yet while it’s easy to blame an individual, doing so rarely tells the whole story.

For example, after the September 2017 Equifax data breach exposed personal data for nearly 150 million people, the company's CEO attributed the incident to human error. Pinning responsibility on a single employee, Long contended, was both too simplistic and extremely unfair. "There's an individual making the change that caused the opening for the data loss, but couldn't there have been a check before that?" she asked. "Was there an alternate way [data] could have been stored?"

By comparison, Long noted, Amazon explicitly refused to blame its February 2017 S3 outage on the engineer who entered the command that triggered it. Instead, she said, "[AWS] said: 'You should not be able to hit enter at the command line and take down S3 US-East-1. If you can do that, there's a bigger systemic problem. That's the problem we need to solve.'" As a result, Long stated, Amazon was able to focus on improving its processes.

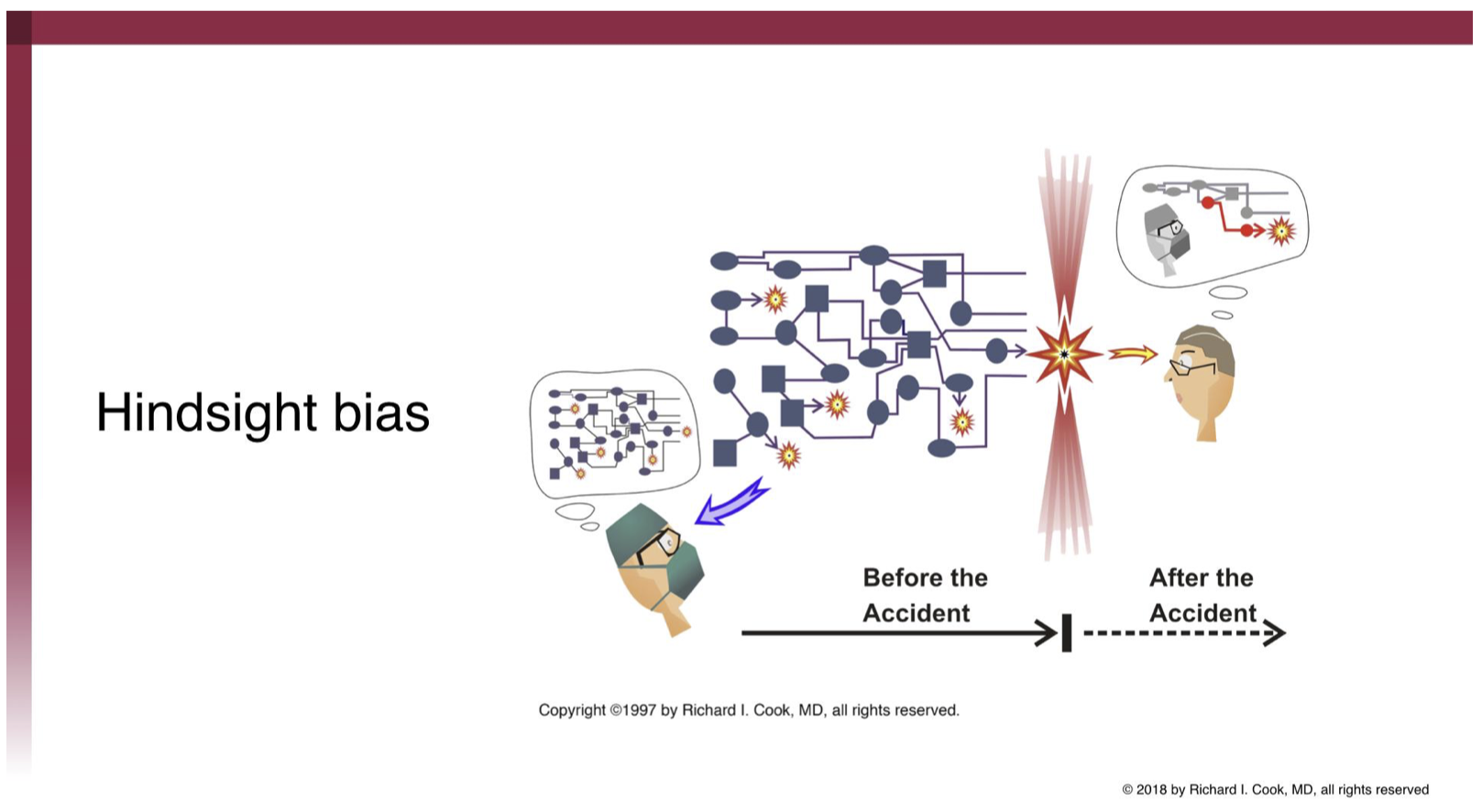

Finally, Tischler addressed hindsight bias, the human tendency (illustrated above) to recast the chaos and uncertainty of an incident, after the fact, as a tidy sequence of events that seems to have been predictable all along.

"One of the challenges in running more complex ... incident analysis is making sure that we walk people through the [incident] transcript from the beginning, step by step, so that they're rebuilding the context of the incident as they go," Tischler stated. Asking open-ended questions, on the other hand, invites an idealized recollection of events.

It's also important to ask quickly. New Relic tries to conduct a retrospective on high-severity incidents within one business day, in order to capture details while they're still fresh for the participants. "There's constant pushback" from competing deadlines and priorities, Long acknowledged. "But that immediacy is really important."

Improving upon the "unknowable"

Confoundingly, modern, complex systems are "fundamentally unknowable" to some extent, Long cautioned. "There's no way that we can fully comprehend these systems."

But incidents and retrospectives, she said, can reveal the most pressing sources of risk within those systems and motivate organizations to invest in useful changes. "Incidents help us look at the areas of the system where we can most productively investigate and dig into that complexity," Long said. "They can help us get the resources that we need to do the work that is usually avoided because risk is typically invisible."

Don’t miss our next FutureTalks event

For more information about our FutureTalks series, make sure to join our Meetup group, New Relic FutureTalks PDX, and follow us on Twitter @newrelic for the latest developments and updates on upcoming events.

The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and are not part of the commercial solutions or support offered by New Relic. Please join us exclusively at the Explorers Hub (discuss.newrelic.com) for questions and support related to this blog post. This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve, or endorse the information, views, or products available on those sites.