The AI landscape is continually evolving, and with each advancement comes the promise of greater efficiency, improved performance, and reduced costs. OpenAI's latest offering, GPT-4o, has been positioned as a transformative leap forward, especially for businesses leveraging compound AI systems. GPT-4o is said to offer faster response times, lower operational costs, and enhanced capabilities in areas such as natural language processing and multilingual support. However, transitioning to a new model is a complex decision that requires careful consideration.

We at New Relic are currently evaluating the potential switch from GPT-4 Turbo to GPT-4o for our GenAI assistant, New Relic AI. Having previously navigated the transition from GPT-4 to GPT-4 Turbo, we understand that the reality of adopting new technology often differs from initial expectations. In this blog, we aim to provide a practitioner's perspective on transitioning to GPT-4o. We’ll explore the practical implications of such a move, including performance metrics, integration challenges, capacity considerations, and cost efficiency, based on our preliminary tests and experiences. Our goal is to offer a balanced view that addresses both the potential benefits and the challenges, helping other businesses make informed decisions about whether GPT-4o is the right choice for their AI systems.

Understanding the GPT-4o model

To evaluate GPT-4o effectively, it's important to understand its key features and expected benefits. This section provides a brief overview of what GPT-4o offers.

Analysis capabilities

GPT-4o is designed to enhance natural language processing tasks, offering improved accuracy and understanding in complex queries. It's expected to perform better in multilingual environments and provide more relevant responses.

Resource efficiency

One of the main selling points of GPT-4o is its resource efficiency. It promises to deliver high performance while using fewer computational resources, potentially leading to lower operational costs. This aspect is particularly important for businesses looking to scale their AI operations without significantly increasing their infrastructure costs.

Usability and integration

GPT-4o claims to integrate seamlessly with existing workflows and tools. Its improvements are intended to make it easier for developers to incorporate the model into their applications, reducing the time and effort required for integration. However, as we'll see in the performance evaluation, the practical experience may vary.

Accessibility and pricing

A significant advantage of GPT-4o is its cost efficiency. It’s approximately half as expensive as its predecessors, making it an attractive option for companies aiming to reduce operational costs. This pricing can significantly lower the barrier to entry for advanced AI capabilities, enabling more businesses to leverage the power of GPT-4o in their operations.

Evaluating the hype

While the marketed benefits of GPT-4o are appealing, it’s important to critically assess these through practical testing. Our initial experiences with GPT-4o have provided mixed results, warranting a deeper dive into specific aspects.

To evaluate GPT-4o comprehensively, we conducted a series of experiments focusing on various aspects of performance and integration:

- Latency and throughput tests: We measured the speed of response and processing capabilities during peak and off-peak hours.

- Quality of outputs: We evaluated the accuracy and relevance of responses across different tasks.

- Workflow integration: We assessed how well GPT-4o integrates with our existing tools and workflows.

- Token efficiency: We compared the token usage per prompt between GPT-4 Turbo and GPT-4o to understand cost implications.

- Scalability tests: We monitored performance under increasing loads to assess scalability.

- Cost analysis: We analyzed the cost implications based on token usage and operational efficiency.

Performance evaluation

In our evaluation of GPT-4o, we compared its performance against two other models: GPT-4 Turbo and GPT-4 Turbo PTU, accessed through Azure OpenAI. At New Relic, we primarily use GPT-4 Turbo via the provisioned throughput unit (PTU) option, which offers dedicated resources and lower latency compared to the pay-as-you-go model. This comparison aims to provide a clear picture of how GPT-4o stacks up in terms of throughput and output quality under different conditions.

Throughput analysis

Throughput, measured in tokens per second, reflects a model's ability to handle large volumes of data efficiently. It also gives an indication of latency, as higher throughput generally correlates with lower latency.
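As a rough illustration of the metric, the sketch below divides the number of generated tokens by the wall-clock time of a single call. The `generate` callable is a hypothetical wrapper around whichever client you use (it is not part of any SDK), and we assume it returns the response text together with the completion token count.

```python
import time
from typing import Callable, Tuple


def tokens_per_second(completion_tokens: int, elapsed_seconds: float) -> float:
    """Throughput of a single response: generated tokens divided by wall-clock time."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return completion_tokens / elapsed_seconds


def measure_throughput(generate: Callable[[str], Tuple[str, int]], prompt: str) -> float:
    """Time one call to `generate` and return its throughput.

    `generate` is a hypothetical wrapper (an assumption for this sketch); it is
    expected to return (response_text, completion_token_count).
    """
    start = time.perf_counter()
    _, completion_tokens = generate(prompt)
    return tokens_per_second(completion_tokens, time.perf_counter() - start)
```

Because the timer covers the whole request, this number includes network and queueing time, which is why it also says something about latency.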

Our initial tests on May 24 revealed distinct performance characteristics among the three models:

- GPT-4 Turbo PTU: Showed a throughput of around 35 tokens/sec. The high throughput reflects the benefits of the dedicated resources provided by the PTU, making it suitable for high-volume data processing tasks where consistent performance is critical.

- GPT-4 Turbo: Operating under the pay-as-you-go model, GPT-4 Turbo showed a peak throughput of around 15–20 tokens/sec. While efficient, it did exhibit some limitations compared to the PTU model, likely due to the shared resource model that introduces variability.

- GPT-4o: Demonstrated a throughput of around 50 tokens/sec, in line with OpenAI's claim that GPT-4o is 2x faster than GPT-4 Turbo. While GPT-4o showed potential in handling large-scale data processing, it also exhibited more variability, suggesting performance could fluctuate based on load conditions.

We continued to monitor the performance of these models over time to understand how they cope with varying loads. Further tests conducted on June 11 provided additional insights into the evolving performance of these models:

- GPT-4 Turbo PTU: Showed a rather consistent performance with peak around 35 tokens/sec. This consistency reaffirms the benefits of PTU for applications requiring reliable and high-speed processing.

- GPT-4 Turbo: Maintained a peak throughput of around 15–20 tokens/sec, but showed a slightly lower peak density, indicating more variability and therefore less predictable latency.

- GPT-4o: Showed a significant decrease in throughput, with the peak now around 20 tokens/sec. Based on our experience with the transition from GPT-4 to GPT-4 Turbo, it's reasonable to assume that higher demand on GPT-4o endpoints (accessed via the pay-as-you-go model) could further degrade its efficiency, potentially impacting its suitability for applications that require consistent high throughput and low latency.
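One way to summarize repeated throughput measurements like these is to look at where the distribution peaks and how widely it spreads. The sketch below is only an assumption about how such a summary could be computed: it treats the "peak" as the most populated histogram bin, uses an arbitrary bin width of 5 tokens/sec, and reports variability as the coefficient of variation.

```python
from collections import Counter
from statistics import mean, pstdev
from typing import Sequence, Tuple


def throughput_peak(samples: Sequence[float], bin_width: float = 5.0) -> Tuple[float, float]:
    """Return (bin_start, share_of_samples) for the most populated histogram bin."""
    if not samples:
        raise ValueError("samples must not be empty")
    bins = Counter(int(s // bin_width) for s in samples)
    # Prefer the fullest bin; break ties in favor of the lower bin.
    index, count = max(bins.items(), key=lambda kv: (kv[1], -kv[0]))
    return index * bin_width, count / len(samples)


def coefficient_of_variation(samples: Sequence[float]) -> float:
    """Standard deviation relative to the mean; higher means less predictable."""
    return pstdev(samples) / mean(samples)
```

A falling share in the peak bin, or a rising coefficient of variation between two test dates, would point to the kind of variability described above.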

Quality of outputs

In addition to throughput, we evaluated the quality of outputs from each model, focusing on their ability to generate accurate and relevant responses across various tasks. This evaluation includes natural language processing, multilingual support, overall consistency, and integration with existing workflows.

Natural language processing and multilingual support

- GPT-4 Turbo: Both provisioning options of GPT-4 Turbo consistently generate quality responses across various tasks and perform generally well in natural language processing applications.

- GPT-4o: Excels in understanding and generating natural language, making it highly effective for conversational AI tasks. The o200k_base tokenizer is optimized for various languages, enhancing performance in multilingual contexts and reducing token usage. However, GPT-4o tends to provide longer responses and may hallucinate more frequently, which can be a limitation in applications requiring concise answers.

Accuracy and consistency

- GPT-4 Turbo: In tasks requiring high precision, GPT-4 Turbo performs reasonably well, correctly identifying 60–80% of the data in complex data extraction tasks. However, consistency in response accuracy and behavior when the same task is repeated can vary depending on the specific use case and setup.

- GPT-4o: Shows comparable performance to GPT-4 Turbo. In some fields, GPT-4o slightly outperforms GPT-4 Turbo in accuracy, though this varies by task. In complex data extraction tasks, for instance, GPT-4o also correctly identifies 60–80% of the data, which is comparable to GPT-4 Turbo. However, GPT-4o shows significant variability in response consistency, particularly when asked to repeat the same task multiple times.
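The two measures in this comparison, the share of data correctly identified and the consistency across repeated runs, can be approximated with very little code. The sketch below is a simplified assumption of how they might be computed, not a description of our evaluation harness: extraction quality is treated as recall over a set of expected items, and consistency as the share of runs that match the most common output after whitespace and case normalization.

```python
from collections import Counter
from typing import Sequence, Set


def extraction_recall(expected: Set[str], extracted: Set[str]) -> float:
    """Share of expected items that the model actually extracted."""
    if not expected:
        raise ValueError("expected must not be empty")
    return len(expected & extracted) / len(expected)


def consistency_rate(outputs: Sequence[str]) -> float:
    """Share of repeated runs that agree with the most common output (1.0 = identical)."""
    if not outputs:
        raise ValueError("outputs must not be empty")
    # Collapse whitespace and case so trivial formatting changes do not count.
    normalized = [" ".join(o.split()).lower() for o in outputs]
    _, top_count = Counter(normalized).most_common(1)[0]
    return top_count / len(normalized)
```

Exact-match consistency is strict; for free-form answers a semantic similarity measure would likely be more forgiving, at the cost of more setup.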

Integrations and workflow efficiency

- GPT-4 Turbo: Generally integrates well with existing tools and workflows, ensuring smooth operations and consistent performance. It leverages integrations to provide comprehensive and contextually relevant answers, making it a reliable option for various applications. However, the model still lacks precision.

- GPT-4o: Although GPT-4 Turbo and GPT-4o show comparable performance, GPT-4o sometimes attempts to answer questions directly rather than leveraging integrated tools. This can disrupt workflow efficiency in systems that rely on tool integrations or functions for context-relevant responses.

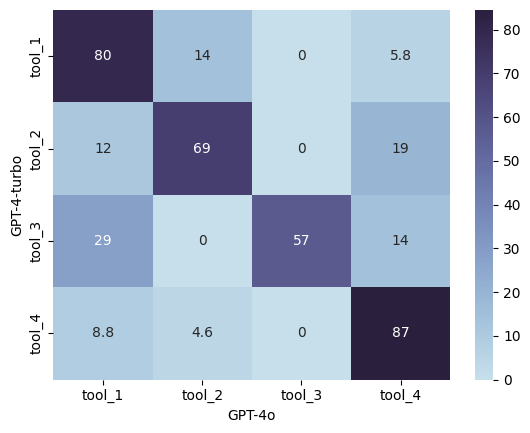

The confusion matrix above provides further insights into how GPT-4 Turbo and GPT-4o handle function calls differently, reflecting their interpretations of function descriptions and user queries in the case of New Relic AI.

The models show high agreement on certain tools, such as tool_4 with 87% agreement, indicating consistent interpretation of this function. However, the agreement drops to 57% for tool_3, showing variability in processing this particular tool. Both models exhibit similar patterns of misclassification, highlighting nuanced differences in their function calling behavior. This suggests that even though GPT-4o is faster, better and less expensive on paper, replacing GPT-4 Turbo with GPT-4o will not necessarily yield identical behavior. There are always nuances and models can return an unexpected result.
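For readers who want to run a similar comparison, the sketch below computes a per-tool agreement rate from paired function-call decisions. It assumes one reading of "agreement": for every query where the baseline model picked a given tool, the share of those queries where the candidate model picked the same one (a row-normalized diagonal of the confusion matrix). The tool names and the choice of baseline are illustrative.

```python
from collections import defaultdict
from typing import Dict, Sequence


def per_tool_agreement(
    baseline_calls: Sequence[str], candidate_calls: Sequence[str]
) -> Dict[str, float]:
    """Map each tool chosen by the baseline to the share of matching candidate choices.

    Both sequences hold one tool name per query, in the same query order.
    """
    if len(baseline_calls) != len(candidate_calls):
        raise ValueError("both models must be evaluated on the same queries")
    totals: Dict[str, int] = defaultdict(int)
    matches: Dict[str, int] = defaultdict(int)
    for baseline_tool, candidate_tool in zip(baseline_calls, candidate_calls):
        totals[baseline_tool] += 1
        if baseline_tool == candidate_tool:
            matches[baseline_tool] += 1
    return {tool: matches[tool] / totals[tool] for tool in totals}
```

Including a label such as `"none"` for queries answered without any tool makes it easier to spot a model that answers directly instead of calling a function.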

Tokenizer efficiency

Tokenizer efficiency plays a critical role in the overall performance and cost-effectiveness of the models, especially in multilingual contexts.

| Model | Tokenizer | Efficiency |
|---|---|---|
| GPT-4 Turbo | cl100k_base | |
| GPT-4o | o200k_base | |
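To check tokenizer efficiency on your own content, you can count tokens for the same text under both encodings. The sketch below assumes the open-source `tiktoken` package is installed and loads the `cl100k_base` and `o200k_base` encodings by name; the counting helper itself accepts any encoder function, so it can be exercised without the package.

```python
from typing import Callable, Dict, List

Encoder = Callable[[str], List[int]]


def token_counts(text: str, encoders: Dict[str, Encoder]) -> Dict[str, int]:
    """Return the number of tokens each encoder produces for the same text."""
    return {name: len(encode(text)) for name, encode in encoders.items()}


def load_tiktoken_encoders() -> Dict[str, Encoder]:
    """Load both encodings; requires the third-party `tiktoken` package."""
    import tiktoken  # imported lazily so token_counts works without it

    return {
        name: tiktoken.get_encoding(name).encode
        for name in ("cl100k_base", "o200k_base")
    }
```

A call such as `token_counts(sample_text, load_tiktoken_encoders())` on representative prompts in each language you support gives a quick sense of how much the newer tokenizer saves for your workload.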

Cost efficiency

OpenAI's claim of reduced costs with GPT-4o is a significant selling point. GPT-4o is approximately half as expensive as its predecessors, with input tokens priced at $5 per million and output tokens at $15 per million. Additionally, GPT-4o allows for five times more frequent access compared to GPT-4 Turbo, which can be highly beneficial for applications requiring continuous data processing or real-time analytics.

| Feature | GPT-4 Turbo | GPT-4o |
|---|---|---|
| Input tokens | $10 per million tokens | $5 per million tokens |
| Output tokens | $30 per million tokens | $15 per million tokens |
| Rate limits | Standard OpenAI API policies | Five times more frequent access |

However, our previous experience with transitioning from GPT-4 to GPT-4 Turbo highlighted some important lessons. Despite the advertised cost reduction per 1,000 tokens, we didn’t see the expected savings in certain use cases. This discrepancy was primarily observed in use cases without a specified output format, where the model is free to generate as much text as it sees fit. For example, in retrieval-augmented generation (RAG) tasks, where the model tends to be more "chatty", the new version of the model can generate more tokens per response. For the same question to New Relic AI, "How do I instrument my Python application?", the new version can generate a 360-token answer, while the old one generated a 300-token answer. In such cases, you won't be getting the advertised 50% reduction in cost.
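The arithmetic behind that last point is easy to check. The sketch below looks at output tokens only and uses placeholder prices that simply encode a 50% price cut; with the answer growing from 300 to 360 tokens, the effective saving works out to about 40% rather than 50%.

```python
def cost_usd(tokens: int, price_per_million: float) -> float:
    """Cost of a number of tokens at a given price per million tokens."""
    return tokens * price_per_million / 1_000_000


def effective_saving(
    old_tokens: int, new_tokens: int, old_price: float, new_price: float
) -> float:
    """Fractional cost reduction once the change in response length is included."""
    old_cost = cost_usd(old_tokens, old_price)
    new_cost = cost_usd(new_tokens, new_price)
    return 1 - new_cost / old_cost


# Placeholder prices: any pair where the new price is half the old one
# gives the same result for this ratio of token counts.
saving = effective_saving(old_tokens=300, new_tokens=360, old_price=30.0, new_price=15.0)
print(f"Effective saving: {saving:.0%}")  # prints "Effective saving: 40%"
```

By the same formula, a half-price model stops being cheaper on output once its responses are twice as long, so measuring tokens per response on your own prompts matters as much as the price list.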

Similarly, while GPT-4o offers lower costs per token, the total token usage per prompt might increase because of the model’s behavior (it tends to be "chattier" than GPT-4 Turbo), potentially offsetting some of the cost savings if prompts are not optimized effectively. Moreover, the integration and workflow adjustments required for GPT-4o may bring extra development and operational costs. Given the integration challenges discussed earlier, businesses may need to invest in optimizing their workflows and ensuring seamless integration with existing tools. These costs should be factored into the overall cost efficiency analysis.

Making an informed decision

After understanding the detailed performance and cost metrics of both GPT-4 Turbo and GPT-4o, it’s crucial to make an informed decision that aligns with your specific needs and objectives. This involves a holistic assessment of your business requirements, cost implications, and performance requirements. Here are key factors to consider:

- Throughput and latency needs: If your application requires high throughput and low latency, GPT-4o’s faster response times and higher rate limits may be beneficial. This is particularly important for real-time applications like chatbots and virtual assistants. However, it's important to monitor the performance of GPT-4o over time. Our tests indicate that GPT-4o's performance may degrade with increased usage, which could impact its reliability for long-term projects. For consistent performance, companies might eventually need to consider moving to GPT-4o PTU, which may offer more stable and reliable performance.

- Quality of outputs: While both models provide high-quality outputs, consider the consistency of responses. GPT-4 Turbo may offer more predictable performance, which is critical for applications where uniform quality is essential.

- Integration with tools: If your workflow relies heavily on integrated tools and context-rich responses, evaluate how each model handles these integrations. GPT-4 Turbo’s ability to leverage existing tools might offer smoother workflow efficiency compared to GPT-4o.

- Cost: Compare the cost per million tokens for both input and output. GPT-4o is less expensive, but ensure that any potential increase in token usage per prompt does not offset these savings. Moreover, be mindful of additional costs associated with integration and workflow adjustments. Transitioning to GPT-4o may require changes to existing processes, which could incur development and operational expenses.

- Token efficiency: Consider the complexity and language diversity of your content. GPT-4o’s o200k_base tokenizer is more efficient for multilingual tasks, potentially reducing overall token usage and cost for non-English content.

- Rate limits and usage: GPT-4o’s higher rate limits can accommodate more frequent interactions, making it suitable for applications with high interaction volumes. This can ensure smoother performance under high demand.

- Scalability: Consider the long-term scalability of your application. GPT-4o’s cost efficiency and performance improvements might offer better scalability, but assess how these benefits align with your growth projections and resource availability.

Conclusion

Deciding between GPT-4 Turbo and GPT-4o requires a careful evaluation of your specific needs and goals. GPT-4o offers cost benefits and superior efficiency in multilingual contexts but may involve higher token usage and potential integration challenges. Monitoring its performance over time is crucial due to possible degradation. For stable, consistent performance, GPT-4 Turbo remains a reliable option. By assessing these factors, you can select the model that best aligns with your operational needs and ensures both optimal performance and cost efficiency.

Next steps

Stay ahead in AI innovation with New Relic. Sign up for free today and optimize your enterprise AI solutions with comprehensive AI monitoring tools from New Relic.

For more insights on AI performance and integration, explore these essential resources from New Relic:

- Evaluating generative AI performance: When your data is “anything”

- From ideation to launch: Using New Relic insights to develop and monitor your first generative AI chatbot

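The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. Please join us exclusively at the Explorers Hub (discuss.newrelic.com) for questions and support related to this blog post. This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve, or endorse the information, views, or products available on such sites.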