This post is adapted from a New Relic FutureTalk called Team Health Assessments: Why and How. Honey Darling, a senior software engineering manager for the New Relic Browser team, contributed to that talk and this post.

The New Relic product organization constantly strives to improve our practices. We want to develop a clear understanding of how we work every day, and what it means to have healthy teams. How can we make our organization a center of trust for 50+ engineering teams?

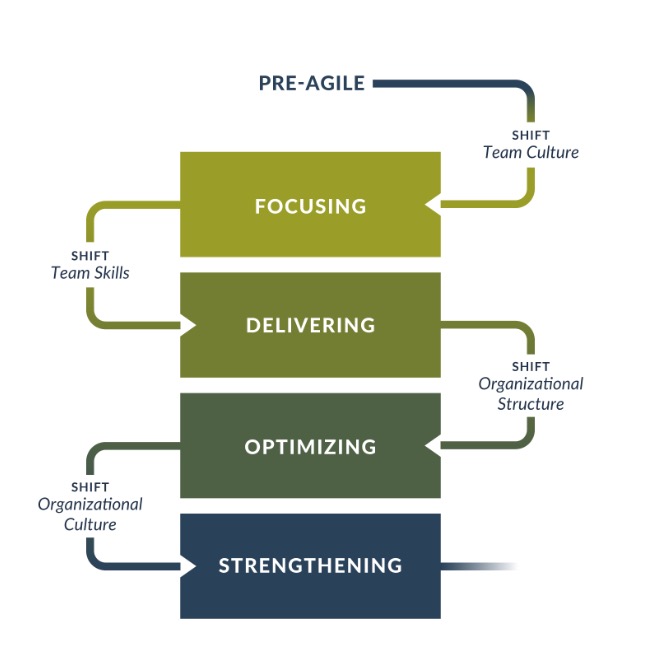

Two years ago during Project Upscale, we made a significant Agile transformation. With the help of Agile specialist Jim Shore, we learned a lot about our organization—which parts were working, which parts weren’t—and then we rewrote the rules. Among other things, we let our engineers self-select onto the teams they wanted to join. Our new org design was strongly influenced by ideas from Jim’s Agile Fluency Model, which is a part of the Agile Fluency Project.

Talking with folks in various leadership roles at New Relic, I heard a lot of stories about the successes and failures of this new model. But it was all anecdotes, not real data. We needed something more concrete if we wanted to understand what was going on in these new teams and drive the positive changes we wanted to make. At the same time I was having these conversations, the Agile Fluency Project released an agile practices diagnostic for teams, and the proverbial light bulb appeared: We needed to do team health assessments. This is the story of how we developed and implemented our assessments.

About the Agile Fluency Model

The Agile Fluency Model, created by Jim Shore and Diana Larsen, is a model designed to help you understand how far your organization has progressed on its Agile journey. You can also use the model to measure whether the Agile system is performing the way you want it to. During Project Upscale, we baked this model deep into our engineering practices.

From where I sit as a principal engineer and organizational architect, in a unique position between humans, technology, and organizational design, I find this model quite useful.

For Project Upscale, we focused on the Optimize Value level in the Agile Fluency Model. Our goal was to provide each team with the business and market context they needed to become self-directed and focus on delivering new business value for the entire organization.

The Team Health assessment, I thought, would help us understand how—or if—we were meeting this goal. We put together a team of six people known for having Agile chops and facilitation skills, and then we got to work.

Defining our standards

To start, I did some asynchronous collective brainstorming. I sat with everybody in the organization who was at the director level or higher and asked them a simple question: "What do you think makes a healthy team?" I filled up most of a notebook with some 250 different answers to that question, way too many responses to create standards for our assessment.

My core team took those 250 responses and boiled them down until we had 22 health standards that felt true and believable. We wanted everybody in our organization to believe in them, and we wanted them to project the standards of a healthy team.

Here's a high-level view of our health standards, broken down into six categories:

| Management | Software | Culture | Focus on value | Effective delivery | Self-determination |
|---|---|---|---|---|---|
| Our work is observable | Our software is maintainable | We are inclusive | We focus on business value | We deliver incrementally | We all pull in the same direction |
| We are set up for success | Our software is reliable | We have a culture of flexibility | We use feedback to improve | We deliver maintainable software | We solve customer problems |
| We have the right composition to deliver our vision | Our software is secure | We work effectively with each other | We work in priority order | We keep our inventory lean | We have a process that works |
| We understand our strategy and responsibilities | | We collaborate effectively with other groups | | We minimize operational overhead | We solve problems for ourselves |

The management, software, and culture categories are not part of the Agile Fluency Model. The other three categories are, although we expanded them to better meet the needs of our organization.

Each standard has multiple indicators (usually 2-4) associated with it. For example:

| Theme | Standard | Indicator |
|---|---|---|
| Healthy software | Our software is maintainable | Team is fluent in software stacks of all services and libraries it owns |
| Healthy software | Our software is maintainable | Team uses and improves New Relic tools and frameworks |
| Healthy software | Our software is maintainable | Team works to accept PRs from other teams |

After we settled on the standards, we had to go and build the assessment.

One spreadsheet to rule them all

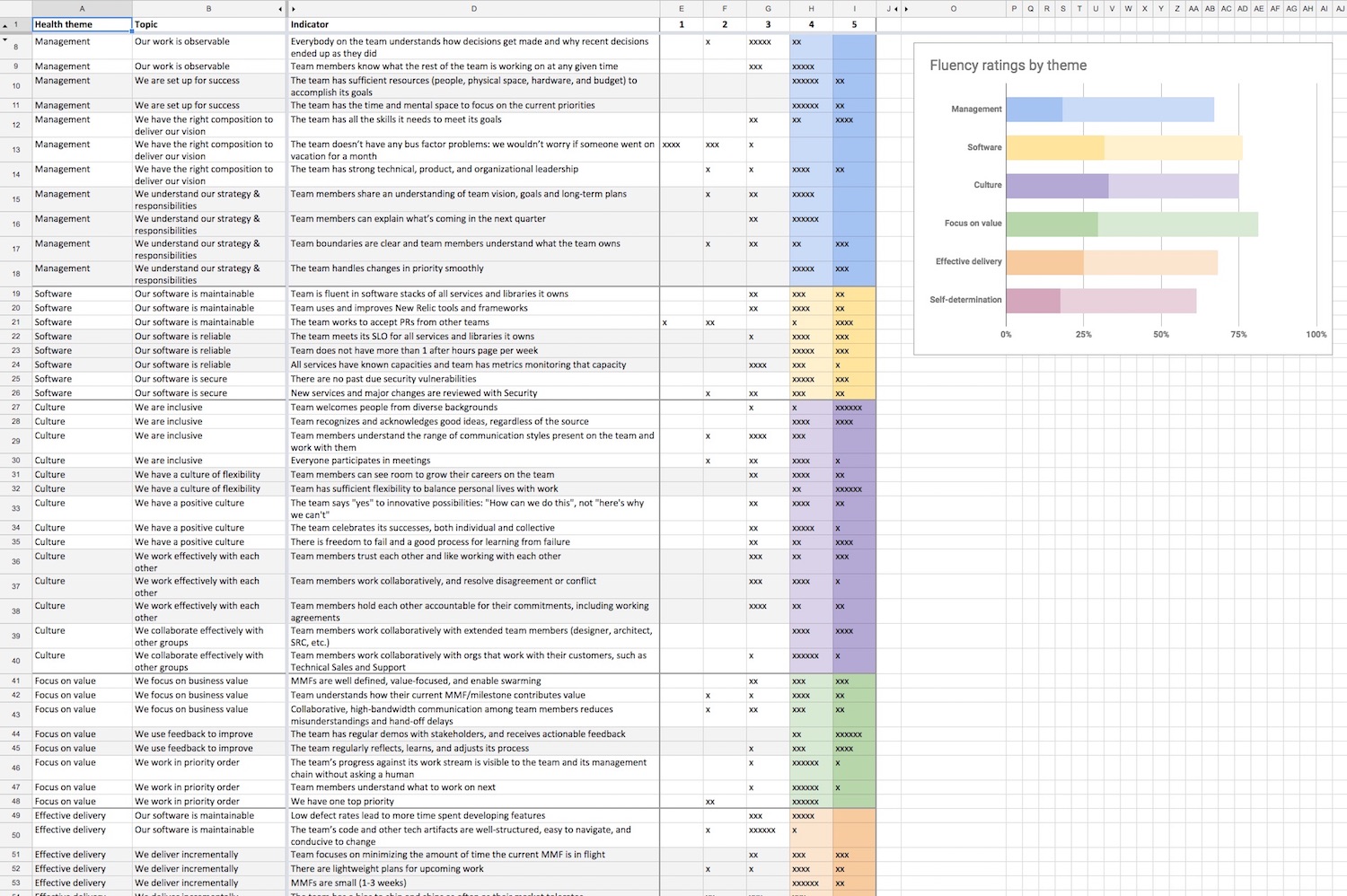

Yep, we used a good old Google spreadsheet—nothing tricky here. (Obviously, this is not the only method to track this. What worked for us may not work for you.)

The spreadsheet shows the health theme, the standard, and the indicator, and adds a scale on which individual team members can assess the indicator.

We asked each participant to answer, on a scale of 1 to 5, "How often is this indicator true for your team?" with 1 being never and 5 being often or always. Team members filled out their responses simultaneously, but independently.

After teams populated their answers, we sat with each team for a two-hour discussion about their scores, and looked at the results standard by standard. If we saw a pattern where the results were clustered too high or too low, we wanted to know why the team felt so good or bad about that particular standard. In situations where there was a giant spread in the assessments, we wanted to know if team members were perceiving reality differently from one another.
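As a rough illustration of the patterns we looked for, the sketch below flags an indicator whose responses cluster high, cluster low, or spread widely. The function name `flag_indicator`, the thresholds (a mean of 4 or above, a mean of 2 or below, a range of 3 or more), and the sample responses are all assumptions made up for this example. In practice, facilitators read these patterns straight from the spreadsheet.

```python
from statistics import mean


def flag_indicator(scores, high=4.0, low=2.0, wide_spread=3):
    """Classify one indicator's 1-5 responses from a single team.

    The thresholds are illustrative assumptions, not values from the
    original assessment.
    """
    if not scores:
        raise ValueError("need at least one response")
    spread = max(scores) - min(scores)
    if spread >= wide_spread:
        # Team members may be perceiving reality differently.
        return "wide spread"
    avg = mean(scores)
    if avg >= high:
        return "clustered high"
    if avg <= low:
        return "clustered low"
    return "no clear pattern"


# Hypothetical responses from a six-person team.
responses = {
    "Team works to accept PRs from other teams": [5, 4, 5, 4, 5, 4],
    "Team uses and improves New Relic tools and frameworks": [1, 5, 2, 4, 3, 5],
    "Team is fluent in software stacks of all services and libraries it owns": [2, 1, 2, 2, 1, 2],
}

flags = {indicator: flag_indicator(scores) for indicator, scores in responses.items()}
for indicator, flag in flags.items():
    print(f"{flag:>16}: {indicator}")
```

Checking the spread before the mean is a deliberate choice in this sketch: a team split between 1s and 5s can still average out to a middling score, and that disagreement is the more useful thing to discuss.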

As we conversed with teams, their members could go back to the spreadsheet and change their responses. In some cases people hadn’t fully understood a question, or they gained new context or insight during the discussion that led them to want to change their responses. This wasn’t a quiz; the questions were there to drive discussion and response, so we encouraged folks to change their answers if they wanted.

An important consideration during the assessment was to respect the confidentiality of the teams. In order to make them feel safe and free to share their opinions about their challenges and their strengths, it was really important that we maintain a certain level of confidentiality with the work that we were doing. To this end, managers weren't present in the assessment process, but they did fill out the same assessment their team members did. During the conversation, the facilitator recorded copious notes that we kept anonymous. Our goal was to understand what was being said or discussed but not to track who was saying it. These notes were shared with the manager after the assessment was complete.

The backchannel period

We built in a two-day waiting period before we turned over the results to team managers, so team members could approach a facilitator about results in confidence. We called this the backchannel period.

Sometimes this information was used to change the results in the spreadsheet, or to amend the facilitator’s notes. Sometimes it was delivered to the manager verbally. And sometimes it was nothing more than helpful context.

Whether or not we used the information, we felt it was important to provide this avenue for feedback, and to ensure the assessments were a safe space for people to speak up. The hardest problems to discuss are frequently the most important ones to solve, and we need honesty and transparency for the process to work.

Team health plans: an overview

After we gathered the responses from each team, we asked them to put together a health plan for themselves. Each team’s plan needed to address four areas:

- What did the team believe to be its strengths?

- What did the team believe to be its weaknesses?

- What did the team need assistance to complete? (For example, what things did the team need investment from the larger organization in order to accomplish?)

- What 2-5 things, within its control, would the team do to improve its health?

Team health plans: a closer look

To see how it all played out, let’s take a closer look at the New Relic Browser team. Composed of six engineers, a product manager, and a designer, this team’s mission is to help New Relic customers understand the performance of their websites.

When the Browser team sat down to start their plan, they began with a series of brainstorming sessions looking to identify their strengths, areas for improvement, goals they wanted to accomplish, and the action items they could walk away with. The goal: to get a better score in their next team health assessment.

In terms of strengths, the Browser team felt they had really high standards for themselves and were constantly improving and seeking to be very intentional about everything they did. In their own words:

- We always support each other's ideas. We listen to each other, and we pay attention. We make sure that everyone on the team has a chance to contribute to our discussions.

- Our work/life balance is extremely important to us. One of the things we've worked really hard on is being able to work from home and to make that experience better.

- We always step in for each other at a moment's notice. We notice when others are feeling overwhelmed, and we provide cover where it’s needed.

One of their weaknesses, however, was what they called “the lottery factor”: folks could get scared when someone went on vacation, as that meant the team would have to pick up slack, maybe in an area they weren’t fully proficient in, or risk velocity dips.

To that end, they knew they needed to remove their knowledge silos and increase confidence on the team so that team members could work in all of the areas of their systems. To gain expertise on the product—and as a team—they knew they needed to own every piece of the product together.

The team also wanted to get closer to their customers. They were already highly collaborative, and this stretched into a desire to also collaborate with the users they were building the product for. For example, what technologies were their customers using to build their websites? How could they use this knowledge to stay “one step ahead” of their users’ needs? Being closer to the product and customers would also help new team members more quickly understand who their customers were and what those customers needed from them.

The Browser team’s main action item was that they wanted to move faster. More than just speeding up, though, that meant doing the right thing at the right time for their customers. But how could they measure this?

They’ve tried tracking all kinds of things—story points, minimum marketable features (MMF), and project burndowns (to name a few). Measuring how fast they’re going has been a real challenge because it's not just about how fast they code—it’s about how fast they’re adding value and how fast they’re learning.

The team is evolving so quickly that they haven’t figured out the best way to measure their speed just yet. They’re still a work in progress, but they definitely plan on improving for the next assessment.

So, what did we learn?

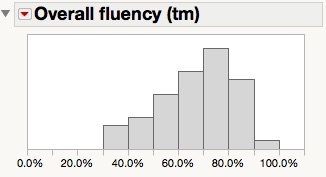

Here is a histogram of overall fluency: for each team, the percentage of indicators that scored 4 or 5. A higher percentage indicates a healthier team.

Two teams score above 90% while the center of gravity is near 70%. This means most teams feel they’re doing pretty well. But some groups are having a harder time—and they’re going to need some help.
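To make the fluency number concrete, here is a minimal sketch of how a per-team percentage and its histogram bucket could be computed. It assumes each indicator has already been reduced to a single score per team (for example, a median of the individual responses), which is our assumption for this example. The team names, scores, and the helper names `fluency_percent` and `histogram` are hypothetical.

```python
from collections import Counter


def fluency_percent(indicator_scores, healthy_at=4):
    """Percentage of a team's indicators that scored `healthy_at` or above."""
    if not indicator_scores:
        raise ValueError("need at least one indicator score")
    healthy = sum(1 for score in indicator_scores if score >= healthy_at)
    return 100 * healthy / len(indicator_scores)


def histogram(percentages, bucket_width=10):
    """Count teams per bucket; a team at 100% lands in the top bucket."""
    top = 100 - bucket_width
    return Counter(
        min(int(p // bucket_width) * bucket_width, top) for p in percentages
    )


# Hypothetical data: one consolidated score per indicator for each team.
teams = {
    "team-a": [5, 4, 4, 5, 3, 4, 5, 4, 4, 5],  # 9 of 10 indicators healthy
    "team-b": [4, 3, 4, 2, 5, 4, 3, 4, 4, 4],  # 7 of 10 indicators healthy
    "team-c": [2, 3, 4, 3, 2, 4, 3, 5, 2, 4],  # 4 of 10 indicators healthy
}

percentages = {name: fluency_percent(scores) for name, scores in teams.items()}
buckets = histogram(percentages.values())
for start in sorted(buckets):
    print(f"{start:3d}-{start + 10:3d}%: {'#' * buckets[start]}")
```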

A good portion of our analysis, though, came from reviewing the notes we took during the assessment conversations. We looked for repeating patterns, and mapped them back on to the teams. We ended up with 35 “common findings” that cropped up on five or more teams. These findings included factors in which teams wanted more training, felt they owned too much technology, or indicated that the overall product organization could improve our recognition processes. We were able to cluster several of the common findings into overarching themes to help drive our progress in our organization. Here are two of those major themes, with their common findings:

| Idea flow | Feedback and connection |
|---|---|
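The "five or more teams" cutoff for a common finding is simple to express in code. The sketch below assumes each team's facilitator notes have already been tagged by hand with short finding labels. The labels, team names, and the `common_findings` helper are hypothetical, and the real analysis was a manual review of the notes.

```python
from collections import defaultdict


def common_findings(notes_by_team, min_teams=5):
    """Return the findings that were tagged on at least `min_teams` teams."""
    teams_per_finding = defaultdict(set)
    for team, findings in notes_by_team.items():
        for finding in findings:
            # A set ensures a finding counts once per team.
            teams_per_finding[finding].add(team)
    return {
        finding: sorted(teams)
        for finding, teams in teams_per_finding.items()
        if len(teams) >= min_teams
    }


# Hypothetical tags pulled from anonymized assessment notes.
notes = {
    "team-1": ["wants more training", "owns too much technology"],
    "team-2": ["wants more training"],
    "team-3": ["wants more training", "recognition could improve"],
    "team-4": ["wants more training", "owns too much technology"],
    "team-5": ["wants more training", "recognition could improve"],
    "team-6": ["owns too much technology"],
}

common = common_findings(notes)
for finding, teams in common.items():
    print(f"{finding}: {len(teams)} teams")
```

Counting distinct teams instead of raw mentions keeps one vocal team from turning a local concern into an organization-wide finding.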

We took this data “on the road,” sharing it with engineering and product management leadership, and eventually with the product organization overall. Occasionally, we had to restrain those who saw the data and wanted to push for too many immediate changes too quickly. We worried that if we overloaded our system with too much change too quickly, we risked watching it fall over in “very interesting ways.” Plus, teams were already planning to execute their health plans to make local changes to address many of these issues. That’s the kind of change we’re pumping through the system right now, and it’s still very much a work in progress.

What’s next?

So far we’ve done one health assessment, so we’ve got one set of data points. That’s fantastic, but we need a second set. And a third and fourth and fifth and sixth. Currently, our plan is to roll out this assessment every six months. Over time, we will create deltas for each team so they can see where they are improving or losing ground. And, since we’re good Agile practitioners, we’re going to retrospect every time.
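Since we only have one round of data so far, the following is just a sketch of what a per-team delta could look like once a second round exists. It assumes each assessment is summarized as an average score per standard. The scores and the `standard_deltas` helper are hypothetical.

```python
def standard_deltas(previous, current):
    """Per-standard change in score between two assessments of one team.

    Only standards present in both rounds are compared, because the
    standards themselves may be revised between assessments.
    """
    shared = previous.keys() & current.keys()
    return {
        standard: round(current[standard] - previous[standard], 2)
        for standard in sorted(shared)
    }


# Hypothetical average scores for one team, six months apart.
previous = {
    "Our software is maintainable": 3.4,
    "We deliver incrementally": 4.1,
    "We keep our inventory lean": 3.0,
}
current = {
    "Our software is maintainable": 3.9,
    "We deliver incrementally": 3.8,
    "We are inclusive": 4.5,
}

deltas = standard_deltas(previous, current)
for standard, change in deltas.items():
    trend = "improving" if change > 0 else "losing ground" if change < 0 else "steady"
    print(f"{standard}: {change:+.1f} ({trend})")
```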

Just as important, we’re going to be flexible and revise our standards based on what we learn. We’re going to continually turn the crank on this project to make it better and better. That’s our goal: continuous organizational improvement through data.

For more about our team health assessment, watch the full video of our FutureTalk:
