Apache Kafka is a building block of many cloud architectures. It’s heavily used to move large amounts of data from one place to another because it’s performant and provides good reliability and durability guarantees. Processing large amounts of data in the cloud can easily become one of the most expensive items on your cloud provider bill. Apache Kafka provides many configuration options to reduce that cost, both at the cluster and client level, but finding the proper values and optimizations can be overwhelming. New Relic is a heavy user of Apache Kafka and has previously published several blog posts on the topic, such as "20 best practices for Apache Kafka at scale." In this article, we’ll expand on those insights, using monitoring to highlight ways to tune Apache Kafka and reduce cloud costs.

If you can't measure it, you can't improve it

The first step to optimizing costs is to understand them. Depending on how Apache Kafka is deployed and which cloud provider is used, cost data is exposed in different ways. Let’s use Confluent Cloud as an example. Confluent Cloud is a managed service available in the main cloud providers, and it provides a billing API that returns costs aggregated by day. The API is a great first step, but once that data is imported into New Relic, you can follow how costs evolve over time and even use anomaly detection with applied intelligence, with proper alerts and notifications. Nobody likes unexpected cloud costs.
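To make the idea of following daily costs concrete, here is a minimal sketch in Python. It assumes the billing data has already been fetched and flattened into records with hypothetical `date`, `category`, and `amount` fields (these names and the sample values are illustrative, not the Confluent Cloud response format), and it uses a naive day-over-day threshold as a stand-in for real anomaly detection.

```python
from collections import defaultdict


def daily_totals(records):
    """Sum the cost of all records per day, returned in chronological order."""
    totals = defaultdict(float)
    for record in records:
        totals[record["date"]] += record["amount"]
    # ISO dates sort chronologically when sorted as strings.
    return dict(sorted(totals.items()))


def cost_spikes(totals, threshold=1.5):
    """Return the days whose total exceeds `threshold` times the previous day."""
    days = list(totals)
    return [
        day
        for previous, day in zip(days, days[1:])
        if totals[day] > threshold * totals[previous]
    ]


# Hypothetical sample records, for illustration only.
records = [
    {"date": "2024-01-01", "category": "network", "amount": 100.0},
    {"date": "2024-01-01", "category": "storage", "amount": 50.0},
    {"date": "2024-01-02", "category": "network", "amount": 110.0},
    {"date": "2024-01-02", "category": "storage", "amount": 50.0},
    {"date": "2024-01-03", "category": "network", "amount": 300.0},
    {"date": "2024-01-03", "category": "storage", "amount": 50.0},
]

totals = daily_totals(records)
spikes = cost_spikes(totals)
```

A fixed threshold like this is only useful as a first check; a trained baseline handles weekly patterns and gradual growth far better.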

A popular way to send data to New Relic is New Relic Flex. It’s an application-agnostic, all-in-one tool that allows you to collect metric data from a wide variety of services, and it’s bundled with the New Relic infrastructure agent. New Relic Flex can scrape the Confluent billing API every 24 hours and send the data to New Relic using a YAML file with the configuration. Afterwards, you can use all the New Relic features to explore your Apache Kafka costs in the cloud.

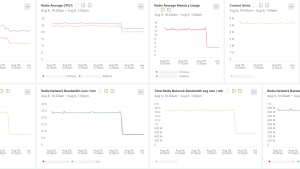

The image above shows the Confluent Cloud dashboard in New Relic.

If you’re using any other provider, the process is similar but with different APIs. If you’re using self-managed Kafka in the cloud, it may require some proper integration and resource tagging to differentiate the Kafka costs from other services. In any case, there are many similarities between the different approaches. Many of the optimizations apply to all of them. The Apache Kafka costs are divided into the following categories:

- Networking: The amount of traffic between brokers or between brokers and clients.

- Storage: The size, I/O throughput, and number of disks required to operate.

- Server hardware: CPU/RAM or a high-level representation like the number and type of instances.

Networking in the cloud: high impact but usually forgotten

Networking isn’t the usual bottleneck in Kafka when operating in the cloud, so many engineers don’t optimize their services to minimize it. That’s a mistake because it can have a huge impact on costs.

The first step is understanding how services are connected to the Kafka clusters. New Relic provides networking performance monitoring, which helps to identify all the networking hops required to connect to Kafka. Some of the components can have a huge impact on the cloud provider bill. The cost difference between connecting to a service in the same network and connecting to a service in a different network (or region) is significant. Given the high-throughput capabilities of Apache Kafka, this can easily become the biggest cost of running the service.

It’s common to run Kafka across several availability zones to provide high availability. If there’s an outage in one availability zone, the service should keep working without interruption because there are still Kafka nodes (brokers) with copies of the data in the other zones. Unfortunately, traffic from one availability zone to another is expensive. There’s a client-side optimization that New Relic uses with great success across different flavors of Kafka: fetch from the closest replica.

The idea is to configure the services reading data to connect to Kafka nodes in the same availability zone whenever possible. This significantly reduces cross-AZ traffic and the cost associated with it. There’s still a cost for the services sending data and for internal data replication between nodes, but it’s a significant improvement. As usual, it’s a trade-off. Reading from a follower replica can increase the end-to-end latency of the data pipelines, because followers lag slightly behind the leader, so it’s important to measure how latency is affected. The New Relic Java agent already supports distributed tracing with Kafka, in addition to many other Kafka metrics, to measure the impact of such changes.
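As a rough sketch of what this looks like in practice, the Python snippet below builds the relevant settings as plain dictionaries of standard Kafka property names. Fetching from the closest replica (available since Apache Kafka 2.4) needs `client.rack` on the consumer and `broker.rack` plus `replica.selector.class` on the brokers. The helper names, the zone name, and the even-spread assumption in the traffic estimate are illustrative.

```python
def closest_replica_consumer_config(bootstrap_servers, group_id, availability_zone):
    """Consumer properties that let brokers route fetches to a same-zone replica."""
    return {
        "bootstrap.servers": bootstrap_servers,
        "group.id": group_id,
        # Must match the broker.rack value of the brokers in the same zone.
        "client.rack": availability_zone,
    }


# Broker-side properties (server.properties) required for the feature.
BROKER_SETTINGS = {
    "broker.rack": "<availability zone of this broker>",
    "replica.selector.class": "org.apache.kafka.common.replica.RackAwareReplicaSelector",
}


def cross_az_fetch_fraction(num_zones):
    """Expected share of fetch traffic crossing zones when consumers always
    read from the leader, assuming leaders and consumers are spread evenly."""
    return (num_zones - 1) / num_zones


config = closest_replica_consumer_config(
    "broker-1:9092", "billing-readers", "us-east-1a"  # hypothetical values
)
share_without_optimization = cross_az_fetch_fraction(3)
```

Under those assumptions, roughly two thirds of consumer fetch traffic crosses zones in a three-zone cluster when every read goes to the leader, which is the traffic this setting aims to remove.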

Distributed tracing for Kafka with the New Relic Java agent

In applications sending data to Apache Kafka, another optimization that makes a big difference is compression. Kafka producers send data in batches rather than as individual messages, and they can compress those batches before sending them. Kafka stores the batches compressed, and the consuming application decompresses them to process the messages. This reduces both the traffic on the wire and the storage required on the Kafka nodes. It also increases CPU usage in the services producing to and consuming from Kafka, but that’s usually a good trade-off.

It’s important to measure how compression affects the latency and throughput of your pipelines. The New Relic Java agent provides several metrics that help: outgoing-byte-rate shows changes in throughput, request-latency-avg shows changes in latency, and compression-rate-avg shows how well compression is working.

Apache Kafka supports several compression algorithms, and they behave differently depending on the type of data the applications send. It’s worth testing different algorithms to find the best one for each use case, keeping an eye on latency, throughput, and CPU usage in both producers and consumers.
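The effect of compressing batches instead of individual messages can be illustrated with a small Python experiment. This sketch uses gzip from the standard library (one of the codecs accepted by the producer setting `compression.type`) on hypothetical JSON messages. It doesn't reproduce Kafka's record batch format, so treat the numbers as indicative only.

```python
import gzip
import json


def compression_ratios(messages):
    """Return (per-message ratio, batched ratio) as compressed size / raw size.

    Lower is better, which mirrors how compression-rate-avg is defined.
    """
    raw_size = sum(len(m) for m in messages)
    per_message_size = sum(len(gzip.compress(m)) for m in messages)
    batched_size = len(gzip.compress(b"".join(messages)))
    return per_message_size / raw_size, batched_size / raw_size


# Hypothetical, fairly repetitive messages similar to typical telemetry.
messages = [
    json.dumps(
        {
            "service": "checkout",
            "host": f"host-{i % 5}",
            "status": 200,
            "duration_ms": i % 250,
        }
    ).encode()
    for i in range(500)
]

per_message_ratio, batched_ratio = compression_ratios(messages)

# Producer-side setting that enables compression of batches.
PRODUCER_COMPRESSION = {"compression.type": "gzip"}
```

Small messages compressed one by one barely shrink because each carries its own header and shares no context with the others. The batch benefits from the repetition across messages, which is why batching and compression work so well together.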

Storage: a simple way to increase the traffic with a minimal change

Capacity planning for Apache Kafka isn’t easy. There are many factors involved. One of them is storage throughput, also called disk I/O. In high-throughput pipelines, storage throughput can easily become the bottleneck. Adding more brokers mitigates this problem but also increases the costs. The good news is that it’s usually easy and inexpensive in the cloud to use faster disks.

If you’re self-managing Kafka, the infrastructure agent provides metrics to understand how close Kafka is running to the disk’s limits. If you’re using a managed service, the provider usually exposes similar metrics. Let’s use Amazon Managed Streaming for Apache Kafka (Amazon MSK), another popular Kafka service, as an example. New Relic has an integration for Amazon MSK available through the AWS CloudWatch Metric Streams integration.

Amazon MSK provides metrics like VolumeQueueLength, VolumeTotalWriteTime, VolumeWriteOps, VolumeWriteBytes, and the equivalents for reads, which show how saturated the disk is. If the disk is saturated, Amazon MSK allows you to increase the provisioned storage throughput easily, without re-creating the cluster or impacting the current traffic. This change improves reliability, shortens recovery times, and reduces the need to add more brokers, which lowers the overall cost of your pipelines. The same principle applies to self-managed clusters.

This type of optimization was a massive success for New Relic in the past. Increasing the disk I/O allowed the processing of 20% more traffic per cluster so New Relic could decommission the equivalent number of Kafka clusters. The cost of the disk I/O increase is significantly lower than the total cost of running a whole cluster, leading to significant savings.
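The arithmetic behind this kind of saving can be sketched as follows. The traffic and capacity figures are hypothetical and only illustrate the calculation; they are not New Relic’s actual numbers.

```python
def clusters_needed(total_traffic, capacity_per_cluster, throughput_gain_pct=0):
    """Smallest number of clusters able to carry `total_traffic`.

    `throughput_gain_pct` is the extra traffic each cluster can process after
    an upgrade such as faster disks. Integer math avoids rounding surprises.
    """
    boosted_capacity_x100 = capacity_per_cluster * (100 + throughput_gain_pct)
    # Ceiling division on integers.
    return -(-(total_traffic * 100) // boosted_capacity_x100)


# Hypothetical fleet: 60 units of traffic, 5 units per cluster.
before = clusters_needed(60, 5)
after = clusters_needed(60, 5, throughput_gain_pct=20)
```

With these inputs, the fleet goes from 12 clusters to 10. The saving is the cost of the two removed clusters minus the cost of the faster disks on the remaining ten.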

Server hardware performance

After networking and storage, the next item to optimize is the number of Kafka nodes (brokers). That number is almost always driven by CPU usage on the machines running Kafka. There are many ways to optimize CPU usage: select better-suited machine types, minimize the creation of compacted topics (they require extra work on the server side), reduce the replication factor (fewer copies of the data), and so on.

Interestingly, one of the configurations with a major impact on New Relic cloud costs isn’t a cluster setting but a client one. Apache Kafka clients usually don’t send individual messages to the server; they group them in batches. This introduces some latency, but it’s more efficient at the network level. Also, compression works much better with batches than with individual messages.

By default, the Apache Kafka client configurations aren’t efficient for batching. Many batches have a small number of messages and a small size. Because of that, applications sending data to Kafka send many more requests. The more requests that are received by Kafka brokers, the higher the CPU needed to process them.

If the producers use the New Relic Java agent, the Kafka client metric batch-size-avg shows the average batch size, and the request-rate metric shows the average number of requests per second. It’s possible to configure the applications to send bigger batches. For example, New Relic internally recommends setting linger.ms to 100 ms. The producer then waits up to 100 ms for more messages before sending a batch to the cluster, resulting in bigger batches and fewer requests to the brokers. Making this change in just one application at New Relic reduced CPU usage in the cluster by 15%, which let us scale the clusters down to fewer brokers with significant savings in cloud costs.
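To see why a longer linger time reduces the request rate, here is a deliberately simplified Python model. It assumes a single partition, a hypothetical steady arrival of one message every 10 ms, and a batch that is sent as soon as the linger time has elapsed. A real producer also sends a batch when it fills up and can still batch with `linger.ms` set to 0 under load, so this only illustrates the trend.

```python
def count_requests(arrival_times_ms, linger_ms):
    """Count the batches (requests) sent when each batch stays open for
    `linger_ms` after its first message arrives."""
    requests = 0
    batch_opened_at = None
    for arrival in arrival_times_ms:
        if batch_opened_at is None or arrival - batch_opened_at >= linger_ms:
            # The previous batch has been sent; this message opens a new one.
            requests += 1
            batch_opened_at = arrival
    return requests


# One message every 10 ms during one second (hypothetical workload).
arrivals = list(range(0, 1000, 10))

requests_without_linger = count_requests(arrivals, linger_ms=0)
requests_with_linger = count_requests(arrivals, linger_ms=100)

# Producer-side setting discussed in the text.
PRODUCER_BATCHING = {"linger.ms": 100}
```

In this model, the same 100 messages go out in 10 requests instead of 100, at the price of up to 100 ms of added latency per message.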

Kafka metrics widget with the Java Agent versions 8.1.0 or higher

Kafka showbacks

Apache Kafka is an amazing piece of software in terms of performance. Part of how it accomplishes that is by giving more responsibility to the applications using it. This works really well, but it also makes capacity planning and governance harder. The previous case with the size of batches is a good example. There’s no easy way to force users to do the right thing and tune their applications for optimal CPU usage in the clusters.

At scale, this can lead to easily avoidable cloud costs. An interesting approach to solving this problem is to use showbacks or chargebacks. The first step is to have proper monitoring at three different levels:

- Billing/costs: For example, using New Relic Flex.

- Kafka clusters:

  - Using the infrastructure agent and the on-host integration for Kafka for self-managed Kafka clusters.

  - New Relic integrations for Kafka managed services like the New Relic integration for Amazon MSK or the New Relic integration for Confluent Cloud.

- Kafka clients: For example, using the New Relic Java agent.

With the monitoring in place, the idea is to assign the cost of every component (network, storage, server hardware) to teams using Kafka. This is an example implementation:

- Cost of networking: Assigned to teams based on the throughput consuming/producing to Kafka.

- Cost of storage: Assigned to teams based on retention configuration and client throughput. Unused storage is required to deal with traffic spikes. The amount of unused storage is defined by the team owning the cluster. It should be assigned to that team to ensure it’s adequately sized.

- Server hardware (CPU): Assigned to teams based on the number of requests made to Kafka brokers. Unused CPU shouldn’t be assigned to teams, so it’s possible to identify capacity planning issues.
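The allocation rules above can be sketched as a small Python function. The team names, cost figures, and usage inputs are hypothetical, and using stored bytes as the storage key is an assumption standing in for "retention configuration and client throughput."

```python
def split_proportionally(cost, usage_by_team):
    """Split `cost` across teams in proportion to their usage."""
    total_usage = sum(usage_by_team.values())
    return {team: cost * usage / total_usage for team, usage in usage_by_team.items()}


def showback(costs, usage, storage_used_fraction, cpu_used_fraction, cluster_owner):
    """Return (bill per team, unassigned CPU cost) following the rules above."""
    bill = {}

    def charge(shares):
        for team, amount in shares.items():
            bill[team] = bill.get(team, 0.0) + amount

    # Networking: split by produced/consumed bytes.
    charge(split_proportionally(costs["network"], usage["bytes"]))

    # Storage: used part goes to teams, unused headroom to the cluster owner.
    used_storage = costs["storage"] * storage_used_fraction
    charge(split_proportionally(used_storage, usage["stored_bytes"]))
    charge({cluster_owner: costs["storage"] - used_storage})

    # CPU: only the used part is split by request count; the rest stays
    # unassigned so capacity planning issues remain visible.
    used_cpu = costs["cpu"] * cpu_used_fraction
    charge(split_proportionally(used_cpu, usage["requests"]))
    return bill, costs["cpu"] - used_cpu


# Hypothetical monthly figures.
costs = {"network": 1000.0, "storage": 400.0, "cpu": 800.0}
usage = {
    "bytes": {"checkout": 3, "search": 1},
    "stored_bytes": {"checkout": 1, "search": 1},
    "requests": {"checkout": 1, "search": 3},
}
bill, unassigned_cpu = showback(costs, usage, 0.5, 0.75, cluster_owner="platform")
```

In this example the search team sends less data but makes three times as many requests, so it carries most of the CPU cost. That is exactly the signal that prompts a team to review its batching configuration.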

With this approach, it’s easier to enforce good practices and ensure teams are using Kafka resources efficiently. Teams can review Kafka showbacks periodically to identify anomalies or potential optimizations. It’s also possible to combine anomaly detection with applied intelligence (with proper alerts and notifications) and change tracking to identify how new developments affect cloud costs.

Deployment change tracking in New Relic

Summary

Apache Kafka is a great technology for moving large amounts of data in a fast and reliable way. It offers many configuration options to improve performance and reduce cloud costs. Although it can be challenging to identify those optimizations and measure how they will affect existing services and pipelines, adding proper monitoring to the Kafka servers and clients smooths that process. Automating the correlation between billing data, Kafka metrics, and users provides the basis for a showback or chargeback strategy that ensures the long-term optimization of cloud costs.

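The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. Please join us exclusively at the Explorers Hub (discuss.newrelic.com) for questions and support related to this blog post. This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve, or endorse the information, views, or products available on such sites.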