If you’ve been following this blog series, you’ll notice that many of the topics share a common theme: observing the health of software and the infrastructure behind it. This post focuses on a less traditional use case, data pipeline observability, and on how you can use platforms like New Relic to improve your practices in that area.

A bit of background

It’s no secret that in any modern digital business, data is king. It’s often at the core of the decision-making process. At New Relic, a subset of our data is even more vital because it’s the basis for the invoices we generate: in 2020, New Relic switched from a license-based purchasing model to a consumption-based model. While consumption-based pricing is not a new concept, the shift continues to have a large impact on our own data pipeline observability practices. This is especially true for our usage pipeline, which tracks billable usage for each customer. Accuracy is critical here because every invoice must be correct, and with thousands of invoices going out every month, it’s simply not possible to manually verify the usage for each one.

Below is a framework for thinking about how to do data pipeline observability well. While it stems from our own journey in this space, the points raised should be applicable to other contexts.

1. Know your goals

A good starting point when approaching this domain is to ask yourself: What do I want to achieve with data pipeline observability? There are some general goals which can apply to most pipelines: monitoring for SLA attainment, alerting on errors, watching for specific traffic patterns or outliers, and so on. But goals can also be specific to your business. In our case, we have two primary goals:

- Ensure we capture billable usage accurately and invoice based on that.

- Ensure the usage pipeline has automated data quality checks.

2. Know your data

This may seem like an obvious suggestion, but truly understanding your data is an often overlooked step. When you take time to do this, it can help reveal where you need to make investments in order to achieve your goals. Some questions to explore as you dive in include:

- What is the shape of the data? What’s its schema and does it have all of the attributes needed to meet your goals? Or do you need to enrich it with more data sources?

- What is the volume of data? How many data points per second are your systems processing? Are there spikes in volume?

If you don’t know or don’t have good answers to these questions, one easy way to start is by using custom events and/or custom metrics to instrument your data processing pipelines. By doing this you can then easily write New Relic Query Language (NRQL) queries that will help you get baseline answers to these questions.
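As a starting point, here is a minimal sketch of what reporting a custom count metric could look like. It only builds the JSON body for New Relic's Metric API and does not send it. The metric name `pipeline.recordsProcessed`, the attribute names, and the helper function are hypothetical, and the endpoint shown is assumed to be the US-region one, so check the current API documentation before relying on it.

```python
import json
import time

# Assumed US-region Metric API endpoint; verify against the current docs.
METRIC_API_URL = "https://metric-api.newrelic.com/metric/v1"


def build_count_metric_payload(name, value, interval_ms, attributes, timestamp_ms=None):
    """Return a Metric API payload holding a single count metric.

    A count metric describes how many times something happened during
    the interval that starts at `timestamp_ms` and lasts `interval_ms`.
    """
    if timestamp_ms is None:
        timestamp_ms = int(time.time() * 1000)
    return [{
        "metrics": [{
            "name": name,
            "type": "count",
            "value": value,
            "timestamp": timestamp_ms,
            "interval.ms": interval_ms,
            "attributes": attributes,
        }]
    }]


payload = build_count_metric_payload(
    name="pipeline.recordsProcessed",  # hypothetical metric name
    value=1250,
    interval_ms=60_000,
    attributes={"stage": "enrichment", "environment": "production"},  # hypothetical
    timestamp_ms=1_700_000_000_000,
)
body = json.dumps(payload)
# To report the metric, POST `body` to METRIC_API_URL with the headers
# "Content-Type: application/json" and "Api-Key: <your license key>".
```

Attributes such as `stage` and `environment` are what make the later NRQL filtering and faceting possible, so it is worth deciding on them early.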

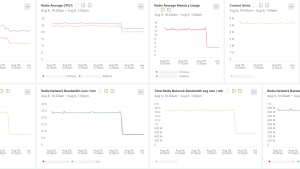

In our case, one of the many items we track in our pipeline is the number of usage events produced by product teams. These events represent the “raw” usage amounts collected by an individual service or API, and serve as the main input to our pipeline. In order to facilitate consistency, we built an internal library that all product teams use to produce these raw events. This library not only standardizes the usage event payload, but it also provides functionality for reporting custom metrics about the events produced. These custom metrics allow us to write NRQL queries like this:

FROM Metric SELECT sum(usageClient.eventsEmitted) WHERE environment = 'production' SINCE 30 days ago TIMESERIES LIMIT MAX

This specific query will generate a graph like the following, giving us insight into any macro-level deviations in the trend (we also set up alert conditions on this data).

Another use for this type of data is capacity planning. Hypothetically, if you know that X million events per hour require Y Kafka brokers, then you can reasonably forecast how many additional brokers you need if the event rate increases by a certain amount.
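The arithmetic behind that kind of forecast can be sketched as follows. This assumes broker capacity scales linearly with event rate, which is a simplification, and all of the numbers and the `brokers_needed` helper are made up for illustration.

```python
import math


def brokers_needed(events_per_hour, events_per_hour_per_broker, headroom=0.2):
    """Estimate the broker count for an event rate, keeping spare capacity.

    Assumes throughput scales linearly with the number of brokers.
    `headroom` is the fraction of extra capacity to reserve for spikes.
    """
    if events_per_hour_per_broker <= 0:
        raise ValueError("events_per_hour_per_broker must be positive")
    required = events_per_hour * (1 + headroom) / events_per_hour_per_broker
    return math.ceil(required)


# Hypothetical baseline: 6 brokers comfortably handle 120 million events/hour.
current_rate = 120_000_000
per_broker = current_rate / 6

# What if the event rate grows by 50% and we want 20% headroom?
projected_rate = current_rate * 1.5
current_brokers = brokers_needed(current_rate, per_broker, headroom=0.0)
projected_brokers = brokers_needed(projected_rate, per_broker, headroom=0.2)
additional_brokers = projected_brokers - current_brokers
```

In this made-up scenario the estimate rises from 6 to 11 brokers. The baseline event rate is exactly the kind of number the query above can supply.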

3. Start small

If you’re in the beginning phases of creating a new data pipeline, the best thing you can do is build in observability from the start. However, if you have an established pipeline, then a good approach is to start small.

A typical data processing pipeline may have several stages between its input and output.

Rather than attempting to instrument the entire thing at once, focus on smaller, high value areas first. Some things to consider during prioritization include:

- Known areas of potential instability. For example, if you know that a particular stage has the potential to be unstable (due to code, infrastructure, etc.), consider instrumenting it first.

- Areas of highest business impact. For example, if a specific stage calculates a business-critical metric, that may be a good candidate to prioritize.

Once you have identified where to focus your efforts, you can use the aforementioned custom events/metrics APIs, as well as any of New Relic’s instant observability quickstarts to bring your observability to life.
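To make "start small" concrete, here is one possible sketch of instrumenting a single stage without touching the rest of the pipeline. The decorator, the in-memory `stage_metrics` store, and the `drop_empty` stage are all hypothetical. In a real setup the counters would be forwarded as custom events or metrics rather than kept in a dictionary.

```python
import time
from collections import defaultdict
from functools import wraps

# In-memory stand-in for a metrics client (hypothetical).
stage_metrics = defaultdict(
    lambda: {"calls": 0, "records_in": 0, "records_out": 0, "errors": 0, "seconds": 0.0}
)


def instrumented(stage_name):
    """Wrap a stage that maps a list of records to a list of records."""
    def decorator(func):
        @wraps(func)
        def wrapper(records):
            stats = stage_metrics[stage_name]
            stats["calls"] += 1
            stats["records_in"] += len(records)
            start = time.perf_counter()
            try:
                result = func(records)
            except Exception:
                stats["errors"] += 1
                raise
            finally:
                # Record elapsed time whether the stage succeeded or failed.
                stats["seconds"] += time.perf_counter() - start
            stats["records_out"] += len(result)
            return result
        return wrapper
    return decorator


@instrumented("drop_empty")
def drop_empty(records):
    """Hypothetical stage: discard records with a non-positive amount."""
    return [r for r in records if r["amount"] > 0]


kept = drop_empty([{"amount": 5}, {"amount": 0}, {"amount": 3}])
```

Comparing `records_in` with `records_out` per stage is often enough to spot a stage that silently drops or duplicates data.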

4. Look for quick wins

While advanced data pipeline observability techniques exist (for example, artificial intelligence [AI] and machine learning [ML]), don’t underestimate the value of implementing simpler ones that can deliver quick wins. One that we use internally is to periodically send sample data through our entire usage pipeline and then check the transformed output for correctness. Below is an illustration of what this technique can look like in practice.

Expanding on the sample pipeline diagram from above, you can create a process that produces an input (X) on some interval, then use New Relic to compare the actual output against the expected value (Y). You can further automate this monitoring by creating alert conditions that notify various channels when input X does not have output Y.

While this doesn’t tell you where an issue exists within the pipeline, it serves as a good baseline signal to verify the pipeline is functioning (or not functioning) normally. At New Relic, we have one of these simple, holistic data checks for each measurement we bill on. If an input doesn’t match the expected output, the platform immediately pages the appropriate team(s) to investigate.
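A stripped-down version of this kind of check might look like the sketch below. It is not our internal implementation: `toy_pipeline` stands in for a real pipeline, and the account ID, measurement name, and sample values are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CheckResult:
    measurement: str
    expected: int
    actual: int

    @property
    def passed(self) -> bool:
        return self.actual == self.expected


def toy_pipeline(events):
    """Stand-in for the real pipeline: total GB per account."""
    totals = {}
    for event in events:
        totals[event["account"]] = totals.get(event["account"], 0) + event["gb"]
    return totals


def run_synthetic_check(pipeline, sample_input, account, expected, measurement):
    """Send known input X through the pipeline and compare with expected output Y."""
    output = pipeline(sample_input)
    actual = output.get(account, 0)  # a missing account counts as a failure
    return CheckResult(measurement, expected, actual)


# Known input (X) and the output we expect for it (Y); values are invented.
SAMPLE_INPUT = [
    {"account": "synthetic-1", "gb": 2},
    {"account": "synthetic-1", "gb": 3},
]
result = run_synthetic_check(toy_pipeline, SAMPLE_INPUT, "synthetic-1", 5, "gbIngested")
```

Running the check on a schedule and reporting `result.passed` as a custom event or metric gives an alert condition something simple to evaluate.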

Conclusion

Data pipeline observability is a large domain with many complexities. While each pipeline brings with it a unique set of technical and business requirements, they all share at least one common theme—they need to be observed. We hope this post gives you a framework and some practical tips for implementing your own observability practices. We also hope it highlights how platforms like New Relic allow you to get started quickly and easily.

Next steps

Ready to take your data pipeline observability to the next level? Sign up with New Relic for free and ensure enterprise data program success through robust data pipeline observability.

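The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and are not part of the commercial solutions or support offered by New Relic. For questions and support related to this blog post, please join us exclusively at the Explorers Hub (discuss.newrelic.com). This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve, or endorse the information, views, or products available on such sites.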