Organizations are increasingly adopting an insights-driven approach. Data programs are expanding beyond their traditional boundary, which was once confined to enterprise reporting on business performance. Today, they embed actionable insights directly in business operations such as customer acquisition, expansion, retention and churn.

With more business-critical functions relying on the accuracy and completeness of data insights, it’s not enough to ensure that daily and weekly dashboards are published on time. Organizations must also ensure that the data egress pipelines feeding enterprise applications such as CRM, customer support and enterprise marketing solutions are running and delivering accurate, high-quality insights in a timely manner.

Business impact and data pipeline SLAs

Unreliable data pipelines pose a significant risk to crucial business operations, such as:

- Not having the right data to run marketing campaigns

- Customer churn when account teams fall short in customer meetings due to a lack of updated 360-degree views of customers

- Being unable to present next-best recommendations to customers

In response to these risks, businesses increasingly establish service level agreements (SLAs) on the datasets, dashboards, reports and actionable insights provided by the enterprise data warehouse (EDW). The business ramifications of SLA non-compliance are amplified in mature data programs and can carry substantial financial implications.

What is data pipeline observability and how does it work?

Data pipeline observability refers to the ability to track, monitor and alert on the status of data pipelines and data quality.

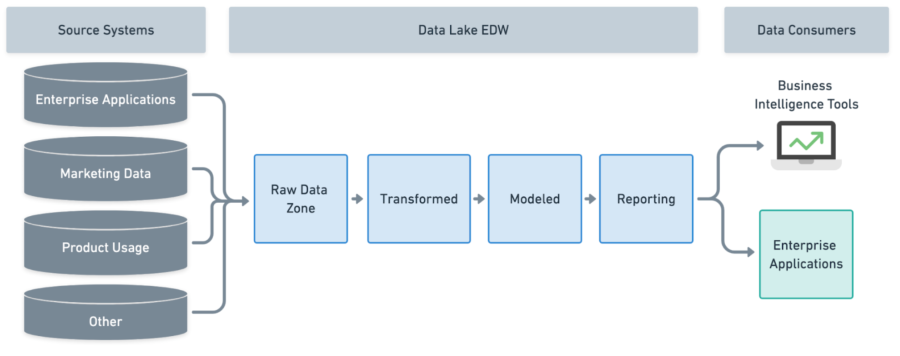

Data pipelines span the end-to-end process, from ingesting data from source systems to making it available for reporting or for use in business operations. The stages include: data ingestion from source to EDW; refining, standardizing, or normalizing the raw data; data modeling; and finally reporting on the datasets.

When data fails, knowing where the issue sits in the end-to-end data pipeline leads to faster triage and reduced data downtime. The full cost of data downtime includes:

- Number of hours to fix the data bug

- Time to rerun the data pipeline and refresh the data

- Compute cost of data infrastructure to refresh the data

- Lost opportunities due to stalled business decisions
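The cost components above can be combined into a rough estimate. The following is a minimal sketch, not a formula from this post: the `DowntimeIncident` fields, the hourly rates, and the choice to charge lost opportunity per hour of downtime are all illustrative assumptions that each team would replace with its own figures.

```python
from dataclasses import dataclass


@dataclass
class DowntimeIncident:
    """Hypothetical inputs for one data downtime incident."""
    fix_hours: float                  # hours spent fixing the data bug
    refresh_hours: float              # hours to rerun the pipeline and refresh the data
    engineer_hourly_rate: float       # assumed labor cost per hour
    compute_cost_per_hour: float      # assumed infrastructure cost per refresh hour
    opportunity_cost_per_hour: float  # assumed business cost per hour without data


def downtime_hours(incident: DowntimeIncident) -> float:
    """Data is unavailable while the bug is fixed and while the refresh runs."""
    return incident.fix_hours + incident.refresh_hours


def downtime_cost(incident: DowntimeIncident) -> float:
    """Sum labor, compute, and lost-opportunity cost for the incident."""
    labor = incident.fix_hours * incident.engineer_hourly_rate
    compute = incident.refresh_hours * incident.compute_cost_per_hour
    lost = downtime_hours(incident) * incident.opportunity_cost_per_hour
    return labor + compute + lost
```

Even with rough inputs, an estimate like this makes it easier to compare the cost of an incident with the cost of the monitoring that would have caught it earlier.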

Why enterprises need data pipeline observability

Visibility into the end-to-end data pipeline is essential for modern data programs. It provides insight into the state of the data and an opportunity to intervene proactively, preserving the integrity of the data pipeline before an issue impacts the business and results in data downtime.

Data downtime refers to the period after running the data pipeline when the resulting dataset is incomplete, inaccurate, or not available on time, thus missing the Service Level Agreement (SLA).
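The definition above names three ways a pipeline run can miss its SLA: the dataset is late, incomplete, or inaccurate. A minimal sketch of that check follows. The function name `sla_breaches`, its parameters, and the use of a minimum row count as a stand-in for completeness are assumptions made for illustration, not part of any New Relic API.

```python
from datetime import datetime
from typing import List, Optional


def sla_breaches(
    delivered_at: Optional[datetime],
    deadline: datetime,
    row_count: int,
    min_expected_rows: int,
    accuracy_checks_passed: bool,
) -> List[str]:
    """Return the reasons a pipeline run counts as data downtime.

    An empty list means the run met its SLA.
    """
    reasons = []
    # Not available on time: never delivered, or delivered after the deadline.
    if delivered_at is None or delivered_at > deadline:
        reasons.append("late")
    # Incomplete: fewer rows than the agreed minimum (a simple completeness proxy).
    if row_count < min_expected_rows:
        reasons.append("incomplete")
    # Inaccurate: upstream accuracy checks reported a failure.
    if not accuracy_checks_passed:
        reasons.append("inaccurate")
    return reasons
```

Returning the list of reasons rather than a single boolean keeps the alert specific, which helps with triage.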

Insights gained from data pipeline observability can help identify which parts of the pipeline are causing delays. This enables teams to fine-tune and redesign the query or Directed Acyclic Graph (DAG), leading to faster time-to-insights for the business.
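One simple way to find the stage causing delays is to total the run time per stage and rank the results. The sketch below assumes each run is recorded as a `(stage, start, end)` tuple. That record shape and the function names are hypothetical; in practice the timings would come from the orchestrator or from observability telemetry.

```python
from datetime import datetime
from typing import Dict, List, Tuple

# Assumed record shape: (stage name, start time, end time).
StageRun = Tuple[str, datetime, datetime]


def stage_minutes(runs: List[StageRun]) -> Dict[str, float]:
    """Total elapsed minutes per stage across all recorded runs."""
    totals: Dict[str, float] = {}
    for stage, start, end in runs:
        minutes = (end - start).total_seconds() / 60
        totals[stage] = totals.get(stage, 0.0) + minutes
    return totals


def slowest_stages(runs: List[StageRun], top_n: int = 1) -> List[Tuple[str, float]]:
    """Return the top_n stages by total elapsed minutes, slowest first."""
    totals = stage_minutes(runs)
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)[:top_n]
```

The stages that rank highest are the first candidates for query tuning or DAG redesign.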

Actionable insights downtime

In the data analytics world, ETL (Extract, Transform, Load) jobs, much like software processes, run daily to produce executive dashboards and reports. These are used by all lines of business for decision-making. Inaccurate data can significantly hinder the ability to make correct decisions, or prevent decisions from being made at all.

Data pipeline observability and shift left

Once data is loaded into the data lake or EDW, it goes through several stages before the final actionable insights are available. Detecting issues in the earlier stages saves time, compute cost, and the effort required to address them. With “shift left,” many data quality (DQ) checks can be moved to the raw data zone, the extract and load (EL) part of the data pipeline. These DQ checks can include variance in the number of daily rows, null values in a column, or an accepted range for a particular column (for example, checking that a price falls within a specified range).
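The three checks mentioned above can be sketched in plain Python against rows represented as dictionaries. This is a minimal illustration under stated assumptions: the function names, the 20% default variance threshold, and the trailing-average baseline are hypothetical choices, and a production pipeline would more likely run equivalent checks in SQL or a data quality framework.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def row_count_within_variance(
    today_count: int, trailing_counts: List[int], max_pct_change: float = 0.2
) -> bool:
    """Check today's row count against the average of recent daily counts.

    trailing_counts must be non-empty.
    """
    baseline = sum(trailing_counts) / len(trailing_counts)
    if baseline == 0:
        return today_count == 0
    return abs(today_count - baseline) / baseline <= max_pct_change


def null_fraction(rows: List[Row], column: str) -> float:
    """Fraction of rows where the column is missing or None."""
    if not rows:
        return 0.0
    nulls = sum(1 for row in rows if row.get(column) is None)
    return nulls / len(rows)


def out_of_range(rows: List[Row], column: str, low: float, high: float) -> List[Row]:
    """Rows whose non-null value falls outside the inclusive range [low, high]."""
    return [
        row
        for row in rows
        if row.get(column) is not None and not (low <= row[column] <= high)
    ]
```

Running checks like these right after extract and load means a bad batch can be stopped before any transformation or modeling compute is spent on it.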

Key takeaways

Trustworthy data is fundamental to actionable insights. It is important to think about the data landscape holistically and end to end: how data is ingested, stored, transformed and consumed. Key activities include establishing a service level objective (SLO) for each aspect of the data landscape, creating the right set of data contracts with upstream data providers, and publishing SLOs for data consumers based on those contracts. It is also vital to implement a comprehensive observability framework that monitors each step in the pipeline and covers all dimensions of data quality (DQ), such as freshness, volume, lineage, accuracy, and schema. Stay tuned to learn how New Relic can assist you on this journey.

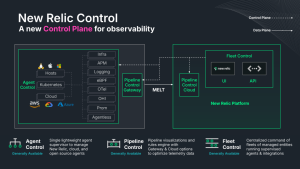

Start your observability journey with New Relic. Go to New Relic Instant Observability and choose from over 750 integrations to monitor any part of your data and engineering stack.
