I’ve seen remarkable progress in AI capabilities and, more recently, the arrival on the market of practical tools powered by generative AI. But in addition to witnessing this astounding innovation, as director of Product and Privacy Counsel at New Relic, I also recognize that legislative bodies are taking action to protect the rights, privacy, and security of their constituents. New laws and regulations place compliance obligations on companies that develop, provide, or deploy AI systems, especially ones that are considered high risk. While this blog post is not legal advice for your specific situation, this is an interesting area where technologies that I’m familiar with can help companies meet applicable compliance needs.

Current state of AI laws

United States

In the United States, individual states are taking proactive steps to regulate AI development and deployment (due to a lack, at present, of similar regulation on the federal level), and these state laws can vary widely. Colorado’s Artificial Intelligence Act regulates high-risk AI systems and focuses on developers and deployers, requiring them to review, document, mitigate, and disclose certain harms and potentials for algorithmic discrimination against Colorado residents, and to notify the Colorado attorney general of them. In contrast, the Utah Artificial Intelligence Policy Act is much more limited in scope, obligating generative AI products to disclose, when asked by an individual, whether that individual is interacting with a human.

Meanwhile in Washington, President Biden signed an executive order on October 30, 2023 to guide AI usage within government agencies and to impose direct obligations on private companies, requiring them to disclose certain information about especially powerful AI models and computing clusters. Agencies, including the National Institute of Standards and Technology (NIST), were tasked with producing AI standards and guidelines, such as companion resources to the NIST AI Risk Management Framework and the NIST Secure Software Development Framework, and guidance and benchmarks for evaluating AI capabilities, with a focus on the capability to cause harm1.

European Union

The European Union’s much-anticipated Artificial Intelligence Act was published on 12 July 2024 and is due to come into force on 1 August 2024. This regulation has a much broader application than any of the US laws as it specifically:

- applies to developers, deployers, importers, distributors, product manufacturers, and authorized representatives;

- outright prohibits certain categories of AI systems;

- places numerous obligations on “high risk AI systems”; and

- places obligations on providers of “general-purpose AI models.”

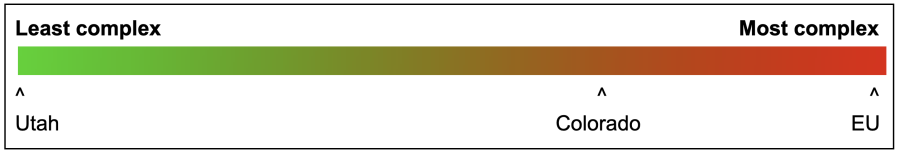

One way to visualize some of the AI laws by jurisdiction and where they fall on a spectrum of complexity. This reflects the author’s own subjective opinion.

As more jurisdictions recognize the need for AI governance, it is anticipated that the proliferation of regulations like these will continue, shaping the future of responsible AI development and deployment.

EU AI Act - Article 12 Record-Keeping Obligations

Are you a provider or a deployer of a ‘High Risk AI System’?

If yes, New Relic may be able to assist with your Article 12 obligations under the EU AI Act.

One provision that may be relevant for New Relic customers who operate a ‘High Risk AI System’ and who are looking to add a holistic observability solution for their entire tech stack and tech estate is the logging requirement for high-risk AI systems2 under Article 12 of the EU AI Act.

Article 12 mandates that there be logging capabilities in place for High Risk systems to enable providers and deployers to monitor their high-risk AI systems.

Article 12, at a high level, currently specifies three main requirements: (1) enabling automatic recording of “events (logs)” over the lifetime of the system, (2) logging capabilities that record events which may assist with compliance with other obligations under the EU AI Act, and (3) specific minimum “logging capabilities.”

The first requirement states that the high-risk AI system must “technically allow for the automatic recording of events (logs) over the lifetime of the system” (emphasis added).

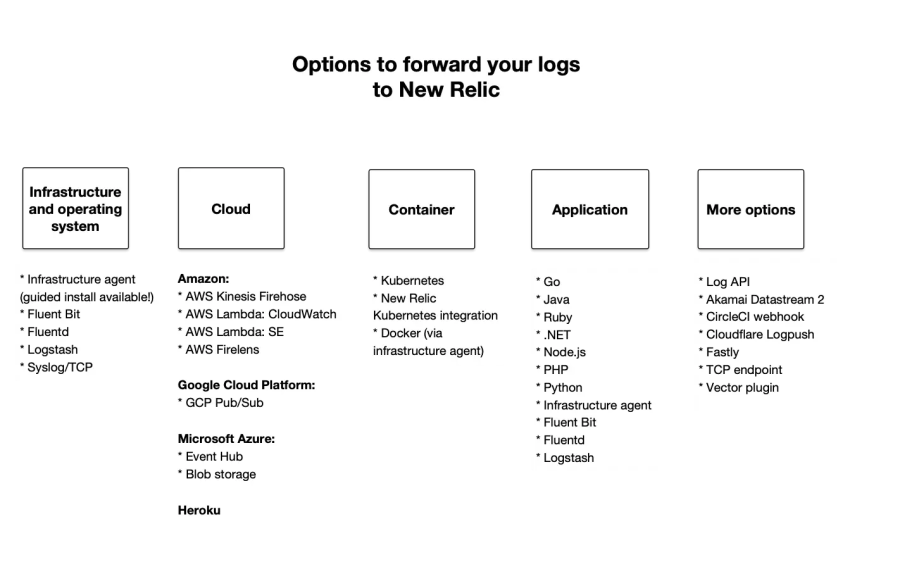

The tech industry already employs a number of solutions for generating and forwarding logs, many of which New Relic currently supports, including Fluent Bit, Fluentd, Logstash, and logs from cloud service providers (CSPs) like Amazon and Microsoft. If you don’t have one of these solutions already, our application performance monitoring (APM) agents can automatically report logs out of applications, and our infrastructure agent can capture and report logs from your hosts. New Relic supports a growing number of log-forwarding options.

For example, if a third party provides an image or container that’s part of your high-risk AI system and the developer did not provide APIs or logging functionality out of the box for that image, collecting logs from the container or from Kubernetes is an option available to you, in addition to logging out of the other portions of your microservices architecture.
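To make the idea of automatic recording concrete, here is a minimal sketch, assuming a Python service whose stdout is collected by a log forwarder or by the container runtime. It uses only the standard library; the `JsonFormatter` class, the `build_logger` helper, and the `event` field are hypothetical names chosen for illustration, not part of any New Relic agent.

```python
import json
import logging
import sys
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "timestamp": datetime.fromtimestamp(
                record.created, tz=timezone.utc
            ).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Hypothetical structured details passed via extra={"event": {...}}.
        event = getattr(record, "event", None)
        if event is not None:
            payload["event"] = event
        return json.dumps(payload, sort_keys=True)


def build_logger(name: str, stream=None) -> logging.Logger:
    """Return a logger that writes JSON lines to `stream` (stdout by default)."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()
    handler = logging.StreamHandler(stream if stream is not None else sys.stdout)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.propagate = False  # avoid duplicate lines from the root logger
    return logger


if __name__ == "__main__":
    log = build_logger("ai_system.inference")
    log.info("model invoked", extra={"event": {"model": "example-model"}})
```

Writing one JSON object per line to stdout keeps the application unaware of where logs end up, which is what lets a forwarder or a container log collector pick them up without code changes.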

For companies that are embracing vendor-neutral, open standards via OpenTelemetry, New Relic offers a growing number of integrations with OpenTelemetry solutions.

The second set of requirements states that the purpose is to “...ensure a level of traceability of the functioning of a high-risk AI system that is appropriate to the intended purpose of the system,” and is followed with the requirement, “logging capabilities shall enable the recording of events relevant for…” (emphasis added) other portions of the EU AI Act. These portions include:

- Article 79(1), concerning the risk of a product having the potential to adversely affect health, safety, and numerous other interests to a degree beyond what’s reasonable and acceptable3;

- Article 72, concerning post-market monitoring by providers and post-market monitoring plan for high-risk AI systems;

- Article 26(5), requiring deployers to “monitor the operation of the high-risk AI system” and including obligations to report to the provider, distributor, and market surveillance authority when deployers have reason to believe that use of the high-risk AI system presents a risk.

The third set of requirements under Article 12 states that “the logging capabilities shall provide, at a minimum:” the period of each use, the reference databases utilized, the input data, and the identification of the natural persons verifying the results, as set forth in subsection 5 of Article 14 (Human Oversight).
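As an illustration only, the four minimum items above could be captured in one structured record per use of the system. The sketch below assumes a Python service; the `UsageRecord` class and every field name in it are hypothetical, since the Act does not prescribe a schema, and whether a given field satisfies the requirement is a question for your legal team.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass
class UsageRecord:
    """One use of the system, holding the four minimum items listed above.

    All field names are hypothetical and chosen for illustration.
    """

    use_started_at: datetime   # period of use: start
    use_ended_at: datetime     # period of use: end
    reference_database: str    # reference database utilized
    input_data_ref: str        # the input data, or a pointer to it
    verified_by: List[str] = field(default_factory=list)  # reviewers

    def __post_init__(self) -> None:
        if self.use_ended_at < self.use_started_at:
            raise ValueError("use_ended_at must not precede use_started_at")

    def to_json(self) -> str:
        """Serialize to a JSON line suitable for a log pipeline."""
        data = asdict(self)
        data["use_started_at"] = self.use_started_at.isoformat()
        data["use_ended_at"] = self.use_ended_at.isoformat()
        return json.dumps(data, sort_keys=True)


if __name__ == "__main__":
    record = UsageRecord(
        use_started_at=datetime(2024, 8, 1, 9, 0, tzinfo=timezone.utc),
        use_ended_at=datetime(2024, 8, 1, 9, 5, tzinfo=timezone.utc),
        reference_database="example-reference-db",
        input_data_ref="example-input-001",
        verified_by=["reviewer-a"],
    )
    print(record.to_json())
```

Emitting a record like this as a log line means the same pipeline that carries operational logs can also carry the compliance-relevant events.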

Comprehensive observability solutions for monitoring and logging needs

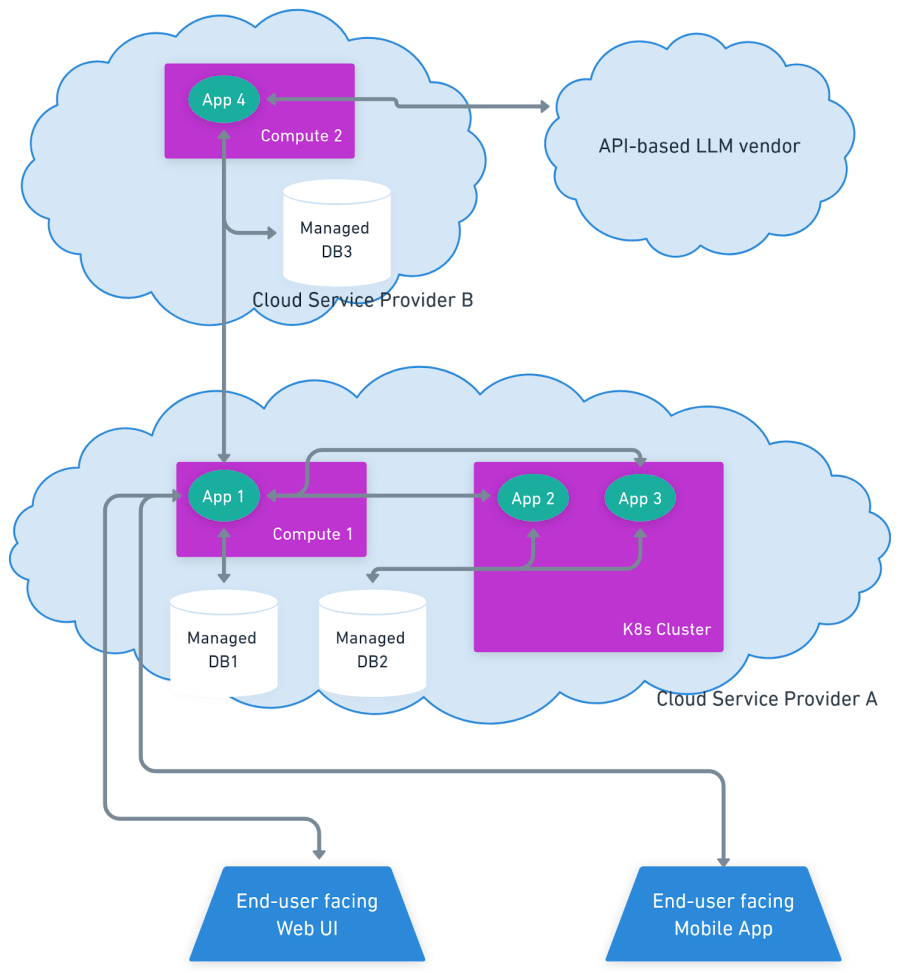

Here’s a hypothetical microservice architecture for your AI system, in which you have two cloud environments and use third-party large language model (LLM) APIs for services running solely in the second cloud environment. Your setup may be more complex if you leverage a set of existing applications and infrastructure that’s already been built out, or if you break out your services into smaller ones managed by various product and engineering experts across your organization.

The more complex your team organization, the more microservices and cloud environments you run, and the more distributed your tech stack, the more critical it is to maintain oversight of all the components of your AI system for compliance purposes. Here is where New Relic can help.

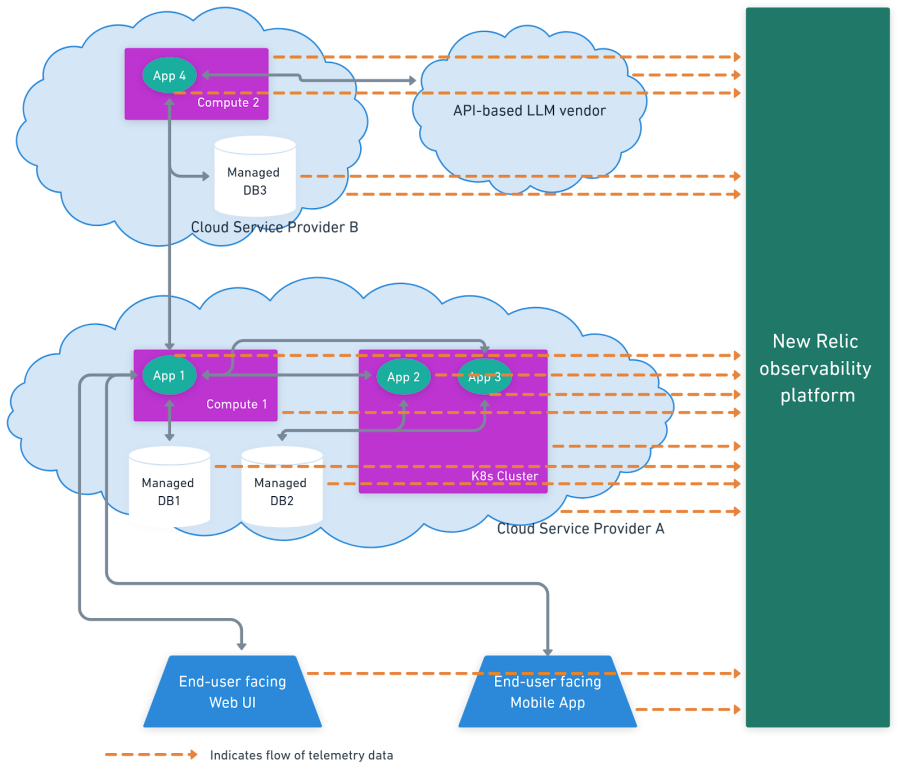

Fortunately, New Relic APIs allow you to send all logs, events, and other telemetry data from each component shown to centralize and maintain end-to-end visibility over your tech estate:

- Web UI events and other telemetry data can be sent to New Relic browser monitoring APIs.

- Mobile app events and other telemetry data can be sent to New Relic mobile monitoring APIs.

- Logs, events, and other telemetry data from major cloud service providers can be sent to New Relic infrastructure monitoring APIs.

- Application logs, events, and other telemetry data can be sent to New Relic APM APIs.
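For services where no agent or forwarder is available, logs can also be posted over HTTP. The sketch below builds, but does not send, such a request using Python’s standard library. The endpoint URL, the `Api-Key` header, and the payload shape reflect my understanding of the New Relic Log API and should be treated as assumptions to verify against the current documentation for your account and region; `build_log_request` is a hypothetical helper.

```python
import json
import time
import urllib.request
from typing import List, Optional

# Assumption: US-region Log API endpoint. Verify for your account and region.
LOG_API_URL = "https://log-api.newrelic.com/log/v1"


def build_log_request(
    license_key: str,
    service: str,
    messages: List[str],
    timestamp_ms: Optional[int] = None,
) -> urllib.request.Request:
    """Build a POST request carrying a batch of log lines for one service."""
    if timestamp_ms is None:
        timestamp_ms = int(time.time() * 1000)
    # Assumed payload shape: shared attributes plus a list of log entries.
    payload = [
        {
            "common": {"attributes": {"service": service}},
            "logs": [
                {"timestamp": timestamp_ms, "message": m} for m in messages
            ],
        }
    ]
    return urllib.request.Request(
        LOG_API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Api-Key": license_key, "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_log_request("YOUR_LICENSE_KEY", "web-ui", ["user consent shown"])
    print(req.get_method(), req.full_url)
    # To actually send: urllib.request.urlopen(req, timeout=10)
```

Keeping request construction separate from sending makes the payload easy to inspect and test before any data leaves your environment.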

A number of key capabilities within New Relic make it easy for companies to get started with complying with the logging capability requirements:

- Where some of the AI capabilities are performed at the application level, New Relic AI monitoring supports a number of programming languages and includes monitoring of the prompts and responses.

- Where some of the AI capabilities are performed on third-party platforms, several machine-learning operations (MLOps) quickstarts like Azure OpenAI and PyTorch make it easy to monitor third-party LLMs, watch MLOps platforms for model drift, and centralize monitoring of those third-party systems by sending applicable telemetry data to New Relic. Additionally, we have quickstarts for LangChain app, Pinecone (LangChain), LangChain vector stores, and several Nvidia resources.

- Where traceability is critical, distributed tracing and service maps help you track a transaction throughout your tech estate and monitor each piece of your AI systems, so that no piece ends up out of sight, out of monitoring, and out of compliance. For example, New Relic surfaces upstream and downstream dependencies of services used by your teams in the AI system. To add monitoring to downstream database dependencies, use a New Relic database quickstart to easily get telemetry data in from those third-party databases.

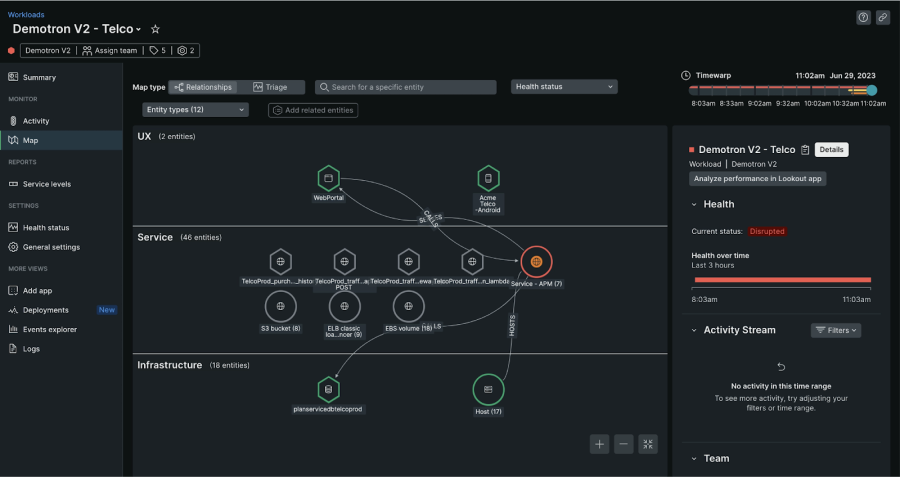

- Where keeping track of the applicable components of the AI system is critical, New Relic workloads help keep those applicable components within a central view. Workloads maps provide a great visualization for the individual (or individuals) tasked with monitoring the AI system.

- Where an out-of-the-box chart or visualization may not fit your particular compliance needs, New Relic provides tools to create custom charts and dashboards that give you a single-pane view into your AI systems.

- Where companies have identified the scenarios and environment conditions that need to trigger notifications to developers, operators, and other reviewers to investigate, New Relic alerts help ensure that whenever those conditions are met, notifications are sent to the applicable teams.

- Where companies need to keep logs for the lifetime of their system under Article 12,4 which could be years or decades, the mounting storage and discovery costs can be effectively addressed with New Relic Live Archives. This cost-effective solution makes it easy for you to send all essential logs to New Relic’s existing log APIs, retain them for up to seven years at a fraction of typical storage spend, and use many of the features and functionalities available with New Relic logs. You can also use the New Relic historical data export to retrieve copies of your logs after the seven years for your longer-term storage needs.
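To illustrate the traceability point in the list above, here is a minimal sketch of how a shared trace ID can tie together log lines written by different parts of a request. This is not how New Relic distributed tracing is implemented; agents and OpenTelemetry SDKs handle propagation for you. The names `start_trace` and `TraceIdFilter` are hypothetical, and the code uses only the Python standard library.

```python
import contextvars
import logging
import uuid
from typing import Optional

# Holds the trace ID for the request currently being handled.
_trace_id: contextvars.ContextVar[str] = contextvars.ContextVar(
    "trace_id", default=""
)


def start_trace(incoming_trace_id: Optional[str] = None) -> str:
    """Reuse the caller's trace ID if one was passed, else create a new one."""
    trace_id = incoming_trace_id or uuid.uuid4().hex
    _trace_id.set(trace_id)
    return trace_id


class TraceIdFilter(logging.Filter):
    """Stamp every log record with the current trace ID."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.trace_id = _trace_id.get()
        return True  # never drop the record, only annotate it


if __name__ == "__main__":
    handler = logging.StreamHandler()
    handler.setFormatter(logging.Formatter("%(trace_id)s %(name)s %(message)s"))
    logger = logging.getLogger("ai_system.scoring")
    logger.setLevel(logging.INFO)
    logger.addFilter(TraceIdFilter())
    logger.addHandler(handler)

    start_trace()  # at the edge of the system, no upstream ID exists yet
    logger.info("received input")
    logger.info("called third-party LLM API")
```

When each downstream service calls `start_trace` with the ID it received from its caller, every log line for one transaction carries the same identifier and can be queried together later.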

AI laws and compliance requirements

New Relic’s AI teams are acutely aware of the growing number of AI laws and compliance challenges. New Relic AI helps make observability more accessible to users outside of the traditional DevSecOps roles, which lowers the barrier for compliance teams to use the same tools that DevSecOps team members use to monitor their tech stack, for the purpose of complying with requirements like the Article 12 record-keeping obligations of the EU AI Act.

Innovations in the AI space continue to grow, with cutting-edge models like GPT-4o and GPT-4 Turbo, which can explain what’s happening in an image. As leading companies like OpenAI, Anthropic, Google, and Meta continue to develop new AI innovations, the use cases for them in areas like healthcare, manufacturing, security, marketing, transportation, retail, and finance also grow.

Companies that are subject to the growing number of AI laws around the world need to look at an array of tools to meet their compliance needs; the New Relic observability platform is a great addition to a company’s compliance toolbelt.

1 Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence | NIST

2 The record-keeping and logging requirements in Article 12 apply to high-risk AI systems, a term that has a specific definition in the EU AI Act. Consult your favorite legal ally to determine whether your AI product falls under that definition; this is especially important as the EU AI Act is updated and corrected prior to publication. References here are to the latest corrigendum as of the first publication date. New Relic restricts use of our services for certain high-risk activities. You should refer to New Relic's terms of service when considering how you may use our services.

3 “product presenting a risk” means a product having the potential to affect adversely health and safety of persons in general, health and safety in the workplace, protection of consumers, the environment, public security and other public interests, protected by the applicable EU harmonization legislation, to a degree which goes beyond that considered reasonable and acceptable in relation to its intended purpose or under the normal or reasonably foreseeable conditions of use of the product concerned, including the duration of use and, where applicable, its putting into service, installation and maintenance requirements; see https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:32019R1020

4 Latest corrigendum as of the publication date.

Next steps

If you are a provider or a deployer of a High Risk AI system and have record keeping obligations under Article 12 of the EU AI Act, you can:

Sign up with New Relic for a free trial

Read about Live Archives for compliance

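The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. For questions and support related to this blog post, please engage only on the Explorers Hub (discuss.newrelic.com). This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve, or endorse the information, views, or products available on such sites.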