After completing a 10-year effort to migrate systems from mainframe to a modern Java stack in 2015, Matson, Inc.—a 138-year-old leading U.S. shipping carrier in the Pacific and my current employer—spent the next year closing four internal data centers and moving operations to AWS. It was a tour de force year, to say the least.

Going “all-in” on the AWS cloud completed the first leg of Matson’s cloud-native voyage. With the data-center “lift-and-shift” now behind us, a focus on innovation and digital transformation could begin.

Here’s the story of our voyage to serverless.

First sight of Lambda

Like many, I was intrigued by AWS Lambda functions but did not do much with them right away. Meanwhile, Matson’s Innovation & Architecture team, of which I am part, was starting to make heavy use of Amazon EC2 in our lab account for experimentation and running proofs of concept. This, of course, came with a nicely sized monthly bill.

Automating the schedule of our Amazon EC2 instances, so that they were available only during business hours, was a low-risk opportunity to start experimenting with AWS Lambda functions. It turned out to be a valuable, zero-cost solution.
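A minimal sketch of what such a scheduler could look like, assuming a Lambda function invoked on a timer. The business hours, time zone, and tag name below are hypothetical placeholders, since the post does not specify them. The EC2 client is passed in so the decision logic can be exercised without AWS access.

```python
from datetime import datetime, time
from zoneinfo import ZoneInfo

# Hypothetical values: the schedule, time zone, and tag are assumptions.
BUSINESS_START = time(7, 0)
BUSINESS_END = time(19, 0)
TIME_ZONE = "Pacific/Honolulu"
SCHEDULE_TAG = "Schedule"
SCHEDULE_VALUE = "business-hours"


def in_business_hours(now):
    """Return True on weekdays between BUSINESS_START and BUSINESS_END."""
    return now.weekday() < 5 and BUSINESS_START <= now.time() < BUSINESS_END


def tagged_instance_ids(ec2, state):
    """List IDs of tagged instances in the given state (first page only)."""
    response = ec2.describe_instances(
        Filters=[
            {"Name": f"tag:{SCHEDULE_TAG}", "Values": [SCHEDULE_VALUE]},
            {"Name": "instance-state-name", "Values": [state]},
        ]
    )
    return [
        instance["InstanceId"]
        for reservation in response["Reservations"]
        for instance in reservation["Instances"]
    ]


def apply_schedule(ec2, now):
    """Start stopped instances in business hours; stop running ones otherwise."""
    if in_business_hours(now):
        ids = tagged_instance_ids(ec2, "stopped")
        if ids:
            ec2.start_instances(InstanceIds=ids)
        return {"action": "start", "instances": ids}
    ids = tagged_instance_ids(ec2, "running")
    if ids:
        ec2.stop_instances(InstanceIds=ids)
    return {"action": "stop", "instances": ids}


def handler(event, context):
    """Lambda entry point, intended to run on a schedule."""
    import boto3  # imported lazily so the logic above is testable offline

    now = datetime.now(ZoneInfo(TIME_ZONE))
    return apply_schedule(boto3.client("ec2"), now)
```

Selecting instances by tag keeps the function generic: opting a server in or out of the schedule is a tagging change rather than a code change.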

Additionally, we put Amazon API Gateway endpoints in front of these functions to give our offshore contract team a simple, secure way to stop and start servers without creating additional AWS user accounts.

This experimentation yielded two positive results:

- Monthly savings on our Amazon EC2 spend (the desired result)

- A realization of the benefits of a serverless approach, which made us very excited about the possibilities of serverless computing for the wider Matson enterprise (bonus)

Setting a course for serverless

One of the Innovation & Architecture team’s responsibilities is leading mobile product development. We were presented with an opportunity to build a flagship mobile application for global container tracking, along with a few additional value-adds such as interactive vessel schedules, location-based port maps, and live gate camera feeds.

Given the known spiky traffic patterns of our web-based online tracking system, a serverless mobile backend was a perfect fit: highly available, with a likely low total cost of ownership.

Architecture overview

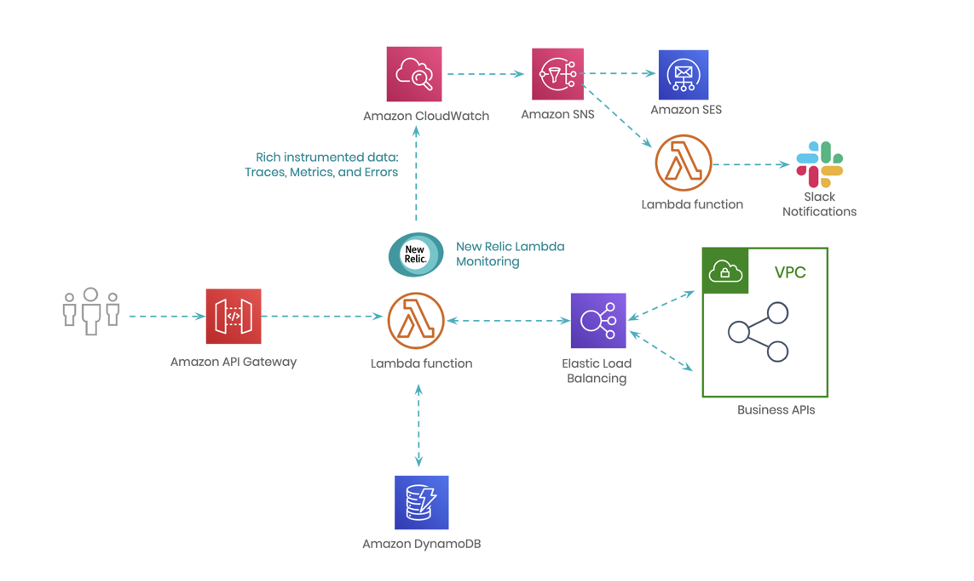

All mobile device access into the system is via Amazon API Gateway. Using Amazon API Gateway ensures that we have a highly-available and scalable set of endpoints for mobile access across the U.S. We also do a fair amount of caching in this service.

Much of the data needed for the app is available in a set of existing business APIs within our Amazon VPC. Some of these APIs are not entirely mobile-friendly. AWS Lambda functions provide a great place to perform any data transformations needed to optimize mobile payloads.
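To illustrate the kind of transformation meant here, the sketch below trims a verbose internal record down to a compact mobile payload. The field names, the sample container number, and the `fetch_container` stand-in are all hypothetical; the post does not describe the internal API schema.

```python
import json


def fetch_container(container_number):
    """Stand-in for a call to an internal business API (canned data)."""
    return {
        "containerNumber": container_number,
        "vessel": {"name": "EXAMPLE VESSEL", "voyage": "001W"},
        "events": [
            {"timestamp": "2024-01-05T08:00:00Z", "description": "Loaded on vessel"},
            {"timestamp": "2024-01-09T14:30:00Z", "description": "Discharged from vessel"},
        ],
        "auditTrail": ["fields the mobile app never displays"],
    }


def to_mobile_payload(container):
    """Keep only what a tracking screen needs, using short, flat keys."""
    # ISO 8601 timestamps in one format sort correctly as plain strings.
    last_event = max(
        container.get("events", []),
        key=lambda e: e["timestamp"],
        default=None,
    )
    return {
        "id": container["containerNumber"],
        "status": last_event["description"] if last_event else "UNKNOWN",
        "updated": last_event["timestamp"] if last_event else None,
        "vessel": container.get("vessel", {}).get("name"),
    }


def handler(event, context):
    """Handler shaped for an API Gateway Lambda proxy integration."""
    container_number = event["pathParameters"]["containerNumber"]
    payload = to_mobile_payload(fetch_container(container_number))
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }
```

Doing the reshaping in the function leaves the existing business APIs untouched while keeping the response small for devices on slow connections.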

The AWS Lambda functions are architected as microservices, with each service having a bounded context for a given business function, e.g., tracking service, vessel service, etc.

Amazon DynamoDB is used primarily to externalize environment configuration for both the mobile devices and the internal system.

High-level architecture

Importance of observability

Our customers expect accurate, up-to-the-minute container tracking and vessel status updates, so it is imperative that we have proper observability and alerting mechanisms in place for our running systems. To achieve this, we use a combination of Amazon CloudWatch Logs with custom Amazon CloudWatch Metric Filters, Amazon SNS, Amazon SES, AWS Lambda functions, Slack, and New Relic.
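One link in that chain, a Lambda function that relays a CloudWatch alarm from Amazon SNS to Slack, might look roughly like the sketch below. It assumes the alarm notifies an SNS topic that the function subscribes to, and that Slack is reached through an incoming webhook whose URL is stored in a hypothetical `SLACK_WEBHOOK_URL` environment variable. The message wording is illustrative only.

```python
import json
import os
import urllib.request


def slack_message_from_sns(event):
    """Build a Slack payload from an SNS-delivered CloudWatch alarm."""
    alarm = json.loads(event["Records"][0]["Sns"]["Message"])
    state = alarm["NewStateValue"]
    icon = ":red_circle:" if state == "ALARM" else ":white_check_mark:"
    return {
        "text": f"{icon} {alarm['AlarmName']} is {state}: {alarm['NewStateReason']}"
    }


def post_to_slack(webhook_url, message):
    """POST a JSON payload to a Slack incoming webhook; return the HTTP status."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(message).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return response.status


def handler(event, context):
    """Lambda entry point subscribed to the alarm's SNS topic."""
    # SLACK_WEBHOOK_URL is an assumed configuration name.
    return post_to_slack(
        os.environ["SLACK_WEBHOOK_URL"], slack_message_from_sns(event)
    )
```

The alarm itself would sit on a metric produced by a CloudWatch Metric Filter, for example one that counts error lines in a function's log group.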

New Relic gives us a serverless monitoring and tracing tool that provides instant observability and alerting into the health of our running AWS Lambda functions. With its clean, easy-to-use, intuitive user interface, New Relic has proven itself an invaluable tool for our entire mobile product team.

Resource orchestration

Having previous experience (pain) with integrating AWS Lambda & Amazon API Gateway in the AWS web console, we knew that attempting to manage a larger app by solely using the web console would not be something to entertain.

When we saw emerging tooling like the Serverless Framework, we became fans from the initial prototype onward. Using it felt like a very natural way to express the resource provisioning and orchestration, as well as the deployment of the entire application. It’s AWS CloudFormation all the way down.

Automation is everything

Without a fully automated deployment pipeline, the application would only be half done, so deployment automation was not optional.

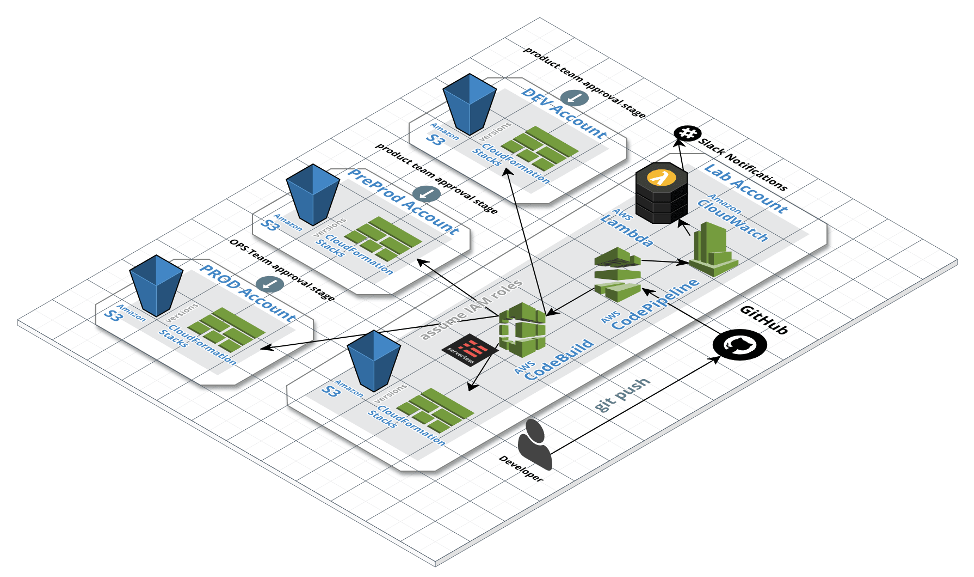

Internally we have both Bamboo and Jenkins running on Amazon EC2 instances for continuous integration and various operations automation tasks, but for this application we wanted to stay as close to 100% serverless as possible. We found AWS CodePipeline and AWS CodeBuild to be great for this, not only for functionality but also because both services follow the pay-per-execution-only model; hence, serverlessness.

The main challenge we faced setting up the deployment pipeline was running it from a single AWS account and deploying the application across multiple AWS accounts (Lab, DEV, Pre-Prod, Production). But once we sorted out how to use AWS cross-account roles in AWS CodeBuild, it was smooth sailing.
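A sketch of the cross-account step, assuming each target account exposes an IAM role that the build's own role is trusted to assume. The account IDs, role name, and session name are hypothetical placeholders. The STS client is injected so the mapping logic can be checked without AWS credentials.

```python
# Hypothetical role ARNs: account IDs and role name are placeholders.
DEPLOY_ROLES = {
    "lab": "arn:aws:iam::111111111111:role/pipeline-deployer",
    "dev": "arn:aws:iam::222222222222:role/pipeline-deployer",
    "pre-prod": "arn:aws:iam::333333333333:role/pipeline-deployer",
    "production": "arn:aws:iam::444444444444:role/pipeline-deployer",
}


def assume_deploy_role(sts, stage, session_name="codebuild-deploy"):
    """Assume the deploy role for a stage; return credentials as env vars."""
    response = sts.assume_role(
        RoleArn=DEPLOY_ROLES[stage],
        RoleSessionName=session_name,
    )
    credentials = response["Credentials"]
    return {
        "AWS_ACCESS_KEY_ID": credentials["AccessKeyId"],
        "AWS_SECRET_ACCESS_KEY": credentials["SecretAccessKey"],
        "AWS_SESSION_TOKEN": credentials["SessionToken"],
    }


def main(stage):
    """Print export lines that a build step could evaluate before deploying."""
    import boto3  # imported lazily so assume_deploy_role is testable offline

    for name, value in assume_deploy_role(boto3.client("sts"), stage).items():
        print(f"export {name}={value}")
```

The temporary credentials expire on their own, so the pipeline account never needs to hold long-lived keys for the other accounts.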

Deployment pipeline

Pipeline process

All commits to the GitHub master branch trigger an automated build, test, and deploy to the Lab account. AWS CodePipeline approval stages are used to add a level of control for the product and OPS teams. The product team controls the deployments up to production. When the pipeline lands on the production approval stage, a Slack message goes to the OPS team providing a one-click production deployment process.
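The Slack notification for the production approval stage could be produced along these lines. This sketch assumes the manual approval action publishes to an Amazon SNS topic and that the notification JSON carries an `approval` object with `pipelineName`, `stageName`, and `approvalReviewLink` fields. The message wording is made up, and the step that posts the payload to Slack is omitted.

```python
import json


def approval_slack_message(event):
    """Turn an SNS-delivered approval notification into a Slack payload."""
    notification = json.loads(event["Records"][0]["Sns"]["Message"])
    approval = notification["approval"]
    # Slack renders <url|label> as a clickable link.
    link = f"<{approval['approvalReviewLink']}|Review and approve>"
    return {
        "text": (
            f"Pipeline {approval['pipelineName']} is waiting at stage "
            f"{approval['stageName']}. {link}"
        )
    }
```

Putting the review link directly in the message is what makes the approval effectively one click for whoever receives it.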

Infrastructure as code

Every part of the application has been codified with AWS CloudFormation and version-controlled. We have really grown to love the practice of building, maintaining, and deploying serverless applications using the “infrastructure as code” model, which gives us a versioned, reproducible process for creating the environment. This practice also greatly simplifies our disaster recovery process.

In addition, paraphrasing Paul Johnston: Without infrastructure as code, you really do not understand your system as well as you might think. I would have to agree.

Future destinations

The mobile product team is currently working on the next set of serverless products to support both our mobile platform and upcoming internet of things (IoT) initiatives.

Beyond the mobile space, we are looking at other areas of the enterprise that can benefit from a serverless approach. Internally, Matson’s Innovation & Architecture group is discussing what a “serverless-first” approach to building applications—with fallback to a container platform—might look like.

Final thoughts

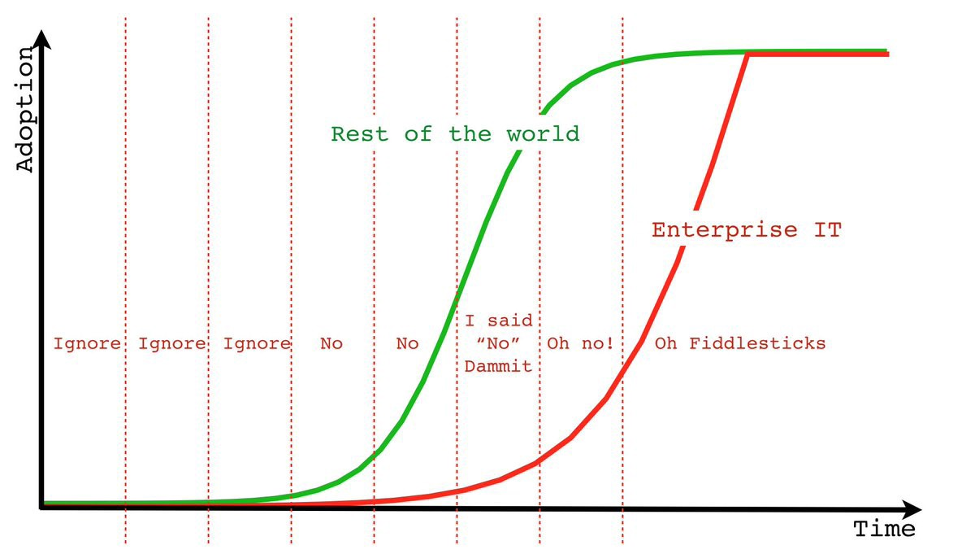

Serverless is just hitting the mainstream in terms of enterprise adoption. I tend to agree with folks like Simon Wardley, who say that it is going to land in a big way over the next few years.

Based on Simon’s well-known “Enterprise IT Adoption Cycle” graph, I’d like to think we are now in a good position to skip the “Oh, Fiddlesticks!” moment.

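The views expressed on this blog are those of the author and do not necessarily reflect the views of New Relic. Any solutions offered by the author are environment-specific and not part of the commercial solutions or support offered by New Relic. For questions and support related to this blog post, please join us exclusively at the Explorers Hub (discuss.newrelic.com). This blog may contain links to content on third-party sites. By providing such links, New Relic does not adopt, guarantee, approve, or endorse the information, views, or products available on such sites.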