Across the industry, parsing and understanding logs can be a challenge, especially since logs often include large semi-structured text fields which have been historically difficult to handle using New Relic Query Language (NRQL). In a previous post, I outlined how to use the regex capture in New Relic to extract values from URLs in NRQL queries. In this blog, I’m going to show you how to query your data faster and more efficiently by using a handful of new NRQL capabilities, including NRQL variables, regex multi-capture, and more. These NRQL productivity improvements will help you get concise and faster insights into how your applications are performing.

Parsing complex logs

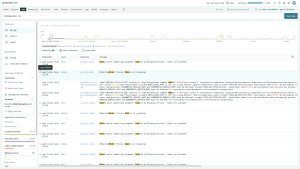

Let's start by looking at a potential challenge presented by log data in the past. Like most complex text data fields, log data often includes key information that needs to be parsed from a longer string. Here’s an example:

FROM Log SELECT message

Let’s take a closer look at several lines from this example:

ts=2022-10-04T17:23:52.685261818Z caller=middlewares.go:75 method=GetUsers id=57a98d98e4b00679b4a830b2 result=1 took=375.146 µs

ts=2022-10-04T17:23:52.685261818Z caller=middlewares.go:75 id=57a98d98e4b00679b4a830b2 method=Get result=1 took=2.146 msLet's assume the only data we're getting from this service is the Log data above, and we need to calculate the 99th percentile took duration each endpoint method is operating at. Unfortunately, took values in these log lines have values in both µs and ms. Traditionally, these logs would be challenging to extract and with standardized time units. In the past, before New Relic added the new productivity features you’ll learn about later in this post, you might need something like this monstrous query:

FROM Log

SELECT percentile((

numeric(capture(message, r'.*took=(?P<microTime>\d*\.?\d*) µs')) / 1000

OR

numeric(capture(message, r'.*took=(?P<milliTime>\d*\.?\d*) ms'))

),

99) AS 'duration 99th'

FACET capture(message, r'.*method=(?P<method>[[:alpha:]]+) id=.*')This is a complex and expensive query, but it works. You’ll get a result like this:

This query is difficult to read, needs three regexes, and uses obscure query features. To someone without context, this query could be very hard to interpret.

Fortunately, you can now use NRQL variables, regex multi-capture, and anchor parse to greatly simplify queries like this one. Let's break this query down step by step, using the new features along the way, to see how they massively simplify the problem.

Reduce complexity with NRQL variables

To begin breaking down the complex query we're using above, let's take a look at the first new feature: NRQL variables. These variables allow you to assign a value computed from a non-aggregate function or attribute (row-wise) to an identifier that can be referenced elsewhere in the query. NRQL variables use a new clause WITH, which is best used following the FROM clause for clarity. WITH uses AS for assignment (rather than programming languages that use =).

...WITH round(attribute, 10) AS roundedAttribute ...

Here is what the previous monstrous query looks like with NRQL variables:

FROM Log

WITH numeric(capture(message, r'.*took=(?P<microTime>\d*\.?\d*) µs')) AS microTime,

numeric(capture(message, r'.*took=(?P<milliTime>\d*\.?\d*) ms')) AS milliTime,

microTime / 1000 OR milliTime AS duration

SELECT percentile(duration, 99) AS 'duration 99th'

FACET capture(message, r'.*method=(?P<method>[[:alpha:]]+) id=.*')With NRQL variables, the computation is now clearly identified as the variables microTime and milliTime, making the query easier to read. The variables are then assigned to a single standardized duration variable which can then be used in the query. If you then wanted to look at logs with a duration longer than one millisecond, you’d just need to add ...WHERE duration > 1…. Imagine how unwieldy it would be to do that without variables!

Important notes:

- If

duration(or any other variables) was already an attribute on the log event, any bound variables take precedence, similar to functional overloading for our object-oriented readers. - You cannot assign an aggregator like a percentile function to a variable. You can only assign a row-wise function to a variable. As a quick rule of thumb, if you can use a function to create a non-faceted table view with attributes, it's row-wise.

Better performance and increased simplicity with regex multi-capture and If function

Let's continue to refine this further. NRQL variables made the query more readable and easier to adjust, but there are still three regexes all reading the message field, adding complexity to the query and reducing performance. Let’s simplify the query with regex multi-capture and the If function.

Regex multi-capture

Regex multi-capture allows a single regex to pull multiple values (up to 16) from a single attribute, leveraging the multi-assignment feature of NRQL variables. Multi-assignment uses the names provided in the regex capture to extract the captured values, so assignment order doesn't matter:

...WITH capture(message, r'(?P<A>.)(?P<C>.)(?P<B>.)') AS (A, B, C)...

If function

The If function provides another way to add a conditional to a New Relic query. If has the following structure:

If(<condition>, <trueValue>, [falseValue])

- The first argument of

Ifis our condition, similar to those found in aWHEREclause or functions likepercentageandfilter. - The second and third arguments are the values returned based on the result of the condition. These can either be fixed values or computed values like

round(attribute). The third argument is optional and returnsnullby default.

Please refer to the NRQL documentation for more information.

Using regex multi-capture to simplify a query

Using these features, you can simplify the query to:

FROM Log

WITH capture(message, r'.*method=(?P<method>[[:alpha:]]+)id=.*took=(?P<value>\d*\.?\d*) (?P<units>µs|ms)') AS (method, value, units),

numeric(value) / if(units = 'µs', 1000, 1) AS duration

SELECT percentile(duration, 99) AS 'duration 99th'

FACET methodNow the query uses a single regex to capture all three values and uses the If function to easily navigate the tricky detail of handling units.

Converting this query into a single regex should reduce the computational costs of running the query, which means less time staring at a load bar. In this case, this query is about 280% faster (7 seconds → 2.5 seconds).

Important note

- The

Iffunction doesn't includeWHEREbefore the filter condition, as you might see in afilterorpercentagefunction. With this release,WHEREis optional. Note that there are a few edge cases where it's still required to interpret the query correctly.

Anchor parse

There is still one last detail about the previous query that can be refined with these new features: the regex itself. You can replace RegexCapture with our new simplified (and faster) function AnchorParse (or aparse for brevity) which leverages a syntax similar to LIKE to capture values. It has the following forms:

AnchorParse(<evaluable>, <capturePattern>)

aparse(<evaluable>, <capturePattern>)

The function arguments are formatted like RegexCapture, but the pattern operates similarly to the LIKE clause with the addition of a capturing symbol:

%is an on-capturing wildcard, as you'd see in aLIKEclause.*is a capturing wildcard, similar to capturing in regex capture.

Refer to the NRQL documentation for more detailed information about AnchorParse.

Since this example doesn't need any advanced regex-matching behavior to extract these values, we can leverage AnchorParse to simplify the query further:

FROM Log

WITH aparse(message,'%method=* id=%took=* *') AS (method, value, units),

numeric(value) / if(units = 'µs', 1000, 1) AS duration

SELECT percentile(duration, 99) AS 'duration 99th'

FACET methodInstead of the complex regex capture groups, we are now simply declaring the text locations we'd like to parse out (*), with anchors ("method=", "took=") and wild cards (%) to create a pattern of how and where to match.

Converting this query from a regex into an anchor parse should further reduce the computational costs of running the query, which means even less time staring at a load bar! In this case, the query is another 150% faster (2.5 seconds → 1.6 seconds).

Important notes

- The above anchor parse leverages the space between the value and units. Without that space, regex capture is still necessary.

- Unlike regex multi-capture (which uses named capture groups to extract values),

AnchorParseuses the order of declaration, so variable assignment order matters here. - Similar to

RegexCapture, all results ofAnchorParseare still strings. Therefore, you must use thenumericfunction to cast numeric values to a number if needed, as shown in the example.

Conclusion

With these features, you can simplify some complex queries, particularly those which operate on structured text data. It does take time to reorient how you read and write queries, but it will produce vastly faster and more readable queries. The above query is much simpler, easier to read, and nearly 440% faster (7 seconds → 1.6 seconds) than what we started with.

Hopefully, this example gives you a stepping stone toward using these new features to better parse and comprehend your complex text data. This example focuses on log messages, but these features are generally applicable to all event types and data types.

다음 단계

- Take a deep dive into our documentation on NRQL.

- Watch our instructional video on these improvements.

- If you’re not already using New Relic, get started with New Relic for free. Your free account includes 100 GB/month of free data ingest, one free full-access user, and unlimited free basic users.

이 블로그에 표현된 견해는 저자의 견해이며 반드시 New Relic의 견해를 반영하는 것은 아닙니다. 저자가 제공하는 모든 솔루션은 환경에 따라 다르며 New Relic에서 제공하는 상용 솔루션이나 지원의 일부가 아닙니다. 이 블로그 게시물과 관련된 질문 및 지원이 필요한 경우 Explorers Hub(discuss.newrelic.com)에서만 참여하십시오. 이 블로그에는 타사 사이트의 콘텐츠에 대한 링크가 포함될 수 있습니다. 이러한 링크를 제공함으로써 New Relic은 해당 사이트에서 사용할 수 있는 정보, 보기 또는 제품을 채택, 보증, 승인 또는 보증하지 않습니다.